But the time has arrived for raising consciousness in cognitive science. Books by Edelman, Rosenfield, Dennett, Varela, and others have appeared almost simultaneously, with a strikingly common theme: Biological and psychological evidence suggests that better understanding of consciousness is not only possible, but necessary if we are to improve our understanding of cognition. This evidence varies considerably. It ranges over how neurological structures develop, the effects of neural dysfunctions on human behavior, perceptual illusions, the evolution of the human species, and the philosophy of language. In this comparative review, I consider the work of Edelman and Rosenfield. Taken together, these books may stimulate a broader view of intelligence, give further credence to the situated cognition view of language, and provide a more biological basis for "neural net" approaches. Prior work by Putnam, Dreyfus, and Winograd, to name a few previous critics, may also appear less threatening or less nonsensical when argued in neurophysiological terms.

Like Sacks and Luria, Rosenfield uses historically well-documented cases to illustrate and contrast theories of memory, learning, and consciousness. Like them, he provides an ethnographic perspective on the patient, not merely as a patient with a lesion but as a person struggling to make sense of emotional, physical, and social experience. He considers not only laboratory evidence of the patients' verbal and perceptual behavior, but also the stories they tell about their social life and mental experience.

Building on simple observations and comparisons across cases, Rosenfield provides a broad view of the experiences that theories of memory in particular must address. For example, Mr. March could move his left hand, but only when told to do so (p. 58), and otherwise seemed not to relate to it as his own. When a nurse made her hand appear to be his left hand, he casually made sense of the situation:

What is a patient doing when he says that last Saturday he was in the city of La Rochelle, when he has no idea what day yesterday was and denies that he is still in La Rochelle today? Rosenfield closely examines such story-telling behaviors, showing that patients are not merely retrieving facts from memory but making sense of experience. By examining what can go wrong--in maintaining a sense of continuous time, an integrated personality and body image, and abstract categorical relations--we can better understand how consciousness structures everyday experience.

Rosenfield's view of the brain is consistent with cognitive science descriptions of behavior patterns (scripts, grammars), insofar as he acknowledges that such patterns are real psychological phenomena that need to be explained. Yet he insists on an alternative view of neurological mechanism, by which observed behavior patterns are the product of interactions at both social and neural levels. This idea of dialectic organization is important in biology and anthropology, but quite different from the mechanisms designed by most engineers, computer scientists, and cognitive modelers. Stephen Jay Gould provides a useful introduction:

...the three classical laws of dialectics embody a holistic vision that views change as interaction among components of complete systems, and sees the components themselves not as a priori entities, but as both the products of and the inputs to the system. Thus the law of "interpenetrating opposites" records the inextricable interdependence of components; the "transformation of quantity to quality" defends a systems-based view of change that translates incremental inputs into alterations of state; and the "negation of negation" describes the direction given to history because complex systems cannot revert exactly to previous states. (Gould, 1987, pp. 153-4)

Like Edelman, Rosenfield emphasizes the "interpenetrating" multiple levels of individual development, species evolution, and the interaction of cultural and neural processes. But in the narrower style of cognitive neuropsychology, he focuses on what abnormal behavior reveals about normal function. In order to explain dysfunctions, as well as the openness and subjectivity of categories in everyday life, Rosenfield argues for a brain that continuously and dynamically reorganizes how it responds to stimuli (p. 134). Rather than retrieving and matching discrete structures or procedures, the brain composes itself in-line, in the very process of coordinating sensation and motion (hence behavior is "situated"). By Dewey's analysis, perceptual and motor processes in the brain configure each other without intervening subconscious "deliberation" (Clancey, in press).

Most interpretations of patients with dysfunctions (e.g., an inability to speak certain kinds of words) have postulated isolated memory or knowledge centers for different kinds of subsystems: auditory, visual, motor. The "diagram makers," exemplified by Charcot in the mid-1800s, drew pictures of the brain with "centers," linking memory and parts of the body. Dysfunctions were explained as loss of memory, that is, loss of specific knowledge stored in the brain. Rosenfield claims instead that memory loss in a brain-damaged patient is not the loss of a "memory trace," but evidence of a restructuring of how the brain operates. That is, we are not observing a primary, isolated "loss," but a secondary process of reorganization for the sake of sustaining self-image:

Patients with brain damage are confused when they fail to recognize and remember, and it is this confused, altered awareness, as much as any specific failures of memory, that is symptomatic of their illness. (p. 34)

The first section of the book recapitulates some of the analysis from Rosenfield's Invention of Memory (Rosenfield, 1988; Clancey, 1991), but elaborates it from the perspective of conscious experience:

Unlike Edelman, Rosenfield makes no attempt to address the AI audience directly. His statements require some reformulation to bring home the insights. For example, Rosenfield says, "No machine is troubled by, or even intrigued by, feelings of certainty that appear contradictory." (p. 12) Yet, AI researchers cite examples of how a program detects contradictory conclusions and uses that information. Without further discussion, it is unclear how being troubled is an essential part of creating new goals and values.

Providing a convincing case requires understanding the reader's point of view well enough to anticipate rebuttals. To this end, I re-present Rosenfield's key cases and contrast his analysis with other cognitive science explanations. The central themes of his analysis are: non-localization of function, the nature and role of self-reference (subjectivity), the origin and sense of time in remembering, the relational nature of linguistic categories, and the problem of multiple personalities. I reorder these topics to convey their neural-architectural implications more clearly.

For example, "Mary Reynolds could, at different times, call the same animal a 'black hog' or a 'bear.'" This behavior was integrated with alternative personalities, one daring and cheerful, the other fearful and melancholic. From the standpoint of cognitive modeling based on stored linguistic schemas, there would be two memories of facts and skills, two coherent subconscious sets of representational structures and procedures. Rosenfield argues instead:

Referring to another multiple personality, Rosenfield says,

The nature of self-reference is revealed by patients with loss of awareness of their limbs ("alien limbs"), as well as the changed experience of people who become blind. For example, Hull suggests that after becoming blind he has difficulty in recollecting prior experiences that involve seeing. Rosenfield's interpretation is that neurological structures involved in seeing are now difficult to coordinate with Hull's present experience. His memories are not lost, but his visual self-reference is limited. That is, remembering is a kind of coordination process. Hull's present self-image is visually restricted: "There is no extension of awareness into space…I am dissolving. I am no longer concentrated in a particular location…" (quoted by Rosenfield, p. 64) Without an ongoing visual frame of reference, he is unable to establish relations to his prior visual experience.

Again, recollecting isn't retrieving and reciting the contents of memory, but a dynamic process of establishing "relations to one's present self." (p. 66) "Establishing relations" means physically integrating previous neural activations with currently active neural processes. A stored linguistic schema model fits Hull's experience less well than a model of memory based on physical processes of bodily coordination.

The case of Oliver Sacks's (1984) alien leg (paralyzed and without feeling because of an accident) also reveals self-referential aspects of awareness. Upon seeing his leg in his bed and not recognizing it, Sacks actually tried to toss it out of the bed and landed on the floor himself. Rosenfield emphasizes that "seeing is not by itself 'knowing' and that the lack of inner self-reference, together with the incontrovertible sight of the leg, therefore created a paradoxical relation to it" (p. 53). Sacks is not just sensing his leg, for he can clearly see it and recognizes that it is a leg. Instead, Sacks is relating his categorizations to his ongoing sense of himself and his surroundings. If we see a strange object in our bed (especially an unfamiliar leg), we move to throw it out. Rosenfield argues that we cannot separate categorizing from this ongoing process of sustaining the self vs. non-self relation. Both Rosenfield and Edelman argue that most cognitive models and AI programs lack self-reference, or view it as a secondary reflection after behavior occurs. Edelman's models suggest that self-reference involves neurological feedback between levels of categorization, including feedforward relations between higher-level coordinating and lower-level perceptual categorizations.

Another patient studied by Charcot, Monsieur A, lost his ability to recognize shapes and colors. He could no longer draw or visualize images (both of which he previously did extraordinarily well) or even recognize his family. As often occurs in these cases, Monsieur A was now, in his words, "less susceptible to sorrow or psychological pain." This diminished sense of pleasure and pain indicates a change in self-reference, of awareness of the self. Oddly, Monsieur A could speak and answer questions and continued his work and everyday life in a somewhat disjointed way. But he was unable to establish a relation between words and his sensory experience. He understood words only in their abstract relations. As in Hull's case, this inability to perceive now impaired his ability to remember; as Monsieur A put it, "Today I can remember things only if I say them to myself, while in the past I had only to photograph them with my sight." (p. 93) This suggests again that remembering is integrated with sensory experience, that remembering is a form of perceiving.

Early work by the "diagram makers" viewed neural lesions in terms of cutting off areas of the brain, such that stored images, word definitions, or the like are inaccessible. Contradicting Charcot, Rosenfield claims that Monsieur A had not lost specific visual memories, but his ability to integrate present visual experience--to establish a present sense of himself--that included immediate and practical relation with colors and shapes. Semantic content doesn't reside in a store of linguistic categorizations, but in the relation of categorizations to each other. Indeed, every categorization is a dynamic relation between neural processes. Edelman's model suggests that in Monsieur A neural maps that ordinarily relate different subsystems in the brain are unable to actively coordinate his visual sensory stimuli with ongoing conceptualization of experience. Experiments show that sensory categorization may still be occurring (e.g., some patients unable to recognize friends and family may exhibit galvanic skin responses) (p. 123). But conscious awareness of sensation requires establishing a relation with the current conceptualization of the self.

The process of sustaining self-coherence has a holistic aspect, such that loss of any one sensory modality has global effects on memory and personality. Again, this argues for consciousness not as a side-effect of a discrete assembly of components, but as the business of the brain as it coordinates past activation relations with ongoing perception and movement.

Mabille and Pitres' patient in 1913, Mr. Baud, provides a good example. When asked if he knows the town of La Rochelle, he replies that he went there some time ago to find a pretty woman. He remembers where he stayed, and says that he never went back. Yet he has been in a hospital in La Rochelle for thirteen years. He also says he has a mistress whom he sees every Saturday. Asked when he last saw her, he responds, "Last Saturday." But again, he hasn't left the hospital in all this time (p. 80). Contradicting Mabille and Pitres, Rosenfield tells us that we have no idea what the patient meant by "last Saturday." It could not possibly be a specific Saturday, since he has no idea what day today is; Mr. Baud has no specific memory beyond the past twenty seconds.

Oddly, Mr. Baud is a bit like a stored linguistic schema program. He knows how to use words in a conversation, but he has no ongoing, connected experience. He is like a program that has only been living for twenty seconds, but has a stored repertoire of definitions and scripts. He knows the patterns of what he typically does and answers questions logically on this basis: Since he goes every Saturday, he must have gone last Saturday. But from the observer's perspective, which transcends Mr. Baud's twenty-second life span, he lacks a sense of time. Interpreting his case is tricky, because Mr. Baud can't be recalling La Rochelle as we know it if he doesn't acknowledge that he's currently in that town. How, in fact, could he be recalling any place or any time at all, in the way that we make sense of our location and temporal experience?

Rosenfield argues that our normal "relation to the world is not sometimes abstract and sometimes immediate, but rather always both." (p. 80) To say that Mr. Baud has abstract, long-term memory, but lacks immediate, short-term memory ignores how our attention shifts in normal experience as we relate recollections to what we are experiencing now. "Distant experiences become specific--refer to a specific event in our past--when we can relate them to our present world." (pp. 75-76) Without this ability to coordinate his reminiscences with his present experience, Mr. Baud exhibits a breakdown in an aspect of sense-making, not merely a loss of memory or inability to store recent experiences. His recollections are "odd abstractions, devoid of temporal meaning." (p. 77) That is, a sense of time involves a kind of self-reference that Mr. Baud cannot experience.

Strikingly, this view of meaning goes beyond the idea of "indexicality," previously emphasized in situated cognition (e.g., Agre, 1988). Understanding is not just establishing the relation of words like "last Saturday" to the present situation. Knowing the present situation involves having a dynamic sense of self. Without being able to relate my experiences (either past or present) back to me (p. 87), my awareness of the past, of history, of memory, and of the present situation will be impaired. Put another way, understanding, as well as remembering and reasoning, involves orienting my self. If I am confused about who I am, I can't understand what "here" and "now" mean.

Consciousness as a mechanism sustains a relation between our recollections and our ongoing sense of self. Naming, history-telling, and theorizing--integral aspects of sense-making (Schön, 1990)--are ways of establishing relationships in our experience (p. 98). Mr. Baud, lacking an "immediate" relation to his surroundings, can't have an abstract relation either. His recollections are timeless in lacking a relation to the present (p. 80). The view that there are isolated functions, such as short- and long-term memory, and that one is simply missing, is inadequate. It is Mr. Baud's ability to coordinate neural processes, not his access to stored facts, that is impaired. This illustrates the thematic contention of Rosenfield and Edelman that cognitive science benefits from a biological re-examination of the nature of memory. In effect, prevalent functional models and computational engines assume a separability of activation and processing that the brain does not employ.

Rosenfield cites studies that suggest that dysfunctions like Mr. Baud's appear to be caused by damage to the hippocampus and associated structures in the limbic system (p. 85), which is "essential for establishing the correlations between the body image and external stimuli that are the basis of consciousness." (p. 86) Edelman's analysis goes further to relate such "primary consciousness" to conceptual categorization by the cortex.

As an example, Rosenfield describes brain-damaged patients unable to use terms like "red" abstractly, but who can nevertheless perceive and sort objects by color. Again, Rosenfield argues against a "disconnection model" in which concepts like "red" are assumed to be innate categories. This model is still current in cognitive neuropsychology, with claims that different kinds of words such as proper nouns, verbs, prepositions, etc. are stored in different parts of the brain that are genetically functionally specialized. An alternative explanation is that the mechanism by which relations are constructed and differences generalized is impaired: The patient "finds puzzling Gelb and Goldstein's insistence that all the variant shades are 'red.'" (p. 103) The patient has difficulty forming kinds of conceptual categorizations (i.e., coordinating perceptual categorizations), not retrieving facts about colors.

Using examples from infant learning, Rosenfield argues, "Learning a language might well be described as the acquisition of the skill of generalization or categorization." (p. 105) That is, naming is a sense-making activity, and processes of categorization build on each other. So, for example, "children first learn the words for size and only later the words for colors." (p. 105)

Crucially, categories are relations, as Rosenfield says repeatedly. Edelman calls coherent responses to stimuli "perceptual categorization." But according to Rosenfield, this fails to emphasize that each categorization is a relation to other coherent coordinations (ongoing and previously activated) (p. 83). That is, the meaning of a concept is embodied in the functional relation of ongoing neural processes, themselves constructed from prior coordinations. Bartlett (1932) made this same point:

[T]here is no reason in the world for regarding these [traces/schemata] as made complete at one moment, stored up somewhere, and then re-excited at some much later moment. (p. 211)

Possible relations are constrained by the available mechanisms in the brain, the evolution of human language as a social process, and the development of the individual. Rosenfield illustrates the generalization process of creating new languages with examples of sign language and Creole. Note that the issue is how a new language develops, not how people learn an existing language. In this case, linguists observe a two-generation process by which the first generation of children and adults develops gestures or pidgin, with only a simple grammar if any at all. The younger children of the second generation "abstract (categorize) the gestures of older students, creating from them symbols and more abstract categories of relations among these symbols--a true grammar. An older child may point (gesture) to a rabbit to indicate his subject; a younger one will categorize the pointing gesture as 'rabbit,' and the gesture becomes a symbol." (pp. 110-111) The need for a second generation suggests that neural mechanisms alone are not sufficient, in the individual, for developing a new grammatical language.

In effect, the experience of many different gestures present in the environment becomes categorized into a repeated experience of "gesturing," with an associated typology and ordering of gestures as symbols. A similar analysis is demonstrated by Bamberger's (1991) studies of children learning to perceive and use musical tones, not just as integrated parts of a melody, but as named and ordered objects that can be manipulated to produce meaningful sequences. This developmental process illustrates the "brain's constant reworking of its own generalizations." (p. 111)

The parallel patterns of sign language and Creole development suggest the importance of social sharing of language, as well as neurological constraints that limit an older child's ability to abstract a language beyond its immediate and practical relations. We are reminded of brain-damaged patients who lack a sense of abstract time, color, shape, etc., but can handle particulars in the "here and now." (Again, without a possible concomitant abstract meaning, the particulars have a different sense than they do for people with abstraction capabilities.)

Rosenfield and Edelman both argue against the existence of specific innate categories or grammar rules, and emphasize the overwhelming importance of cultural influences on what can be accomplished by individuals. However, they agree that the mechanism that enables grammatical language to develop involves neurological structures that evolved in the human species and are not found in other animals. Nevertheless, these are "new areas of the brain…not new principles of mental function." (p. 119) This is supported by Edelman's model, which shows new relations between existing processes of categorization, not a new kind of compositional activation process. Similar arguments are made by Head and Bartlett; more recently, Calvin (1990) claims that sequencing control processes for physical movements such as throwing are involved in speech and complex conceptualization. That is, the same kinds of neurological processes may occur in different areas of the brain, becoming specialized for different functions through use. Rosenfield calls this the "holistic" view, in which parts are "not independently specialized, but interdependent." (p. 24) Categorizing areas of the brain establish dynamic, time-sensitive relations to other areas, as opposed to storing discrete representations of words, sounds, meanings, etc. in isolation. This is also what Rosenfield means when he says that functions are not predetermined, either as inborn in the infant or as pre-stored responses in the adult.

The primary repertoire of neural interactions, within which categorization and coordination occur, is not determined by genes, but develops in adolescence through a complex process involving topological constraints, redundant connections, and experiential strengthening. Even the brains of identical twins are wired differently (p. 82). This is of course strong evidence against the idea that specific linguistic rules or categories could be inherent in the brain. Rather, the existence of commonalities in human language, known as universal grammar, is evidence of common transformational principles by which categories are formed.

Specifically, language adds a new kind of self-reference (p. 119), in which we become explicitly conscious of ourselves by naming phenomenological experience and through historical accounts and causal rationalizations (Schön, 1990). This self-reference required the evolution of a special memory system that "categorized the vocal cord's gestural patterns":

The title Bright Air, Brilliant Fire comes from Empedocles, "a physician, poet, and an early materialist philosopher of mind" (p. xvi) in the fifth century B.C., who suggested that perception can be understood in terms of material entities. Edelman believes that understanding the particular material properties of the actual "matter underlying our minds--neurons, their connections, and their patterns," (p. 1) is essential for understanding consciousness and building intelligent machines, because the brain works unlike any machine we have ever built. Of special interest is how Edelman relates his understanding of sensorimotor coordination to Lakoff's analysis of concepts as embodied processes.

The book is organized into four parts: 1) Problems with current models of the mind; 2) Origins of new approaches based on evolution and developmental biology; 3) Proposals for neurobiological models of memory, consciousness, and language; and 4) Harmonies or "fruitful interactions that a science of mind must have with philosophy, medicine, and physics." (p. 153) The book concludes with a forty-page postscript, "Mind without biology," criticizing objectivism, mechanical functionalism, and formal approaches to language. I will cover these ideas in the same order: 1) biological mechanisms that are potentially relevant, and perhaps crucial, to producing artificial intelligence; 2) a summary of the theory of Neural Darwinism; 3) how consciousness arises through these mechanisms; and 4) the synthesis of these ideas in the Darwin III robot. In Section 4, I elaborate on the idea of pre-linguistic coordination, which reveals the limitations of stored linguistic schema mechanisms. In Section 5, I argue for preserving functionalism as a modeling technique, while accepting Edelman's view that it should be rejected as a theory of the mind.

Cognitivism is the view that reasoning is based solely on manipulation of semantic representations. Cognitivism is based on objectivism ("that an unequivocal description of reality can be given by science") and classical categories (that objects and events can be "defined by sets of singly necessary and jointly sufficient conditions") (p. 14). This conception is manifest in expert systems, for example, or any cognitive model that supposes that human memory consists only of stored linguistic descriptions (e.g., scripts, frames, rules, grammars). Echoing many similar analyses (e.g., Winograd and Flores, 1986; Lakoff, 1987; Dennett, 1992a), Edelman characterizes these computer programs as "axiomatic systems" because they contain the designer's symbolic categories and rules of combination, from which all the program's subsequent world models and sensorimotor procedures will be derived. Paralleling the claims of many other theorists, from Collingwood and Dewey to Garfinkel and Bateson, he asserts that such linguistic models "…are social constructions that are the results of thought, not the basis of thought." (p. 153) He draws a basic distinction between what people do or experience and their linguistic descriptions (names, laws, scripts):

Although science cannot exhaustively describe particular, individual experiences, it can properly study the constraints on experience. Edelman focuses on biological constraints. He claims that the biological organization of matter in the brain produces kinds of physical processes that have not been replicated in computers. "By taking the position of a biologically-based epistemology, we are in some sense realists" (recognizing the inherent "density" of objects and events) "and also sophisticated materialists" (p. 161) (holding that thought, will, etc. are produced by physical systems, but emphasizing that not all mechanisms have the same capabilities).

Edelman believes that cognitivism produced "a scientific deviation as great as that of the behaviorism it attempted to supplant" (p. 14) in assuming that neurobiological processes have no properties that computers don't already replicate (e.g., assembling, matching, and storing symbol structures). This assumption limits what current computers can do, as manifest in: the symbol grounding problem, combinatorial search, inflexibilities of a rule-bound mechanism, and inefficient real-time coordination. The most "egregious" category mistake is "the notion that the whole enterprise [of AI] can proceed by studying behavior, mental performance and competence, and language under the assumptions of functionalism without first understanding the underlying biology." (p. 15) By this account, Newell's (1990) attempts to relate psychological data to biological constraints (not cited by Edelman) are inadequate because the "bands" of Unified Theories of Cognition misconstrue the interpenetration of neural and environmental processes (Section 2). Putting "mind back into nature" requires considering "how it got there in the first place.... [We] must heed what we have learned from the theory of evolution."

Edelman proceeds to summarize the basic developmental neurobiology of the brain, "the most complicated material object in the known universe." (p. 17) Development is epigenetic, meaning that the network and topology of neural connections are not prespecified genetically in detail, but develop in the embryo through competitive neural activity. Surprisingly, cells move and interact: "in some regions of the developing nervous system up to 70 percent of the neurons die before the structure of that region is completed!"1 (p. 25) The brain is not organized like conventionally manufactured hardware: the wiring is highly variable and the borders of neural maps change over time. Individual neurons cannot carry information in the sense that electronic devices carry information, because there is no predetermination of what specific connections and maps mean:

Edelman observes that only biological entities have intentions, and asks, what kind of morphology provides a minimum basis for mental processes, and "when did it emerge in evolutionary time"? (p. 33) How did the brain arise by natural selection? By better understanding the development of hominid behavior in groups and the development of language, we can better characterize the function and development of mental processes, and hence understand how morphology was selected. Given the 99% genetic similarity between humans and chimpanzees, we would do well to understand the nature, function, and evolution of the differences. Edelman seeks to uncover the distinct physical capabilities that separate animals from other life and humans from other primates. What hardware organizations make language and consciousness possible?

2) No two people have identical antibodies;

3) The system exhibits a form of memory at the cellular level (prior to antibody reproduction).

The species concept arising from…population thinking is central to all ideas of categorization. Species are not 'natural kinds'; their definition is relative, they are not homogeneous, they have no prior necessary condition for their establishment, and they have no clear boundaries. (p. 239)

Edelman's theory of neuronal group selection (TNGS) has several components: 1) how the structure of the brain develops in the embryo and during early life (topobiology); 2) a theory of recognition and memory rooted in "population thinking" (Darwinism); and 3) a detailed model of classification and neural map selection (Neural Darwinism).

Topobiology "refers to the fact that many of the transactions between one cell and another leading to shape are place dependent." (p. 57) This theory partially accounts for the nature and evolution of three-dimensional functional forms in the brain. Movement of cells in epigenesis is a statistical matter (p. 60), leading identical twins to have different brain structures. Special signaling processes account for the formation of sensory maps during infancy (and in some respects through adolescence). The intricacy of timing and placement of forms helps explain how great functional variation can occur; this diversity is "one of the most important features of morphology that gives rise to mind." (p. 64) Diversity is important because it lays the foundation for recognition and coordination based exclusively on selection within a population of (sometimes redundant) connections.

Population thinking is a characteristically biological mode of thought "not present or even required in other sciences" (p. 73). It emphasizes the importance of diversity--not merely evolutionary change, but selection from a wide variety of options. "Population thinking states that evolution produces classes of living forms from the bottom up by gradual selective processes over eons of time." (p. 73) Applied to populations of neuronal groups, population thinking yields three tenets:

--experiential selection, the creation of a secondary level repertoire, called neuronal groups, through selective strengthening and weakening of the neural connections, and

--reentry, which links two maps bi-directionally through "parallel selection and correlation of the maps' neuronal groups" (p. 84).
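The contrast between selection and instruction in these tenets can be made concrete with a toy simulation. The Python sketch below is only an illustration under stated assumptions, not Edelman's model: a hypothetical "primary repertoire" of 200 groups with random preferences is generated before any stimulus is seen, and experiential selection is modeled as multiplying the gain of the ten best-responding groups while slowly decaying the rest. The names `repertoire`, `gain`, and `experiential_selection`, and all parameter values, are invented for the example.

```python
import numpy as np

# Hypothetical toy parameters; nothing here is taken from Edelman's models.
rng = np.random.default_rng(0)
N_GROUPS, DIM = 200, 8

# "Primary repertoire": groups with randomly varied preferences, generated
# without any knowledge of the stimuli they will later encounter.
repertoire = rng.normal(size=(N_GROUPS, DIM))
repertoire /= np.linalg.norm(repertoire, axis=1, keepdims=True)
gain = np.ones(N_GROUPS)  # efficacy of each group's connections


def responses(stimulus, gain):
    """Rectified match of every group to the stimulus, scaled by its gain."""
    s = stimulus / np.linalg.norm(stimulus)
    return gain * np.clip(repertoire @ s, 0.0, None)


def experiential_selection(stimulus, gain, top_k=10, boost=0.2, decay=0.02):
    """Amplify the groups that already respond best and weaken the rest.

    The groups' preferences are never rewritten: selection only changes
    which pre-existing variants dominate the response."""
    r = responses(stimulus, gain)
    winners = np.argsort(r)[-top_k:]
    new_gain = gain * (1.0 - decay)
    new_gain[winners] = gain[winners] * (1.0 + boost)
    return new_gain, winners


stimulus = rng.normal(size=DIM)
before = responses(stimulus, gain).max()
for _ in range(20):
    gain, winners = experiential_selection(stimulus, gain)
after = responses(stimulus, gain).max()
print(f"peak response before selection: {before:.2f}, after: {after:.2f}")
```

The preference vectors in `repertoire` never change; only the relative gains do, so recognition here emerges from amplifying variation that already existed. Reentry is deliberately left out of this sketch.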

A neural map is composed of neuronal groups. Two functionally different neural maps connected by reentry form a classification couple:

| Evolutionary level | Neural counterpart |
|---|---|
| Species | Functionally segregated map, responding to local features and participating in classification couples with other maps |
| Population | Map composed of neuronal groups |
| Individual | Neuronal group |

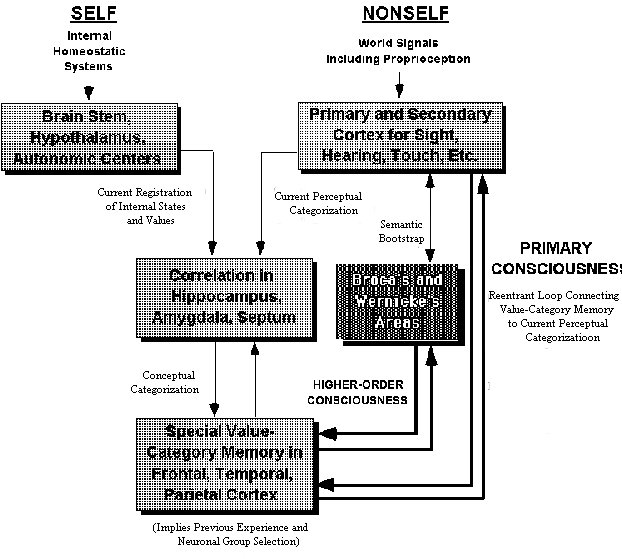

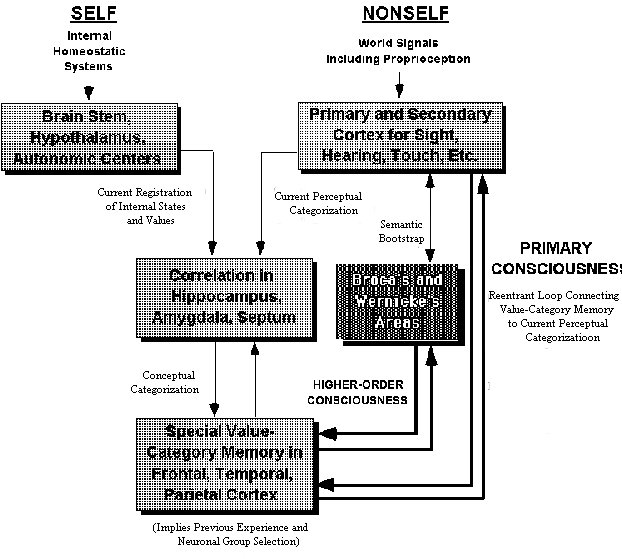
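A classification couple can likewise be sketched in a few lines. In the Python toy below, which is an assumption-laden illustration rather than the Darwin III architecture, two hypothetical maps (labeled "visual" and "tactile") each categorize their own input by selecting the best-matching group, and a single matrix of reentrant links is strengthened whenever two groups win together. Because the links are read in both directions, input to either map alone later evokes the correlated group in the other. The map sizes, the winner-take-all rule, and the function names are all hypothetical.

```python
import numpy as np

# Hypothetical toy parameters, not taken from Darwin III.
rng = np.random.default_rng(1)
N_A, N_B, DIM_A, DIM_B = 12, 12, 6, 4


def unit_rows(m):
    """Normalize each row (each group's preference) to unit length."""
    return m / np.linalg.norm(m, axis=1, keepdims=True)


# Two functionally different maps, e.g. "visual" (A) and "tactile" (B).
map_a = unit_rows(rng.normal(size=(N_A, DIM_A)))
map_b = unit_rows(rng.normal(size=(N_B, DIM_B)))
# Reentrant links between groups in A and groups in B, used in both directions.
reentry = np.zeros((N_A, N_B))


def categorize(map_, x):
    """Index of the group in a map whose preference best matches input x."""
    return int(np.argmax(map_ @ x))


def couple(x_a, x_b, rate=1.0):
    """Simultaneous activity strengthens the reentrant link between winners."""
    a, b = categorize(map_a, x_a), categorize(map_b, x_b)
    reentry[a, b] += rate
    return a, b


def evoke_b(x_a):
    """Input to map A alone evokes a group in map B through reentry."""
    return int(np.argmax(reentry[categorize(map_a, x_a)]))


def evoke_a(x_b):
    """The same links, read in the other direction."""
    return int(np.argmax(reentry[:, categorize(map_b, x_b)]))


# Two hypothetical objects, each seen and touched at the same time;
# their feature vectors are chosen to match particular groups exactly.
objects = [(map_a[0], map_b[3]), (map_a[5], map_b[7])]
for _ in range(5):
    for seen, felt in objects:
        couple(seen, felt)

print(evoke_b(map_a[0]), evoke_a(map_b[7]))
```

With no reentrant history, a row or column of zeros makes `np.argmax` return index 0, so a fuller model would need a threshold for "no association." The sketch also ignores the parallel, continuous signaling between maps that Edelman emphasizes.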

4. Formation of synaptic connections (primary repertoire) and neuronal groups (secondary repertoire) can be intermixed (p. 85). The extraordinary, three-fold increase in human brain size after birth (Leakey, 1992, p. 159) may be related to the formation of reentrant loops between the conceptual cortex and perceptual categorization, enabling primary consciousness (Figure 1).

5. See Reeke, et al. (1990) for further comparison of reentry to recursion.

Memory "results from a process of continual recategorization. By its nature, memory is procedural and involves continual motor activity." (p. 102) Hence, memory is neither a place nor identical to the low-level mechanisms of synaptic reactivation; and certainly memory is not a coded representation of objects in the world (p. 238). Rather, "memory is a system property" (p. 102) involving not only categorization of sensory-motor activations, but categorizations of sequences of neural activations:

The brain...has no replicative memory. It is historical and value driven. It forms categories by internal criteria and by constraints acting at many scales. (p. 152)

[Q]ualia may be usefully viewed as forms of higher-order categorization, as relations reportable to the self.... (p. 116)

[I]n some animal species with cortical systems, the categorizations of separate causally unconnected parts of the world can be correlated and bound into a scene…a spatiotemporally ordered set of categorizations of familiar and unfamiliar events…. [T]he ability to create a scene...led to the emergence of primary consciousness. (p. 118)

--a "value-category" memory, allowing "conceptual responses to occur in terms of the mutual interactions of the thalamocortical and limbic-brain stem systems" (p. 119); and

--"continual reentrant signaling between the value-category memory and the ongoing global mappings that are concerned with perceptual categorization in real time."

Creation of language in the human species required the evolution of 1) cortical areas (named after Broca and Wernicke) to finely coordinate "acoustic, motor, and conceptual areas of the brain by reentrant connections…[serving] to coordinate the production and categorization of speech" and 2) another layering of categorization, on top of conceptualization, to provide "the more sophisticated sensorimotor ordering that is the basis of true syntax." (p. 127) See Table 2 and Figure 1 (note 7).

| Neural mechanism | Organisms | Kind of awareness |
|---|---|---|
| Cortex reentrant loop connecting value-category memory to current perceptual categorization | Chimpanzees, probably most mammals and birds | Awareness of directed attention in activity (awareness of intention; basic self-reference) |
| Broca's and Wernicke's areas; bootstrapping perceptual categorization through linguistic symbolization | Humans | Awareness of having previous experiences, imagining experiences; conceptualization of self, others, world (awareness of self-reference) |

In a process called "semantic bootstrapping," "the brain must have reentrant structures that allow semantics to emerge first (prior to syntax) by relating phonological symbols to concepts." (p. 130) By "phonological symbols" Edelman apparently means words, viewed as acoustic categorizations. He goes on to say, "When a sufficiently large lexicon is collected, the conceptual areas of the brain categorize the order of speech elements." Thus, syntactic correspondences are generated, "not from preexisting rules, but by treating rules developing in memory as objects for conceptual manipulation." This is a memory for actual speaking coordinations, not a memory of stored grammar expressions. Edelman doesn't clearly say what he means by conceptual manipulation, but it presumably involves recategorization of previous symbol sequences, as well as categorization of the relation of concepts to symbol sequences:

Thus, there are stages of intention, reference, awareness, and control: Conceptualization enables an animal to exhibit intention. Primary consciousness involves awareness of intention, relating the self to ongoing events. Through categorizations of scenes involving intentional acts of self and others, animals with primary consciousness can exhibit an understanding of reference (e.g., a dog that sees a ball knows that a game is beginning). In higher-order consciousness, intentional acts are imagined, modeled, and controlled through linguistic actions over time. With language, reference becomes symbolic. Self-reference begins as value-oriented categorization. When concepts of the self, the past, and the future relate conceptual-symbolic models produced in speech to ongoing perceptual experience, we become aware of self-reference and consciously direct it (e.g., Monsieur A's statement about the need to say things to himself in order to remember them). Reentrant loops give us the ability to project visual, verbal, and emotional experiences; we can attentively enact previously imagined actions--"as if one piece of spacetime could slip and map onto another piece." (p. 169) Coordinating awareness of doing, talking, and visualizing so as to be "consciously unconscious" is a well-known problem in sports (Gallwey, 1974). "We live on several levels at once." (p. 150)

Edelman extends his model to broadly explain how neural dysfunctions lead to the kinds of behavior discussed by Rosenfield. He underscores that "All mental diseases are based on physical changes." (p. 178) In particular, he believes that Freud's explanations are limited because they inadequately characterize biological processes:

7. Recalling Rosenfield’s remark that Edelman should emphasize relations instead of categories, we might remain alert to misleading aspects of such diagrams. In particular, "correlation" occurs as areas of the brain configure each other; particular kinds of categories or memories are not located in particular boxes. If we treat boxes uniformly as structural areas of the brain and the lines as reentrant activation links, then classification occurs as a coupled reconfiguration of maps (composed of neuronal groups) within two or more boxes. That is, categorizing physically exists only as a process of co-configuring multiple areas, not as stuff stored in some place.

Darwin III (note 8) is a "recognition automata that performs as a global mapping" (p. 92) that coordinates vision with a simulated tactile arm in a simulated environment. It is capable of "correlating a scene" by reentry between value-category memory and perceptual categorizations. Values are built in (e.g., light is better than darkness), but the resulting categorizations are all internally developed. The system consists of 50 maps, containing 50,000 cells and over 620,000 synaptic junctions (Reeke, et al., 1990, p. 608). This system rests on the model of "reentrant cortical integration" (RCI) which has been tested with much larger networks (129 maps, 220,000 cells, and 8.5 million connections) that simulate visual illusions and the detection of structure from motion in the monkey's visual cortex.
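The division of labor described here, with values built in and categories developed internally, can be illustrated with a value-gated selection rule. The Python sketch below is hypothetical and far simpler than Darwin III (whose actual rules are described in Reeke, et al., 1990): five motor groups each shift a simulated gaze to one position, a built-in value function scores the resulting brightness ("light is better than darkness"), and the chosen group's weight changes in proportion to value minus a fixed baseline. The brightness profile, baseline, learning rate, and softmax selection are all assumptions made for the example.

```python
import numpy as np

# A toy gaze task; all names and numbers are illustrative assumptions.
rng = np.random.default_rng(2)
brightness = np.array([0.0, 0.1, 1.0, 0.1, 0.0])  # a light at position 2
N_POSITIONS = len(brightness)


def value(position):
    """Built-in value, fixed before learning: light is better than darkness."""
    return brightness[position]


# One hypothetical motor group per gaze target; weights start equal.
weights = np.zeros(N_POSITIONS)


def choose(weights, temperature=0.5):
    """Stochastic selection among motor groups (softmax over weights)."""
    z = (weights - weights.max()) / temperature  # shift for numerical safety
    p = np.exp(z)
    p /= p.sum()
    return int(rng.choice(N_POSITIONS, p=p))


def trial(weights, rate=0.5, baseline=0.2):
    """Move the gaze, then let the value signal gate the weight change."""
    g = choose(weights)
    weights[g] += rate * (value(g) - baseline)  # in-place update
    return g


choices = [trial(weights) for _ in range(200)]
print("preferred gaze target:", int(np.argmax(weights)))
print("share of last 50 gazes on the light:", choices[-50:].count(2) / 50)
```

No weight is set to point at the light; the preference for position 2 emerges from stochastic selection gated by the built-in value, which is the sense in which values are given but categorizations are developed.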

The statistical, stochastic nature of selection is common to many connectionist models. It was mentioned by Bateson (1979) in his own discussion of parallels between the evolution of biological phenotypes and the development of ideas. Edelman's model probes deeper by specifying how neural nets are grown, not merely selected, and how learning is based on internal value. Neural Darwinism can be contrasted with other connectionist approaches in these aspects:

NOMAD is a robotic implementation of Darwin III, claimed to be "the first nonliving thing capable of 'learning' in the biological sense of the word." (p. 193) But Edelman stops short of claiming that it replicates the capabilities of the brain. Building a device capable of primary consciousness will require simulating

To understand why stored linguistic schema models poorly capture the flexibility of human behavior, Rosenfield makes an analogy between posture and speech:

Memory, too, comprises the acquired habits and abilities for organizing postures and sentences--for establishing relations. (p. 122)

Now, the claim implicit in Edelman's and Rosenfield's argument about biological function (and indeed, implied by Dewey (1896)) is that the human sensorimotor system achieves increasing precision in real-time, as part of its activity. Learning to be more precise occurs internally, within an active coordination. Animal behavior clearly shows that such adaptations don't require language. Indeed, there is a higher order of learning in people, involving a sequence of behaviors, in which we represent the world, reflect on the history of what we have done, and plan future actions. In this case, exemplified by the engineer redesigning the robot, the representational language develops in conscious behavior, over time, in cycles of perceiving and acting. Newell and Simon (1972, p. 7) called this kind of learning a "second-order effect."

Rosenfield and Edelman insist that learning is also primary and is at this level not limited by linguistic representational primitives. Certainly, a scientist looking inside will see that adaptations are bound by the repertoire of neural maps available for selection and the history of prior activations. But first, the learning does not require reasoning about programs, either before or after activity. The bounding is in terms of prior coordinations, not descriptions of those coordinations, either in terms of the agent's body parts or places in the world. The claim is that this direct recomposition of prior sensorimotor coordinations, in the form of selection of maps and maps of maps, provides a "runtime" flexibility that executing linguistic circumscriptions of the world does not allow.

Indeed, reflection on prior behavior, learning from failures, and representing the world provide another kind of flexibility that this primary, non-linguistic learning does not allow. Chimps are still in trees; men walk on the moon. But understanding the role of linguistic models requires understanding what can be done without them. Indeed, understanding how models are created and used--how they reorient non-linguistic neurological components (how speaking changes what I will do)--requires acknowledging the existence and nature of this nonlinguistic mechanism that drives animal behavior and still operates inside the human. For example, it is obvious that the dynamic restructuring of posture and speech at a certain grain size bears, for certain kinds of knowledgeable performances, a strong isomorphic mapping to linguistic descriptions, as for example piano playing is directed by a musical score. But in the details, we will find non-linguistically controlled improvisation, bound not by our prior descriptions, but by our prior coordinations. For example, the piano player must sometimes play an error through again slowly to discover what finger is going awry, thus representing the behavior and using this description as a means of controlling future coordinations. How that talk influences new neurological compositions, at a level of neural map selection that was not consciously influenced before, becomes a central issue of neuropsychology.

The machine learning idea of "compiled knowledge" suggests that subconscious processes are merely the execution of previously conscious steps, now compiled into automatic coordinations. Edelman and Rosenfield emphasize that such models ignore the novel, improvised aspect of every behavior. Certainly the model of knowledge compilation has value as an abstract simplification. But it ignores the dynamic mechanism by which sensorimotor systems are structured at runtime with fine relational adjustments that exceed our prior verbal parameterizations. And for animals, such models of learning fail to explain how an animal learns to run through a forest and recognize prey without language at all.

In learning to ski, for example, there is a complex interplay of comprehending an instructor's suggestions, automatically recomposing previous coordinations, and recomposing (recalling) previous ways of describing what is happening. Behavior is coordinated on multiple levels, both linguistic and nonlinguistic, with prior ways of talking, imagined future actions, and attention to new details guiding automatic processes. The important claim is that representing what is happening, as talk to ourselves and others, occurs in our conscious behavior, that it is a manifestation of consciousness, and that it must necessarily be conscious in order to have deliberate, goal-directed effect. Dewey and Ryle's claim that deliberation occurs in our behavior, and not in a hidden way inside, is another way of framing Edelman and Rosenfield's claim that we must understand the structure of consciousness, the progressive flow of making sense of experience, if we wish to understand human cognition.

10. History does not record whether Head’s "happening," so far in advance of the 1960s American theatrical form of the same name, bears any relation to the "be-in" experienced by Heidegger.

Edelman's narrow conception of computer science is manifested in his use of the terms "software," "instruction," "computation," "information," "machine," and "computer" itself. For example, he says that it is not meaningful to describe his simulations of artifacts "as a whole as a computer (or Turing machine)." (p. 191) Thus, he identifies "computer" with "prespecified effective procedure." This is silly, given that his own system, NOMAD, is built from an N-cube supercomputer. The useful distinctions are the differing architectures, not whether a computer is involved. It is a category error to identify a particular software-hardware architecture as "acting like a computer." Here Edelman speaks like a layperson, as if "the computer" is a theory of cognition.

Unfortunately, this misunderstanding leads Edelman to reject all functional approaches to cognitive modeling. He believes that functionalism characterizes psychological processes in terms of software algorithms, implying that the hardware ("the tissue organization and composition of the brain", p. 220) is irrelevant. From this perspective, functionalism involves promoting a particular kind of hardware architecture, namely that of today's computers, as well as a particular kind of computational model, namely algorithms.

Part of the difficulty is that "functionalism" in cognitive science refers to the idea that principles of operation can be abstractly described and then implemented or emulated in different physical systems (e.g., mental processes are not restricted to systems of organic molecules), as well as the more specific view that existing computer programs are isomorphic to the processes and capabilities of human thought (recently stated clearly by Vera and Simon (in press)). Within this strong view of Functionalism proper (capital F), proponents vary from claiming that the brain is equivalent to a Turing machine (e.g., Putnam, 1975), to saying that "some computer" (not yet designed) with some "computational process" (probably more complex than Soar) will suffice. Johnson-Laird states a version of Functionalism, which Edelman is attacking:

On the other hand, it is obvious that Edelman accepts the weaker idea of functional descriptions, for he bases his distinction between perceptual, conceptual, and symbolic categorization on Lakoff's analysis of linguistic expressions (1987). Furthermore, Edelman explicitly acknowledges that in focusing on biology, he does not mean that artifacts must be made of organic molecules. When he says that "the close imitation of uniquely biological structures will be required," (p. 195) he means that developing artificial intelligence requires understanding the properties of mechanisms that today only exist on earth as biological structures. In this respect, Edelman is just as much a functionalist as Dennett. What he means to say is that certain capabilities may be practically impossible on particular hardware. For example, Pagels (1988) argues that it is practically impossible to simulate the brain using a Turing machine, even if it could be so described in principle. AI is a kind of engineering, an effort of practical construction, not of mathematical possibility. Without functional abstractions to guide us, we'd be limited to bottom-up assembling of components to see what develops.

Models of "universal grammar" exemplify how functionalist theories can be reformulated within the biological domain. Arguing against Chomsky's analysis, Edelman claims that Neural Darwinism doesn't postulate "innate genetically specified rules for a universal grammar." (p. 131) But he doesn't consider the possibility that universal grammar may usefully describe (and simplify) the transformations that occur as conceptual and symbolic recategorizations. Functional descriptions, as expressed in today's cognitive models, can provide heuristic guidance for interpreting and exploring brain biology.

Contrasting with Edelman's critique, Pagels (1988) offers a more accessible analysis of the limitations of cognitive science. Pagels states that "the result of thirty years' work…[is] brilliantly correct in part, but overall a failure…. The study of actual brains and actual computers interacting with the world…is the future of cognitive science." (pp. 190-191) Pagels helps us realize the irony that the quest for a physical symbol system so often assumed that the material processes of interaction with the world are inconsequential (the Functionalist stance). Thus, mind is disembodied and a timeless, ungrounded mentalism remains.

According to the Physical Symbol System Hypothesis, the material processes of cognition are the data structures, memories, comparators, and read-write operations by which symbols are stored and manipulated. At its heart, Edelman's appeal to biology is a claim that other kinds of structures need to be created and recombined, upon which sensorimotor coordination, conceptualization, language, and consciousness will be based. This idea is certainly not new. Dewey (1938) argued for biologically-based theories of mind (by which he meant a functional analysis of life experience, akin to Rosenfield's level of description). Dewey also anticipated problems with exclusively linguistic models of the mind (Dewey and Bentley, 1949).

In conclusion, we might forgive Edelman's "bashing," in view of the fragmentation of views and varying formality of AI research. Edelman can hardly be criticized for adopting the most obvious meanings of the terms prevalent in the literature. When he participates in our debate, we can't fault him if he becomes bewildered when we respond, "Not that kind of computer (but one we have yet to invent)" or "Not that kind of memory (but rather one more like what a connectionist hopes to build)." We somehow expect newcomers not to be too critical of what's already on the table, and to sign up instead to the dream.

When studied in detail, Rosenfield's and Edelman's books provide a wealth of new starting points for AI. For example, recently there has been more interest in modeling emotions in AI. These books suggest moving beyond static taxonomies (which are useful early in a scientific effort) to viewing emotions as dynamic, functional, relational experiences. Could the phenomenology of emotional experience be modeled as integral steps in sense-making, as Bartlett's model of reminding suggests?

The oddity of Rosenfield's patients, coupled with an evolving architectural model of the brain, often brings to mind questions for further investigation. For example, how did Mr. Baud's inability to remember experience past twenty seconds impair learning new skills or concepts? Cognitive scientists today could easily suggest interesting problems to give Mr. Baud. Similarly, could Gelb and Goldstein's patient, who couldn't understand proverbs or comparisons, make up a story at all? Did she understand causal explanations? Could she describe and rationalize her own behavior? As Rosenfield's book suggests, cognitive neuropsychology is changing. It is time to seek synergy between our disparate models and evidence. As some reviewers of Newell's Unified Theories of Cognition suggest, this also entails reconceptualizing what models like Soar describe in relation to the brain (Arbib, in press; Pollack, in press).

Rosenfield's analysis provides a partial explanation: Representing and inquiry go on in activity, that is, in cycles of perceiving and expressing (talking, gesturing, writing) over time. By conjecture, sense-making is necessarily conscious because it involves action and comprehension of what our acts mean occurring together. For example, speaking is not just outputting prefabricated linguistic expressions, but a dual process of creating representations in action and comprehending what we are saying. We are "making sense" as we speak--perceiving appropriateness, adjusting, and restating in our activity itself. The process is "dual" because perception, awareness of what we are doing, is integral to every statement. Conscious awareness is not just passive watching, but an active process of sustaining a certain kind of attention that changes the results of inquiry. This analysis suggests specifically that we reconsider "remindings" and other commentary of the subject in experimental problem solving protocols as revealing the perceptual work of creating and using representations.

Following Edelman, the rest of the matter, what is going on behind the scenes, is non-linguistic coordination. Conscious acts of fitting--dealing with breakdowns (Winograd and Flores, 1986)--occur precisely because there is no other place for linguistic representations to be expressed and reflected on, but in our experience itself. This is why we write things down or "talk through" an experience to clarify meanings and implications for future action. Protracted, conscious experience--as in writing a paragraph--is not merely an awareness of elements placed in "working memory," but an active process of recoordinating and recomprehending (reperceiving) what we are doing. In the words of Bartlett, "turning around on our own schemata" is possible precisely because we can re-coordinate non-linguistic schemata in our activity of representing.

According to Edelman, the articulation process of "building a scene" is reflective at a higher-order because of reentrant links between Broca and Wernicke's areas and perceptual categorization (the "semantic bootstrap" of Figure 1): Our perceptual sense of similarity (reminding) and articulation (naming, history-telling, theorizing) are bound together, so symbolizing actions are driving subsequent perceptions. A sequence of such activity is coordinated by composing a story that accumulates observations and conceptual categories into a coherent sense of what we are trying to do (the scene). In other words, being able to create a story (e.g., the sense-making of a medical diagnostician) is precisely what higher-order consciousness allows. Crucially, human stories are not merely instantiated and assembled from grammars, but are coupled to non-linguistic coordinations grounded in perceptual and motor experience (Lakoff, 1987). Hence, consciously-created stories can have an aspect of improvisation and novelty that stored linguistic schema mechanisms do not allow.

This analysis provides an alternative, biologically-grounded perspective on recent arguments about planning and situated action (Suchman, 1987; Agre, 1988). The key idea is that perceiving and acting co-determine each other through reentrant links. Representing occurs in activity, as a means of stepping outside the otherwise automatic process by which neural maps (schemata) are reactivated, composed, and sequenced. Goal-directed, attentive behavior of primary consciousness involves holding active a higher-order organization (global maps) and coordinating the relation to ongoing perceptions (i.e., directed attention). In higher-order consciousness, these global maps are coupled to linguistic descriptions of objects, events, goals, and causal stories.

Robot designers may be impatient with the vagueness of such descriptive theorizing. But it is clear that the clinical evidence and neurobiological mechanisms of Rosenfield and Edelman are adequate to promote further reconsideration of our models of explanation, remembering, and story-telling, including the seminal work of Bartlett.

It is tempting to predict that the development of global map architectures, as in Darwin III, will become a dominant approach for neural network research, effectively building on situated cognition critiques of the symbolic approach (Clancey, in press). However, if it becomes essential to understand the chaotic processes of the brain, as Freeman (1991), Pollack (in press), and others argue, it is less clear how the researchers who brought us Pengi and Soar will participate in building the next generation of AI machines.

All told, there are probably more pieces here than most researchers can follow or integrate in their work. A good bet is that progress in AI will now depend on more multidisciplinary teams and efforts to bridge these diverse fields. Rosenfield and Edelman make a big leap forwards, showing consciousness to be an evolved activity, grounded in and sustaining an individual's participation in the world as a physical and social personality. With theories like self-reference, population thinking and selectionism, we pick up Bateson's challenge in Mind and Nature: A necessary unity, finding the patterns that connect the human world to nature and all of the sciences to each other.

Arbib, M.A. 1992. Schema theory. In S. Shapiro (editor), The Encyclopedia of Artificial Intelligence, New York: John Wiley & Sons.

Arbib, M.A. (in press). Review of Newell's Unified Theories of Cognition. To appear in Artificial Intelligence.

Bamberger, J. 1991. The mind behind the musical ear. Cambridge, MA: Harvard University Press.

Bartlett, F. C. [1932] 1977. Remembering: A Study in Experimental and Social Psychology. Cambridge: Cambridge University Press. Reprint.

Bateson, G. 1979. Mind and Nature: A necessary unity. New York: Bantam.

Berger, P.L. and Luckmann, T. 1967. The Social Construction of Reality: A Treatise in the Sociology of Knowledge. Garden City, NY: Anchor Books.

Bruner, J. 1990. Acts of Meaning. Cambridge: Harvard University Press.

Calvin, W.H. 1990. The Cerebral Symphony: Seashore Reflections on the Structure of Consciousness. New York: Bantam Books.

Calvin, W. H. 1991. Islands in the mind: dynamic subdivisions of association cortex and the emergence of the Darwin Machine. The Neurosciences, 3, pp. 423-433.

Clancey, W.J. 1991. Review of Israel Rosenfield's The Invention of Memory. Artificial Intelligence, 50(2), 241-284.

Clancey, W.J. 1992. Representations of knowing: In defense of cognitive apprenticeship. Journal of Artificial Intelligence in Education, 3(2), 139-168.

Clancey, W.J. (in press). Situated action: A neuropsychological interpretation: Response to Vera and Simon. To appear in Cognitive Science.

Dell, P.F. 1985. Understanding Bateson and Maturana: Toward a biological foundation for the social sciences. Journal of Marital and Family Therapy, 11(1), 1-20.

Dennett, D.C. 1992a. Consciousness Explained. Boston: Little, Brown and Company.

Dennett, D.C. 1992b. Revolution on the Mind: Reviews of The Embodied Mind and Bright Air, Brilliant Fire. New Scientist, June 13, pp. 48-49.

Dewey, J. [1896] 1981. The reflex arc concept in psychology. Psychological Review, III:357-70, July. Reprinted in J.J. McDermott (editor), The Philosophy of John Dewey, Chicago: University of Chicago Press, pp. 136-148.

Dewey, J. [1902] 1981. The Child and the Curriculum. Chicago: University of Chicago Press. Reprinted in J.J. McDermott (editor), The Philosophy of John Dewey, Chicago: University of Chicago Press, pp. 511-523.

Dewey, J. 1938. Logic: The Theory of Inquiry. New York: Henry Holt & Company.

Dewey, J. and Bentley, A.F. 1949. Knowing and the Known. Boston: The Beacon Press.

Edelman, G.M. 1987. Neural Darwinism: The Theory of Neuronal Group Selection. New York: Basic Books.

Edelman, G.M. 1989. The Remembered Present: A Biological Theory of Consciousness. New York: Basic Books.

Freeman, W. J. 1991. The Physiology of Perception. Scientific American, (February), 78-85.

Gallwey, W.T. 1974. The Inner Game of Tennis. New York: Bantam Books.

Gould, S. J. 1987. Nurturing Nature, in An Urchin in the Storm: Essays about Books and Ideas. New York: W.W. Norton and Company. pp. 145-154.

Greenwald, A.G. 1981. Self and memory. In G.H. Bower (editor), The Psychology of Learning and Motivation Volume 15. New York: Academic Press. pp. 201-236.

Hofstadter, D.R. 1979. Gödel, Escher, Bach: an Eternal Golden Braid. New York: Basic Books.

Johnson-Laird, P.N. 1983. Mental Models: Towards a Cognitive Science of Language, Inference, and Consciousness. Cambridge, MA: Harvard University Press.

Lakoff, G. 1987. Women, Fire, and Dangerous Things: What Categories Reveal about the Mind. Chicago: University of Chicago Press.

Leakey, R. and Lewin, R. 1992. Origins Reconsidered: In Search of What Makes Us Human. New York: Doubleday.

Luria, A.R. 1968. The Mind of a Mnemonist. Cambridge, MA: Harvard University Press.

Newell, A. 1990. Unified Theories of Cognition. Cambridge, MA: Harvard University Press.

Newell, A. and Simon, H. 1972. Human Problem Solving. Englewood Cliffs, NJ: Prentice Hall.

Ornstein, R. E. 1972. The Psychology of Consciousness. New York: Penguin Books.

Pagels, H.R. 1988. The Dreams of Reason: The Computer and the Rise of the Sciences of Complexity. New York: Bantam Books.

Pollack, J.B. (in press). Review of Newell's Unified Theories of Cognition. To appear in Artificial Intelligence.

Putnam, H. 1975. Philosophy and our mental life, in Philosophical Papers 2: Mind, Language and Reality. New York: Cambridge University Press. pp. 291-303.

Putnam, H. 1988. Representation and Reality. Cambridge: The MIT Press.

Reeke, G.N. and Edelman, G.M. 1988. Real brains and artificial intelligence. Daedalus, 117 (1) Winter, "Artificial Intelligence" issue.

Reeke, G.N., Finkel, L.H., Sporns, O., and Edelman, G.M. 1990. Synthetic neural modeling: A multilevel approach to the analysis of brain complexity. In G.M. Edelman, W.E. Gall, and W.M. Cowan (editors), The Neurosciences Institute Publications: Signal and Sense: Local and Global Order in Perceptual Maps (Wiley, New York) pp. 607-707 (Chapter 24).

Rosenfield, I. 1988. The Invention of Memory: A New View of the Brain. New York: Basic Books.

Ryle, G. 1949. The Concept of Mind. New York: Barnes & Noble, Inc.

Sacks, O. 1984. A Leg to Stand On. New York: HarperCollins Publishers.

Schön, D.A. 1979. Generative metaphor: A perspective on problem-setting in social policy. In A. Ortony (editor), Metaphor and Thought. Cambridge: Cambridge University Press. pp. 254-283.

Schön, D.A. 1990. The theory of inquiry: Dewey's legacy to education. Presented at the Annual Meeting of the American Educational Association, Boston.

Sheldrake, R. 1988. The Presence of the Past: Morphic Resonance and the Habits of Nature. New York: Vintage Books.

Sloman, A. 1992. The emperor's real mind: Review of Roger Penrose's The Emperor's New Mind: Concerning Computers, Minds, and the Laws of Physics. Artificial Intelligence, 56 (2-3), 355-396.

Smoliar, S.W. 1989. Review of Gerald Edelman's Neural Darwinism: The Theory of Neuronal Group Selection. Artificial Intelligence 39(1), 121-136.

Smoliar, S.W. 1991. Review of Gerald Edelman's The Remembered Present: A Biological Theory of Consciousness. Artificial Intelligence 52(3), 295-319.

Suchman, L. A. 1987. Plans and Situated Actions: The Problem of Human-Machine Communication. Cambridge: Cambridge University Press.

Tyler, S. 1978. The Said and the Unsaid: Mind, Meaning, and Culture. New York: Academic Press.

Vera, A. and Simon, H. (in press). Response to comments by Clancey on "Situated Action: A symbolic interpretation." To appear in Cognitive Science.

von Foerster, H. 1980. Epistemology of communication. In K. Woodward (editor), The Myths of Information: Technology and Postindustrial Culture. Madison, WI: Coda Press.

Winograd, T. and Flores, F. 1986. Understanding Computers and Cognition: A New Foundation for Design. Norwood: Ablex.