For many computer scientists the phenomenon to be replicated--human intelligence--and knowledge are hereby placed in new relation. Previously, we explained intelligent behavior in terms of knowledge stored in memory: word definitions, facts and rules modeling complex causal processes in the world, plans and procedures for doing things. Now, we view these descriptions as descriptive models--which people use like maps, handbooks, and standards for guiding their actions. These descriptions, like the rules in an expert system, are not knowledge itself but representations of knowledge--lacking the conceptual grounding of verbal reasoning in the brain. Like an article in an encyclopedia, such descriptions require interpretation in the context of use, which in people involves not only generating additional descriptions, but conceptualizing in non-verbal modes. As Newell (1982) put it, "Knowledge is never actually in hand...Knowledge can only be created dynamically in time."

The change in perspective can be bewildering: no longer are symbols, representations, text, concepts, knowledge, and formal models equated. Symbols in the brain are not immutable patterns, but structural couplings between modular systems, continuously adapted in use by a generalization process that dynamically recategorizes and sequences different modalities of sensation, conception, and motion (Merzenich, 1983). Representations are not just coded artifacts such as diagrams and text, but in the brain are active processes that categorize and sequence behavior in time (Edelman, 1992; Rosenfield, 1992; Bickhard, 1995). Conceptualization is not just verbal, but may be rhythmic, visual, gestural--ways in which the neural system categorizes sequences of multimodal categorizations in time. In the view of Howard Gardner (1985), people have multiple intelligences, with different ways of making sense and coordinating action. The neuropsychological studies of Oliver Sacks (1987) reveal how people who cannot verbally abstract their experience may nevertheless dance and speak poetically; and people who speak in the particulars of geometric forms and rules may be unable to see the forest for the trees (Clancey, 1997a). Indeed, our textually-rooted robots, like these patients, once loaded with a knowledge base and shoved out into the world of shopping malls, doctor's offices, and national parks, may appear to be dysfunctional morons or idiot savants.

With these observations in mind, some AI researchers seek to invent a new kind of machine with the modularity and temporal dependence of neural coordination. Some connectionists are exploring computational recognition systems based on dynamic coupling, in which subsystems exist only in mutual reinforcement (Freeman, 1991). Structures in the brain form during action itself, like a car whose engine's parts and causal linkages change under the load of a steeper hill or a colder wind. Such mechanisms are "situated" because the repertoire of actions becomes organized by what is perceived--and what is perceived depends on the relations of previous coordinations now reactivating.

In retrospect, we view symbolic models (e.g., natural language grammars) as descriptions of gross patterns that "subsymbolic" systems produce in the agent's interactive behavior (Smolensky, 1988). The "symbolic" AI of the 1950s through the early 1980s is not wrong, but it may be a bit inside out. People do articulate rules, scripts, and definitions and refer to them. These descriptive artifacts are not in their brains, but on their desks and in their libraries. The representations in the brain are different in kind--dynamically formed, changing in use, categorizations of categorizations over time, and multimodal (not just verbal), as means of coordination. Meaning can be written down (described), but words are not concepts; the map is not the territory.

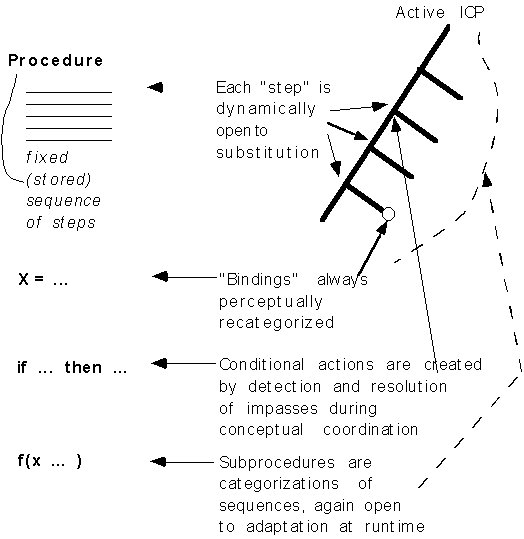

This paper illustrates how viewing human experience in a fresh way, applying a comparative-difference approach, may be fruitful for suggesting new kinds of physical coordination mechanisms. Rather than assuming that brains are capable of executing programs, we consider what neural coordinations are required for sequencing, composition, operand comparison, binding, etc., and look for evidence of neural coordinations that differ from programming operations such as procedural invocation. Examples of using a computer interface and grammatical comprehension are analyzed in detail to bring out properties of sequencing in practiced behavior. These properties are described by a notation called an ICP diagram, representing the "interactive coordination processes" of neural maps. This diagram allows us to contrast neural processes with aspects of stored computer programs that we take for granted in our symbolic cognitive models: ordered steps, variable bindings, conditional statements, and subgoaling. The analysis begins to reveal how the brain accomplishes these structures and relations more flexibly, and hence constitutes a new kind of computational device--one we don't fully understand and can't yet replicate. Finally, the example reveals how talk about "representations" in descriptive cognitive modeling research through the 1970s confused stored text and diagrams ("symbol structures") with active, always adapted processes, namely perceptual categorization, conceptual categorization of sequences of activation, and non-verbal forms of conceptualization (especially rhythm, gesture, and imagery). The advice for AI research is to investigate how the brain works, especially to explore how "multiple intelligences" (especially non-verbal) are interactively formed and coordinated in everyday behavior.

Why do I create a second window rather than using the first one? Why do I find it so easy to later return to the first window and send the message I first intended, when it may be unlabeled and I have no notes about what I intended to do with it? Why does it feel difficult to hold the first message in mind and reassign the first window to the second message?

The impression I have is that the first window, W1, is assigned; it embodies my intent; it has meaning already. I see W1 as "a message to person 1." Using W1 to send a message to the second person means seeing it in a new way. My feeling is that this disrupts my thoughts. It is much easier and obvious to create a second window.

Using the terminology of Bamberger and Schön (1991), the physical structures visible on the screen, W1 and W2, are reference entities.

To reassign W1 is to see it another way, to reconceive its meaning. But because W1 embodies the process of sending a message to person 1, to see W1 in a different way is to disrupt that process. This is a crucial observation: Seeing materials in a different way may require disrupting an ongoing neural process, an active coordination between what we are seeing and what we are doing, that otherwise has no observable manifestation. Significantly, "doing" has the larger sense of what I am doing during this current electronic mail session. In some sense, I am still sending that message to person 1, even though I have shifted my focus to person 2; the neural aspect of seeing and sending that message is still active. Conventionally, we would say that I am still "intending" to send message 1 using W1.

Instead of disrupting the first process, I hold it aside and start up a second process, embodied by W2. I send the second message and then return to W1. The windows on the screen serve as my external representation of messages I want to send. I don't even need to type in something in the TO: or SUBJECT: fields in W1. I can remember how to see W1. I know what it means.

The following aspects of this coordination are striking:

1. Visible spatial reminders on the screen allow me to coordinate multiple activities. I can interrupt what I am doing, both physically and mentally setting it aside, and pick up the activity later where I left off. I don't disrupt the interaction, I hold it active, keeping it current but making it peripheral.

2. The capability to create multiple processes in the electronic mail program facilitates my mental coordination. Activating the second "Send Mail" process in the mail program parallels and facilitates my allowing a second activity (sending a message to person 2) to take form. Indeed, the idea of setting aside message 1 and beginning message 2 is afforded by the concreteness of W1 now in place, and the "Send Mail" button, allowing a repeated operation to occur. Selecting back and forth between W1 and W2, by buttoning the mouse in each window in turn, feels like shifting my attention back and forth between the activities of sending the two messages. The effortless flow and context shift as windows shift forward and backward parallels my experience in thinking about the messages. The window I am seeing is the activity I am doing.

3. Completing the second message first is a bit odd. The impression is of a stack: I need to get rid of the second message before I can return to the first. The feeling is that the second message is more pressing. If I don't do it now, I will forget it. The idea of sending the second message arose effortlessly from the process of sending the first; but somehow the second message, as an interruption that only came to mind as a second thought, needs to be handled immediately.

Table 1. Alternative ways of sending two messages.

|  | Keep windows | Reassign windows |
| --- | --- | --- |
| Keep order | A) W1 <- msg1; W2 <- msg2 | C) W2 <- msg1; W1 <- msg2 |
| Reorder messages | B) W2 <- msg2; W1 <- msg1 | D) W1 <- msg2; W2 <- msg1 |

"Reassign windows" means to use the second window for the first message.

"Reorder messages" means that one would type the second message first.

For example, in the combination labeled "C" one would keep the order (sending the first message first), but reassign the windows, so the first message is typed into W2; one returns to W1 to send the second message. Furthermore, in the two cases where one uses W2 second (combinations A and D), one could create W2 before or after entering a message into W1. For example, in D one could reassign W1 to the second message, then create a second window for the first message after sending off the second message. This gives six basic combinations. But there are additional possibilities involving typing reminders about the content of the messages:

First, in my experience, it is difficult to reassign W1. The impression is that I would lose the process of sending the first message. There is "no way to remember it," aside from reaching for a pad and making a note. It is difficult to switch contexts, to see W1 as being about (or for) the process of sending a message to person 2. The meaning persists; perceived forms are coordinated with activities. To destroy or reassign perceived objects is to lose the coordination: To lose a way of seeing is to lose an activity.

Second, it is difficult to do message 1 before message 2. Message 2 is active now, after "Send Mail" was selected a second time. I'm doing that now. I don't want to disrupt message 2 now. There would then be two disruptions. I need to keep going forward.

Oddly, it seems easier to remember what I intend to do with W1 than to make the effort of typing in some TO: or SUBJECT: information as a reminder. Typing anything in W1 after already pulling down W2 feels like actually doing the first message--going back to it. Crucially, I immediately pull down a second window by buttoning "Send Mail" at the very moment that I realize that I want to send a message to a second person.

Thus, we have the curious and fortunate balance: It is difficult to reinterpret forms (reference entities) that are part of a sequence of activity ("a chain of thought"). But it is relatively easy to recall how materials were previously perceived and engage in that process again. These possibilities work together here because interrupting the first process is perceived as a "holding aside," not a disruption, by the representation on the screen. The first message activity is held active, not reorganized or ignored; it is visible peripherally on the screen. The activity is set aside like an object in the physical world, and can be picked up again at the click of the mouse.

The effect is like illusions that reorganize the visual field, such as the Necker Cube, the duck-rabbit, old-young woman, etc. (Figure 2). Buttoning one of the windows makes that activity the figure and the other activity part of the ground. Fixing a figure-ground relationship on the screen by bringing W1 or W2 to the fore corresponds to making that activity "what I am doing now" and every other process in which I am engaged peripheral. The figure-ground relation appears to be basic to the idea of focus of attention. Referring to the discrete manner in which we see forms as one thing and can shift back and forth, Arbib (1980, p. 33) suggests that "The inhibition between duck schema and rabbit schema that would seem to underlie our perception of the duck-rabbit is not so much 'wired in' as it is based on the restriction of the low-level features to activate only one of several schemas."

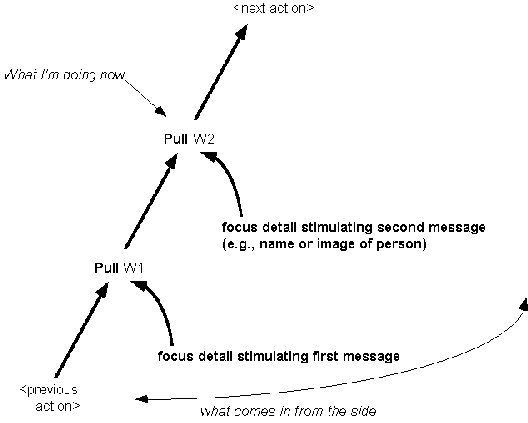

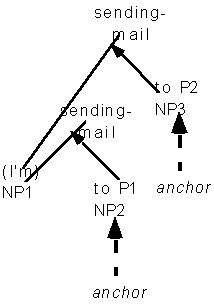

Figure 3 shows the coordination just after I have pulled down W2. Just after pulling down W1 a detail came to the foreground (e.g., the idea of another person or message). The diagram shows this second "focus detail" coming in "from the side." Because of the materials available to me, I didn't experience a sense of disruption (an impasse). For example, without the multiple-process capability of Lafite, I might have been forced to turn aside and write something down about message two on a sheet of paper. Instead, I immediately repeated the current activity of writing a message, pulling down W2. By this coordination, I incorporated the detail; I made it "what I am doing now." The relation is non-symmetric: I can button back and forth to see the windows as different activities, but I resist actually doing the first message activity after I have pulled down W2.

The two activities are parallel and coordinated, as if they are indeed integrated in my understanding. Figure 3 is intended to represent this sequence; in some sense what I am doing now includes sending the first message. Narrowly, my focus is on the second message, but broadly, my coordinated activity includes the first message. Later I relate this process of composition by reactivation to the process of center-embedding in language comprehension.

In terms of the ICP notation, the node labeled "pull W2" represents a neural process that is activated just as the new focus detail comes to my attention. That is, there is a dialectic relation between the idea of sending a second message and the activity of doing so; in my experience, they come into being together. The new composition, represented by the node relating "pull W1" and the focus of the second message, is created by the activity of sending the second message.

In contrast, a serial or parallel architecture would involve a different timing and different kinds of representation: The idea of sending a second message would be described internally by data structures that included some kind of reference to the general description of the procedure of sending a message, plus a reference to the topic of the second message. Reaching to button "Send Mail" would be interpreted as executing this procedure, and Figure 3 would be viewed as a representation of a stack, showing the order in which procedures and "arguments" were "bound" and "invoked." I will expand on these claims after speculating further about the neural processes involved.
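The conventional reading described in this paragraph can be made concrete with a minimal sketch. It assumes a stored procedure named `send_mail` whose invocations are "bound" to a recipient and pushed on a stack; the class and function names are hypothetical illustrations, not part of Lafite or of any published cognitive model.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    """One invocation of a stored procedure with its bound argument."""
    procedure: str  # name of the general procedure description (assumed)
    argument: str   # recipient or topic bound to this invocation


@dataclass
class StackInterpreter:
    """Hypothetical serial interpreter: invocations live on a stack."""
    stack: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def invoke(self, procedure, argument):
        # Binding and invoking are explicit, separate from the action itself.
        self.stack.append(Frame(procedure, argument))
        self.log.append(f"push {procedure}({argument})")

    def complete(self):
        # The most recently invoked procedure must finish first.
        frame = self.stack.pop()
        self.log.append(f"pop {frame.procedure}({frame.argument})")
        return frame


def send_two_messages():
    interp = StackInterpreter()
    interp.invoke("send_mail", "person 1")  # pull down W1
    interp.invoke("send_mail", "person 2")  # interruption: pull down W2
    first_done = interp.complete()          # message 2 is sent first
    second_done = interp.complete()         # then return to message 1
    return interp, [first_done.argument, second_done.argument]
```

In this sketch the description of the procedure and its argument exist as data before anything is done, and the order of completion falls out of the stack discipline. That is the timing and kind of representation the paragraph above sets against the ICP account, in which the idea of the second message and the activity of sending it come into being together.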

Crucially, the production of one neural organization from the previous one is embodied in the timing of neural activations, so the next-next-next sequence is biased to be reconstructed. That is, the activation of a perceptual-motor map will activate the next map in sequence by virtue of having neuronal groups that are physically activated by groups in the previously active map. In the example of sending two messages, a given coordination is repeated, but composed with different perceptual and conceptual maps. This might be the most simple kind of sequencing: Doing something again, but acting on different objects in the world.

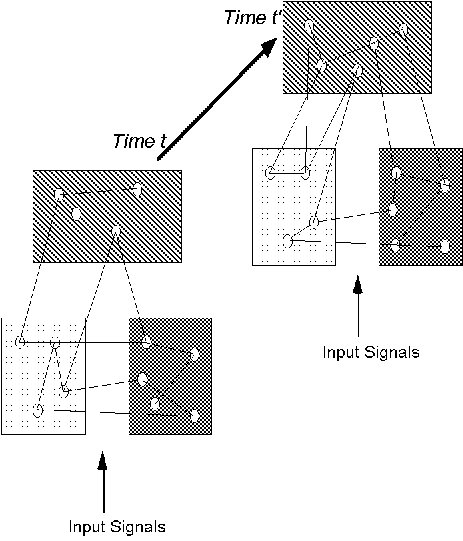

In this analysis, we are going beyond Edelman's focus on multimodal coupling of neural maps in simple categorization to consider how map activation occurs over time in behavior sequences. Figure 4 illustrates how a given set of maps are reactivated at a later time. With similar inputs, there is similar output. The groups and the reentrant links that are activated are always prone to change, because of changes in the external environment and changes in the correlations activated throughout the neural system. Broadly speaking, the theory of neuronal group selection (TNGS) claims that every activation is a generalization of past activation relations. Put another way, every activation is a recategorization, as opposed to a literal match or retrieval operation.

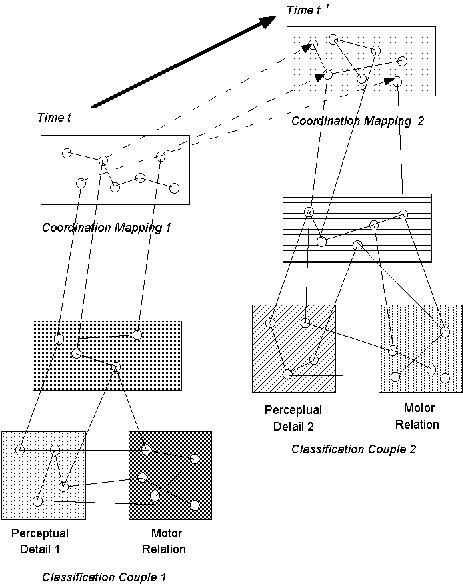

This recategorization is always occurring simultaneously within a larger sensorimotor coordination, involving conceptualization (at the level of maps of types of maps) and, often in people, verbalization. By conjecture, memory for sequence of activation involves one map of maps activating another over time (Figure 5). This corresponds to Bartlett's (1932) notion of a schema as a coordinating process.

In Figure 5, we see different maps of maps linked to different high-order maps. A perceptual detail may be a classification of a sound, a color, an object, a word, etc. The higher-order maps are physically linked by reentrant connections. However, the second higher-order map (labeled time t') only becomes activated after the first map has become active (labeled time t). In this manner, it is conjectured that maps of maps are sequenced over time via physical subsumption. By conjecture, the number of possible connections in the brain is so large that there are sufficient links to establish a link between any sets of maps, as required by activity. Furthermore, all learning is categorization over time. This means that all learning is activation "in place" of pre-existing links, which are composed over time into, and always within, sequences of activation. As Edelman states, there must be some way of holding such activations so they can be compared and further coordinated, allowing for continuity in our experience. I call this capability to hold active and relate multiple maps conceptual coordination. It is a key aspect of higher-order consciousness (Edelman, 1992).

This analysis is driven by two key observations about memory: First, Bartlett describes new conceptions as being manufactured out of previously active coordinations. How ways of seeing, moving, talking, etc. have worked together in the past will bias their future coordination, in the sense that they are physically connected again by the same relations. Second, I am building on Vygotsky's (1934) observation that every coordinated action (or descriptive claim, image, conception) is a generalization of what we are already doing (saying, seeing, categorizing). Putting these together, we tentatively view the ICP notation as showing a composition process over time (corresponding to inclusion of neural maps of the previous active coordination), such that each composing along the way is a generalization, qualified by details that specialize the neural construction, making it particular (focused on a thing) and unique.

Significantly, in this simple example, the new focus detail bears the same relation to "Pulling down" of "Sending a message" as the first focus detail. I am able to see the second focus detail as an instance of "what I am already doing." The process of "sending a message" (involving buttoning and following hand-eye, typing, information-entering processes) is reactivated, but with respect to a different figure (the name or image related to the second person). We can say that the two focus details, the references of the two messages, are correlated by their composition with the conceptualization of sending a message. But holding the two details together, the activity of deliberately relating the two topics (a crucial capability constituting higher-order consciousness), is very different from being in the activity of sending two messages. Grounded in the activity, the details are mutually exclusive. In reasoning about alternative actions they are both active and related as details within an encompassing conceptualization of ordering, correspondence, cause, negation, identity, etc. (the fundamental reasoning processes emphasized by Piaget).

The tree form of the ICP notation (Figure 3) should not suggest that there is a data structure that is being traversed, inspected, or otherwise manipulated by some other process in the brain. Just as in perceptual reorganizations, in which lines get reorganized to form different angles and objects, neural maps get reorganized to form different figure-ground relations to foci and conceptualizations of what I am doing now. When I button back and forth between W1 and W2 I am recoordinating my activity. I conjecture that each buttoning back and forth results in a new composition higher on the path shown in Figure 3. Indeed, the conceptualization of what I'm doing now becomes, "I'm buttoning back and forth on the screen" (a categorization of the sequence). That is, every activity must incorporate what is currently active as either figure or ground. The mechanism is not a process of moving pointers up and down a tree or restoring a previous organization by writing it into a buffer for execution. We are always moving forward, creating a new composition, albeit often a generalization of what we have done before.

Crucially, this example also illustrates that statements like "how the subject is representing the windows to himself" must be distinguished from "how the subject is describing the windows to himself." Indeed, like the frog coordinating his tongue with a passing fly or the blind man using the cane as an extension of his arm, a practiced message sender is perhaps not representing the windows any more than I am representing the location of the mouse on the desk, which I do not see. The message sender is apprehending relations, and no longer seeing forms. The distinction between conscious and subconscious is then reframed as a distinction between figures and peripheral processes.

Contrast my experience in sending two messages with my asking an assistant to finish my work: He is given two identical, blank windows, already open on the screen. He is told to send two messages to different people. Window position has no representational significance, except that he will probably use the front one first. Now suppose I require that he assign the windows in the same manner as I saw them when they were created. Now I must represent these windows for him: "This is the window for Mark's message; here is the one for Wendy." Without a descriptive set of instructions, my assistant can't replicate the activity I was engaged in: He needs to know what the two open windows represent.

But the windows weren't representations to me, they were just part of my activity of sending messages. Commonly, we would say that in sending the two messages I knew what the windows "mean." That is, if asked, I could tell you what I am doing, not that I view the windows as being representations. For me, they are places in which I am composing messages--not representations of messages, but the real thing. I am not perceiving the forms as windows, but as where my typing activity is occurring.

Lewis (1995) gives the following examples of comprehension difficulties1. This sentence is acceptable:

(S1) The dog that the girl scared ran away.

While this sentence, with three embedded noun phrases, is incomprehensible:

(S2) The cat that the bird that the mouse chased scared ran away.

S2 is an example of "double center-embedding." But the pattern is not clear. Other forms of center-embeddings, involving subject relatives and propositions, are acceptable:

(S3) That the food that John ordered tasted good pleased him.

And right-branching appears to be significantly less bounded:

(S4) The mouse chased a bird that scared a cat that ran away.

Lewis concludes, "The basic result is that center embedding is always more difficult than right branching, which can carry the same amount of content, but exhibits no severe limits on depth of embedding."

A related well-known problem, discussed by Berwick (1983), concerns "movement" of noun phrases (NP). Both of the following statements are acceptable:

(S5) I believe that Mary likes Bill.

(S6) Whom do I believe that Mary likes?

But introduction of the embedded NP, "the claim that," makes the sentence unacceptable:

(S7) Whom do I believe the claim that Mary likes?

For Berwick, this suggests a "locality principle," which Lewis (1994, p. 14) states as, "Who cannot be moved across more than one sentence boundary." However, we can understand sentences in which "whom" appears to "move" arbitrarily far:

(S8) Whom does John think that Mary said she saw Tom go to the store with?

Explanations of these observations focus on the limitations of short-term (working) memory, which may be stated in terms of categorical structure ("each relation in working memory indexes at most two nodes" [Lewis, 1994, p. 14]) or activation interference (Church's closure principle, "only the two most recent nodes of the same category may be kept open at any time" [Lewis 1994, p. 24]). Similarly, the evidence may be explained in terms of a limited stack mechanism (items held aside during the parse) or a processing limitation on recursion. Examples of center-embedded subject relatives are interesting because no model currently accounts for why some are unacceptable and others are easy (Lewis, 1996, p. 10).
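The "limited stack" style of explanation mentioned above can be illustrated with a deliberately crude sketch. It assumes each sentence has been hand-tagged as a sequence of events in which a subject is opened and later closed by its verb; the tagging of S1, S2, and S4 below and the function name are assumptions made for illustration, not Lewis's model or NL-Soar.

```python
def max_open_subjects(events):
    """Return the largest number of subjects waiting for a verb at once."""
    open_count = 0
    peak = 0
    for kind, _word in events:
        if kind == "open":      # a subject is held aside, awaiting its verb
            open_count += 1
            peak = max(peak, open_count)
        elif kind == "close":   # a verb completes the most recent subject
            open_count -= 1
    return peak


# Hand-tagged event sequences (an assumption of this sketch).
S1 = [("open", "the dog"), ("open", "the girl"),
      ("close", "scared"), ("close", "ran away")]
S2 = [("open", "the cat"), ("open", "the bird"), ("open", "the mouse"),
      ("close", "chased"), ("close", "scared"), ("close", "ran away")]
S4 = [("open", "the mouse"), ("close", "chased"),
      ("open", "that"), ("close", "scared"),
      ("open", "that"), ("close", "ran away")]

peaks = {name: max_open_subjects(seq)
         for name, seq in [("S1", S1), ("S2", S2), ("S4", S4)]}
```

Under this tagging the right-branching S4 never holds more than one subject open, S1 holds two, and the doubly center-embedded S2 holds three, which exceeds a limit of two open nodes of the same category. A counter of this kind says nothing about why some center-embedded subject relatives remain easy.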

We can use the ICP notation to distinguish the various cases and to reformulate the limitations in terms of neural processes2. For example, S9 was predicted by NL-SOAR as unacceptable, but is comprehensible:

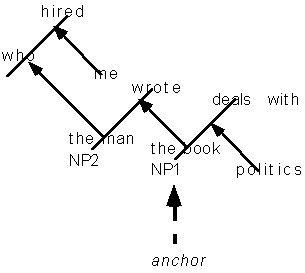

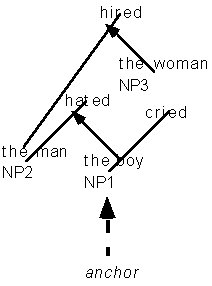

(S9) "The book that the man who hired me wrote deals with politics."

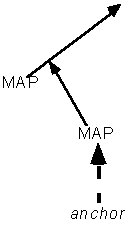

In applying the ICP notation for representing the process of sentence comprehension, I introduce the notion of an "anchor." The anchor is always the first NP or relative pronoun (whom, that) in the utterance. In S9, the first NP categorization ("the book") is held active as an anchor. Throughout, we find the pattern that the NP categorization can be "reused" in comprehending the utterance, as long as the anchor becomes incorporated in the subsequent composition ("the man who hired me wrote"). Then comprehension may continue from the anchor ("deals with politics"). An anchor holds active a categorization mapping (so-called short-term or working memory), so effectively the syntactic categorization (NP) may be reactivated ("reused") in the same sentence construction. I call this "the rule of anchored-composition."

I stress that an anchor is not stored in a separate "buffer" off to the side (a special data structure), but is a categorizing process that is held active so it may be incorporated later in another composition. In Figure 3 an anchor is shown as a "detail" on the right side. Representations of grammatical structure necessarily involve several layers of ICP "backbones" (corresponding to subject-verb relations), each with their own details in "lower" backbones. An anchor is therefore a special "detail" denoted by an arrow and a label3. Besides center embedding, S9 illustrates a second pattern that occurs for all center-embedding examples and illustrates another restriction on multiple activation within a single construction. A semantic categorization (e.g., "the book," "the man") may play two roles, but the roles must be different. For example, "the book" is the object of "wrote" and the subject of "deals with." This rule explains why some subject relatives with double NPs are acceptable and others are not.

2. Except where noted, the following examples come from Lewis (1996). I have replaced "who" by "whom" throughout these examples, and made other changes, such as using "believe" instead of "think" for consistency.

3. To make the diagrams easier to read, I leave out the arrows on the backbone, using them only to link details (e.g., NP2 in S9) to phrases. The backbone still designates a sequence (e.g., from subject to verb), which is fundamental in ICP diagrams. But by use of an anchor, center-embedding is possible: Intervening words may appear that are represented on other levels before the backbone the anchor begins is completed (as illustrated by S9). In particular, note "that" in "that the man" signifies a higher, subsuming composition, as does "who." More conventionally, these words are shown as modifiers of a NP, hanging below it in a parse tree, rather than being "higher."

If a semantic categorization plays the same role twice or more, the utterance will be unacceptable. Put another way: The comprehension construction process can categorize a categorization multiple ways, but not in the same way (e.g., as subject) in two different ways. In some sense, this is illogical. You can't say that X is a subject in way 1 and also in way 2. If X is a subject in the utterance, it must be a particular, single way.

For example, S10 is incomprehensible because an NP categorization (the man) plays the same role twice in a given sentence. To do this, it must be active in two ways at the same time.

(S10) The boy that the man that hired the woman yesterday hated cried.

S10 requires "the man" to play the role of subject in two ways simultaneously: "The man hired" and "The man hated." It can be shown as a composition of concurrent sequences (Figure 7).

This construction is invalid because a given node "the man" cannot be the head of two ICP sequences at the same time. More generally, a given semantic categorization can only appear once in a given ICP composition. To be included in two different ways (playing two different roles), "the man" would have to be subsumed within a higher composition (exemplified by the double role "the boy" plays in S10' or that "the book" plays in S9). "The man" plays the role of subject twice in S10, but plays two different roles in S9 (subject of "wrote" and qualifier of "who," which is the subject of the phrase "who hired").
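The role restriction stated here can be sketched as a simple check. The sketch assumes the role assignments for S9 and S10 are supplied by hand, following the analysis given in the text; the function name and the data layout are hypothetical, and the check describes only the stated constraint, not the neural mechanism the ICP diagrams are meant to depict.

```python
from collections import Counter


def repeated_roles(assignments):
    """Map each semantic categorization to the roles it plays more than once."""
    violations = {}
    for categorization, roles in assignments.items():
        counts = Counter(role for role, _relation in roles)
        repeated = sorted(role for role, n in counts.items() if n > 1)
        if repeated:
            violations[categorization] = repeated
    return violations


# Role assignments as stated in the text: (role, what it is a role of).
S9 = {
    "the book": [("object", "wrote"), ("subject", "deals with")],
    "the man": [("subject", "wrote"), ("qualifier", "who")],
}
S10 = {
    "the man": [("subject", "hired"), ("subject", "hated")],
}

s9_violations = repeated_roles(S9)    # no categorization repeats a role
s10_violations = repeated_roles(S10)  # "the man" is a subject twice
```

On these hand-entered assignments S9 passes because each categorization plays two different roles, while S10 fails because "the man" would have to be a subject in two ways at once.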

According to Lewis, NL-Soar wrongly predicts that S10 is acceptable unless the parsing strategy were changed so "specifiers trigger creation of their heads." Furthermore, the use of an "anchor" is equivalent to the claim that the comprehension process is not strictly bottom up. I conclude that "self-embedding of NPs" is not the critical difference in comprehensibility. Rather, the issue is reuse of an open syntactic category (multiple NPs) or multiple, conflicting syntactic categorization of a semantic category.

More specifically, I claim that an ICP diagram of a sentence comprehension process represents the composed neural structure that is physically activated, categorized, sequenced, and composed during the comprehension or generation process. These diagrams describe mental representations, which are physical constructions.

The constraint on comprehension is a physical limitation related to composition of physical structures. You couldn't have a ball at the top of a pyramid while it is also at the top of a cube. A ball can only be sitting on top of one structure. Of course, structures can be composed (e.g., a ball on top of a pyramid on top of a cube). This "recursiveness of mention" is accomplished in language by virtue of words like "that" and "who." These words (strictly speaking, their conceptual categorizations) are actually playing the other subject or object roles. The relation between "who" and "the man" is one of the roles that "the man" plays, as qualifier of another NP. This relation is a syntactic role categorization of a semantic categorization.

Now, to bring the linguistic data to bear on the message-sending example: The coordination of sending two messages involves two anchors, which we don't find in human language comprehension. The coordination is possible because the anchors are coupled to physical artifacts--reference entities; so two actively held neural processes are not required. This might be diagrammed as Figure 8.

As Figure 3 suggests, one might also diagram this as a single construction, "I'm sending-mail to P," which has activated twice in succession (so "sending-mail" would be collapsed to appear as one node). The anchors here are not just active neural processes, as in the case of the linguistic examples we will consider here, but perceptual categorizations coordinated with distinct windows in the visual field.

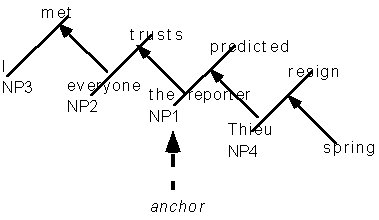

(S11) The reporter everyone I met trusts had predicted that Thieu would resign in the Spring.

Extended analysis with many more examples and sentential forms can be found in (Clancey, in preparation). I will proceed in this abridged form to discuss the general patterns and their explanation in neural terms.

Rather than saying that human memory is able "to keep in mind only two or three similar chunks at the same time," the sentence comprehension data can be more parsimoniously explained in terms of reusing (reactivating and relating) categorizations within a new composition. The number is two (period) because the first use is held and the others are composed as details in a construct by a process that allows the categorization to be reactivated. Effectively, the composition process encapsulates the categorization by relating (mapping) it to another categorization. Thus the physical construction is composed of such relational categorizations, allowing the "types" to be "used" multiple times. Furthermore, constructing a semantic structure requires that semantic categorizations be incorporated uniquely with respect to syntactic relations. That is, a given semantic category (word or phrase in surface structure) has only one relation to a given syntactic category/role. For example, a given noun can be a subject only once in the sentence. This is a complex way of saying what ICP diagrams show more simply.
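The uniqueness constraint can be illustrated with a small sketch. It is only a toy bookkeeping model, not the ICP notation or a parser: the function name `first_conflict` and the role labels are hypothetical, and the two role lists merely paraphrase the roles that "the man" is said to play in S9 and S10.

```python
def first_conflict(relations):
    """Return the first (semantic category, syntactic role) pair that is
    used a second time, or None if every pair is unique."""
    seen = set()
    for category, role in relations:
        if (category, role) in seen:
            return (category, role)
        seen.add((category, role))
    return None


# Hypothetical role assignments, paraphrasing the discussion of S9 and S10.
s9_like = [("the man", "subject"), ("the man", "qualifier")]   # two different roles
s10_like = [("the man", "subject"), ("the man", "subject")]    # same role twice

print(first_conflict(s9_like))    # no pair is repeated
print(first_conflict(s10_like))   # the repeated pair is reported
```

Under these assumptions the S9-like list passes because the same semantic category appears in two different roles, while the S10-like list is rejected because one category is related to the same role twice.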

In ICP notation, center-embedding has a simple structure (Figure 10):

In ICP notation, center-embedded clauses compose upward, from right to left (Figure 11).

In center-embedding, NPs are encountered first, followed by verbs, which then "pop" the stack from left to right (evident in the above examples). That is, the serial order of production for center-embedding is up through head nodes (NPs) and back down through tails and qualifiers (with an optional right-branching phrase appended on the end).

Right-branching sentences (e.g., S4) move forward to produce an identical compositional structure, but encounter the NPs in sequential, left to right order. That is, the traversal completely constructs each ICP diagonal before moving to another sequence (Figure 12).

The resultant structure of Figures 10 and 12 is identical. In both cases the higher sequence incorporating a detail is completed before the detail is used in a second role as the sentence goes forward. The difference is that in embedded clauses (Figure 10) the detail is encountered first and then embedded in a higher context. In the purely sequential case, the NPs appear in order from higher scope to lower (Figure 12).
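The contrast between the two traversal orders can be sketched in conventional stack terms, the same descriptive vocabulary in which verbs are said to "pop" the stack. This is an assumption-laden toy: the token labels (`NP1`, `V1`, and so on) and the function `pair_subjects_with_verbs` are hypothetical, only subject-verb pairing is tracked, and the stack is a descriptive device rather than a claim about the neural mechanism.

```python
def pair_subjects_with_verbs(tokens):
    """Pair each verb with the most recently opened, still unpaired NP.

    Returns the list of (NP, verb) pairs and the largest number of NPs
    that had to be held open at once.
    """
    open_nps = []      # NPs still waiting for their verb (last in, first out)
    pairs = []
    max_open = 0
    for kind, label in tokens:
        if kind == "NP":
            open_nps.append(label)
            max_open = max(max_open, len(open_nps))
        elif kind == "V":
            pairs.append((open_nps.pop(), label))
    return pairs, max_open


# Center-embedded order: the NPs come first, then the verbs unwind them.
center_embedded = [("NP", "NP1"), ("NP", "NP2"), ("V", "V2"), ("V", "V1")]
# Right-branching order: each NP is completed by its verb before the next NP.
right_branching = [("NP", "NP2"), ("V", "V2"), ("NP", "NP1"), ("V", "V1")]

ce_pairs, ce_open = pair_subjects_with_verbs(center_embedded)
rb_pairs, rb_open = pair_subjects_with_verbs(right_branching)
print(sorted(ce_pairs) == sorted(rb_pairs))  # same resulting pairings
print(ce_open, rb_open)                      # NPs held open at once
```

In this sketch both orders produce the same pairings, which loosely mirrors the identical resultant structure, while the center-embedded order holds more NPs open at once than the right-branching order.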

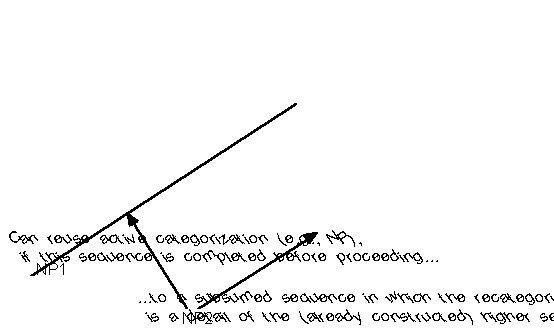

The sequence doesn't exist as a data structure but as activation in time. Anchoring is a means of holding a categorization active; this is apparently done by categorization of reference (see Clancey, 1997b, Chapters 12-13). Put another way, a given categorization is only active once at a given time. The apparent "keeping a second NP open" is really two processes:

1) attention holding onto the first activation so it can be composed in a second role. Thus, it is the ability to hold an anchor that allows composing propositions.

2) "recursive" reuse of a previously open categorization, such that the second use of the categorization is a detail in the first (Figure 12) or the second creates a sequence that contains the first (Figure 10).

In terms of neural maps, the simplest case is a syntactic map subsumed by a sequence that includes itself (Figure 13).

Such a map activates the sequence in two ways, first "bottom up" as a detail included in the sequence and second "head triggering" as the first element. The examples illustrate this pattern for both NPs and sentences. However, it is not necessary for the repeated activation to follow immediately (e.g., in S9 "the book the man"). It is easy to find acceptable reorderings:

(S11') The reporter everyone the editor met trusts had predicted that Thieu would resign in the spring.

By showing how syntactic structure and the composition process interact, my analysis teases apart multiple methods by which categorizations may be reused. This reveals that some examples may be explained by multiple mechanisms. For example, S5, "Mary, I believe, likes Bill," is acceptable because Mary and "I" are different kinds of NPs (the anchoring of "Mary" is not the necessary factor). But "Mary John believes likes Bill" is acceptable only because the first NP is anchored and so can be reused. Perhaps unexpectedly, S8 is acceptable despite having two NPs that are not anchored (Tom and Mary) because the embedded proposition is right-branching ("Mary said she saw Tom go").

Rather than saying "nodes are competing for the same structural relation" (Lewis, 1996, p. 13) I would say that a categorization (the relation) can only be active in one "instantiation" at a time. It can be reactivated, but cannot be held as two activations simultaneously. This makes sense because a global map is a "particular" neural structure. It can only be active or not. It cannot be copied and used in two different ways (e.g., to relate or bind different details) at the same time.
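The claim that a categorization can be reactivated but not held as two simultaneous activations can be caricatured as a single on/off state with no copies. The class below is a hypothetical illustration only (the names `Categorization`, `activate`, and `release` are assumptions, not part of any existing model), and it says nothing about how activation is actually realized neurally.

```python
class Categorization:
    """A categorization that is either active or not; it is never copied."""

    def __init__(self, name):
        self.name = name
        self.active = False
        self.activation_count = 0   # how many times it has been (re)activated

    def activate(self):
        # A second simultaneous activation is not possible: there is
        # only one structure, and it is already in use.
        if self.active:
            raise RuntimeError(f"{self.name} is already active")
        self.active = True
        self.activation_count += 1

    def release(self):
        self.active = False


subject_np = Categorization("subject-NP")
subject_np.activate()               # first use is held
try:
    subject_np.activate()           # attempt to hold a second activation
    held_twice = True
except RuntimeError:
    held_twice = False
subject_np.release()
subject_np.activate()               # reactivation after release is allowed
```

The design choice here is deliberate: there is no way to instantiate a second copy of the same categorization bound to different details, so reuse can only happen in sequence.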

The basic idea that a neural structure is either active or not, and is not copied and reused arbitrarily, might underlie the limitations that Lewis labeled "interference." In fact, we can view "interference" as a placeholder, a term prevalent in descriptive cognitive models, which can be fleshed out by a theory of the neural processes that give rise to the effects. This illustrates the value of working at the descriptive (a.k.a. "symbolic") level and then moving to a theory of neural implementation.

Basically, the idea of not copying falls out from the basic constraints of a biological system based on activation: All the brain can do is activate (i.e., categorize), sequence, and compose. One interesting way around copying is simultaneous categorization in multiple modalities. For example, you can clap your hands, while you sing a song. You can visualize a relation you have described, etc. The visualization-body conception-verbalization triad provides an important way to hold, project, and coordinate multiple temporal and spatial relations. This gives a new twist to the idea of "analogical" representation.

What is meant by "recursion" and "reuse" in this theory? "'Recursive' reuse" means that a syntactic categorization may be incorporated in multiple sequences within a hierarchical construction. In computational terms, the syntactic categorization may be "reused." In a conventional parse tree, this means that a given node has multiple relation-links, but each link from a given node must be different. The compositional process is "recursive"; it builds a higher (subsuming) level or delves back down to subsumed sequences to supply the verbs, etc. Anchoring allows a single-level stack for NP and sentence categorizations. Composing is a sequential process and may itself be conceptually coordinated (i.e., syntactic composition is learned, though it rests directly on neural processes of composition and sequencing).

Would it be possible for maps to represent multiple categorizations of the same type, in some kind of graded manner? If by "represent" we mean "instantiate," then yes, a subject categorization for example may occur several times in a single construction. What is not allowed is for the semantic categories to play a single syntactic role in different ways. Here "represent" means that a category (such as "man") cannot be categorized as a subject in different ways.

The capability to hold categories active and relate them (and the related representational capability often called "mental models") is the basis of procedural learning, but it has been taken for granted in most descriptive cognitive models. Our modeling languages have taken for granted the qualification, sequencing, substitution, composition, use of variables, etc. that distinguish human conceptualization from that of other animals. NL-Soar research is starting to discover that there are strict limits and that a model can't presuppose the power of arbitrary programming languages.

To summarize, the ICP notation is useful for comparing grammatical models to the flexibility we claim is actually present in human conceptualization (Figure 14). The primitive components of computational models--ordered steps (a sequence of operators in a problem space), variable bindings, conditional statements, and subgoaling--can be contrasted with classification, sequencing, and composition of neural maps. Furthermore, constructing coordinated sequences multidimensionally (from existing sequences, corresponding to grammatical orders) would be like remembering stacks (a sequence of procedural calls and variable bindings). In procedural terms, an ordering of steps and binding constraints could be reestablished without running the procedures themselves; this is what Soar's chunking provides. In terms of the ICP notation, a sequence of perceptual classifications (a next-next-next path) could be reestablished without recalling the specific actions that led to these perceptions in the past.

The ICP notation helps reveal what Soar assigns to the "cognitive architecture," but doesn't explain. Specifically, we need the ICP account to explain how an operator hierarchy can be constructed at all. How are operators--situation-action coordinations occurring at different points in time--held active and related? Edelman (1992) suggests that such capabilities are not found in the more primitive, "primary-consciousness" of cats or birds.

This kind of analysis has fascinating implications for how a computer might be able to perform better than people (at least people who don't have computers to use!). That is, the analysis here suggests that our consciousness (mental representation creation capability) is limited in ways that are not logically required and that are easy to avoid in computer architectures. The flip side is that we don't appreciate fully what the brain's multimodal coordination architecture can do and haven't replicated it yet. So we have improvements in hand (computer tools) whose power we don't understand, built on a (programmed) architecture with limitations relative to the brain that we don't understand.

My hypothesis is that the interference effect and limits on short-term memory result from the mechanism by which conceptualizations activate and compose to form new constructions. Abstract depictions of these processes (the ICP diagrams) suggest that an extremely simple element-modifier relation and subsumption process explains the limits observed. The idea of "similarity-based interference" may thus be reformulated in terms of how a syntactic categorization can be reused or a semantic categorization related. These are two sides of the same coin, but reveal how the composition is syntactic: Syntactic roles (such as operators or grammatical categories) appear multiple times, but semantic categorizations (such as the meaning of a window or the role of a given NP) appear only once in a given behavior sequence.

When linguistic observations are reformulated by the ICP notation we find the kind of mechanism that Chomsky (1981) has argued for--a physiological process that structures conceptual content. The limitations on reuse show that procedural languages take for granted what neural processing cannot do directly (unlimited stack) or in other respects does with amazing facility (grasping compositional structure). However, the ICP notation is still a gross abstraction of an unknown activation and coordination mechanism.

My interest here has been architectural, that is, to describe the process mechanisms by which behavior is sequenced and composed. Following Bartlett's lead, I begin by assuming that learning and memory should be viewed as the dynamic construction of coordinated sequences from previous coordinations. That is, physical structures are reactivated and reused in accord with how they categorically and temporally worked together in the past. With further examples from neuropsychology (Clancey, in preparation), one can generalize the notion of coordination to multiple sensory systems and, especially in humans, multiple modes of conceiving. Fitting the original hypothesis that knowledge is not a body of text, this perspective emphasizes that verbalizations are not isomorphic to conceptualizations, and that scenes, rhythms, and melodies are other ways of organizing behavior. Hence, "gestalt" understanding, which appeared to our text-based mentality to be a pre-scientific concept like "intuition," can now be understood as a non-verbal form of conceptualization.

At the first order, I now make no distinction between "memory," "learning" and "coordinating." The control regime of descriptive cognitive models, such as strategy rules, is replaced by temporal-sequential conceptualizing: reactivating, generalizing, and recoordinating behavior sequences (whether occurring as outward movement or private imagination). In this respect, "knowledge" is constructed as processes "in-line" and integrated with sensorimotor circuits. Cognition is situated because perception, conception, and action are physically coupled. By this reformulation, cognition is not only physically and temporally situated ("knowledge is in the environment"), but conceptually situated as a tacit understanding of "what I am doing now." In people, this conceptualization is pervasively social in content; and hence we see that the proponents of situated action (Clancey, 1993) are making psychological claims about both the learning mechanism and the content of knowledge.

In accepting the broad patterns that the schema theories of cognitive psychology describe, we are able to go beyond other models as well, such as Lashley's serial organization and Edelman's perceptual categorization. Nevertheless, for the purpose of psychological theory (though perhaps not pedagogical design) we reject the idea of a "body" of knowledge, a store that is indexed, compared, instantiated, and applied. Putting such descriptions aside, we ask: What inherently developmental process or architecture could strategy rules and scripts be describing?

This exploration presents a new research program and the methods of analysis point to new synthetic approaches. The Interactive Coordination Processes notation is a means for inventing new learning mechanisms. The rules of a descriptive cognitive model are more convenient for classifying behavior, but the ICP notation is better for understanding development of sequences. By eliminating the mediating role of descriptions from the mechanism, we can show intuitively how "proceduralization" is not a secondary compilation step, but a constructive process that began in evolution as sensorimotor coordination and became the process we call conceptualizing, a means of holding active, ordering, and composing behavior sequences. The ICP notation, although valuable for coarsely diagramming the phenomenology of cognition, imperfectly describes multimodal coordinations and must be assumed to misconstrue the spatial and temporal properties of the neural mechanism in perhaps important ways.

The result is a sketch of an architecture for "process memory," based on notions of coupling, activation sequencing, composing, figure-ground shifting--the neurological origins of the transformations and organizing principles studied by Lashley, Piaget, Chomsky, and Bateson. A more complete analysis (Clancey, in preparation) relates the verbal and visual to temporal-sequential, manipulo-spatial, and scene conceptualization. In analyses of dysfunctions and dreaming, we discover further evidence for specialized brain "modules" for conceptual organizing--hypothesizing that these are always mutually configured, neither parallel nor serially related in conventional terms, and have no existence as structures or capabilities apart from some integrated coordination in time with other processes.

References

Arbib, M. (1980). Visuomotor Coordination: From Neural nets to Schema theory. Cognition and Brain Theory 4(1) 23-39.

Bamberger, J. and Schön, D. A. (1991). Learning as reflective conversation with materials. In F. Steier (Editor), Research and Reflectivity. London: Sage Publications.

Bartlett, F. C. (1932/1977). Remembering: A Study in Experimental and Social Psychology. Cambridge: Cambridge University Press. Reprint.

Bartlett, F. C. (1958). Thinking: An Experimental and Social Study. New York: Basic Books.

Berwick, R.C. (1983). Transformational grammar and artificial intelligence: A contemporary view. Cognition and Brain Theory, 6(4) 383-416.

Bickhard, M. H. and Terveen, L. (1995). The Impasse of Artificial Intelligence and Cognitive Science. Elsevier Publishers.

Chomsky, N. (1981). A naturalistic approach to language and cognition. Cognition and Brain Theory, 4(1): 3-22.

Clancey, W. J. (1993). Situated action: A neuropsychological interpretation (Response to Vera and Simon). Cognitive Science, 17(1), 87-107.

Clancey, W.J. (1997a). Conceptual coordination: Abstraction without description. International Journal of Educational Research. Volume ???, Number ???, Pages ???.

Clancey, W.J. (1997b). Situated Cognition: On Human Knowledge and Computer Representations. New York: Cambridge University Press.

Clancey, W.J. (in preparation). Conceptual Coordination: How the Mind Organizes Experience in Time.

Edelman, G. (1992). Bright Air, Brilliant Fire: On the Matter of the Mind. New York: Basic Books.

Elman, J. L. (1989). Structured representation and connectionist models. Proceedings of the Annual Conference of the Cognitive Science Society, Lawrence Erlbaum Publishers, pp. 17-23.

Freeman, W. J. (1991). The Physiology of Perception. Scientific American (February), 78-85.

Gardner, H. (1985). Frames of Mind: The Theory of Multiple Intelligences. New York: Basic Books.

Lewis, R. (1994). A theory of the computation architecture of sentence comprehension. Unpublished manuscript, dated 9/15/94.

Lewis, R. (1995). A theory of grammatical but unacceptable findings. Unpublished manuscript, dated 2/23/95.

Lewis, R. (1996). Interference in short-term memory: The magical number two (or three) in sentence processing. Journal of Psycholinguistic Research, 25(1), pp. 93-115. (original dated August 11, 1995)

Merzenich, M., Kaas, J., Wall, J., Nelson, R., Sur, M., and Felleman, D. (1983). Topographic Reorganization of Somatosensory Cortical Area 3B and 1 in Adult Monkeys Following Restricted Deafferentation. Neuroscience, 8(1), 33-55.

Newell, A. (1982). The Knowledge Level. Artificial Intelligence, 18(1), 87-127.

Rosenfield, I. (1992). The Strange, Familiar, and Forgotten. New York: Vintage Books.

Sacks, O. (1987). The Man Who Mistook His Wife for a Hat. New York: Harper & Row.

Schön, D. A. (1979). Generative metaphor: A perspective on problem-setting in social policy. In A. Ortony (Editor), Metaphor and Thought. Cambridge: Cambridge University Press, pp. 254-83.

Servan-Schreiber, D., Cleeremans, A., and McClelland, J. L. (1991). Graded State Machines: The representation of temporal contingencies in simple recurrent networks. Machine Learning 7(2/3), Sept '91, 161-193.

Smolensky, P. (1988). On the proper treatment of connectionism. The Behavioral and Brain Sciences, 11, 1-23.

Steels, L. and Brooks, R. (Eds.). (1995). The "Artificial Life" Route to "Artificial Intelligence": Building Situated Embodied Agents. Hillsdale, NJ: Lawrence Erlbaum Associates.

Vygotsky, L. ([1934] 1986). Thought and Language. Cambridge, MA: The MIT Press.