IN DEFENSE OF EXPERIMENTAL DATA IN A RELATIVISTIC MILIEU

SIU L. CHOW

Department of Psychology, University of Regina, Regina, Saskatchewan, Canada S4S 0A2

Abstract: The objectivity and utility of experimental data as evidential support for knowledge-claims may be found suspect when it is shown that (a) the interpretation of experimental data is inevitably complicated by social factors like experimenter effects, subject effects and demand characteristics, (b) social factors which affect experimental data are themselves sensitive to societal conventions or cultural values, (c) all observations (including experimental observations) are necessarily theory-dependent, and (d) experimental data have limited generality because they are collected in artificial settings. These critiques of experimental data are answered by showing that (i) not all empirical studies are experiments, (ii) experimental methodology is developed to exclude alternate interpretations of data (including explanations in terms of social influences), (iii) theoretical disputes and their settlement take place in the context of a particular frame of reference, and (iv) objectivity can be achieved with observations neutral to the to-be-corroborated theory despite theory-dependent observations if distinctions are made (a) between prior observation and evidential observation and (b) between a to-be-corroborated theory and the theory underlying the identity of evidential response.

Two recent publications are eloquent accounts of why we may be dissatisfied with the experimental approach to psychological research. The influences of social factors on psychological research are emphasized in both critiques of experimental studies in psychology. While Danziger (1990) is more concerned with the influences of various professional, institutional, societal and political constraints on psychologists' investigative practice, Gergen (1991) emphasizes that psychologists' appeal to experimental data is inevitably circular because all observations are theory-dependent. They also find psychologists' appeal to empirical data, as well as their reliance on statistics, wanting because psychologists seem to pursue these technical niceties at the expense of conceptual sophistication or social relevance. These critics of experimental data seem to suggest that psychologists collect data in artificial settings because of their servitude to methodology. Moreover, in using statistics, psychologists overlook the importance of individual or group differences. Psychologists' knowledge-claims are thereby necessarily suspect.

To Danziger (1990) and Gergen (1991), what psychologists have acquired through their research efforts are various knowledge-claims constructed in specific social contexts. The meanings, significance or usefulness of these knowledge-claims cannot be properly appreciated unless the social context in which they are constructed is taken into account. In short, it is inevitable that psychological knowledge-claims are not true in an absolute sense. They are assertions relative to a specific theoretical perspective in the context of a particular social milieu. Hence, psychological knowledge-claims cannot be assessed in objective, quantitative terms. This assessment of experimental psychologists' current investigative practice will be called relativistic critique in subsequent discussion. Critics of psychologists' investigative practice from the relativistic critique perspective will be called relativistic critics.

It will be shown that much of what is said about properly collected experimental data in the relativistic critique is debatable. The putative influences of social factors on research outcome iterated in the relativistic critique argument are neither as insidiously pervasive nor as validly established as they are said to be because (a) not all empirical research is experimental research, and (b) the logic of experimental research renders it possible to exclude the various social factors as artifacts. How non-intellectual factors may affect research practice (e.g., the practical importance of the research result) is irrelevant to assessing the validity of knowledge-claims if a distinction is made between the rules of a game and how the game is played. A case can be made for objectivity despite theory-dependent observations if certain distinctions are made. Moreover, unambiguous theory-corroboration data can only be obtained in artificial settings.

The present discussion of the relativistic critique begins with a description of an experiment so as to provide a helpful frame of reference. Also described is the affinity of the experimental design with the formal structure of Mill's (1973) inductive methods. The meanings of validity and control are explicated with reference to the experimental design so as to provide a rejoinder to the "methodolatry" characterization of experimentation. A case is made for ecological invalidity. The objectivity and non-circular nature of experimental data are illustrated with reference to a few meta-theoretical distinctions. The putative effects of the social context on experimental evidence are assessed by revisiting the social psychology of the psychological experiment, or SPOPE, for short.

THE LOGIC OF EMPIRICAL JUSTIFICATION

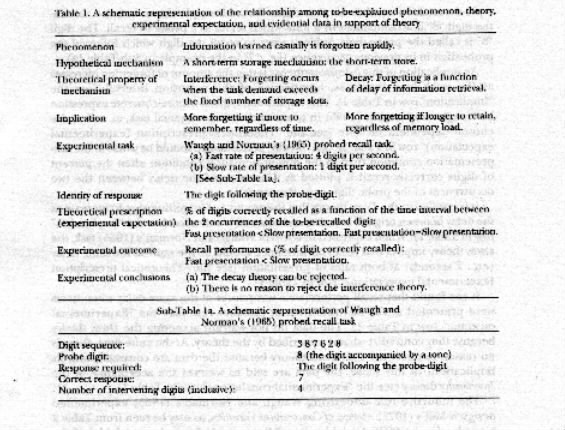

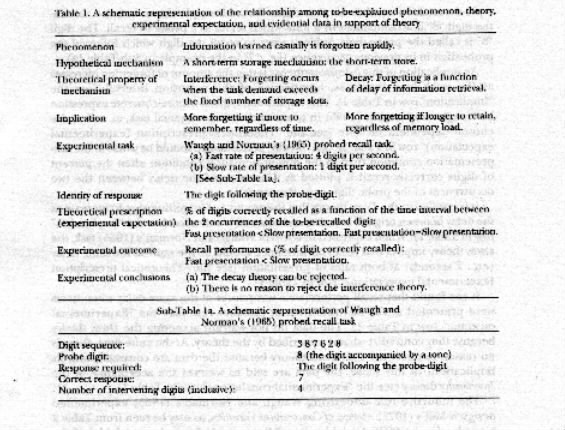

Consider the following commonplace experience. Suppose that you are introduced to a group of people at a party. By the time you meet the sixth individual, you most probably have forgotten the name of the first person to whom you were introduced. This phenomenon is to be explained in terms of a storage mechanism, called the short-term store (Baddeley, 1990), which retains information for only a few seconds (see the "Phenomenon" and "Hypothetical mechanism" rows in Table 1).

How information is lost from the short-term store depends on the theoretical properties attributed to the hypothetical memory store in question. Two theoretical possibilities readily suggest themselves (see the two entries in the "Theoretical property" row in Table 1). First, there is a finite number of storage slots in the short-term store. Old information is displaced when incoming information exceeds the storage capacity of the short-term store (i.e., the "Interference" theory). The second explanation is that it takes time to get introduced to several people. Information in the short-term store simply dissipates if not processed further, in much the same way alcohol evaporates if left in an uncovered beaker. Whatever is retained about the name of the first person would have decayed by the time you come to the sixth person (viz., the "Decay" theory).

Cognitive psychologists' modus operandi is first to work out what else should be true if the to-be-corroborated theory is true (viz., the implications of the theory). Acceptance of the theory is to be assessed in terms of its implications. This is done one implication at a time, a process called "converging operations" (Garner, Hake & Eriksen, 1956). Suppose that a certain implication is used to test the theory in the study. The specific theoretical prescription is made explicit by expressing the to-be-tested implication in terms of the chosen experimental task, independent variable and dependent variable. This abstract description of the theory-corroboration process may be explicated as follows.

Waugh and Norman (1965) used a probed-recall task to choose between the Interference and Decay theories. On any trial, subjects were presented auditorily with a sequence of decimal digits at either a fast rate (viz., 4 digits per second) or a slow rate (i.e., 1 digit per second). A digit might be repeated after a predetermined number of intervening digits. In the example shown in Sub-Table 1a, the digit "8" is accompanied by a tone which serves as a probe for recall. The digit "8" is called the probe-digit. Subjects were to recall the digit which followed the probe-digit in its previous occurrence (i.e., "7" in the example in Sub-Table 1a).
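The structure of a probed-recall trial may be made concrete with a minimal sketch. The digit sequence below is hypothetical (it is not the sequence in Sub-Table 1a; it merely shares the probe-digit "8" and the correct response "7"), the function names are illustrative, and the timing calculation assumes that digits are presented at evenly spaced onsets.

```python
def correct_response(digits):
    """Return the digit that followed the probe-digit at its earlier occurrence.

    The last digit of the sequence is taken to be the probe-digit (the one
    accompanied by the tone). Raises ValueError if the probe-digit does not
    occur earlier in the sequence.
    """
    probe = digits[-1]
    earlier_position = digits[:-1].index(probe)  # earlier occurrence of the probe-digit
    return digits[earlier_position + 1]


def seconds_between_occurrences(digits, digits_per_second):
    """Time elapsed between the two occurrences of the probe-digit.

    Assumes evenly spaced digit onsets (an assumption of this sketch).
    """
    probe_position = len(digits) - 1
    earlier_position = digits[:-1].index(digits[-1])
    return (probe_position - earlier_position) / digits_per_second


# Hypothetical trial: the final "8" is the probe-digit.
trial = [3, 8, 7, 1, 4, 8]
print(correct_response(trial))                 # digit to be recalled
print(seconds_between_occurrences(trial, 4))   # fast rate
print(seconds_between_occurrences(trial, 1))   # slow rate
```

The sketch shows why the same number of intervening digits corresponds to different delays at the two presentation rates, which is what allows the two theories to be pitted against each other.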

An implication of the Interference theory is that the amount of forgetting increases as the work load increases, irrespective of the retention interval (see the "Implication" row in Table 1). This implication is given a more concrete expression by Waugh and Norman (1965) in terms of their experimental task, as well as the chosen dependent variable (see the "Theoretical prescription (experimental expectation)" row in Table 1). Specifically, performance should be worse in the fast-presentation condition than in the slow-presentation condition when the percent of digits correctly recalled is plotted as a function of the delay between the two occurrences of the probe digit (e.g., 2 seconds).

It is implied in the Decay theory that the amount of forgetting should increase as the delay between original learning and retrieval is extended (see the "Implication" row in Table 1). When expressed in terms of Waugh and Norman's (1965) task, the Decay theory implies that recall performance should be the same at the same delay (e.g., 2 seconds) at both rates of presentation (see the "Theoretical prescription (experimental expectation)" row in Table 1).

It was found that recall performance was poorer at the same delay when items were presented at the fast rate than at the slow rate (see the "Experimental outcome" row in Table 1). The data do not warrant accepting the Decay theory because they contradict what is prescribed by the theory. At the same time, there is no reason to reject the Interference theory because the data are consistent with its implication. In this sense, the data are said to warrant the acceptance of the Interference theory (see the "Experimental conclusions" row in Table 1).
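The reasoning in the preceding paragraphs may be summarized in a short sketch. Each theory's prescription is encoded as the expected relation between recall at the fast and slow rates for the same delay, and the observed relation is compared with each prescription. The percentages used in the example are hypothetical placeholders, not Waugh and Norman's (1965) data, and the `tolerance` parameter is a stand-in (an assumption of this sketch) for the statistical test that would be used in practice to decide whether two conditions differ.

```python
# Theoretical prescriptions at a fixed delay, as described in the text.
PRESCRIPTIONS = {
    "Interference": "fast_worse",  # poorer recall at the fast rate
    "Decay": "equal",              # same recall at both rates
}


def observed_relation(fast_percent, slow_percent, tolerance=0.0):
    """Classify the outcome at one delay (tolerance is an illustrative stand-in)."""
    if abs(fast_percent - slow_percent) <= tolerance:
        return "equal"
    return "fast_worse" if fast_percent < slow_percent else "fast_better"


def verdicts(fast_percent, slow_percent, tolerance=0.0):
    """A theory is retained when the outcome matches its prescription, else rejected."""
    outcome = observed_relation(fast_percent, slow_percent, tolerance)
    return {
        theory: "retained" if prescription == outcome else "rejected"
        for theory, prescription in PRESCRIPTIONS.items()
    }


# Hypothetical percent-correct values at the same delay.
print(verdicts(fast_percent=40.0, slow_percent=60.0))
```

Note that "retained" means only that there is no reason to reject the theory on the strength of these data, which is the sense in which the data warrant accepting the Interference theory.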

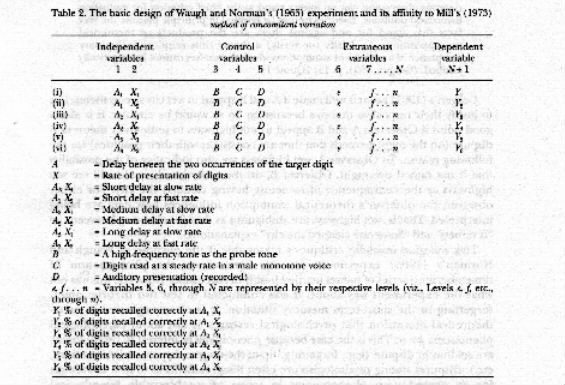

The inductive rule underlying Waugh and Norman's (1965) experimental design is Mill's (1973) Method of Concomitant Variation, as may be seen from Table 2 (see also Copi, 1982). Variables 1 and 2 are the independent variables, Delay between the two occurrences of the target digit (A) and Rate of digit presentation (X), respectively. The dependent variable is Variable N + 1 (viz., the percent of digits recalled correctly). Variables 3 through 5 are three control variables, namely, (i) a high-frequency tone was used as the probe tone (i.e., B in Table 2), (ii) digits were read at a steady rate in a male monotone voice (viz., C in Table 2), and (iii) the stimulus presentation was done by using a recorded string of digits (i.e., D in Table 2), respectively.

Variables 3 through 5 are called control variables because each of them is represented at the same level at all six Delay by Rate combinations of the two independent variables. In theory, there are numerous extraneous variables (viz., Variables 6 through N). They are assumed to have been controlled (i.e., held constant at the same level at all Delay by Rate combinations) by virtue of the fact that all subjects received many trials of each of the six Delay by Rate combinations in a random order (i.e., the repeated-measures design is used).

Consider the experimental expectation, or theoretical prescription, of the Interference theory. Given any delay, the fast rate of digit presentation imposes greater demands on the short-term store than the slow rate because more digits are presented at the fast rate. The demand on the short-term store due to the fast presentation rate is amplified the longer the delay. In other words, the Delay by Rate combinations in Rows (i) through (vi) in Table 2 represent increasingly greater demands on the short-term store according to the Interference theory. Larger subscripts of the dependent variable (1) represent poorer performance. In other words, an inverse relationship is expected between the treatment combinations of the two independent variables and the dependent variable. It is for this reason that Waugh and Norman's (1965) experimental design is said to be an exemplification of Mill's (1973) Method of Concomitant Variation.²

ECOLOGICAL VALIDITY REVISITED

Relativistic critics would find Waugh and Norman's (1965) experiment objectionable because their experimental task and test condition were artificial. The message of the relativistic position is that, as the whole situation was so unlike any everyday activity, Waugh and Norman's (1965) conclusion could not possibly be applied to forgetting in real-life situations. This is a good example of the complaint that experimental data do not have ecological validity (called ecological invalidity in subsequent discussion).

It can be shown that the ecological invalidity feature of experimental data can be defended. In fact, it is this feature which renders experimental data non-circular. The necessity of ecological invalidity may be seen by supposing that city streets are wet early one morning. This phenomenon may suggest to Observer A that it rained overnight. However, Observer B may interpret the wet city streets to mean that city employees cleaned the city overnight. An appeal to wet highways as evidential data has ecological validity (Neisser, 1976, 1988) because wet highways are like wet city streets in terms of their location and their exposure to the same weather as city streets.

This example illustrates why relativistic critics suggest that empirical data are not useful as theory-corroboration evidence, or as the means to choose between contending explanations of observable phenomena. In the words of a relativistic critic,

For if theoretical assumptions create the domain of meaningful facts, then rigorous observation can no longer stand as the chief criterion for evaluating theoretical positions. Theories cannot be falsified in principle because the very facts that stand for and against them are the products of theoretical presuppositions. To falsify (or verify) a theory thus requires preliminary acceptance of a network of assumptions which themselves cannot be empirically justified. (Gergen, 1991, p. 15) [Quote 1]

Gergen's (1991) point is well made if A and B appeal to wet city streets themselves to justify their respective theories because to do so would be circular. It is also a good point if Observers A and B appeal to wet highways to settle their theoretical dispute (on the mere grounds that they are consistent with their positions) for the following reason. To Observer A, wet highways are also indicative of the possibility that it has rained overnight. Observer B, on the other hand, would still see wet highways as the consequence of someone having cleaned the city. For either observer, the observer's theoretical assumption influences how data are being interpreted. That is, wet highways are ambiguous as data for choosing between the "It rained" and "Someone cleaned the city" explanations.

This ecological invalidity critique is reasonable if the purpose of Waugh and Norman's (1965) experiment was to demonstrate the phenomenon of remembering a series of names learned hastily in succession. However, this was not what the experiment was about. It was conducted to test two theories about forgetting in the short-term memory situation. It is often neglected in meta-theoretical discussion that psychological research is not about psychological phenomena per se. This is the case because phenomena of interest to psychologists are seldom in dispute (e.g., forgetting, tip-of-the-tongue, hyperactivity, emotions, etc.). Disputes among psychologists are often disagreements as to how to account for an agreed-upon phenomenon in terms of unobservable hypothetical mechanisms. In other words, the subject-matter of psychological research is theories about phenomena, not the phenomena themselves.

A prerequisite for an explanatory theory being non-circular is that it can be used to explain phenomena other than the original phenomenon. This is an iteration of cognitive psychologists' modus operandi that they have to stipulate **what else** can be explained by the theory, in addition to the original phenomenon which invites theorization in the first place. What may be added here is that the "what else" emphasized in bold is more helpful the more different it is from the original phenomenon. This state of affairs is responsible for the fact that good theory-corroboration data do not have (and do not need) ecological validity.

In short, it is necessary to show that the "It rained" or "Someone cleaned the city" explanation can be used to explain phenomena other than wet city streets. Specifically, the "It rained" theory, but not the "Someone cleaned the city" theory, can explain wet leaves on the top of a tall tree. Alternatively, the "Someone cleaned the city," but not the "It rained," theory can explain the fact that the floor of the bandstand in the park is wet.³

Suppose Observers A and B wish to test A's theory. They should examine the leaves on the top of a tall tree. If it did rain, the leaves should be wet. Hence, the "It rained" theory can be unambiguously rejected if the leaves are dry. In other words, Gergen's (1991) assertion that theories cannot be falsified in principle [see Quote 1] is debatable. Of importance to the ecological invalidity argument is the fact that wet leaves are different from wet city streets. Yet, wet leaves are the data to use to test the "It rained" theory.

It has been shown that the unambiguous evidence is unlike the to-be-explained phenomenon. This feature is reflected in cognitive psychologists' modus operandi depicted in Table 1. It can be seen that the choice between the Interference and Decay theories is settled with data which are unlike the to-be-explained phenomenon (viz., recalling the digit after the probe-digit in the laboratory versus recalling the names of several strangers in an information-rich social gathering). In other words, the ecological validity criterion is the incorrect criterion to use when assessing whether or not experimental data warrant a theoretical position (Mook, 1983).

PRECEDENCE: DATA OR THEORY

The circular theory-data relationship envisaged in the relativistic account is given inadvertent support when psychologists say, in their meta-theoretical moments, that they infer a theory from their data. This may be the result of their having been exposed to how experimentation is being described and explained in statistics textbooks (Chow, 1992a, 1992b). This misleading way of talking about the theory-corroboration procedure suggests that (a) observation precedes theory, and (b) the original observation itself, or observations very similar to the original observation, is used to support the theory. That is, the sequence is phenomenon → theory → phenomenon. This is how a Skinnerian would talk about experimentation (Skinner, 1938), and its conceptual difficulties have been made explicit by Chomsky (1959).

Even though they agree with relativistic critics that no observation is theory-independent, contemporary experimental psychologists' modus operandi is based on an observation₁ → theory → observation₂ sequence. This may be illustrated with reference to two distinctions which have to be made when we assess whether or not empirical data can be used in a non-circular way in theory corroboration. The two distinctions are (i) prior data (i.e., observation₁) versus evidential data (viz., observation₂), and (ii) a to-be-corroborated theory versus the theory underlying the evidential observation.

Not distinguishing between studying a phenomenon and studying a theory about a phenomenon, critics of experimental psychology tend to lose sight of the fact that contemporary cognitive psychologists draw their theoretical insight from everyday psychological experiences and phenomena (e.g., the "Phenomenon" row in Table 1). Both the Interference and Decay theories are attempts to account for phenomena of forgetting experienced in everyday life. In other words, the phenomenon of forgetting hastily learned information is a datum which invites theorization. The said phenomenon may hence be characterized as prior data (or observation₁) vis-à-vis either the Interference or Decay theory.

Contrary to some relativistic critics' characterization (e.g., Gergen, 1988), psychologists do not use prior data to test a theory or to choose among contending theories. To do so would indeed be an exercise in circularity. Instead, they collect data in artificial settings (e.g., the artificial task in Sub-Table 1a), and they assess the experimental data thus collected with reference to an implication of the to-be-corroborated theory. Experimental data are evidential data (or observation₂) vis-à-vis the to-be-corroborated theory. They are collected after the theory has been proposed. Hence, relativists' phenomenon → theory → phenomenon sequence should be replaced by the observation₁ → theory → observation₂ sequence. More important, evidential data are not identical, or similar, to prior data in theory corroboration. As a matter of fact, in order to be unambiguous, evidential data have to be different from prior data.

TO-BE-CORROBORATED THEORY VERSUS THEORY UNDERLYING EVIDENTIAL RESPONSE

Now consider the "theoretical assumptions create the domain of meaningful facts" assertion in [Quote 1] with reference to the "Identity of response" row in Table 1 and its entry, "The digit following the probe-digit." As a digit, the response is a decimal number. Decimal numbers are one of several kinds of numbers defined by different conventions (viz., hexadecimal, decimal, octal and binary numbers). In other words, the identity of the response used as evidence in Waugh and Norman's (1965) experiment (i.e., evidential response) is dependent on a non-empirical frame of reference (i.e., the decimal number system). More important to Gergen's (1991) "domain of meaningful facts" is the issue of how the response was categorized in the experiment (viz., as "correct" or "incorrect"). Note that there is an implicit distinction in Gergen's (1991) argument, namely, between the observation (viz., the response) and the "fact" represented by the observation, or the "factual status" of the response (i.e., the fact of being correct or incorrect). The categorization was done with reference to the "probed-recall" feature of the task. That is to say, the factual status of a response is dependent on the experimental task, not on the Interference or Decay theory. This is contrary to an implication of [Quote 1] that the factual status of the evidential response is dependent on the to-be-tested theory.

At the same time, there is something in the experiment which is indeed dependent on the to-be-corroborated theory. Specifically, the two entries in the "Theoretical prescription" row in Table 1 are dependent on the Interference and Decay theories, respectively. In other words, it seems that the necessary distinction between the factual status of the observation and the theoretical prescription of the to-be-corroborated theory is not made in [Quote 1].

As may be seen, we are dealing with frames of reference, or theories, in different domains when we consider (a) the identity of the response, (b) the factual status of the response (viz., what fact is being represented by the response), and (c) the theoretical prescription of the experiment. There is no inter-dependence among (a), (b) and (c). Hence, it is possible to have atheoretical or theory-neutral observations (vis-à-vis a to-be-corroborated theory) even when all observations are theory-dependent.

In sum, there is no reason why individuals subscribing to different theories cannot agree on (a) what the evidential response is, (b) what "fact" is represented by the response, and (c) what should be the case if a theory is true. Consequently, it is possible to achieve objectivity (viz., inter-observer agreement on experimental observations, regardless of the observers' theoretical commitment), despite the theory-dependence of research observations. That is the case because multiple theories belonging to different domains or levels of abstraction are being implicated.

It is also important to note that the choice between the Interference and Decay theories is made within the same frame of reference (viz., cognitive psychology). Some relativistic critics may argue that what is said in either theory might be very different had the phenomenon of forgetting been studied in the context of a different frame of reference (e.g., psychodynamics or positivistic psychology). This is true. However, the dispute envisaged by relativistic critics is not one between two theories of forgetting in the context of a frame of reference which is not in dispute. The relativistic critics are effectively suggesting an examination of the relative merits of two different frames of reference (e.g., cognitive psychology versus psychodynamics). Whether or not such a choice can be made with empirical data, let alone experimental data, depends on a host of issues which go beyond the scope of the present discussion. The crucial point is that questions about the choice between two meta-theoretical frames of reference are not questions as to whether or not a theory can be warranted by experimental data.

KNOWLEDGE-CLAIMS AND VALIDITY

The conclusion of Waugh and Norman's (1965) experiment is that interference is responsible for forgetting in the short-term memory situation. Important to the present discussion is whether or not the conclusion is warranted by their finding, a criterion called "warranted assertability" by Manicas and Secord (1983). This criterion is germane to the relativistic charge that experimental psychologists pay undue attention to their methodology.

To consider the "warranted assertability" of an experimental conclusion is to examine the theory-data relationship implicated in the experiment (viz., the theory → observation₂ part of the observation₁ → theory → observation₂ sequence). A theory is justified, or warranted, by the data collected for the explicit purpose of testing the theory (i.e., observation₂) when Cook and Campbell's (1979) criteria of statistical conclusion validity, internal validity and construct validity are met. The term, validity, is used in the present discussion to highlight the fact that "warranted assertability" is achieved when the three aforementioned kinds of validity are secured.⁴

Issues about statistical conclusion validity are concerns that the correct statistical procedure is used to analyze the data (e.g., using the related-sample t test for the repeated-measures 1-factor, 2-level design). To examine the internal validity of the study is to ensure that the observed relationship between the independent and dependent variables cannot be explained in terms of the other features of the study. This amounts to excluding all alternative explanations in terms of the structural or procedural characteristics of the study (viz., the design of the study, the choice of independent and control variables, and the control procedures). This kind of concern is actually the concern with the formal requirement of the inductive principle underlying the design of the study. Hence, Cook and Campbell's (1979) internal validity is characterized as inductive conclusion validity (Chow, 1987, 1992a).
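The related-sample t test mentioned above as the appropriate analysis for a repeated-measures 1-factor, 2-level design may be sketched as follows. This is a minimal illustration using only the standard library; the scores are invented for the example and the function name is hypothetical. The statistic is the mean of the within-subject differences divided by its standard error, with n - 1 degrees of freedom.

```python
import math


def related_sample_t(condition_a, condition_b):
    """Return (t, degrees_of_freedom) for paired scores from the same subjects.

    Raises ValueError for mismatched or too-short inputs, and
    ZeroDivisionError if all difference scores are identical.
    """
    if len(condition_a) != len(condition_b) or len(condition_a) < 2:
        raise ValueError("need two equally long lists with at least two subjects")
    differences = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(differences)
    mean_difference = sum(differences) / n
    # Unbiased variance of the difference scores (divisor n - 1).
    variance = sum((d - mean_difference) ** 2 for d in differences) / (n - 1)
    standard_error = math.sqrt(variance / n)
    return mean_difference / standard_error, n - 1


# Invented scores for four subjects, each tested under both levels.
slow_rate = [6, 7, 5, 8]
fast_rate = [4, 6, 4, 5]
t_value, df = related_sample_t(slow_rate, fast_rate)
print(round(t_value, 3), df)
```

The obtained t would then be compared with the critical value for the chosen level of significance and the given degrees of freedom.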

To Cook and Campbell (1979), an empirical study has construct validity when it is established that the observed relationship between the independent and dependent variables actually informs us about the to-be-investigated theoretical construct. This may be interpreted to mean the exclusion of alternative conceptual explanations of the data (as opposed to alternative explanations in terms of the procedural features of the study at the methodological level implicated in inductive conclusion validity). However, the term construct is often used in non-experimental validation of psychometric tests. That is, it has too heavy a psychometric overtone (Chow, 1987). Hence, Wampold, Davis and Good's (1990) hypothesis validity seems more appropriate than construct validity in the context of experimental studies. In short, the hypothesis validity of a study is the extent to which an alternative conceptual explanation of the data is unambiguously excluded. Hence, Waugh and Norman's (1965) study has hypothesis validity because the Decay theory was unambiguously excluded in favor of the Interference theory.

VALIDITY OR METHODOLATRY

It is instructive to examine why the criterion of validity has received scant notice, if any, in the relativistic critique. This can be done by considering the exchange between Barber and Silver (1968a, 1968b) and Rosenthal (1966, 1968) regarding the experimenter bias effect (viz., the view that the experimenter would, knowingly or unknowingly, induce the subjects to behave in a way consistent with the experimenter's vested interests). Barber and Silver (1968a) argued that the putative evidence for the experimenter bias effect is debatable because, in studies purportedly in its support, (a) questionable levels of significance were sometimes used (e.g., p > 0.10), (b) some investigators failed to use the appropriate statistical analyses, (c) necessary statistical analyses were not carried out in some studies, (d) inappropriate data collection procedures were found in some studies, and (e) the necessary controls (in the sense of provisions for excluding specific alternative interpretations) were absent in many studies.

Barber and Silver's (1968a, 1968b) criticisms of the evidential support for the experimenter bias effect are a good example of what is wrong with psychology from the relativistic critique perspective.⁵ To begin with, the methodological and procedural details of concern to Barber and Silver (1968a, 1968b) are far removed from real-life experiences. Nor do these details have anything to do with the to-be-studied phenomenon (viz., experimenter bias effect). Second, psychologists effectively disregard findings which may have important practical and social implications when they reject studies for some arcane methodological reasons. Hence, psychologists' concern with methodological issues or technical details is characterized as a rhetorical device by Gergen (1991) and a fetish or "methodolatry" by Danziger (1990).

In other words, the derogatory characterizations of psychologists' attention to statistics and research design issues used in the relativistic critique are reasonable if psychologists attend to these issues for merely pedantic reasons. However, theoretical discussions and the assessment of research findings are not everyday activities. Theoretical discussions necessarily require formal reasoning and abstract criteria. These intellectual activities require specialized skills and terminology to bring into focus formal and abstract considerations not important to everyday or literary discourse (see "misplaced abstractness" emphasized in italics in [Quote 2] below).

For example, researchers are reminded of the influences of chance factors by considering statistical significance. Psychologists adopt a conventional level of significance (viz., α = 0.05) because they wish to have a well-defined criterion of stringency when they reject chance factors as the explanation of data (Chow, 1988, 1991). Researchers concerned with reducing ambiguity would have to pay particular attention to controls as the means to exclude alternate interpretations of data. In other words, technical terms such as statistical significance and control are used to talk about the formal requirement necessary for establishing the validity of empirical studies.

EMPIRICAL RESEARCH: EXPERIMENTAL VERSUS NON-EXPERIMENTAL

Some relativistic critics (e.g., Danziger, 1990) do recognize the importance of arriving at unambiguous research conclusions. It has just been shown that questions about statistics and research design are concerns about reducing ambiguity. Yet, relativistic critics dismiss technical concerns as methodolatry. It may be seen that this inconsistency is due to a misunderstanding of the concept, control, in the relativistic critique.

Danziger (1990) argues that contemporary psychologists owe their insistence on using empirical data to three origins, namely, (a) Wundt's psychophysics, (b) Binet's study of hypnosis with a comparison group and (c) Galton's anthropometric measurement. Although these pioneers of psychological research used radically different data-collection procedures, their procedures have all been characterized as experimental by Danziger (1990). Specifically, it is said,

Discussions of experimentation in psychology have often suffered from a misplaced abstractness. Not uncommonly, they have referred to something called the experimental method, as though there was only one, . . . (Danziger, 1990, p. 24, emphasis in italics added) [Quote 2]

To Danziger (1990), the prevalent characteristic of psychological research is to achieve different kinds of control, as may be seen by considering an attempt in psychophysics to establish the absolute threshold for brightness with the Method of Constant Stimuli. An appropriate range of brightness is first chosen. This is "stimulus control." Each of these brightness values is presented to an observer many times. The entire ensemble of stimuli is presented to an observer in a random order. The observer is given only two response options on every trial, namely, to respond either "Yes" (to indicate that a light is seen) or "No" (to indicate that no light is seen). This is "response control."
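The procedure just described may be sketched as follows. This is only an illustrative sketch, not a reconstruction of any actual psychophysical study: the brightness values, the number of repetitions, and the simulated observer (with its hypothetical `threshold` and `noise` parameters) are all assumptions introduced here.

```python
import random

def run_constant_stimuli(levels, repeats, observer, seed=0):
    """Present every pre-selected level `repeats` times in random order
    and return the proportion of "Yes" responses at each level."""
    rng = random.Random(seed)
    # Stimulus control: only the pre-selected levels are ever presented.
    trials = [level for level in levels for _ in range(repeats)]
    rng.shuffle(trials)  # the whole ensemble is presented in random order
    yes_counts = {level: 0 for level in levels}
    for level in trials:
        response = observer(level, rng)
        # Response control: only two response options are accepted.
        if response not in ("Yes", "No"):
            raise ValueError("only 'Yes' or 'No' is allowed")
        if response == "Yes":
            yes_counts[level] += 1
    return {level: yes_counts[level] / repeats for level in levels}

def simulated_observer(level, rng, threshold=5.0, noise=1.5):
    """Hypothetical observer: reports "Yes" when a noisy percept of the
    stimulus exceeds an internal threshold (an assumption for illustration)."""
    return "Yes" if level + rng.gauss(0.0, noise) > threshold else "No"

levels = [2.0, 3.5, 5.0, 6.5, 8.0]  # assumed brightness values, arbitrary units
proportions = run_constant_stimuli(levels, repeats=200, observer=simulated_observer)
for level in levels:
    print(level, proportions[level])
```

In this sketch the two controls do no more than fix which stimuli are shown and which answers are admissible; the proportion of "Yes" responses at each level is what an absolute threshold would subsequently be estimated from.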

Although stimulus and response controls were achieved in different ways in Wundt's, Binet's and Galton's approaches, "control" was, nonetheless, an integral component of their research. Hence, Danziger (1990) treats them as different ways of conducting experiments. In any case, using stimulus control or response control is objectionable because their introduction constitutes "social control" (Danziger, 1990, p. 137) in the Skinnerian sense of shaping the subjects' behavior. At the same time, it is said that the purpose of introducing stimulus and response controls in the data collection procedure is "to impose a numerical structure on the data . . ." (Danziger, 1990, p. 137) in order to use statistics. An implication of this argument is that "control" is not (or need not be) a necessary component of research had psychologists not been so obsessed with quantification and statistics. However, two rejoinders to this interpretation of "control" may be offered.

First, imagine simply asking an observer in an absolute-threshold task to watch the screen and give a response within a predetermined period of time (e.g., 2 seconds). This is an ambiguous instruction in an ill-defined situation. It is reasonable to assume that the observer would not know what to do, especially at the beginning. A likely outcome is either (a) that the observer responds in a way unrelated to the purpose of the study, or (b) the observer asks for explicit instruction as to what to do. This is not unlike the following situation.

Suppose that a biology teacher instructs a novice student to report what is seen under a microscope. The novice student may report things which have nothing to do with the class work. Another likely outcome is that the student asks for specific instruction as to what to look for. That is, people ask for information so as to reduce ambiguity when placed in an uncertain situation. In other words, it is debatable to say that giving an observer explicit instructions as to what to do is an attempt to shape the observer's behavior. That is, it is debatable to characterize giving unambiguous instruction to experimental subjects as "response control" in the sense of shaping the subjects' behavior. Nor is following specific instructions by experimental subjects a contrived way of behaving which happens only in the laboratory. For example, drivers do stop at red lights even when they are in a hurry. That is, people do follow rules, conventions or instructions in everyday life.

The second rejoinder to Danziger's (1990) interpretation of control is based on three technical meanings of control identified by Boring (1954, 1969), none of which refers to shaping the behavior of research participants. Control is achieved in research when the following conditions are met:

It may readily be seen that the purpose of instituting stimulus control is to ensure that the pre-determined levels of a manipulated variable are used as prescribed. Contrary to Danziger's (1990) characterization, stimulus control is not used as a form of social control because it is not instituted in the experiment for the purpose of shaping the subject's behavior. It is used to satisfy the formal requirement of the inductive method underlying the experimental design.

Moreover, by itself, stimulus control is not the whole meaning of control. Hence, not all three components of control are found in a typical psychophysical study. This illustrates the point that not all empirical methods satisfy the three meanings of control. Specifically, neither Wundt's nor Galton's approach satisfied all three criteria.

As a matter of fact, empirical research methods are divided into experimental, quasi-experimental and non-experimental methods. Specifically, (a) an experiment is one in which all recognized controls are present, (b) a quasi-experiment is one in which at least one recognized control is absent due to practical or logistic constraints (Campbell & Stanley, 1966; Cook & Campbell, 1979; Binet's approach, strictly speaking, fell into this category), and (c) a non-experimental research study is one in which no attempt is made to ensure the presence of control (e.g., research data collected by conducting an interview or by naturalistic observations).

The importance of control may be seen by supposing that Variable 3 in Table 2 (viz., B or using a high frequency tone as the probe tone) were absent in Waugh and Norman's (1965) experiment. What is the implication if tones of different frequencies were used for the six Delay and Rate combinations? Under such circumstances, the variation in the subjects' performance (viz., Y1 through Y6 in Table 2) under the six treatment combinations might be accounted for by the difference in frequency among the probe-tones. The data would be ambiguous as to the verity of the Interference theory under such circumstances.

However, as the frequency of the probe tone was held constant in Waugh and Norman's (1965) experiment, it can unambiguously be excluded as the explanation of the data (Cohen & Nagel, 1934). This "exclusion" function is the utility of the three kinds of control which must be present in a properly conducted experiment. This becomes important when the SPOPE factors are discussed in subsequent sections.

In sum, ambiguities in data interpretation are reduced by excluding alternate explanatory accounts. At the same time, the purpose of instituting controls in empirical research is not to impose a numerical structure on data, nor to shape the subjects' behavior. It is to exclude alternate interpretations of data. In other words, to provide controls is to attempt to reduce ambiguity. As controls may be achieved to various degrees of success, some empirical studies (viz., experimental studies) give less ambiguous results than other empirical studies (e.g., quasi-experimental or non-experimental).

It is unfortunate for meta-theoretical or methodological discussion that the role of control in research is misrepresented in the relativistic critique account. This misrepresentation may be responsible for the failure in the relativistic critique view to distinguish between experimental and non-experimental research. This failure, in turn, may be responsible for the aforementioned inconsistency between (a) the recognition of the need to reduce ambiguity, and (b) the relativistic critics' disdain for technical rigor.

SOCIAL PSYCHOLOGY OF THE PSYCHOLOGICAL EXPERIMENT (SPOPE)

There is a set of factors collectively known as social psychology of the psychological experiment (or SPOPE for short) (Orne, 1962; Rosenthal, 1963) which putatively renders experimental data inevitably invalid. This set of social factors consists of experimenter effects (Rosenthal, 1966), subject effects (Rosenthal & Rosnow, 1969, 1975) and demand characteristics (Orne, 1962, 1969, 1973).

The essence of the SPOPE critique of experimental data is that the outcome of an experiment is inevitably influenced by who conducts the experiment with whom as subjects. These influences are the consequence of, among other things, (a) the experimenter's personal characteristics and expectation regarding the experimental result, (b) experimental subjects' individual characteristics, and (c) the perceived roles entertained by the experimenter and subjects, the subjects' desire to ingratiate themselves with the experimenter, and rumors about the experiment. In short, the SPOPE argument may be the psychological reason why the relativistic critique argument is correct. Danziger (1990) takes the SPOPE factors for granted. However, it may be suggested that there are no valid empirical data in support of the SPOPE arguments (Chow, 1987, 1992a, 1994).

A thesis of the demand characteristics charge is that subjects are willing to undertake any task, however meaningless or futile, in the context of experimental research. For example, thirsty subjects persevered in eating salty crackers upon request, or an individual would patiently cross out a particular letter from rows and rows of random letter strings (Orne, 1962, 1969, 1973). This is the extent of the evidential support for the demand characteristics claim. However, asking people to carry out a meaningless or futile task is not conducting an experiment. Berkowitz and Donnerstein (1982) are correct in saying that there is no evidential support for the demand characteristics claim.

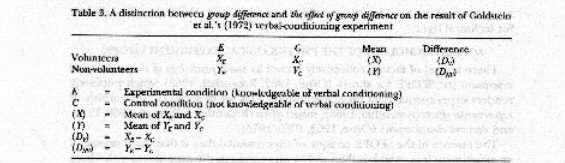

The fundamental problem with the subject effects claim is the failure in the SPOPE argument to distinguish between group differences and the effect of group differences on experimental results. For the subject effects claim to be true, it is necessary to demonstrate the latter. Demonstrating the former is insufficient. This difficulty may be illustrated with Goldstein, Rosnow, Goodstadt and Suls' (1972) verbal-conditioning experiment.

They reinforced their subjects with a verbal reinforcer (e.g., "good") whenever the subjects emitted the first-person pronoun "I" or "We." There were two groups of subjects, namely, volunteers and non-volunteers. Half of each group was knowledgeable of the principle of operant conditioning; the other half of each group was not knowledgeable. All subjects were tested in four 20-trial blocks. The dependent variable was the difference between the number of first-person pronouns emitted in Block 4 and Block 1. This may be interpreted as a measure of how readily a subject can be conditioned. That is to say, (X) in Table 3 represents the differences between Block 4 and Block 1 for volunteers, and (Y) represents the same difference for non-volunteers. (DV) represents the difference between the experimental and control conditions for the volunteers, whereas (DNV) is the difference between the experimental and control conditions for the non-volunteers.

Goldstein et al. (1972) found that (X) was significantly larger than (Y). They concluded that willing subjects were "good" subjects in the sense that these subjects were more inclined to fulfill the demand of the experimental task (see Rosenthal & Rosnow, 1975, pp. 157-165). The subject effects claim is thereby established in the SPOPE view. As (X) and (Y) represent the rate of conditioning of the volunteers and non-volunteers, respectively, the difference between (X) and (Y) means that there is a difference between the two groups. This finding merely reinforces the knowledge that the two groups of subjects were different to begin with (viz., their being volunteers or non-volunteers).

It is important not to lose sight of the fact that the knowledgeability of verbal conditioning was included in the study. This manipulation means that the study was one about the differential consequences of the knowledgeability of verbal conditioning for the two groups. To demonstrate the effect of the group difference on the effect of knowledgeability on operant conditioning, it is necessary to show that (DV) is larger than (DNV). However, there was no difference between (DV) and (DNV). The issue is not that there are group or individual differences. What critics of experimental psychology have not shown is how group or individual differences actually affect experimental results.
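The distinction between a group difference and an effect of the group difference on the experimental result may be made concrete with a small worked example. The cell means below are hypothetical numbers chosen for illustration; they are not the data reported by Goldstein et al. (1972). It is also assumed here that (X) and (Y) are each taken as the average over the experimental and control conditions.

```python
# Hypothetical cell means (Block 4 minus Block 1 pronoun counts).
# These are illustrative values only, not data from the study.
cell_means = {
    ("volunteer", "experimental"): 6.0,
    ("volunteer", "control"): 4.0,
    ("non-volunteer", "experimental"): 4.0,
    ("non-volunteer", "control"): 2.0,
}

def group_mean(group):
    """Average conditioning score of a group over both conditions."""
    return (cell_means[(group, "experimental")] + cell_means[(group, "control")]) / 2

def condition_effect(group):
    """Experimental-minus-control difference within a group."""
    return cell_means[(group, "experimental")] - cell_means[(group, "control")]

X = group_mean("volunteer")            # 5.0
Y = group_mean("non-volunteer")        # 3.0
DV = condition_effect("volunteer")     # 2.0
DNV = condition_effect("non-volunteer")  # 2.0

group_difference = X - Y               # 2.0: the groups differ
effect_of_group_on_result = DV - DNV   # 0.0: the experimental effect does not
print(group_difference, effect_of_group_on_result)
```

With these assumed values the volunteers score higher overall, yet the difference between the experimental and control conditions is the same in both groups. A pattern of this kind shows a group difference without showing that the group difference affects the experimental result.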

Goldstein et al.'s (1972) experiment is typical of the genre of experiments used to support the subject effects claim. As has been seen, what is demonstrated is group difference when the required demonstration is an effect of group difference on experimental data. In other words, data from studies of this genre (however numerous) are insufficient as evidence for the subject effects claim.

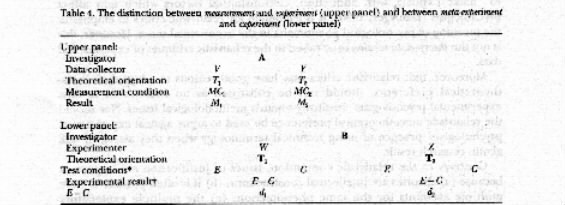

The difficulty with the experimenter effects may be shown with reference to Table 4. In the top panel is depicted the design of Rosenthal and Fode's (1963) photo-rating study. "A" represents Rosenthal and Fode; "V" and "Y" represent two different groups of data-collectors who presented photographs to their respective subjects. The task of the subjects was to rate how successful, or unsuccessful, was the person in the photograph.

"V" and "Y" were given different "theoretical orientations." Specifically, data-collectors in "V" were told to expect a mean rating of +5, whereas those in "Y" were informed to expect a mean rating of -5. This differential instruction provides effectively different data-collection conditions for the "V" and "Y" groups. Hence, from the perspective of A (the investigator), the study had the formal structure of an experiment.

M1 was larger than M2. Rosenthal and Fode (1963) concluded that the data were consistent with the experimenter expectancy effects. The problem is that M1 and M2 are not experimental data to "V" or "Y". The necessary condition for the experimenter expectancy effects claim is that each of the individuals in Group "V" and "Y" must be given an experiment to conduct. However, the individuals in Group "V" and "Y" collected data under one condition only. In other words, M1 and M2 are only measurement data, not experimental data.

In sum, the design depicted in the upper panel of Table 4 is insufficient for investigating the experimenter expectancy effects. A reason why this inadequacy is not recognized is the failure to distinguish between meta-experiment (viz., an experiment about experimentation) and experiment, as may be seen in the lower panel of Table 4.

Investigator B has two groups of experimenters. Regardless of the theoretical orientation, each individual in "W" or "Z" collects data in two conditions, namely, the experimental and control conditions. Consequently, a difference between the two conditions is available from every individual in "W" or "Z." From the perspective of "W" or "Z," the study is an experiment because data are collected in two conditions which are identical in all aspects but one. The study is an experiment about the experiment conducted by "W" or "Z" from the perspective of the investigator, B. For this reason, it is a "meta-experiment" to the investigator.

Using Rosenthal and Fode's (1963) photo-rating task, Chow (1994) reported data from a meta-experiment. There was no difference between the two sets of experimental data, d1 and d2. The inescapable conclusions are that (i) the experimenter expectancy effects claim is based on data which are inadequate as the evidential data, and (ii) data from a properly designed meta-experiment do not support the experimenter expectancy effects claim.
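The structural difference between the two panels of Table 4 may be sketched in terms of what each data-collector contributes. All numbers below are hypothetical and are used only to show the shape of the data; they are not the ratings reported by Rosenthal and Fode (1963) or by Chow (1994).

```python
from statistics import mean

# Upper panel: each data-collector in "V" or "Y" contributes ONE mean rating,
# collected under a single condition (hypothetical values).
ratings_V = [1.2, 0.8, 1.0]
ratings_Y = [-0.4, 0.1, -0.3]
M1 = mean(ratings_V)
M2 = mean(ratings_Y)

# Lower panel: each experimenter in "W" or "Z" contributes a PAIR of means,
# (experimental condition, control condition), i.e. a complete experiment
# (hypothetical values).
data_W = [(2.0, 1.0), (1.5, 0.5), (2.5, 1.5)]
data_Z = [(1.0, 0.0), (0.5, -0.5), (1.5, 0.5)]

def experimental_effects(pairs):
    """Experimental-minus-control difference obtained by each experimenter."""
    return [experimental - control for experimental, control in pairs]

d1 = mean(experimental_effects(data_W))
d2 = mean(experimental_effects(data_Z))

print("measurement data:", M1, M2)
print("experimental data:", d1, d2)
```

In the upper-panel layout there is no experimental-minus-control difference for any individual data-collector, so M1 and M2 can only be compared as measurements. In the lower-panel layout each experimenter yields such a difference, and it is these differences (summarized as d1 and d2) that the investigator compares. The assumed values were chosen so that the groups differ in overall rating level while d1 and d2 are equal.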

In sum, while the SPOPE argument may be reasonable as a critique of non-experimental data, it is not applicable to experimental data. Specifically, controls are means with which experimenters can exclude alternate interpretations of data. By the same token, even if social conventions, cultural values and professional constraint may influence non-experimental data, appropriate controls can be (and are routinely) used to rule out these non-intellectual influences on experimental data. This is another reason why being attentive to technical details is not servitude to methodology.

SUMMARY AND CONCLUSIONS

The relativistic critique argument begins with the correct view that all data are theory-dependent. However, it is debatable to say that all assumptions underlying empirical research (particularly experimental research) have to be empirically justified simply because many assumptions are non-empirical in nature (e.g., rules in deductive logic, inductive principles, assumptions underlying statistical procedures, etc.).

Relativistic critics fault cognitive psychologists who practice Popper's (1968a/1959, 1968b/1962) conjectures and refutations approach for paying too much attention to problems of justification at the expense of questions about discovery. The question becomes the discovery of what. Psychologists do not invent phenomena to study. Instead, they speculate about the causes or reasons underlying varying mental and experiential phenomena found in everyday life. At this level, no contemporary experimental psychologist would disagree with relativistic critics that psychological theories are psychologists' active constructions rather than passive reading of what are revealed to the psychologists. In exploring the hypothetical mechanisms capable of explaining observable phenomena, experimental psychologists do engage in the discovery process.

Many cognitive psychologists take to heart Popper's (1968a/1959, 1968b/1962) view that neither the origin of, nor the manner of acquiring, these constructivistic speculations really matters. Relativistic critics, on the other hand, find it necessary to make explicit, with adduction, circumstantial factors which may affect psychologists (Danziger, 1990). It is legitimate for relativistic critics to emphasize the meaning of psychological phenomena in the experiential sense. However, this is not the theory-data relation issue raised in the relativistic critiques of experimental data.

Moreover, that relativistic critics may have good reasons to adopt their meta-theoretical preference should not be construed as an indictment against experimental psychologists' sensitivity towards methodological issues. Nor should the relativistic meta-theoretical preference be used to argue against experimental psychologists' practice of using technical terminology when they assess, or talk about, research results.

Contrary to the relativistic contention, issues of justification are important because (a) theories are intellectual constructions, (b) it is often possible to have multiple accounts for the same phenomenon, (c) the multiple explanatory accounts may be mutually compatible, and (d) it is necessary to reduce ambiguity by excluding theoretical alternatives which are not warranted by data.

Many of the objections to empirical data raised in relativistic critique can readily be answered. Specifically, the ecological validity criterion loses its apparent attractiveness if it is realized that research is conducted to assess theories about phenomena, not the phenomena themselves. Accepting the legitimacy of data collected in artificial setting, experimental psychologists find it easier to honor the three technical meanings of control. To institute control in empirical research is to attempt to reduce ambiguity. Experimental psychologists pay attention to methodological details not for any pedantic reason. Nor does this mean that they are oblivious of other non-intellectual issues. It simply means that questions about validity are the only relevant issues when the discussion is about the relation between a theoretical assertion and its evidential support. Psychologists are meticulous about methodological details because they have to reduce ambiguity.

As recounted by Copi (1965), Euclid made a mistake in his first attempt to establish a geometry proof because Euclid allowed the content of his argument to interfere with his reasoning when he should have been guided by the form of his reasoning. That is to say, there is a time for content, as well as a time for form. By the same token, an exclusive concern for (a) the content of an assertion, (b) its practical utility, social implications or political correctness, or (c) the validity of the theory-data relationship may be called for on different occasions. This is not to say that psychologists are oblivious to pragmatic issues and/or utilitarian consequences of an assertion when they criticize a study on technical grounds. At the same time, to suggest that paying attention to technical details is a fetish or mere scientific rhetoric is to suggest that it is not necessary to consider the relation between a knowledge-claim and its evidential support.

REFERENCES

Baddeley, A. (1990). Human memory: Theory and practice. Needham Heights, MA: Allyn and Bacon.

Barber, T. X., & Silver, M. J. (1968a). Fact, fiction, and the experimenter bias effect. Psychological Bulletin Monograph Supplement, 70 (6, Part 2), 1-29.

Barber, T. X., & Silver, M. J. (1968b). Pitfalls in data analysis and interpretation: A reply to Rosenthal. Psychological Bulletin Monograph Supplement, 70 (6, Part 2), 48-62.

Berkowitz, L., & Donnerstein, E. (1982). External validity is more than skin deep: Some answers to criticisms of laboratory experiments. American Psychologist, 37, 245-257.

Boring, E. G. (1954). The nature and history of experimental control. American Journal of Psychology, 67, 573-589.

Boring, E. G. (1969). Perspective: Artifact and control. In R. Rosenthal & R. L. Rosnow (Eds.), Artifact in behavioral research (pp. 1-11). New York: Academic Press.

Campbell, D. T., & Stanley, J. C. (1966). Experimental and quasi-experimental designs for research. Chicago: Rand McNally.

Chomsky, N. (1959). Review of Skinner's "Verbal Behavior." Language, 35, 26-58.

Chow, S. L. (1987). Experimental psychology: Rationale, procedures and issues. Calgary: Detselig.

Chow, S. L. (1988). Significance test or effect size? Psychological Bulletin, 103, 105-110.

Chow, S. L. (1991). Conceptual rigor versus practical impact. Theory & Psychology, 1, 337-360.

Chow, S. L. (1992a). Research methods in psychology: A primer. Calgary: Detselig.

Chow, S. L. (1992b). Positivism and cognitive psychology: A second look. In C. W. Tolman (Ed.), Positivism in psychology: Historical and contemporary problems (pp. 119-144). New York: Springer-Verlag.

Chow, S. L. (1994). The experimenter's expectancy effect: A meta-experiment. Zeitschrift für Pädagogische Psychologie/German Journal of Educational Psychology, 8 (2), 89-97.

Cohen, M. R., & Nagel, E. (1934). An introduction to logic and scientific method. London: Routledge & Kegan Paul.

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues for field settings. Chicago: Rand McNally.

Copi, I. M. (1965). Symbolic logic (2nd ed.). New York: Macmillan.

Copi, I. M. (1982). Symbolic logic (6th ed.). New York: Macmillan.

Danziger, K. (1990). Constructing the subject: Historical origins of psychological research. Cambridge: Cambridge University Press.

Garner, W. R., Hake, H. W., & Eriksen, C. W. (1956). Operationalism and the concept of perception. Psychological Bulletin, 87, 564-567.

Gergen, K. J. (1988). The concept of progress in psychological theory. In W. J. Baker, L. P. Mos, H. V. Rappard, & H. J. Stam (Eds.), Recent trends in theoretical psychology (pp. 1-14). New York: Springer-Verlag.

Gergen, K. J. (1991). Emerging challenges for theory and psychology. Theory & Psychology, 1, 13-35.

Goldstein, J. J., Rosnow, R. L., Goodstadt, B., & Suls, J. M. (1972). The "good subject" in verbal operant conditioning research. Journal of Experimental Research in Personality, 6, 29-33.

Greenwood, J. D. (1991). Relations & representations. London: Routledge.

Manicas, P. T., & Secord, P. F. (1983). Implications for psychology of the new philosophy of science. American Psychologist, 38, 103-115.

Mill, J. S. (1973). A system of logic: Ratiocinative and inductive. Toronto: University of Toronto Press.

Mook, D. G. (1983). In defense of external invalidity. American Psychologist, 38, 379-387.

Neisser, U. (1976). Cognition and reality. San Francisco: W. H. Freeman.

Neisser, U. (1988). New vistas in the study of memory. In U. Neisser & E. Winograd (Eds.), Remembering reconsidered: Ecological approaches to the study of memory (pp. 1-10). Cambridge: Cambridge University Press.

Orne, M. T. (1962). On the social psychology of the psychological experiment: With particular reference to demand characteristics and their implications. American Psychologist, 17, 776-783.

Orne, M. T. (1969). Demand characteristics and the concept of quasi-controls. In R. Rosenthal & R. L. Rosnow (Eds.), Artifact in behavioral research (pp. 143-179). New York: Academic Press.

Orne, M. T. (1973). Communication by the total experimental situation: Why it is important, how it is evaluated, and its significance for the ecological validity of findings. In P. Pliner, L. Krames, & T. Alloway (Eds.), Communication and affect (pp. 157-191). New York: Academic Press.

Popper, K. R. (1968a). The logic of scientific discovery (2nd ed.). New York: Harper & Row. (Original work published 1959)

Popper, K. R. (1968b). Conjectures and refutations. New York: Harper & Row. (Original work published 1962)

Rosenthal, R. (1963). On the social psychology of the psychological experiment: The experimenter's hypothesis as unintended determinant of experimental results. American Scientist, 51, 268-283.

Rosenthal, R. (1966). Experimenter effects in behavioral research. New York: Appleton-Century-Crofts.

Rosenthal, R. (1968). Experimenter expectancy and the reassuring nature of the null hypothesis decision procedure. Psychological Bulletin Monograph Supplement, 70 (6, Part 2), 48-62.

Rosenthal, R., & Fode, K. L. (1963). Three experiments in experimenter bias. Psychological Reports, 12, 491-511.

Rosenthal, R., & Rosnow, R. L. (1969). The volunteer subject. In R. Rosenthal & R. L. Rosnow (Eds.), Artifact in behavioral research (pp. 59-118). New York: Academic Press.

Rosenthal, R., & Rosnow, R. L. (1975). The volunteer subject. New York: John Wiley.

Skinner, B. F. (1938). The behavior of organisms: An experimental analysis. New York: Appleton-Century.

Wampold, B. E., Davis, B., & Good, R. H. II. (1990). Methodological contributions to clinical research: Hypothesis validity of clinical research. Journal of Consulting and Clinical Psychology, 58, 360-367.

Waugh, N. C., & Norman, D. A. (1965). Primary memory. Psychological Review, 72, 89-104.

End Notes

1 I am grateful for the helpful comments of an anonymous reviewer on an earlier version of the paper.

2 The other exemplification of Method of Concomitant Variation is the situation in which the functional relationship between the independent and dependent variables is a positive one. What should be added is that the said functional relationship must be established in the presence of all recognized control variables.

3 There is the complication due to auxiliary assumptions. Specifically, a calm day is assumed in the example. If it is not reasonable to assume a calm day, the disputants would have to come up with a different test which takes into account the wind condition. Be that as it may, suffice it to say that this complication does not affect the present argument (see Chow, 1992a).

4 An explanation is necessary for the exclusion of Cook and Campbell's (1979) external validity. Some criteria of external validity are generality, social relevance, practical usefulness, a point suggested by an anonymous reviewer of an earlier version of this paper. However, the assessment of the theory-data relationship is not affected by any of these considerations. For example, universality (or generality) refers to the extent to which a theory is applicable. The theory-data relation found in a research conclusion is not contingent on generalizing the conclusion beyond the well-defined population about which the research is conducted. That it may be nice to be able to do that is a different matter. That is, the applicability of the conclusion may be restricted to a well-defined population. Nonetheless, it must be warranted by a set of research data (Greenwood, 1991).

5 It should be made clear that neither Danziger (1990) nor Gergen (1991) used the exchange between Barber and Silver (1968a, 1968b) and Rosenthal (1966, 1968) as an example. At the same time, neither Danziger nor Gergen actually said in explicit terms why they found it objectionable to use technical criteria to assess research results. I think that Barber and Silver's (1968a, 1968b) papers represent best what Danziger (1990) and Gergen (1991) may disapprove of in general terms.

6 It may be helpful to iterate the distinction between a variable (e.g., color) and its levels (viz., red, blue, green, etc.). The relation between a variable and its levels is like that between a class and its members. If the hue blue is found in every data collection condition, the variable color is said to be held at the same (or constant) level blue.