- What is Computation? It is the manipulation of arbitrarily shaped formal symbols in accordance with symbol-manipulation rules (algorithms) that operate only on the (arbitrary) shape of the symbols, not their meaning.

- Interpretability. The only computations of interest, though, are the ones that can be given a coherent interpretation.

- Hardware-Independence. The hardware that executes the computation is irrelevant. The symbol manipulations have to be executed physically, so there does have to be hardware that executes it, but the physics of the hardware is irrelevant to the interpretability of the software it is executing. It’s just symbol-manipulations. It could have been done with pencil and paper.

- What is the Weak Church/Turing Thesis? That what mathematicians are doing is computation: formal symbol manipulation, executable by a Turing machine – finite-state hardware that can read, write, advance tape, change state or halt.

- What is Simulation? It is computation that is interpretable as modelling properties of the real world: size, shape, movement, temperature, dynamics, etc. But it’s still only computation: coherently interpretable manipulation of symbols.

- What is the Strong Church/Turing Thesis? That computation can simulate (i.e., model) just about anything in the world to as close an approximation as desired (if you can find the right algorithm). It is possible to simulate a real rocket as well as the physical environment of a real rocket. If the simulation is a close enough approximation to the properties of a real rocket and its environment, it can be manipulated computationally to design and test new, improved rocket designs. If the improved design works in the simulation, then it can be used as the blueprint for designing a real rocket that applies the new design in the real world, with real material, and it works.

- What is Reality? It is the real world of objects we can see and measure.

- What is Virtual Reality (VR)? Devices that can stimulate (fool) the human senses by transmitting the output of simulations of real objects to virtual-reality gloves and goggles. For example, VR can transmit the output of the simulation of an ice cube, melting, to gloves and goggles that make you feel you are seeing and feeling an ice cube, melting. But there is no ice cube and no melting; just symbol manipulations interpretable as an ice cube, melting.

- What is Certainly True (rather than just highly probable on all available evidence)? Only what is provably true in formal mathematics. Provable means necessarily true, on pain of contradiction with formal premises (axioms). Everything else that is true is not provably true (hence not necessarily true), just probably true.

- What is illusion? Whatever fools the senses. There is no way to be certain that what our senses and measuring instruments tell us is true (because it cannot be proved formally to be necessarily true, on pain of contradiction). But almost-certain on all the evidence is good enough, for both ordinary life and science.

- Being a Figment? To understand the difference between a sensory illusion and reality is perhaps the most basic insight that anyone can have: the difference between what I see and what is really there. “What I am seeing could be a figment of my imagination.” But to imagine that what is really there could be a computer simulation of which I myself am a part (i.e., symbols manipulated by computer hardware, symbols that are interpretable as the reality I am seeing, as if I were in a VR) is to imagine that the figment could be the reality – which is simply incoherent, circular, self-referential nonsense.

- Hermeneutics. Those who think this way have become lost in the “hermeneutic hall of mirrors,” mistaking symbols that are interpretable (by their real minds and real senses) as reflections of themselves for their real selves, just as one might mistake the simulated ice cube for a “real” ice cube.
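The Turing machine mentioned under the Weak Church/Turing Thesis can be made concrete. The following is a minimal Python sketch, not drawn from the original text: the function name `run_turing_machine`, the rule table `INCREMENT_RULES` and the choice of binary increment as the task are all illustrative assumptions. The rules match only on the current state and the shape of the symbol under the head; reading the tape as a binary numeral being incremented is our interpretation, not the machine's.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical rule table: (state, symbol read) -> (symbol to write, head move, next state).
# The rules mention only symbol shapes ("0", "1", "_"), never what they stand for.
INCREMENT_RULES = {
    ("carry", "1"): ("0", -1, "carry"),  # write 0 and keep carrying to the left
    ("carry", "0"): ("1", 0, "halt"),    # write 1 and stop
    ("carry", BLANK): ("1", 0, "halt"),  # ran off the left end: write a new leading 1
}


def run_turing_machine(rules, tape_string, state="carry", max_steps=10_000):
    """Run a one-tape machine with the head starting on the rightmost symbol."""
    tape = defaultdict(lambda: BLANK, enumerate(tape_string))  # unbounded tape
    head = len(tape_string) - 1
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]  # read, then look up the rule
        tape[head] = write                                # write
        head += move                                      # advance (or hold) the head
        steps += 1
    # Return the non-blank stretch of tape, left to right.
    marked = [i for i, symbol in tape.items() if symbol != BLANK]
    return "".join(tape[i] for i in range(min(marked), max(marked) + 1))


print(run_turing_machine(INCREMENT_RULES, "1011"))  # prints 1100
```

Swapping "0", "1" and "_" for any three other distinct shapes, consistently throughout the rules and the input, would leave the computation unchanged; only our interpretation of the output depends on which shapes mean what.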

Slime molds are certainly interesting, both as the origin of multicellular life and the origin of cellular communication and learning. (When I lived at the Oppenheims’ on Princeton Avenue in the 1970s they often invited John Tyler Bonner to their luncheons, but I don’t remember any substantive discussion of his work during those luncheons.)

The NOVA video was worth watching despite the OOH-AAH style of presentation (especially the narrators’ prosody and intonation, which I found really irritating and intrusive): the content was interesting, once it was de-weaseled of its empty buzzwords, like “intelligence,” which means nothing (really nothing) other than the capacity to learn (a capacity shared by biological organisms, artificial devices and running computational algorithms).

The trouble with weasel-words like “intelligence,” is that they are vessels inviting the projection of a sentient “mind” where there isn’t, or need not be, a mind. The capacity to learn is a necessary but certainly not a sufficient condition for sentience, which is the capacity to feel (which is what it means to have a “mind”).

Sensing and responding are not sentience either; they are just mechanical or biomechanical causality: Transduction is just converting one form of energy into another. Both nonliving (mostly human synthesized) devices and living organisms can learn. Learning (usually) requires sensors, transducers, and effectors; it can also be simulated computationally (i.e., symbolically, algorithmically). But “sensors,” whether synthetic or biological, do not require or imply sentience (the capacity to feel). They only require the capacity to detect and do.

And what sensors and effectors can (among other things) do, is to learn, which is to change in what they do, and can do. “Doing” is already a bit weaselly, implying some kind of “agency” or agenthood, which again invites projecting a “mind” onto it (“doing it because you feel like doing it”). But having a mind (another weasel-word, really) and having (or rather being able to be in) “mental states” really just means being able to feel (to have felt states, sentience).

And being able to learn, as slime molds can, definitely does not require or entail being able to feel. It doesn’t even require being a biological organism. Learning can (or will eventually be shown to be able to) be done by artificial devices, and to be simulable computationally, by algorithms. Doing can be simulated purely computationally (symbolically, algorithmically) but feeling cannot be, or, otherwise put, simulated feeling is not really feeling any more than simulated moving or simulated wetness is really moving or wet (even if it’s piped into a Virtual Reality device to fool our senses). It’s just code that is interpretable as feeling, or moving or wet.

But I digress. The point is that learning capacity, artificial or biological, does not require or entail feeling capacity. And what is at issue in the question of whether an organism is sentient is not (just) whether it can learn, but whether it can feel.
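The claim that learning can be simulated purely algorithmically can be illustrated with a toy example. Below is a minimal Python sketch of habituation, a simple form of learning in which a response weakens with repeated harmless exposure. The class `HabituatingResponder`, its parameters and its update rule are hypothetical choices made for illustration, not a model of any particular slime-mold experiment. The code changes what it does as a function of its history, which is all that learning requires; nothing in it feels anything.

```python
from dataclasses import dataclass


@dataclass
class HabituatingResponder:
    """Hypothetical toy learner: its response to a stimulus weakens with repeated
    harmless exposure and slowly recovers when the stimulus is absent."""
    response: float = 1.0   # current response strength (1.0 = full avoidance)
    decay: float = 0.7      # multiplicative drop after each harmless exposure
    recovery: float = 0.05  # fraction of lost strength regained per quiet step

    def step(self, stimulus_present: bool) -> float:
        """Return this step's output, then update the internal state."""
        output = self.response if stimulus_present else 0.0
        if stimulus_present:
            self.response *= self.decay                              # habituate
        else:
            self.response += self.recovery * (1.0 - self.response)  # recover
        return output


responder = HabituatingResponder()
print([round(responder.step(True), 3) for _ in range(4)])  # [1.0, 0.7, 0.49, 0.343]
```

The multiplicative decay and linear recovery are arbitrary; any rule that makes future output depend on past input would serve equally well as an instance of (unfelt) learning.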

Slime mold — amoebas that can transition between two states, single cells and multicellular — is extremely interesting and informative about the evolutionary transition to multicellular organisms, cellular communication, and learning capacity. But there is no basis for concluding, from what they can do, that slime molds can feel, no matter how easy it is to interpret the learning as mind-like (“smart”). They, and their synthetic counterparts, have (or are) an organ for growing, moving, and learning, but not for feeling. The function of feeling is hard enough to explain in sentient organisms with brains, from worms and insects upward, but it becomes arbitrary when we project feeling onto every system that can learn, including root tips and amoebas (or amoeba aggregations).

I try not to eat any organism that we (think we) know can feel — but not any organism (or device) that can learn.

Dale Jamieson’s heart is clearly in the right place, both about protecting sentient organisms and about protecting their insentient environment.

Philosophers call deserving such protection “meriting moral consideration” (by humans, of course).

Dale points out that humans have followed a long circuitous path — from thinking that only humans, with language and intelligence, merit moral consideration, to thinking that all organisms that are sentient (hence can suffer) merit moral consideration.

But he thinks sentience is not a good enough criterion. “Agency” is required too. What is agency? It is being able to do something deliberately, and not just because you were pushed.

But what does it mean to be able to do something deliberately? I think it’s being able to do something because you feel like it rather than because you were pushed (or at least because you feel like you’re doing it because you feel like it). In other words, I think a necessary condition for agency is sentience.

Thermostats and robots and microbes and plants can be interpreted by humans as “agents,” but whether humans are right in their interpretations depends on facts – facts that, because of the “other-minds problem,” humans can never know for sure: the only one who can know for sure whether a thing feels is the thing itself.

(Would an insentient entity, one that was only capable of certain autonomous actions, such as running away or defending itself if attacked, but never felt a thing, merit moral consideration? To me, with the animal kill counter registering staggering and ever-growing numbers of human-inflicted horrors on undeniably sentient nonhuman victims every second of every day, worldwide, it is nothing short of grotesque to be theorizing about “insentient agency.”)

Certainty about sentience is not necessary, however. We can’t have certainty about sentience even for our fellow human beings. High probability on all available evidence is good enough. But then the evidence for agency depends on the evidence for sentience. It is not an independent criterion for moral consideration; just further evidence for sentience. Evidence of independent “choice” or “decision-making” or “autonomy” may count as evidence for “agency,” but without evidence for sentience we are back to thermostats, robots, microbes and plants.

In mind-reading others, human and nonhuman, we do have a little help from Darwinian evolution and from “mirror neurons” in the brain that are active both when we do something and when another organism, human or nonhuman, does the same thing. These are useful for interacting with our predators (and, if we are carnivores, our prey), as well as with our young, kin, and kind (if we are K-selected, altricial species who must care for our young, or social species who must maintain family and tribal relationships lifelong).

So we need both sentience-detectors and agency-detectors for survival.

But only sentience is needed for moral consideration.

was enough to confirm

the handwriting on the wall

of the firmament

– at least for one unchained biochemical reaction in the Anthropocene,

in one small speck of the Universe,

for one small speck of a species,

too big for its breeches.

The inevitable downfall of the egregious upstart

would seem like fair come-uppance

were it not for all the collateral damage

to its countless victims,

without and within.

But is there a homology

between biological evolution

and cosmology?

Is the inevitability of the adaptation of nonhuman life

to human depredations

— until the eventual devolution

or dissolution

of human DNA —

also a sign that

humankind

is destined to keep re-appearing,

elsewhere in the universe,

along with life itself?

and all our too-big-for-our-breeches

antics?

I wish not.

And I also wish to register a vote

for another mutation, may its tribe increase:

Zombies.

Insentient organisms.

I hope they (quickly) supplant

the sentients,

till there is no feeling left,

with no return path,

if such a thing is possible…

But there too, the law of large numbers,

combinatorics,

time without end,

seem stacked against such wishes.

Besides,

sentience

(hence suffering),

the only thing that matters in the universe,

is a solipsistic matter;

the speculations of cosmologists

(like those of ecologists,

metempsychoticists

and utilitarians)

— about cyclic universes,

generations,

incarnations,

populations —

are nothing but sterile,

actuarial

numerology.

It’s all just lone sparrows,

all the way down.

For ethics, it’s the negative feelings that matter. But determining whether an organism feels anything at all (the other-minds problem) is hard enough without trying to speculate about whether there exist species that can only feel neutral (“unvalenced”) feelings. (I doubt that +/-/= feelings evolved separately, although their valence-weighting is no doubt functionally dissociable, as in the Melzack/Wall gate-control theory of pain.)

The word “sense” in English is ambiguous, because it can mean both felt sensing and unfelt “sensing,” as in an electronic device like a sensor, or a mechanical one, like a thermometer or a thermostat, or even a biological sensor, like an in-vitro retinal cone cell, which, like photosensitive film, senses and reacts to light, but does not feel a thing (though the brain it connects to might).

To the best of our knowledge so far, the phototropisms, thermotropisms and hydrotropisms of plants, even the ones that can be modulated by their history, are all like that too: sensing and reacting without feeling, as in homeostatic systems or servomechanisms.

Feel/feeling/felt would be fine for replacing all the ambiguous s-words (sense, sensor, sensation…) and dispelling their ambiguities.

(Although “feeling” is somewhat biased toward emotion (i.e., +/- “feelings”), it is the right descriptor for neutral feelings too, like warmth, movement, or touch, which only become +/- at extreme intensities.)

The only thing the f-words lack is a generic noun for “having the capacity to feel” as a counterpart for the noun sentience itself (and its referent). (As usual, German has a candidate: Gefühlsfähigkeit.)

And all this, without having to use the weasel-word “conscious/consciousness,” for which the f-words are a healthy antidote, to keep us honest, and coherent…

1. Computation is just the manipulation of arbitrary formal symbols, according to rules (algorithms) applied to the symbols’ shapes, not their interpretations (if any).

2. The symbol-manipulations have to be done by some sort of physical hardware, but the physical composition of the hardware is irrelevant, as long as it executes the right symbol manipulation rules.

3. The symbols need not be interpretable as meaning anything (there can be a Turing Machine that executes a program that is absolutely meaningless, like Hesse’s “Glass Bead Game”), but computationalists are mostly interested in interpretable algorithms: ones that can be given a coherent, systematic interpretation by the user.

4. The Weak Church/Turing Thesis is that computation (symbol manipulation, like a Turing Machine) is what mathematicians do: symbol manipulations that are systematically interpretable as the truths and proofs of mathematics.

5. The Strong Church/Turing Thesis (SCTT) is that almost everything in the universe can be simulated (modelled) computationally.

6. A computational simulation is the execution of symbol-manipulations by hardware in which the symbols and manipulations are systematically interpretable by users as the properties of a real object in the real world (e.g., the simulation of a pendulum or an atom or a neuron or our solar system).

7. Computation can simulate only “almost” everything in the world, because — symbols and computations being digital — computer simulations of real-world objects can only be approximate. Computation is merely discrete and finite, hence it cannot encode every possible property of the real-world object. But the approximation can be tightened as closely as we wish, given enough hardware capacity and an accurate enough computational model.

8. One of the pieces of evidence for the truth of the SCTT is the fact that it is possible to connect the hardware that is doing the simulation of an object to another kind of hardware (not digital but “analog”), namely, Virtual Reality (VR) peripherals (e.g., real goggles and gloves) which are worn by real, biological human beings.

9. Hence the accuracy of a computational simulation of a coconut can be tested in two ways: (1) by systematically interpreting the symbols as the properties of a coconut and testing whether they correctly correspond to and predict the properties of a real coconut or (2) by connecting the computer simulation to a VR simulator in a pair of goggles and gloves, so that a real human being wearing them can manipulate the simulated coconut.

10. One could, of course, again on the basis of the SCTT, computationally simulate not only the coconut, but the goggles, the gloves, and the human user wearing them — but that would be just computer simulation and not VR!

11. And there we have arrived at the fundamental conflation (between computational simulation and VR) that is made by sci-fi enthusiasts (like the makers and viewers of The Matrix and the like, and, apparently, David Chalmers).

12. Those who fall into this conflation have misunderstood the nature of computation (and the SCTT).

13. Nor have they understood the distinction between appearance and reality – the one that’s missed by those who, instead of just worrying that someone else might be a figment of their imagination, worry that they themselves might be a figment of someone else’s imagination.

14. Neither a computationally simulated coconut nor a VR coconut is a coconut, let alone a pumpkin in another world.

15. Computation is just semantically-interpretable symbol-manipulation (Searle’s “squiggles and squoggles”): a symbolic oracle. The symbol manipulation can be done by a computer, and the interpretation can be done in a person’s head; or the output can be transmitted (causally linked) to dedicated (non-computational) hardware, such as a desk calculator, a computer screen or VR peripherals, allowing users’ brains to perceive it through their senses rather than just through their thoughts and language.

16. In the context of the Symbol Grounding Problem and Searle’s Chinese-Room Argument against “Strong AI,” to conflate interpretable symbols with reality is to get lost in a hermeneutic hall of mirrors. (That’s the locus of Chalmers’s “Reality.”)
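Items 6 and 7 (simulating a pendulum, and tightening the approximation with more computation) can be illustrated with a toy case. The Python sketch below is an illustrative assumption, not anything from the text: it steps an idealized small-angle pendulum forward in discrete time with semi-implicit Euler, and uses the closed-form solution θ0·cos(ωt) as a stand-in for the "real" pendulum (itself only a model). The function name `max_error_small_angle_pendulum` and its parameters are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed constant)


def max_error_small_angle_pendulum(theta0, length, t_end, dt):
    """Simulate a small-angle pendulum with semi-implicit Euler steps of size dt and
    return the largest deviation from the closed-form solution theta0*cos(omega*t)."""
    omega = math.sqrt(G / length)
    theta, velocity = theta0, 0.0  # released from rest at angle theta0 (radians)
    worst = 0.0
    for n in range(1, round(t_end / dt) + 1):
        velocity -= omega**2 * theta * dt  # angular acceleration is -omega^2 * theta
        theta += velocity * dt             # update angle using the new velocity
        exact = theta0 * math.cos(omega * n * dt)
        worst = max(worst, abs(theta - exact))
    return worst


if __name__ == "__main__":
    for dt in (0.1, 0.01, 0.001):
        err = max_error_small_angle_pendulum(0.1, 1.0, 10.0, dt)
        print(f"dt = {dt:<5}  max error = {err:.1e} rad")
```

At every step size the simulation remains discrete and finite; a smaller `dt` only buys a closer approximation at the price of more steps, which is the "enough hardware capacity" of item 7.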

Exercise for the reader: Does Turing make the same conflation in implying that everything is a Turing Machine (rather than just that everything can be simulated symbolically by a Turing Machine)?

The other-minds problem in other species

Long Summary: Both ethology and comparative psychology have long been focused on behavior. The ethogram, like the reinforcement schedule, is based on what organisms do rather than what they feel, because what they do can be observed and what they feel cannot.

In philosophy, this is called the “Other-Minds Problem”: The only feelings you can feel are your own. The rest is just behavior, and inference. Our own species does have one special behavior that can penetrate the other-minds barrier directly (if we are to be believed): We can say what’s on our mind, including what we feel. No other species has language. Many can communicate non-verbally, but that’s showing, not telling.

In trying to make do with inferences from behavior, the behavioral sciences have been at pains to avoid “anthropomorphism,” which is attributing feelings and knowledge to other species by analogy with how we would be feeling if we were doing what they were doing under analogous conditions. Along with Lloyd Morgan’s Canon – that we should not make a mentalistic inference when a behavioral one will do – deliberately abstaining from anthropomorphism has narrowed our inroads into the other-minds problem still further. We seek “operational measures” to serve as proxies for what our own native mammalian mind-reading capacities tell us is love, hunger, fear.

Yet our human mind-reading capacities – biologically evolved for care-giving to our own progeny as well as for social interactions with our kin and kind – are really quite acute. That (and not just language) is why we do not rely on ethograms or inferences from operational measures in our relations with one another.

But what about other species? And whose problem is the “Other-Minds Problem”? Philosophers think of it as our problem, in making inferences about the minds of our own conspecifics. But when it comes to other species, and in particular our interactions with them, surely it is their problem if we misinterpret or fail to detect what or whether they are feeling.

We no longer think, as Descartes did, that all nonhuman species are insentient zombies, to do with as we please – at least not in the case of mammals and birds. But, as I will show, we are still not sure in the case of fish, other lower vertebrates, and invertebrates. Debates rage; and if we are wrong, and they do feel, the ones suffering a monumental problem are trillions and trillions of fish (and other marine species). Ethologists have been using ingenious means to demonstrate (operationally) what to any human who has seen a fish struggling on a hook (or a lobster in a boiling pot) is already patently obvious. For such extreme cases some have urged inverting Morgan’s Canon and instead adopting the “Precautionary Principle”: giving the other minds the benefit of the doubt unless there is proof to the contrary.

Yet even with mammals, whose sentience is no longer in doubt, there are problematic cases. Applied Ethology is concerned with balancing the needs of other species with the needs of our own. Everyone agrees that sentient species should not be hurt needlessly.

Most people today consider using animals for food or science a matter of vital necessity for our species. (It is not clear how right they are in the case of food, at least in developed economies, and we all know that not everything done in the name of science is vitally necessary — but I won’t call into question either of these premises on this occasion.)

Entertainment, however, is clearly not a matter of vital necessity. Yet some forms of entertainment entail hurting other species that are known to be sentient. The rodeo is a prominent example. I will close by describing the results of the analysis of a unique and remarkable database gathered in order to test rodeo practices in Quebec in light of a new law that accords animals the status of “sentient beings with biological needs” who may no longer be “subjected to abuse or mistreatment that may affect their health” (except in the food industry and in scientific research).

I invite applied ethologists and veterinarians who wish to help protect animals from this to contact me at harnad@uqam.ca about helping to analyze this unprecedented database of 3 x 45 hours of continuous video evidence on the 20 rodeos in light of the new sentience law.

It is an honor to be here at the University of Prince Edward Island to give the Wood-Gush Memorial Lecture. I did not know David Wood-Gush, but by all accounts he was a remarkable person, for his personal courage, his productivity, and the affection and admiration he inspired in all. The following words — from one of his last writings before his untimely death in 1992 — go to the heart of the problem that I will be talking to you about today:

“In the late 50s and early 60s profound changes took place in the structure of the poultry industry in the USA: … The age of ‘Big is Beautiful’ had arrived… Sociologically it was not pretty and from the animal welfare point of view it was disastrous.”

Disastrous for them, not for us. And it applied and applies to many, many other species besides chickens. I think David Wood-Gush would have been appalled to see what is documented in Dominion, which has just been released, and which I urge all who care about the well-being of the creatures under our dominion to see, and then to proclaim from the rooftops.

Ethology prides itself on being a behavioral science: the science that tries to predict and explain what organisms can and do do. My own field, cognitive science, is also a behavioral science. But behavior is what goes on outside the organism, and cognition is what goes on inside. This does not mean that cognitive science is just neuroscience – although there is indeed cognitive neuroscience, and what is going on inside the organism is indeed neural activity. But cognitive science is wider than neuroscience in that it is trying to discover the internal causal mechanisms that generate organisms’ behavior, and their behavioral capacity: what they do, and how they manage to do it.

Those causal mechanisms are undoubtedly neural, but that doesn’t mean that by just peeking and poking in the brain, even with today’s remarkable tools for neuroimaging, we will simply be able to read off the causal mechanisms from the neural data, as we were able to do with the other organs of the body, like the heart, the kidneys or the liver. This is because the brain does, and can do, everything that we can do. That is how the computer found its way into cognitive science (thanks to Alan Turing). We use the computer to design and test causal models to see whether they can indeed do what the brain can do, and if so, how. That is the method underlying what has been dubbed the “Turing Test.”

This pursuit of causal explanation has come to be called the “easy problem” of cognitive science, although it is anything but easy, and its solution is still countless years and Nobel Prizes away. But if finding the causal mechanisms that generate our behavioral capacity is the “easy problem” of cognitive science, what is the “hard problem”?

To answer this we first have to remind ourselves that behaving is not the only thing that organisms do. They also feel, they are sentient – or at least some of them are. We can observe their behavior, but we can only infer their feelings. In fact, determining whether an organism feels – and if so, what it feels – is another problem of cognitive science, not the “easy” problem of discovering the causal mechanisms that generate behavior, but not yet the hard problem either: Determining which organisms feel, and if so, what they feel, is called the “other-minds problem.” The other-minds problem is inherited from Philosophy, but it is anything but a merely “philosophical problem” (whatever that means).

The other-minds problem is about the only things that really matter in the universe, either materially or morally.

That was quite a mouthful.

This talk is about the other-minds problem.

But first let me dispel the mystery about what is the “hard problem” – the problem that is not going to be addressed in this talk. If the easy problem of cognitive science is the problem of explaining, causally, how and why organisms are able to do all the things they are able to do, the hard problem is to explain, causally, how and why organisms are able to feel, rather than just do whatever they are able to do. I am sure many of you feel that you have a simple and obvious solution to the hard problem. Let me defer that to the question/answer period after this talk, with just the suggestion that it’s not called the hard problem for nothing. So your solution almost certainly does not work. And the reason for that is, in fact, the “easy problem,” whose solution looks as if it will make feeling causally superfluous. For once we have fully explained the causal mechanism that generates organisms’ behavioral capacities (their capacity to do all the things they can do: move, sense, remember, learn, even speak), there are no causal degrees of freedom left to explain how and why organisms feel, rather than just do whatever needs doing (in order to survive and reproduce).

Which brings us back to the other-minds problem. Behavior, doing, is observable and measurable. Feeling is not. So how can we know whether and what another organism feels?

Let’s start with our own species: The obvious answer is that if we want to find out whether another person is feeling something, and what they are feeling, we ask. But what about all the species that cannot speak? Or even human infants, or handicapped people who cannot speak or understand? The answer is that, when it comes to our own species, humans have a powerful biological capacity, which has lately been dubbed “mind-reading,” a capacity which might or might not be grounded in the activity of what are called “mirror neurons.”

Mind-reading is the capacity to detect what others are thinking and feeling without having to ask. Mirror neurons are active both when I perform a movement and when you perform that same movement. The link is between my seeing you do something, and my knowing what it feels like to do that same thing, to the point of being able to imitate it. No one knows yet how mirror neurons manage to do this, but, if you think about it, we already knew that our brains could imitate movements, somehow, because we can do it.

So can other animals that imitate, such as songbirds and parrots, as well as the apes, elephants, dolphins and magpies who can recognize themselves in the mirror.

The obvious link between mirroring doings and mirroring feelings is the link between an observable behavior by another body and the internal state which generates that behavior in my own body.

Some have even tried to relate the capacity for empathy to mirror neurons. Empathy is not unique to humans either, as we see across many mammalian and avian species, in the parental care of their young, in pair-bonding and in social behavior. It also makes little difference – insofar as mind-reading capacity is concerned – whether social perception is inborn or learned. The critical thing is the link between what the observed animal is doing and what the observing animal is feeling (and eventually doing). In the case of prosocial behaviors, the link is positive; I want to help. In the case of aggression or fear, the link is negative; I want to attack, or flee. Humans are capable of perceiving the emotions underlying the facial expressions, vocalizations and movements of other humans; they are also capable of feigning them, when they’re not feeling them. That may be unique to humans. But the ability to detect and respond to the other’s internal state is not unique to humans.

At this point the notion of “anthropomorphism” of course immediately comes to mind: Both ethologists and comparative psychologists have been firmly taught to refrain from projecting their own mental states onto nonhuman animals: Abide by Lloyd Morgan’s Canon. Never mind the mind-reading; stick to behavior-reading. Forget about whether or what the animal might be feeling or thinking. Just observe and predict what the animal is doing, with the help of the ecological context, the ethogram, and evolutionary theory. In other words, forget about the other-minds problem and treat animals as if they were highly skilled biorobotic zombies, even though you know they aren’t.

Do we know they aren’t? Of course we do. We know it of our own family animals as surely as we know it of our own preverbal infants. No one invokes Lloyd Morgan’s Canon when it comes to their own babies (although it’s not been that long since surgeons [cf. Derbyshire] dutifully suppressed their anthropomorphism and operated on babies without anesthesia, just as if they had been hewing to Descartes’ assurances, three centuries earlier, that the howls and struggles of the dog tied to the vivisecting table can be safely ignored, because all non-human creatures are just bio-robotic zombies.)

I will return to this. But, thankfully, pediatric surgeons have set aside their compunctions about anthropomorphism in favor of their compunctions about hurting sentient babies; the veterinary profession has done likewise when it comes to operating on mammals and birds. But when it comes to “lower vertebrates” such as reptiles, amphibians and fish, and to invertebrates, we – or rather they (the victims) – are still living in Cartesian times.

Let’s go back to language for a moment. Language is unique to the human species (although communication certainly isn’t). Human language is clearly the most powerful mind-reading tool of all. It’s close to omnipotent. Any thought a human can think – and just about any object a human can observe or any experience a human can have – can be verbalized: described in words, in any language. Not described exactly, because, like a picture, an experience is always worth more than a thousand words; but close enough. Language is grounded in the experiences we all share, and name.

Now the important thing to note is that the relation between words and the objects and experiences to which they refer is also a kind of mirror-neuron function. I could never talk to you about what “red” means if we had not both seen red things and named them “red.” Then, when you talk about something that’s red, I know what you have in mind – as surely as I know what you’re doing when you reach for an out-of-reach apple, or when you scowl at me. And preverbal infants know some of these things too. It’s those adept mirror neurons again. What about nonhuman species?

Some of you may be familiar with the “Sally/Anne” “mind-reading” experiments that are now widely used in human developmental psychological research on children.

You may be surprised to learn that the Sally/Anne experiments were inspired by an article written 40 years ago, entitled “Does the Chimpanzee Have a Theory of Mind?”, by David Premack, a former behaviorist, and his co-author Guy Woodruff. Premack’s article was published in 1978 in the inaugural volume of a new journal called Behavioral and Brain Sciences (or BBS), of which I happened to be the editor at the time.

BBS is a rather influential, multidisciplinary journal specializing in so-called “target articles” that have broad implications across multiple fields. The target articles are accepted only on condition that the referees and editor judge them to be important enough to be accorded “Open Peer Commentary,” which means that as many as a dozen or even two dozen specialists from across disciplines and around the world each write a 1000-word commentary – a mini-article, really – supplementing, criticizing or elaborating on the content of the target article. Premack’s target article drew a good deal of attention across disciplines and around the world. It elicited 43 commentaries followed by the author’s Response. Premack’s clever series of experiments was the first behavioral evidence that apes can and do infer what other apes – as well as humans – have in mind.

So if apes can and do read minds when they are studying one another, or studying us, why would we feel we have to deny and ignore our own mind-reading capacity as “unscientific anthropomorphism” when we are studying them? Owing to our extremely long period of parental dependency, our extreme sociality and social interdependency, and probably also our long period of co-evolution with domesticated animals, our species is probably the mind-reading champion of the biosphere. And not just because of the unique language capacity that we use to mind-read one another verbally. More likely, our language capacity itself is somehow built on the pre-verbal mind-reading capacity and mirror neurons that we share with other species.

So this is a good time to ask ourselves – especially if we are applied ethologists – why, when it comes to the minds of other species, we self-impose a methodological agnosticism on the dictates of our evolved mind-reading gifts, ignoring them as unscientific anthropomorphism?

It’s not that the other-minds problem is not a real problem. There is no way to know for sure whether any other organism on the planet, including my own conspecifics, feels at all. As already mentioned, they could all be insentient biorobotic zombies, doing but not feeling. There’s no way to prove – either mathematically or experimentally – that others feel, rather than just do, any more than there is any way to prove, mathematically, that apples fall to earth because of the pull of the inverse square law of gravity (or, for that matter, whether they will keep falling down rather than up – or even whether apples or the earth exist at all). But in science we don’t demand that unobservable truths should be provable as necessarily true, on pain of contradiction, as in mathematics. We demand only that they should be highly probable given the available observational and experimental evidence.

Each of us does know (with the help of Descartes’s Cogito) that it is not just probable but certain that we are feeling, even though we cannot be sure that any other organism feels. I know I am not a zombie. But it would be colossally improbable that I am the only individual on the planet who feels. Hence we accept the dictates of our mind-reading capacity when it comes to our own species.

So we know that other people feel too – and we often have a pretty good idea of what they are feeling. We also know that our mind-reading skills are far from infallible, even if we are the world champions at mind-reading, not only because people can feign feelings nonverbally or lie about their feelings verbally, but because even in the best of times, our mirror neurons can lead us astray about what’s actually going on in another’s mind. And when it’s people we are mind-reading, we certainly cannot blame our mind-reading failures on anthropomorphism! (Think about it.)

So with other humans, when words cannot be trusted, our mind-reading intuitions do need to be tested behaviorally, and sometimes even physiologically (think of lie-detectors, and neural imaging), when the question is about what it is, exactly, that another person is feeling.

But when it comes to the question of whether other people are feeling at all, rather than just what they are feeling, the only uncertainty is in cases of complete paralysis, coma or general anesthesia, where there is neither behavioral nor verbal evidence to go by. Then we rely on physiological and neurophysiological correlates of feeling, which have themselves been validated against behavior, including verbal behavior.

But we are the only verbal species. What about all the other species? As our test case, let’s focus on a feeling that really matters – not warm/cool, light/dark, loud/soft, smooth/bumpy, sweet/sour, itches or tickles, but hurts: in other words, pain. But bear in mind that what I am about to say about pain applies to any other feeling too. There is a distinction made in pain theory between “nociception” and “pain.” Nociception is defined as detecting and responding to tissue damage, and pain is defined as what is felt when something hurts. The very same distinction can be made about thermoception versus feeling warmth or photodetection versus seeing light. There is a difference between just responding physiologically to stimulation and feeling something. One is doing, the other is feeling.

I’ve already noted that when it comes to mammals and birds we are no longer living in Cartesian times. I don’t know whether it’s really true that Descartes insisted it was fine to operate on conscious animals because they were just reflex robots and their struggles did not mean a thing: they were just doing, not feeling. But he did invoke the other-minds problem – the genuine uncertainty about whether anything other than oneself feels. The fact is that we cannot know whether anyone else feels: we cannot feel their feelings. We can just see their doings. Descartes was ready to allow human language and reasoning to resolve that uncertainty, with the help of some theology and some pseudo-physiology about the pineal gland. So humans were granted the benefit of the doubt.

It has taken centuries more to accord the benefit of the doubt to nonhuman animals. No doubt Darwin had a good deal to do with the change, showing that we too are animals, sharing common lines of descent. But there’s no doubt that anthropomorphism – our powerful, evolved mind-reading capacity – played a big role too. Other mammals are just too obviously like us to fail to excite the same mirror neurons that our own young do, for example. And the effect applies to other species too, as we see in the many interspecies adoptions burgeoning on YouTube. Birds seem to fall within the “receptive fields” of those same neurons too; and indeed there are many cases of bird/mammal and mammal/bird cross-adoptions.

But when it comes to the “lower vertebrates” – reptiles and amphibians, and especially fish – the Cartesian doubt is still there; and it becomes even more pronounced with invertebrates such as insects, shellfish, snails and clams, despite the celebrated anthropomorphic blip in the special case of the octopus.

This is the point to ask ourselves: Why does it matter? What difference does it make whether or not fish feel? The answer is instructive. Why does anything at all matter? Would anything matter in an insentient world of only stars and planets and electrons and photons? Matter to whom? Or, for that matter, would anything matter in a world of bio-zombies? Darwinian survival machines who only do, but do not feel? Again, matter to whom?

(This may sound fluffy to you, but I ask you to think about it. It really is the essence of the other-minds problem in other species – and of welfare “science” itself.)

In a world with people, we know the answer: what matters is what matters to us. And we do feel.

But in the biosphere, where we are not the only sentient species, it matters to them – to the other species, to the other minds – if we think they don’t feel, when in reality they do. The reason I didn’t choose the feeling of warmth or light or itch or tickle as the test case but the feeling of pain was to set the right intuition for our mirror neurons, about what matters and why.

Fish are still today where all other species were in Cartesian times. Is it because our anthropomorphism, our mirror neurons, have failed us completely in their case? I don’t think so, any more than I think they failed Descartes in the seventeenth century for mammals and birds. Descartes saw animals’ struggles and felt their suffering, just as we do today. But alongside mind-reading and language, our species has another powerful capacity, and that is the capacity to over-ride our feelings with reasons. And that includes philosophers and scientists:

In the seventeenth century, Descartes was in the grip of a theory, his own theory, according to which only human beings could reason, and that reasoning was a god-given gift, along with an immaterial, immortal soul, delivered by god via the pineal gland. And all of that somehow implied – for reasons that I personally find rather obscure – that therefore only human beings could feel and that all other animals were just reflexive robots: zombies, despite appearances. That “despite appearances” clause was the denial of the dictates of our mirror neurons, in favor of the dictates of “reason,” or rather, in this case, Descartes’ reasoning.

Reasoning, however, is a two-edged sword: Only in formal mathematics is reasoning logical deduction, with formal proofs verifiable by all. In other fields it is more like the reasoning that goes on in law courts, where there is the question of the burden of proof (which means the burden of evidence). In criminal law, people are assumed innocent until proven guilty; the burden of proof is on those who seek to reject the hypothesis of innocence, not the hypothesis of guilt. We will come back to this.

In science, the story is a bit more complicated. In statistics, as most of you will know, when two populations are being compared on some measure, the “null hypothesis” is that any observed difference between the two populations is just due to chance, unless statistical analysis shows that the observed difference is highly improbable on the basis of chance, in which case the null hypothesis can be rejected. The analysis usually consists of comparing the variability within the populations to the variability between the populations. The burden of proof is on those who seek to reject the null hypothesis. (The first thing to reflect on, then, is this: what is the null hypothesis when it comes to sentience in other species? Is it sentience or insentience?) Remember that it was language and our evolved mirror neurons that answered this question in the case of our own species, and in the case of the species most like us, mammals and birds. We are now moving into more uncertain territory for our mirror neurons: fish, and lower. Most people are still sceptical about the tortoise in the upper left corner.
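For readers who want to see what “comparing the variability within the populations to the variability between the populations” amounts to in practice, here is a minimal sketch of one common version of that comparison, the one-way analysis-of-variance F ratio. The talk does not name a particular test; the choice of ANOVA, the function name `f_ratio`, and the sample numbers are illustrative assumptions, not data from any study.

```python
from statistics import mean


def f_ratio(groups):
    """One-way ANOVA F statistic for a list of samples (one list per population).

    Illustrative sketch: the ratio of between-group to within-group variability.
    Assumes at least two groups and more observations than groups.
    """
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)

    # Variability BETWEEN populations: how far each group mean lies from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variability WITHIN populations: scatter of each observation around its own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    ms_between = ss_between / (k - 1)  # mean square between groups
    ms_within = ss_within / (n - k)    # mean square within groups (must be nonzero)
    return ms_between / ms_within


# Two made-up samples, for illustration only.
print(f_ratio([[1, 2, 3], [4, 5, 6]]))  # 13.5: group means far apart relative to the scatter
print(f_ratio([[1, 2, 3], [1, 2, 3]]))  # 0.0: identical groups, no between-group difference
```

A large F means the populations differ by much more than their internal scatter would lead one to expect by chance, which is what licenses rejecting the null hypothesis. Turning F into a probability requires the F distribution (for example via `scipy.stats.f_oneway`), which this sketch deliberately leaves out.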

In science in general, as opposed to just statistical inference, it is more a matter of the weight of the evidence: If there are two or more hypotheses, the (provisional) winner is the hypothesis with the most evidence supporting it. But rather as in courts of law, hypotheses have advocates, and besides evidence the advocates can also marshal arguments in their favor. And the arguments often concern what evidence counts, and how much it counts: methodological arguments, arguments about interpretation, and so on. (It can sometimes remind you of the O.J. Simpson trial, or of Trump’s Supreme Court.)

Let me use the case of fish pain as an example, starting on a personal note: In 2016, 38 years after the appearance of David Premack’s 1978 article on mind-reading in chimps in Behavioral and Brain Sciences, Brian Key, an Australian neurobiologist, wrote an article in the inaugural volume of a new journal called Animal Sentience. In 2003 I had stepped down after a quarter century as editor of Behavioral and Brain Sciences, swearing I would never edit a journal again. After a quarter century of umpiring papers on behavioral and brain function (both bodily doings, by the way) in animals and humans, I had become increasingly concerned about feelings, that is, sentience, especially the feelings of nonhuman animals.

I was not alone. The scientific community was becoming increasingly concerned too, and so was the general public. In 2012 the Cambridge Declaration on Consciousness and its august signatories solemnly declared (if it needed declaring) that mammals and birds – as well as lower vertebrates, including fish, and even invertebrates such as the octopus – possessed the neural machinery for consciousness (in other words, sentience), even though it differed from ours. In exactly the same week as the Cambridge Declaration, to commemorate the centenary of the birth of Alan Turing, the greatest contributor to the computational approach to solving the “easy problem,” I organized a summer school in Montreal on the Evolution and Function of Consciousness, at which over 50 cognitive scientists, neuroscientists, ethologists, evolutionary biologists and philosophers addressed the “easy problem,” the “hard problem,” and the “other-minds problem.” Among the participants was one of the original commentators on Premack’s paper in 1978, Dan Dennett. [Other notable speakers included Baron-Cohen, Damasio, Searle and Finlay.]

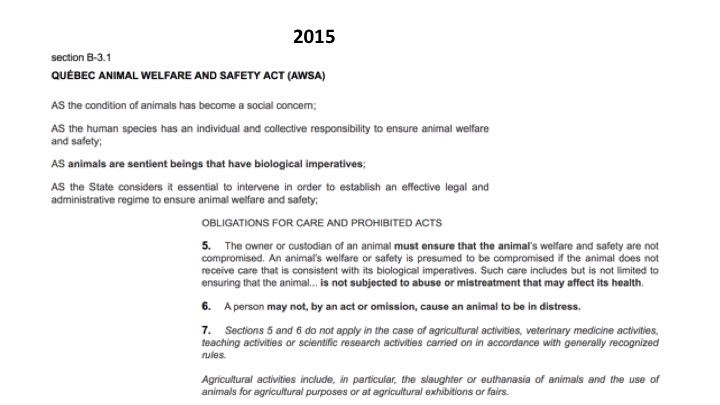

Two years later, in 2014, the manifesto “Les animaux ne sont pas des choses” (“Animals are not things”) was launched in Quebec and quickly gathered over 50,000 signatures. That is a larger proportion of Quebec’s population than the share of France’s population that had signed the French petition which, likewise in 2014, successfully induced France to change the status of animals to “sentient beings.” And then in 2015 Quebec too changed its Civil Code, according animals the status of “sentient beings with biological imperatives.”

What this entails, no one yet knows. Quebec had till then been Canada’s most backward province insofar as animal welfare was concerned. The Quebec Minister of Agriculture announced that the new law had fast-forwarded the province 200 years. I will be closing my talk with what will be this new law’s first test case: Rodeos.

But first, let’s note that Quebec’s new law certainly does not afford any protection to fishes, because it explicitly excludes animals from protection, not on the basis of their species, but on the basis of their uses by humans:

“Sections 5 and 6 do not apply in the case of agricultural activities, veterinary medicine activities, teaching activities or scientific research activities… Agricultural activities include, in particular, the slaughter or euthanasia of animals and the use of animals for agricultural purposes…”

Other such laws were being adopted in other countries as well.

Then in 2016, a lucky 13 years after I had stepped down from the editorship of BBS and resolved never to edit a journal again, I was invited by a long-time BBS Associate and commentator, the veterinarian Andrew Rowan, President of Humane Society International, to edit a new journal on animal sentience. I agreed immediately, pro bono, and, I would add, gratefully, because the human mistreatment of nonhuman animals had become, for me, the thing that mattered most: the most fundamental moral problem of our age.

And in the inaugural volume of Animal Sentience in 2016 there appeared, 38 years after Premack’s seminal target article on mind-reading by chimpanzees, Brian Key’s target article on fish pain. Except that Key was arguing that there wasn’t any. And his target article, too, elicited over 40 commentaries. But unlike Premack’s article, on which the vast majority of commentaries were positive, most of the commentaries on Key were negative.

Key’s arguments and evidence were simple and easy to summarize: Fish can’t talk, so they can’t tell us whether they feel pain. The nonverbal behavioral tests of pain are unreliable and open to interpretation. So the only way to decide whether fish can feel pain is on the basis of pain’s neuroanatomical correlates in humans, as well as in other mammals. And those are lacking in fish. They are lacking in birds too, but, for less clear reasons, Key was willing to agree that birds can feel pain, and that they do so with a different but homologous neural substrate.

The vast majority of the commentators, who included most of the world’s experts on fish behavior, physiology and, importantly, evolution (including Don Broom, who is present at this meeting), disagreed with Key, citing many behavioral tests as well as pharmacological evidence, such as the effects of anesthetics. Let me just point out a few of the other commentators: [Broom, Seth, Striedter, Baluška, Burghardt, Derbyshire, Godfrey-Smith, Panksepp, Sneddon, Damasio, Devor].

Specialists in brain evolution also pointed out that fish do, indeed, have homologues of the neural substrate of pain in mammals and birds, and that it is not at all rare that convergent evolution finds ways to implement functions in structurally different ways in distantly related taxa, especially for a function as fundamental and widespread as pain.

Not only was there stout opposition to Key’s thesis in the first, second and third waves of commentaries, each round eliciting a Response from Key defending his negative thesis equally stoutly, but there have since been two further target articles from specialists on fish behavior and brain function who had been commentators on Key’s target article. This time both target articles supported the positive thesis that fish do feel pain, and elicited their own commentaries, most of them likewise positive. The first, in 2017, was by Michael Woodruff. The second, by a large number of co-authors including Don Broom, was led by Lynne Sneddon.

But the issue is not settled; indeed it cannot be settled beyond all possible doubt, because of the other-minds problem. Note especially the name of Jonathan Birch, to whom I will return in a moment.

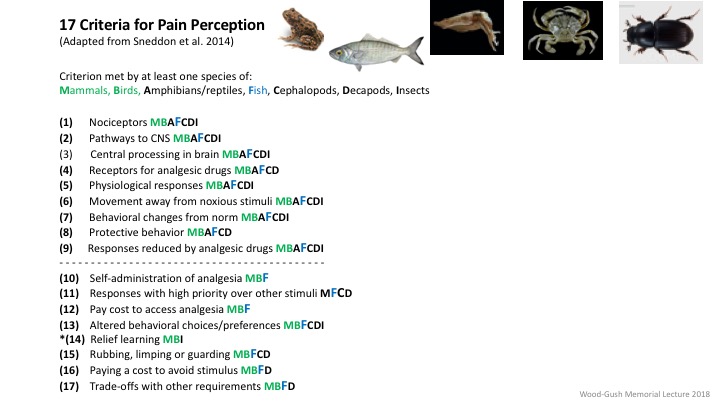

The latest of the commentaries, from only a week ago, by Terry Walters, cites Sneddon’s 2014 paper in which she reviewed the evidence on seventeen criteria for pain perception in seven broad taxa: mammals, birds, amphibians/reptiles, fish, cephalopods, decapods and insects. Note that mammals and birds meet all 17 criteria, and fish already meet all but one of them.

But the other taxa have their champions too, notably the insects. Key is there, as a critic, of course. And Mallatt & Feinberg are writing a book on the evolution of sentience that will be appearing soon. A few weeks ago, at a Summer School on the Other-Minds Problem with over 40 speakers, the problem was also extended to plants and microbes. [Berns, Birch, Burghardt, Wise, Balcombe, Kona-Boun, Marino, Baluska, Keld/Mendl, Simmons]

But lest you conclude that, as the potential scope of sentience widens, the welfare implications of the other-minds problem become either indeterminate or intractable, note that practical principles are also emerging from the debate: In direct response to Key’s target article, the bioethicist Jonathan Birch has proposed adopting a Precautionary Principle, according to which, if one member of a taxon meets the criteria for sentience, the benefit of the doubt should be extended to all members of the taxon, rather than waiting for each species to be tested individually. This is analogous to making the null hypothesis sentience in such cases, which places the burden of proof on those who seek to show that those species are not sentient. There is still much thought to be given to this approach, but Birch has been consulted in the drafting of EU legislation about sentience, especially about extending some of the protections currently accorded to mammals and birds also to fish, as well as to octopuses.

New non-invasive techniques for mind-reading, especially in mammals, are also emerging. These too are being accorded Open Peer Commentary in Animal Sentience. I especially recommend the approach of Greg Berns, who is trying to mind-read dogs, but I also recommend the cautionary remarks of Karen Overall, who is also present at this conference, on the need for many control conditions if we are to mind-read dogs for something as specific as feelings of jealousy. (Note that Peter Singer is, understandably, an enthusiastic commentator at the prospect of non-invasive mind-reading.)

I would like to close with a few words about an important ongoing project – an attempt to put Quebec’s new law on animal sentience and biological imperatives to the test in court on a case that is well on the mammalian end of the spectrum, where there is already consensus on both sentience and the capacity for pain: the rodeo.

Section 5. The owner or custodian of an animal must ensure that the animal’s welfare and safety are not compromised. An animal’s welfare or safety is presumed to be compromised if the animal does not receive care that is consistent with its biological imperatives. Such care includes but is not limited to ensuring that the animal is not subjected to abuse or mistreatment that may affect its health.

Section 6. A person may not, by an act or omission, cause an animal to be in distress.

Section 7. Sections 5 and 6 do not apply in the case of agricultural activities, veterinary medicine activities, teaching activities or scientific research activities

In 2017, Professor Alain Roy, a professor of child and animal law at the University of Montreal, together with 20 law students, drew the (I think) very natural conclusion that for activities that do not fall within the exception noted in Section 7, violations of Section 5 or 6 should mean that the activity is henceforth illegal under Quebec’s new sentience law. Professor Roy accordingly went to court to seek an injunction against an “urban rodeo” that had been planned as part of the celebrations of the 375th anniversary of the City of Montreal. Because of the threat of sizeable financial losses to the rodeo if the event were cancelled, its producer (Festival Western de St-Tite, FWST) and Professor Roy came to a court-certified settlement. In return for not contesting the Montreal anniversary rodeo, Professor Roy would be granted the right to appoint three representatives (a veterinarian, an ethologist and a photographer) to witness and film not just the 4 Montreal rodeos but 16 further rodeos by the same producer. The evidence would then be presented to a Consultative Committee of the Ministry (MAPAQ) in charge of implementing Quebec’s new sentience law, the Animal Welfare and Safety Act (AWSA), to test the legality of the rodeo under the AWSA.

If the evidence demonstrates that the rodeo contravenes the new sentience law, in whole or in part, and MAPAQ does not act, then MAPAQ will be taken to court. What has resulted so far is an unprecedented database of 3 × 45 hours of close-up rodeo video covering all 20 rodeos. Over 3 months, Dr. Jean-Jacques Kona-Boun, the appointed veterinarian, analyzed the video in slow motion; his 600-page report identifies evidence that every one of the rodeo’s 8 categories of events violates Section 5 or Section 6 of Quebec’s new law. I was appointed to the consultative committee, along with a veterinarian and an attorney, to represent the interests of the animals; the rodeo also has three members (an FWST producer, a supplier and a veterinarian), and the Ministry has three members.

We are now seeking ethologists and veterinarians the world over to examine this unprecedented database and Dr. Kona-Boun’s analysis, to add their judgment to whether the evidence indicates that the rodeo is illegal under Quebec’s new law. It is obvious how the outcome may influence further tests of this kind. Several distinguished experts have already reacted to the findings.

I will now show a very brief video with a sample of the kinds of evidence Dr. Kona-Boun identified repeatedly in the 3 × 45 hours of video across the 20 rodeos.

Let me close with the words of David Wood-Gush (1999, p. 22), as applied to the conditions imposed on countless other sentient species by our own:

“from the animal welfare point of view it was disastrous.”

References

Birch, Jonathan (2017) Animal sentience and the precautionary principle. Animal Sentience 16(1)

Cook, Peter; Prichard, Ashley; Spivak, Mark; and Berns, Gregory S. (2018) Jealousy in dogs? Evidence from brain imaging. Animal Sentience 22(1)

Harnad, Stevan (2016a) Animal sentience: The other-minds problem. Animal Sentience 1(1)

______________(2016b) My orgasms cannot be traded off against others’ agony. Animal Sentience 7(18)

Key, Brian (2016) Why fish do not feel pain. Animal Sentience 3(1)

Kona-Boun, Jean-Jacques (2018) Analysis. In Roy, Alain, Plaintiff’s Report, Part II.

Premack, David; and Woodruff, Guy (1978) Does the chimpanzee have a theory of mind? Behavioral and Brain Sciences 1(4): 515-526

Sneddon, Lynne U.; Lopez-Luna, Javier; Wolfenden, David C.C.; Leach, Matthew C.; Valentim, Ana M.; Steenbergen, Peter J.; Bardine, Nabila; Currie, Amanda D.; Broom, Donald M.; and Brown, Culum (2018) Fish sentience denial: Muddying the waters. Animal Sentience 21(1)
