Jerome Iglowitz

8285 Barton Road Granite Bay, California 95746 email: jiglowitz@rcsis.com http://www.foothill.net/~jerryi

Abstract

In this paper I will propose just the simplest part of a three-part hypothesis for a solution of the problem of consciousness.{1} It proposes that the evolutionary rationale for the brains of complex organisms was neither representation nor reactive parallelism as is generally presupposed, but was specifically an internal operational organization of blind biologic process instead. I propose that our cognitive objects are deep operational metaphors of primitive biological response rather than informational referents to environment. I argue that this operational organization was an evolutionary necessity to enable an adroit functioning of profoundly complex metacellular organisms in a hostile and overwhelmingly complex environment. I will argue that this organization was antithetical to a representative role however. I have argued elsewhere{2} that this hypothesis, (in concert with ancillary logical and epistemological hypotheses), opens the very first real possibility for an actual and adequate solution of the problem of "consciousness".

1. REPRESENTATION: THE PERSPECTIVE FROM BIOLOGY

Sometimes we tentatively adopt a seemingly absurd or even outrageous hypothesis in the attempt to solve an impossible problem -and see where it leads. Sometimes we discover that its consequences are not so outrageous after all. I agree with Chalmers that the problem of consciousness is, in fact, "the hard problem". I think it is considerably harder than anyone else seems to think it is however. I think its solution requires new heuristic principles as deep and as profound as, (though different from), the "uncertainty", "complementarity" and (physical) "relativity" that were necessary for the successful advance of physics in the early part of the 20th century. I think it involves an extension of logic as well. Consideration of those deep cognitive principles: "cognitive closure", (Kant and Maturana), "epistemological relativity", (Cassirer and Quine), and of the extension of logic, (Cassirer, Lakoff, Iglowitz), must await another discussion however.{3}

Sometimes it is necessary to walk around a mountain in order to climb the hill beyond. It is the mountain of "representation", and the cliff, (notion), of "presentation" embedded on its very face, which blocks the way to a solution of the problem of consciousness. This hypothesis points out the path around the mountain.

Maturana and Varela's "Tree of Knowledge",{4} is a compelling argument based in the very mechanics of physical science and biology against the possibility of a biological organism's possession of a representative model of its environment. They and other respected biologists, (Freeman, Edelman), argue even against "information" itself. They maintain that information never passes between the environment and organisms; there is only the "triggering" of structurally determinate organic forms. I believe theirs is the inescapable conclusion of modern science.

I will now present a specific and constructive counterproposal for another kind of model however, the "Schematic Operative Model". Contrary to the case of the representative model, it does remain viable within the critical context of modern science. I believe that we, as human organisms, do in fact embody a model. I believe it is the stuff of mind!

2. THE SCHEMATIC MODEL: DEFINITION AND EXAMPLES. (DEFINING "AN OBJECT")

2.1 THE SIMPLEST CASE: A DEFINITION BY EXAMPLE

Look first at very simple models. Consider the models of absolutely mundane training seminars -seminars in a sales organization for instance. "'Motivation' plus 'technique' yields 'sales'.", we might hear at a sales meeting. Or, (escalating just a bit), "'Self-awareness of the masses' informed by 'Marxist-dialectic' produces 'revolution'!", we might hear from our local revolutionary at a Saturday night cell meeting. Visual aids, (models), and diagrams are ever present in these meetings. The lecturer stands at his chalkboard and asks us to accept drawings of triangles, squares, cookies, horseshoes... as meaningful objects -with a "calculus"{5} of relations between them, (arrows, squiggles, et al). The icons, (objects), can be stand-ins for concepts or processes as diverse, (escalating just a bit more), as "motivation", "the nuclear threat", "sexuality", "productivity", and "evolution". The icons need not stand in place of entities in objective reality, however. What is "a productivity" or "a sexuality", for instance? I will argue that the function of objects, as objects in what I call these "schematic models" is instead specifically to illustrate, to enable, -to crystallize and simplify the very calculus of relation proposed between them! {6}

Two different lecturers might invoke different symbols, and a different "calculus" to explicate the same topic however. In analyzing the French Revolution in a history classroom, let us say{7}, a fascist, a royalist, a democrat might alternatively invoke "the Nietzschean superman", "the divine right of kings", "freedom", ... as actual "objects" on his board, (with appropriate symbols). He redistributes certain of the explanatory aspects, (and properties), of a Marxist's entities, (figures) -or rejects them as entities altogether.{8} That which is unmistakably explanatory, ("wealth", let us say), in the Marxist's entities, (and so which must be accounted for by all of them), might be embodied instead solely within the fascist's "calculus" or in an interaction between his "objects" and his "calculus". Thus and conversely the Marxist would, (and ordinarily does), reinterpret the royalist's "God"-figure, (and his –the Marxist’s- admitted function of that "God" in social interaction{9}), as "a self-serving invention of the ruling class". It becomes an expression solely of his "calculus" and is not embodied as a distinct symbol, (i.e. object). Their "objects" -as objects- need not be compatible! As Edelman noted: "certain symbols do not match categories in the world. ... Individuals understand events and categories in more than one way and sometimes the ways are inconsistent."{10}

Figure 1

Figure 2

What is important is that a viable "calculus"-plus-"objects", (a given model), must explain or predict "history". It must be compatible with the phenomena, (in this particular example the historical phenomena). But the argument applies to a much broader scope. I have argued elsewhere,{11} (following Hertz and Cassirer), that the same accounting may be given of competing scientific theories, philosophies, and, indeed, of any alternatively viable explanations.

The existence of a multiplicity of alternately viable calculuses, (sic), and the allowable incommensurability of their objects{12} suggests an interpretation of those "objects" contrary to representation or denotation however. It suggests the converse possibility that the function and the motivation of those objects, specifically as entities, (in what I will call these "schematic models"), is instead to illustrate, to enable, -to crystallize and simplify the very calculus of relation proposed between them!{13} They are "creatures" of the theory; not the converse.

I propose that the boundaries -the demarcations and definitions of these objects, (their "contiguity" if you will)- are formed to meet the needs of the operations. They exist to serve structure- not the converse.{14} I suggest that the objects of these "schematic models" –specifically as objects- serve to organize process, (i.e. analysis or response). They are not representations of actual objects or actual entities in reality.{15} This, I propose, is why they are "things"! These objects functionally bridge reality in a way that physical objects do not. I propose that they are, in fact, metaphors of analysis or response. The rationale for using them, (as any good "seminarian" would tell you), is clarity, organization and efficiency.

Though set in a plebeian context, the "training seminar", (as presented), illustrates and defines the most general and abstract case of schematic non-representative models in that it presumes no particular agenda. It might as well be a classroom in nuclear physics or mathematics, the boardroom of a multinational corporation, -or a student organizing his love life on a scratchpad.

Figure 3 Figure 4

2.2 A CASE OF NON-REPRESENTATION MORE SPECIFIC TO OUR SPECIAL PROBLEM: (NARROWING THE FOCUS)

Instrumentation and control systems provide another example of the organizational, non-representational use of models and "entities". These entities, and the context in which they exist, provide another kind of "chalkboard".{16} Their objects need not mirror objective reality either. A gauge, a readout display, a control device, (the "objects" of such systems), need not mimic a single parameter -or an actual physical entity. Indeed, in the monitoring of a complex or dangerous process, it should not. Rather, the readout for instance, should represent an efficacious synthesis of just those aspects of the process which are relevant to effective response, and be crystallized around those relevant responses! A warning light or a status indicator, for instance, need not refer to just one parameter. It may refer to electrical overload and/or excessive pressure and/or... Or it may refer to an optimal relationship, (perhaps a complexly functional relationship), between many parameters -to a relationship between temperature, volume, mass, etc. in a chemical process, for instance.

The exactly parallel case holds for its control devices. A single control may orchestrate a multiplicity of (possibly disjoint) objective responses. The accelerator pedal in a modern automobile, as a simple example, may integrate fuel injection volumes, spark timing, transmission gearing...
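The two cases just described, a readout that synthesizes several parameters and a control that fans out to several responses, can be sketched in a few lines. This is only an illustrative sketch: the parameter names, the limits and the actuator formulas are all hypothetical placeholders and are not drawn from any real instrumentation system.

```python
from dataclasses import dataclass


@dataclass
class ProcessState:
    """Raw parameters of a hypothetical monitored process."""
    current_amps: float
    pressure_kpa: float
    temperature_c: float


# Hypothetical safety limits, chosen only for illustration.
MAX_CURRENT_AMPS = 30.0
MAX_PRESSURE_KPA = 800.0
MAX_TEMPERATURE_C = 120.0


def warning_light(state: ProcessState) -> bool:
    """One display "object" crystallized around one response: stop and check.

    It does not mirror any single parameter; it lights on electrical
    overload and/or excessive pressure and/or excessive temperature.
    """
    return (
        state.current_amps > MAX_CURRENT_AMPS
        or state.pressure_kpa > MAX_PRESSURE_KPA
        or state.temperature_c > MAX_TEMPERATURE_C
    )


def accelerator(pedal: float) -> dict:
    """One control "object" fanning out to several disjoint actuators.

    The formulas are invented placeholders, not real engine maps.
    """
    pedal = min(max(pedal, 0.0), 1.0)  # clamp to the pedal's travel
    return {
        "fuel_ml_per_cycle": 0.5 + 4.5 * pedal,
        "spark_advance_deg": 10.0 + 20.0 * pedal,
        "gear": 1 + int(pedal * 4),
    }
```

The operator of such a system deals only with "the light" and "the pedal"; which physical quantities stand behind either object is invisible at the level of use, which is the point of the example.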

Ideally, (given urgent constraints), instrumentation and control might unify in the same "object". We could then manipulate the object of the display and it could be the control device in itself as well. Think about the advantages of manipulating a "graphic" object which is simultaneously a readout and a control mechanism under urgent constraints. Think about this possibility in relation to our ordinary objects of perception -in relation to the sensory-motor coordination of the brain and the objects of naive realism! The brain is a control system, after all. It is an organ of control and its mechanics must be considered in that perspective. Its function is exceedingly complex and the continuation of life itself is at stake. It is a complex and dangerous world.{17}

2.3 THE "GUI": THE MOST PERTINENT AND SOPHISTICATED EXAMPLE OF A SCHEMATIC MODEL (THE SPECIAL CASE)

The "object" in the graphic user interface, (GUI), of a computer is perhaps the best example of a schematic usage available. In my simplistic manipulation of the schematic objects of my computer's GUI, I am, in fact, effecting and coordinating quite diverse and disparate -and unbelievably complex- operations at the physical level of the computer, operations impossible, (in a practical sense), to accomplish directly. What a computer object, (icon), represents and what its manipulation does, at the physical level, can be exceedingly complex and disjoint. The disparate voltages and physical locations, (or operations), represented by a single "object", and the (possibly different) ones effected by manipulating it, correlate to a metaphysical object only in this "schematic" sense. Its efficacy lies in the simplicity of the "calculus" it enables!

Contemporary usage is admittedly primitive. Software designers have limiting preconceptions of the "entities" to be manipulated, of a necessary preservation of hierarchy, and of the operations to be accomplished in the physical computer by their icons and interface. But GUI's and their "objects", (icons), have a deeper potentiality of "free formation". They have the potential to link to any selection across a substrate, i.e. they could "cross party lines". They could cross categories of "things in the world", (Lakoff’s "objectivist categories"{18}).

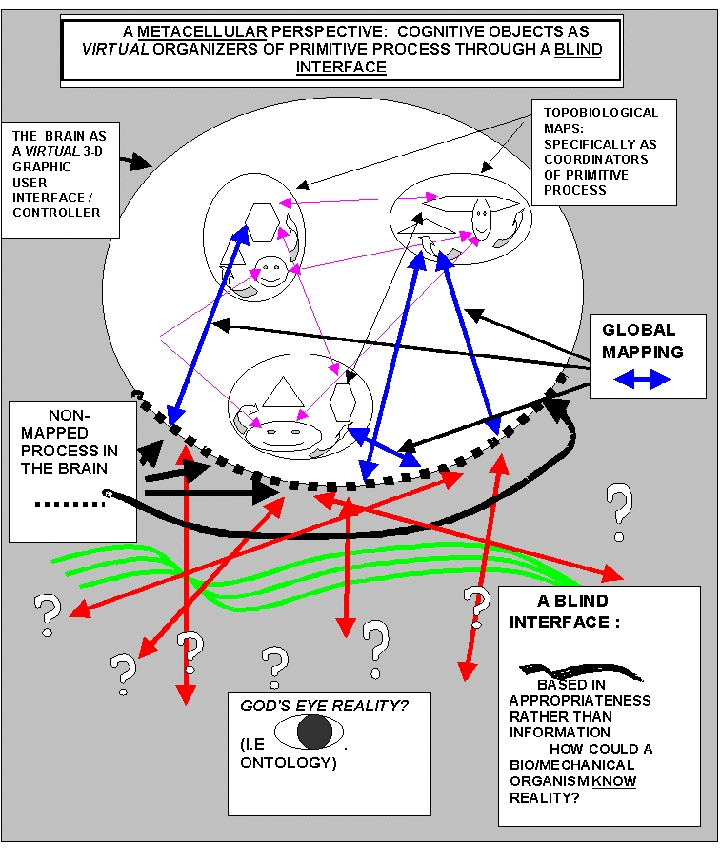

Biology supplies a fortuitous example of the sort of thing I am suggesting for GUI's in the brain's "global mapping" noted by Edelman.{19} The non-topological connectivity Edelman notes from the brain’s topobiological maps,{20} and specifically the connectivity, (the "global mapping"), from the objects of those maps to the non-mapped areas of the brain supplies a concrete illustration of the kind of potential I wish to urge for a GUI. Ultimately I will urge it as the rationale for the brain itself. This global mapping allows "...selectional events", [and, I suggest, their "objects" as well], "occurring in its local maps ... to be connected to the animal's motor behavior, to new sensory samplings of the world, and to further successive reentry events." But this is specifically a non-topological mapping. This mapping does not preserve contiguity!

Here is a specific model illustrating the more abstract possibility of a connection of "objects"{21}, (in a GUI), to non-topological process, (distributed process) -to "non-objectivist categories", (using Lakoff’s terminology). Edelman's fundamental rationale is "Neural Darwinism", the ex post facto adaptation of process, not "information", and it is consistent with such an interpretation. It does not require "information". Nor does it require "representation". Edelman, (unfortunately), correlates the topobiological maps, (as sensory maps), directly, (and representatively), with "the world", but this is clearly inconsistent with his stated repudiation of "a God's eye view" which grounds his biologic epistemology.

Figure 5: A Graphic Rendering of Edelman's Epistemology (Note: hierarchy and contiguity are implicit!)

What if we turn his perspective around however? What if we blink the "God's eye" he himself specifically objected to, and step back from the prejudice of our human (animal) cognition? What if the maps and their objects both were taken as existing to serve blind primitive process instead of the converse?

Figure 6: A More Consistent Rendering of Edelman's Epistemology Suggesting a New Paradigm for GUI's (Neither hierarchy nor contiguity is implicit in this model.)

This is the case I wish to suggest as an illustration of the most abstract sense of the GUI, (and which I will argue shortly). It opens an interesting possibility moreover. It suggests that evolution’s "good trick", (after P.S. Churchland’s usage), was not representation, but rather the organization of primitive process in a topological context. It suggests that the "good trick" was evolution's creation of the cortex!

3.1 THE ARGUMENT

Consider, finally, the formal and abstract problem. Consider the actual problem that evolution was faced with. Consider the problem of designing instrumentation for the efficient control of both especially complex and especially dangerous processes. In the general case, (imagining yourself the "evolutionary engineer"), what kind of information would you want to pass along and how would you best represent it? How would you design your display and control system?

It would be impossible, obviously, to represent all information about the objective physical reality of a, (any), process or its physical components, (objects). Where would you stop? Is the color of the building in which it is housed, the specific materials of which it is fabricated, that it is effected with gears rather than levers, -or its location in the galaxy- necessarily relevant information? (Contrarily, even its designer's middle name might be relevant if it involved a computer program and you were considering the possibility of a hacker's "back door"!){22} It would be counterproductive even if you could as relevant data would be overwhelmed and the consequent "calculus", (having to process all that information), would become too complex and inefficient for rapid and effective response. Even the use of realistic abstractions could produce enormous difficulties in that you might be interested in many differing, (and, typically, conflicting), significant abstractions and/or their interrelations.{23} This would produce severe difficulties in generating an intuitive and efficient "calculus" geared towards optimal response.

For such a complex and dangerous process, the "entities" you create must, (1) necessarily, of course, be viable in relation to both data and control -i.e. they must be adequate in their function. But they would also, (2) need to be constructed with a primary intent towards efficiency of response, (rather than realism), as well -the process is, by stipulation, dangerous! The entities you create would need to be fashioned to optimize the "calculus" while still fulfilling their (perhaps consequently distributed!) operative role!

Your "entities" would need to be fabricated in such a way as to intrinsically define a simple operative calculus of relationality between them -analogous to the situation in our training seminar. Maximal efficiency, (and safety), therefore, would demand crystallization into schematic virtual "entities" -a "GUI"- which would resolve both demands at a single stroke. Your objects could then distribute function so as to concentrate and simplify control, (operation). These virtual entities need not necessarily be in a simple (or hierarchical -i.e. via abstraction) correlation with the objects of physical reality however.{24} But they would need to allow rapid and effective control of a process which, considered objectively, might not be simple at all. It is clearly the optimization of the process of response that is crucial here, not literal representation. We, in fact, do not care that the operator knows what function(s) he is actually fulfilling, only that he does it (them) well!

But let's talk about the "atomic" in the "atomic biological function" of the previous statement. There is another step in the argument to be taken at the level of biology. The "engineering" argument, (made above), deals specifically with the schematic manipulation of "data". At the level of primitive evolution, however, it is modular (reactive) process that is significant to an organism, not data functions. A given genetic accident corresponds to the addition or modification of a given (behavioral/reactive) process which, for a primitive organism, is clearly and simply merely beneficial or not. The process itself is informationally indeterminate to the organism however -i.e. it is a modular whole. No one can presume that a particular, genetically determined response is informationally, (rather than reactively), significant to a Paramecium or an Escherichia coli, for example, (though we may consider it so). It is significant, rather, solely as a modular unit which either increases survivability or not. Let me therefore extend the prior argument to deal with the schematic organization of atomic, (modular), process, rather than of primitive, (i.e. absolute), data. It is my contention that the cognitive model, and cognition itself, is solely constituted as an organization of that atomic modular process, designed for computational and operational efficiency. The atomic processes themselves remain, and will forever remain, informationally indeterminate to the organism.

The evolutionary purpose of the model was computational simplicity! The calculational facility potentiated by a schematic and virtual object constitutes a clear and powerful evolutionary rationale for dealing with a multifarious environment. Such a model, (the "objects" and their "calculus"), allows rapid and efficient response to what cannot be assumed, a priori, to be a simplistic environment. From the viewpoint of the sixty trillion or so individual cells that constitute the human cooperative enterprise, that assumption, (environmental simplicity), is implausible in the extreme!

But theirs, (i.e. that perspective), is the most natural perspective from which to consider the problem. For five-sixths of evolutionary history, (three billion years), it was the one-celled organism which ruled alone. As Stephen Gould puts it, metacellular organisms represent only occasional and unstable spikes from the stable "left wall", (the unicellulars), of evolutionary history.

"Therefore, to understand the events and generalities of life's pathway, we must go beyond principles of evolutionary theory to a paleontological examination of the contingent pattern of life's history on our planet. ...Such a view of life's history is highly contrary both to conventional deterministic models of Western science and to the deepest social traditions and psychological hopes of Western culture for a history culminating in humans as life's highest expression and intended planetary steward."(Gould, 1994)

3.3 RETRODICTIVE CONFIRMATION

3.4 CONCLUSION, (SECTION 3)

Evolution, in constructing a profoundly complex metacellular organism such as ours, was confronted with the problem of coordinating the physical structure of its thousands of billions of individual cells. It also faced the problem of coordinating the response of this colossus, this "Aunt Hillary" -Hofstadter's "sentient" ant colony.{27} It had to coordinate their functional interaction with their environment, raising an organizational problem of profound proportions.

Evolution was forced to deal with exactly the problem detailed above. The brain, moreover, is universally accepted as an evolutionary organ of response. I propose that a schematic entity, (and its corresponding schematic model), is by far the most credible possibility here. It efficiently orchestrates the coordination of the ten million sensory neurons with the one million motor neurons,{28} -and with the profound milieu beyond. A realistic, (i.e. representational /informational), "entity" would demand a concomitant "calculus" embodying the very complexity of the objective reality in which the organism exists, and this, I argue, is overwhelmingly implausible.{29}

Figure 7

4. CONSEQUENCES: A PARTIAL SKETCH

Let me sketch just two major consequences of my hypothesis. They are too huge to be ignored, and block the credibility of my exposition.

4.1 THE TERRIBLE CONSEQUENCE

The "terrible" consequence of such an hypothesis for a realistically inclined mind is the epistemological one. The hypothesis says that we (therefore) do not know and cannot know the very world in which we exist. It seems to invalidate even the very language in which it is stated thereby. This consequence, (which my hypothesis shares with those of Maturana, Edelman, and Freeman), is not devastating or absurd, (as at first glance it appears), but lies within the ordinary province of classical epistemology. It falls within the conclusions of Immanuel Kant and specifically in Ernst Cassirer's (neo-Kantian) "Symbolic Forms".{30} It does not result in a necessary relapse to solipsism or idealism –nor in a move to rampant unstructured relativism. Nor does it invalidate the reality in which we believe. (See below.)

This discussion is outside the confines of this paper,{31} but briefly it is this. Basing his arguments in those of the renowned physicist Heinrich Hertz, Cassirer cogently argued that theories and knowledge do not serve to reference metaphysical reality, but function rather as relative, (and pragmatically useful), organizations of the phenomena. (He argued for a relativistic plurality of such organizational "forms" moreover.) I urge that this is precisely the right kind of language, the right kind of epistemology to be using when talking of biological organisms. There is good rationale for speaking about organisms organizing response, but there is no good rationale to speak of organisms knowing anything! This applies to us as well however -humans are biological organisms too.

Cassirer's "Symbolic Forms", (his "epistemological theory of relativity"{32}), enables the language and the content of my first hypothesis{33} as a legitimate but relativistic truth -without a commitment to (metaphysical) reference. Though built with Naturalist "bricks", it does not rest on the Naturalist "foundation" of reference.

There is another legitimate basis for realism however. It consists in substituting "belief" for "knowing". Knowing, (in the sense of "scientific or pragmatic knowing"), has to do with prediction of phenomena.{34} Belief has to do with "what really is!" Are they related? Of course they are, but I maintain they are not determinately related.

Let me leave this epistemological issue though, and proceed to a second immediate consequence of this (first) hypothesis. We must agree, I think, that radical conclusions should be drawn only if they lead to other, very positive aspects of a line of thought.

4.2 THE GOOD CONSEQUENCE RE THE LOGICAL PROBLEM OF CONSCIOUSNESS

An immediate positive consequence of my first hypothesis is that it makes massive inroads on the explicitly logical dilemmas of sentiency. It allows knowing in the sense of sentient knowing!{35} It also enables the utilization of the most profound theory of meaning yet proposed: Hilbert's "Implicit Definition". This discussion is again beyond the scope of this paper,{36} so I will discuss just a few obvious consequences here.

(a) Innate in the ordinary conceptions of mind and brain is the idea that perceptual cognition, (the perceptual object), is the result of a "presentation"{37} of stimuli, (information), to a cognating entity.

(b) But also innate in the ordinary conception is the idea that even intellectual cognition, (logic and conception), is derived from the "attention" to and "abstraction" from such "presentations". Indeed, this is the theoretical and logical rationale of classical and contemporary logic themselves. It is the actual genesis of the technical logical "concept".{38}

Under these presumptions therefore, both aspects of cognition presuppose "presentation". Thereby they demand either the complement to that presentation, (a "homunculus" with its implicit problem of infinite logical regress), or an eliminative reduction of the problem, (and the mind), exclusively into a mere continuation of pure biologic and physical process within the organism, (cognating entity). They demand a denial of "mind" and "consciousness" in our ordinary sense altogether! The "schematic operative model" of my first hypothesis radically changes and simplifies this traditional perspective. Assuming that our human model is not representational, (i.e. that it does not derive from presentation), the "homunculus" is no longer an issue -at least for perception. But neither logic nor conception necessitates such "presentation" either.{39}

But how could there be cognition without presentation? What could "cognition" even mean then? How could there even be logic? Cassirer has suggested the beginnings of an answer to the latter question in his "functional concept of mathematics",{40} his rule-based reformulation of the logical concept.{41} It is grounded in an independent "act" of the cognator not derived from attention or abstraction of presentation. It is instead "a peculiar form of consciousness..., an act of unification... such as cannot be [logically] reduced to the consciousness of sensation or perception".{42} (my emphasis) It originates in a considerably more plausible version of the concept actually used in modern science.

Eliminating the requirement of "presentation", Cassirer's "concept" readmits the possibility of a unified mind and "knowing", (in the unified rule and concept), and puts "the homunculus" finally to rest. "We" are, (mind is), a concept I argue, but it is a new and larger constitutive concept which resolves objects internally and logically without "presentation".{43} Ultimately, I have argued elsewhere, its logic matches and meets, (i.e. is the same as), the "schematic calculus", (the biologic operative organization), delineated in the hypothesis of the present paper!{44} Its "independent act", its rule, I argue, is the unified behavioral rule, the unified rule of Maturana's "ontogenic coupling".{45} But this now defines a concept -a concept which defines objects!{46}

5. CONCLUSION

The "schematic brain" is a large bite to swallow, admittedly! And there are still larger "bites" of the puzzle not yet in place. Specifically there are the considerations of "cognitive closure", (Maturana), "logical closure", (Quine), and "epistemological relativism", (Cassirer), that must be addressed to validate plausibility. I do not ask that you accept the truth nor even the plausibility of this admittedly radical first hypothesis at this juncture therefore. That must await the presentation of the rest of the argument. What I do ask, however, is that you be willing to acknowledge its biological, evolutionary and operative strengths and be open in future to seriously consider it in the context of the larger problem of consciousness.

(BIOLOGY, INFORMATION AND REPRESENTATION)

6.1 BIOLOGY'S PROBLEM WITH SENSORS AS INFORMATION

A measure of the complexity of the reality with which an organism must deal is the organism's context of information about it. But information is grounded in context. Consider an individual (informational) sensor. It is not enough for a genetic accident simply to provide that sensor. Somehow it must furnish evolutionary advantage and differentially link that sensor to response through its functioning. To be useful as "information", (and retained under the evolutionary process), it must be usable over the range of its possibilities. It must provide differential response over that range.

Each sensor, (as an "informational" sensor), must be minimally binary by definition. To be useful as information, (and retained on evolutionary grounds), it must have been utilized or at least connected in both of its possible states. Two sensors -as information, it seems- would have to have been utilized in all four of their possible combined states. But is this true? No, perhaps they might have been used or connected individually, (and retained). But then they would not yield combined information-i.e. they would not be mutually relevant. Even so, each individual sensor is an evolutionary mutation and each had to be connected to two paths. The evolutionary "work" performed for the two would be 4 units!

Alternatively, suppose evolution simply proliferated sensors hugely and then sampled the combined array under a "Monte Carlo" strategy. Would this work? I think it might, but it would not be "information". It would be response instead! Information necessarily embodies context. When we sample a voting population, for instance, we know what it is we are dealing with, (i.e. the context of the sample). It is a predictable population. Organisms, or at least primitive organisms, contrarily cannot know the context of their sample beforehand. To be just a little bit cute, organisms are not capable of a "Monte Carlo" strategy. The only comparable strategy of which they are capable would be a "Russian Roulette" strategy{47} -not a particularly good tactic.

6.2 THE FACTS OF HUMAN SENSORY INPUT

The only context, (the possible sensory array states),{48} in which reality could have meaning as information for human organisms is of the magnitude: 2 to the power of $10^{7}$, the latter being Maturana's estimate of total human sensory receptors.{49} Taking each of the 10,000,000 human sensory cells as a minimally binary input device, their informational potential -the context within which information would be received- would be $2^{10,000,000}$. Converting the base, this is approximately $10^{3,010,300}$.
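The base conversion can be written out as a short worked step. It uses only the standard identity $2^{n} = 10^{n \log_{10} 2}$, with $\log_{10} 2 \approx 0.30103$, applied to the receptor count of $10^{7}$ given above:

```latex
\[
2^{10^{7}} = 10^{\,10^{7}\log_{10} 2}
\approx 10^{\,10^{7}\times 0.30103}
= 10^{3{,}010{,}300}
\]
```

That is, the context of possible sensory array states has on the order of three million decimal digits.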

6.3 A SIMPLE LIMITING ARGUMENT:

(a) From the beginning of evolutionary history there were always fewer organisms than subatomic particles in the known universe{51} (i.e. fewer than $10^{84}$){52}

(b) Every organism mutated once every second for this four billion years{53} (4 billion times 365 times 24 times 60 times 60 $= 4 \times 10^{9} \times 3.1536 \times 10^{7} < 1.3 \times 10^{17} < 10^{18}$)

(c) Every single mutation was beneficial

(d) Not even a single (beneficial by fiat) mutation was lost

(e) All mutations were ultimately (somehow) summed into one organism

6.3.2 COMPUTATION: $10^{84} \times 10^{18} = 10^{102}$

Assuming a standard bitwise, (i.e. digital), theory of information, this simple argument demonstrates a discrepancy of more than "just a few" (!) orders-of-magnitude between informational possibility and evolution's ability to incrementally embody any significant portion of it in an internal representative model. Even if every single mutation were model defining, it is a discrepancy of some three million orders of magnitude!
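The upper bound on mutational opportunities can be reproduced with exact integer arithmetic. This is a minimal sketch under the limiting assumptions listed above (fewer than 10^84 organisms, one mutation per organism per second, four billion years); the constant names and the helper `order_of_magnitude_ceiling` are hypothetical labels chosen for illustration.

```python
# Assumptions taken from the limiting argument (all deliberately generous).
ORGANISMS_BOUND = 10**84                 # more than the subatomic particles in the known universe
YEARS = 4 * 10**9                        # four billion years of evolutionary history
SECONDS_PER_YEAR = 365 * 24 * 60 * 60    # 31,536,000

def order_of_magnitude_ceiling(n: int) -> int:
    """Smallest k such that n <= 10**k, using exact integers."""
    k = 0
    while 10**k < n:
        k += 1
    return k

# One mutation per organism per second for the whole period.
seconds = YEARS * SECONDS_PER_YEAR
seconds_exp = order_of_magnitude_ceiling(seconds)

# Round the seconds up to a power of ten, then multiply by the organism bound.
total_exp = order_of_magnitude_ceiling(ORGANISMS_BOUND * 10**seconds_exp)

print(f"seconds elapsed: {seconds:.4e} (at most 10^{seconds_exp})")
print(f"mutational opportunities: at most 10^{total_exp}")
```

Rounding up at each step keeps the result an upper bound, which is all the argument requires.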

Think about simple digital models. Consider just the three "idiot lights" on the dashboard of my decrepit old truck as a primitive instance. All eight of its possible states are relevant to response and, considered as an "information model", it must account for each of them. OFF-OFF-OFF is significant -and allows me carefree driving- only in a context of possibility. In fact one of them, (the oil light), is non-functional and not "information" at all. This simple system, in consequence, does not qualify as a representative model. That part of it that does qualify as information, (insofar as it is "information"), requires an accounting for its context of possibility.
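The "context of possibility" of the three dashboard lights can be enumerated directly. The sketch below is only an illustration: the names `light_2` and `light_3` are hypothetical (only the oil light is named in the text), and treating the dead oil light as permanently OFF is an assumption.

```python
from itertools import product

# Hypothetical labels; only the oil light is named in the text.
LIGHTS = ("oil", "light_2", "light_3")

def all_states(n_lights: int):
    """Every ON/OFF combination of n binary indicators."""
    return list(product((False, True), repeat=n_lights))

states = all_states(len(LIGHTS))
for state in states:
    print("-".join("ON" if lit else "OFF" for lit in state))
print(len(states), "states in the context of possibility")

# Assumption: a non-functional oil light carries no information, so states
# that differ only in that light collapse into one another.
distinguishable = {state[1:] for state in states}
print(len(distinguishable), "distinguishable states without the oil light")
```

Each added binary indicator doubles the number of states a model has to account for, which is why the count grows so quickly for larger arrays.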

The hypothesis of an internal representative model as the rationale for the sensory system presumes an incremental evolutionary correlation to its context of possibility. Evolution would have had the problem of progressively correlating a model with each, (or some significant portion), of the possibilities of the sensory array -and with potential response as well.

But evolution had less than 10^102{57} chances to achieve this correlation. The most optimistic correlation is 10^102 instances,{58} and the ratio of model correlation to possible sensory states is

10^102 / 10^(3,010,298) < 1 / 10^(3,000,000) !
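Because both quantities are powers of ten, the ratio can be checked by subtracting exponents. A minimal sketch, assuming the two exponents quoted in the text; the constant names are hypothetical.

```python
# Exponents taken from the text (powers of ten).
MODEL_CORRELATIONS_EXP = 102       # at most 10^102 chances for correlation
SENSORY_STATES_EXP = 3_010_298     # lower bound on the possible sensory states

# Dividing powers of ten subtracts their exponents.
ratio_exp = MODEL_CORRELATIONS_EXP - SENSORY_STATES_EXP

print(f"ratio = 10^{ratio_exp:,}")
print("smaller than 1 / 10^3,000,000:", ratio_exp < -3_000_000)
```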

Even if the model itself were taken as an edifice of (10^7) actual internal binary bits, (paralleling the sensory array), this would only regress the problem. Evolution still would have the problem of incrementally correlating alternative model states with potential response and the numbers would still stand. The odds of a "designed", or even a connected response would still be less than 1 / 10^(3,000,000) -which is as close to zero as I care to consider!{59} It is less, (much less), than the ratio of the size of a proton to the size of the entire universe. Its utilization as "information" would still require an accounting for -and an incremental evolutionary correlation to- its context of possibility. Contrarily, taking my two proposed, (and grossly exaggerated), upper bounds for mutational possibility, 10^102 and 10^(10,290) respectively, the same informational possibility could be embodied in just 339 or 34,183 binary receptors respectively!{60} Why so many sensory possibilities?
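The number of binary receptors whose combined states would cover a given number of mutational opportunities is the smallest n with 2^n at least that number. The sketch below computes it with exact integers; the function name `binary_receptors_needed` is a hypothetical label, and the two exponents are the upper bounds quoted in the text.

```python
def binary_receptors_needed(decimal_exp: int) -> int:
    """Smallest n such that 2**n >= 10**decimal_exp (exact integer arithmetic)."""
    # (N - 1).bit_length() is the smallest n with 2**n > N - 1, i.e. 2**n >= N.
    return (10**decimal_exp - 1).bit_length()

# Upper bounds for mutational possibility, as powers of ten, from the text.
for decimal_exp in (102, 10_290):
    n = binary_receptors_needed(decimal_exp)
    print(f"10^{decimal_exp:,} states fit in {n:,} binary receptors")
```

Working with integers avoids the rounding that a floating-point logarithm would introduce near a boundary.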

The argument applies equally to the possibility of even an isomorphic parallelism of response, ("congruent structural coupling"), as Maturana and Varela have proposed moreover, (as distinguished from the case of an internal, representative model). That assumption still requires a correlation to sensory input! (This is the only "trigger" that anyone has postulated.) The (maximum) ratio of "designed" response, (and parallelism), to possible sensory input is less than 1 / 10^(3,000,000)!

6.5 CONCLUSION, (APPENDIX)

In short, we simply have too many sensors to support the "information" scenario -way too many! There are "10" -with three million zeros after it(!) -times-too-many sensory possibilities for evolution to have done anything with in the entire history of the universe! Conversely it is quite clear that the entire future of the universe, (assuming a finite model), would be insufficient to dent it either. Shall we talk "parsimony"? Objective reality is a bound to the evolution of organisms; it is not a limit which can be matched or paralleled.

Paul Churchland has argued that if each synapse is capable of just 10 distinct states, then the brain is capable of 10 to the power of one hundred trillion, (=10^(100,000,000,000,000)), distinct states. This number is impressive and considerably larger than the one I am considering, it is true, but it does not refer to the possibility of acquisition of information, (specifically as information), from the environment nor to the possibility of evolutionary correlation to beneficial action -i.e. utilization. Churchland's number, therefore, only amplifies the discrepancy and the argument I have made!

It is evolutionarily plausible, certainly, to consider 10,000,000 sensory inputs as triggers of process. But it is not evolutionarily plausible to think of them as environmentally determinate -i.e. as inputs of information- as this immediately escalates the evolutionary problem exponentially -i.e. to 2^(10,000,000), (minimally)! Exponents are awesome things.

"Information" or "representation", in whatever form, just isn't a viable rationale for the evolution of the brain. I argue that the brain is an organ of ontogenic process. It is an organ of response, not of "information". The function of that organ is to organize primitive biologic process; it is not to represent its surroundings. Its job is adequate response, not knowingful information. Between knowing and adequacy is a wide gulf. Evolution demands that an organism's performance be adequate. Nowhere in the physical or evolutionary rationale is there a place for "knowing" save by "miracle".

Figure 8

References:

Asimov, I. (1977). Asimov on Numbers. Pocket Books.

Birkhoff, G. & Mac Lane, S. (1955). A Survey of Modern Algebra. MacMillan Company.

Cassirer, E. (1953). The Philosophy of Symbolic Forms. (Translation by Ralph Manheim). Yale University Press.

Cassirer, E. (1923). Substance and Function and Einstein's Theory of Relativity. (Bound as one: translation by William Curtis Swabey). Open Court.

Dreyfus, H. (1992). What Computers Still Can't Do. MIT Press.

Edelman, G. (1992). Bright Air, Brilliant Fire. BasicBooks.

Freeman, W.H. (1995). Societies of Brains. Lawrence Erlbaum Associates, Inc.

Gould, S. J. (1994). The Evolution of Life on the Earth.

Hofstadter, D. (1979). Goedel, Escher, Bach. Vintage.

Iglowitz, J. (1996). The Logical Problem of Consciousness. Presented to the UNESCO "Ontology II Congress". Barcelona, Spain.

Iglowitz, J. (1995). Virtual Reality: Consciousness Really Explained. Online at www.foothill.net/~jerryi

Iglowitz, J. (2001). Consciousness: a Simpler Approach to the Mind-Brain Problem (Implicit Definition, Virtual Reality and the Mind). Online at www.foothill.net/~jerryi

Maturana, H. and Varela, F. (1987). The Tree of Knowledge. Shambala Press.

Minsky, M. (1985). The Society of Mind. Touchstone.

Smart, H. (1949). Cassirer's Theory of Mathematical Concepts in The Philosophy of Ernst Cassirer, Tudor Publishing.

Van Fraassen, B. (1991). Quantum Mechanics, an Empiricist View, Clarendon Press.

FOOTNOTES:

2. see Iglowitz, "Consciousness, a Simpler Approach…", 2001 and Iglowitz, 1995

3. See Iglowitz, "Consciousness, a Simpler Approach…", 2001 for the logical problem, and Iglowitz, 1995, Chapters 3 & 4 for the epistemological problem and a summary of Cassirer's thesis.

5. Webster's defines "calculus": "(math) a method of calculation, any process of reasoning by use of symbols". I am using it here in contradistinction to "the calculus", i.e. differential and integral calculus.

6. See Iglowitz, 2001, 1995, (Chapter 2) on objects implicitly defined by their operations; Chapter 4 on Hertz's rendering of scientific objects.

7. a classroom is a kind of training seminar after all!

8. Is this not the usual case between conflicting theories and perspectives?

9. Dennett's term "heterophenomenological" -i.e. with neutral ontological import -is apt here.

10. Edelman, 1992, pps. 236-237, his emphasis.

12. together: the possible conceptual contexts

13. c.f. the arguments of Chapters Two and Four for a detailed rationale

14. c.f. Iglowitz, 1995: "Afterward: Lakoff/Edelman" for a discussion of mathematical "ideals" which bears on this discussion.

15. this relates to the issues of "hierarchy" which I will discuss shortly

16. Their designers are the "lecturers", and the instruments they design are the "objects" of their schematic models

17. A Couple of other lesser but still useful Schematic Models: A "war room", (a high-tech military command center resembling a computer game), is another viable, though primitive, example of a schematic usage. It is specifically a schematic model, expressly designed for maximized response. The all-weather landing display in a jetliner supplies yet another example.

18. Cf Lakoff, 1987. Also see Iglowitz, 1995, "Afterward: Lakoff, Edelman…"

20. The multiple, topological maps in the cortex

21. in the brain's spatial maps

22. cf Dennett, Dreyfus on the "frame problem"

23. This is typically the case. A project manager, for instance, must deal with all, (and often conflicting), aspects of his task -from actual operation to acquisition, to personnel problems, to assuring that there are meals and functional bathrooms! Any one of these factors, (or some combination of them), -even the most trivial- could cause failure of his project. A more poignant example might involve a U.N. military commander in Bosnia. He would necessarily need to correlate many conflicting imperatives -from the geopolitical to the humanitarian to the military to the purely mundane!

24. See Iglowitz, 1995: Lakoff/Edelman appendix

25. See Birkhoff & Mac Lane, 1955, p.350, discussion of the "duality principle" which vindicates this move.

26. The "anthropic principle" is clearly self-serving and tautological.

27. cf Hofstadter, 1979. His is a very nice metaphor for picturing metacellular existence.

29. See Dreyfus on the "large database problem" and the Appendix to this paper.

30. It has been argued that Cassirer departed from the tradition of Kant, specifically in the issue of innate categories. See Smart, 1949. Kant was most definitely a realist however. It is a misnomer to term him an "idealist".

31. see Iglowitz 1995, chapters 3 & 4

32. Swabey: Introduction: Cassirer, 1953 This is specifically a scientifically and mathematically based relativism preserving the invariants of science and experience

33. just as it enables ordinary Naturalism

35. see Iglowitz, 2001, ("Consciousness: a Simpler…") and Iglowitz 1995, chapter 2

37. in the classical philosophical sense

42. Though Cassirer's "functional concept of mathematics" still orders presentation, it does not logically derive from it. My extension of his technical concept finally moves beyond "presentation" altogether.

44. This is the "concordance" I have argued in Iglowitz, 1995. I think it is the strongest argument in favor of my hypothesis.

47. e.g. sticking pseudopods into flames -"Monte-Carlo-ing" its way through life!

48. the set of all combinations of value input from the receptors

49. Maturana, 1987, estimates that there are 10^7 human sensory cells.

50. T-7, (10^84), is far greater "than there are subatomic particles in the entire known universe"! Asimov, 1977, p.58

51. Instead of trying to approximate the possible organisms at any given time, (I started with a Fibonacci series, but abandoned it to a simpler procedure), it suffices to substitute a number greater than the total number of subatomic particles in the universe -surely greater than the required number- for every term. This generates a (gross) upper limit for the series.

53. If you won't accept this assumption of the mutations per second, multiply it by a few thousands, -or millions, -or even trillions; you are only adding to the final exponent -at most a few tens. You could actually raise it to 10^(10,188) times per second without affecting even the literal statement of my conclusions. I suspect, however, that long before you got to this huge number you would be stopped by the ghosts of Planck and Heisenberg! Surely complementarity suggests that there is a lower limit to the relationship between causality, mass, space and time which can have measurable effects -i.e. "information"!

54. or, alternately, to 10^(10,290)

56. Envision a celestial turreted microscope. The lowest power is only capable of resolving objects as big as the whole universe. Progressively, the next objective lens is capable of resolving objects as small as a proton. On this "God's-eye" microscope, there would have to be 24,276 objective lenses on the turret, each with an increase in resolution comparable to that between the first two!

59. Alternatively, we would have to assume that individual evolutionary mutations could each (accidentally) correlate information to model at a scale of ten to the power of three million!