WHOLES AND THEIR PARTS IN COGNITIVE PSYCHOLOGY:

SYSTEMS, SUBSYSTEMS, AND PERSONS

© Anthony P. Atkinson

Psychology Group, King Alfred's University College,

Winchester,

Hampshire, SO22 4NR, U.K.

email: atkinsona@wkac.ac.uk

Paper presented at the conference "Wholes and their Parts",

Maretsch Castle, Bolzano, Italy, 17-19 June, 1998.

http://www.soc.unitn.it/dsrs/IMC/IMC.htm

ABSTRACT

Decompositional analysis is the process of constructing explanations of the characteristics of

whole systems in terms of characteristics of parts of those whole systems. Cognitive

psychology is an endeavour that develops explanations of the capacities of the human

organism in terms of descriptions of the brain's functionally defined information-processing

components. This paper details the nature of this explanatory strategy, known as functional

analysis. Functional analysis is contrasted with two other varieties of decompositional

analysis, namely, structural analysis and capacity analysis. After an examination of these

three varieties of analysis, there follows a consideration of a mistake to avoid when

conducting decompositional analyses in psychology, and a possible limitation on their

explanatory scope.

-----------------------

WHOLES AND THEIR PARTS IN COGNITIVE PSYCHOLOGY:

SYSTEMS, SUBSYSTEMS, AND PERSONS [1]

My aim in this paper is to elucidate the central explanatory strategy of cognitive psychology.

My account owes much to the seminal work of Cummins (1983), but whereas he wrote from

the perspective of a philosopher of science, my elucidation of the strategy is from the

perspective of a practising cognitive psychologist. My hope is to cast the strategy in a

somewhat new light. [2]

In Section 1, I briefly introduce the notion that much psychological explanation,

especially cognitive psychological explanation, is concerned with the search for the bases of

dispositions. In Sections 2 and 3, I elaborate this notion into an account of the central

explanatory strategy of cognitive psychology, which I call decompositional analysis. In this

account, I distinguish two explanatory paths, each of which can be summarized by a

distinctive type of question one can ask of a whole system. Given that a system has a certain

property or capacity, one can ask: (1) What other properties or capacities does the whole

system have in virtue of which it has the first property or capacity?; and (2) What is the

material and/or functional composition of the system in virtue of which it has the specified

property or capacity? I call the first explanatory path capacity analysis, and the second

componential analysis. I shall distinguish two specific types of componential analysis, one of

which is concerned with specifying the component functions or processes of a system, the

other of which is concerned with specifying the material components or substrate of a

system.

In Section 4, I close the paper by briefly considering a mistake to avoid when

conducting decompositional analyses in psychology, and a possible limitation on their

explanatory scope.

1. DISPOSITIONAL REGULARITIES AND THEIR BASES

Statements of dispositional regularities are a common form of ceteris paribus (i.e., all else

being equal) laws. The standard view of ceteris paribus laws is that they are laws that apply

to certain classes of entity some of the time, that is, when and only when certain specified

conditions are met. [3] For example, it is a causal law that salt dissolves in water, ceteris

paribus (i.e., there are exceptions to the law that salt dissolves in water). Statements of

dispositional regularities are generalizations of the form, system S does X or exhibits Y

under such-and-such conditions. Or as Cummins (1983, p.18) puts it:

"To attribute a disposition d to an object x is to assert that the behavior of x is subject to (exhibits or

would exhibit) a certain lawlike regularity: to say x has d is to say that x would manifest d ... were

any of a certain range of events to occur."

For example, human skin has the disposition to turn red and peel after exposure to ultraviolet

light, over a certain range of conditions (e.g., amount of melanin in the individual's skin, and

exposure time).

What is distinctive about dispositional regularities (and possibly ceteris paribus laws

in general) is that one is entitled to ask, of the system in question, what it is in virtue of

which that system has the specified disposition. Strict or exceptionless laws, in contrast, are

brute; there is no further question as to how strict laws operate - they just do, because that

is the way the world is.

In-depth explanation of dispositional regularities, of how and why a given system has

certain dispositions, requires a search for more intrinsic properties in virtue of which the

system in question has those dispositions. In other words, dispositional regularities obtain in

virtue of certain distinctive facts about - in particular, properties of - the type of system

that has them. As Cummins puts the point (1983, p.18):

"The regularity associated with a disposition is a regularity that is special to the behavior of a

certain kind of object and obtains in virtue of some special facts about that kind of object."

An answer to the question of why salt is water soluble, for example, will appeal to the

properties of the molecular structure of salt. Salt manifests the disposition to dissolve in

water in virtue of having a certain sort of molecular composition. Likewise with many other

dispositional properties: an answer to the question of why a system manifests a disposition

will typically consist in a specification of that system's component parts, their functions and

organization; a specification of, in short, that system's microstructure. (For more on the

nature of dispositions, and of dispositional explanations, see, for example, the collected

essays in Crane, 1996.)

The idea that adequate explanations of the dispositional regularities of complex

entities require appeal to the components or microstructure of those entities is nicely

captured by Fodor's (e.g., 1974, 1989) views concerning the contrast between the "basic

sciences" (i.e., the various branches of physics) and the "special sciences" (e.g., geology,

chemistry, psychology, economics). Both the basic and the special sciences are, on Fodor's

view, in the business of providing laws that explain their proprietary phenomena. Basic

science laws are basic in the sense that nothing more is needed for causal explanation than

for it to be shown that the phenomena are subsumed under one or more fundamental laws. In

the case of special science laws, in contrast, there will always be a further story to tell about

how and why those special laws apply. It is just a brute fact of the nature of the universe that

basic science laws operate, but it is not a brute fact that special science laws operate. So the

buck of special science explanations does not stop with a statement of the appropriate laws.

Special science laws operate only in virtue of the reliable or proper functioning of

mechanisms that implement these laws, so explanations in the special sciences have to be

backed up by theories about how the operations of these mechanisms implement these laws.

Adequate explanations of behavioural and property dispositions are accounts of how,

or what it is in virtue of which, systems have those dispositions. The explanatory strategy

that generates accounts of the bases of dispositions is what I am calling decompositional

analysis.

2. DECOMPOSITIONAL ANALYSIS AS A RESEARCH STRATEGY IN COGNITIVE PSYCHOLOGY

Decompositional analysis is the analysis of systems in terms of their subsystems. Rather

obviously, the notion of a system and the notion of a subsystem are coupled. A subsystem is

a component of a system. A certain sort of circuit board is a subsystem of a CD player, and

heart valves are subsystems of hearts. Moreover, many single system-subsystem pairs will

themselves be a part of a chain of such pairs. That is to say, there will be a hierarchy of

subsystem-system relations, such that a system can itself be a subsystem of a larger system.

So a certain sort of circuit board is a subsystem of a CD player, which is in turn a subsystem

of a hi-fi system. And heart valves are subsystems of hearts, which are in turn subsystems of

circulatory systems, which are in turn subsystems of organisms. Likewise, neurons are

subsystems of neural circuits, which are in turn subsystems of the brain. [4]
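The chain of system-subsystem relations just described is, in effect, a tree. As a minimal sketch, each system below is recorded with its immediate subsystems, taken from the examples above. The dictionary representation and the name containing_systems are illustrative assumptions, not part of the original account. The sketch also assumes, for simplicity, that each part belongs to exactly one immediate whole. Walking upward from any part then recovers the chain of ever-larger systems it belongs to.

```python
# A minimal sketch of a part-whole hierarchy built from the examples in the text.
# Each key is a system; its value lists that system's immediate subsystems.
# Representing the hierarchy as a dict is an illustrative assumption.
HIERARCHY: dict[str, list[str]] = {
    "hi-fi system": ["CD player"],
    "CD player": ["circuit board"],
    "organism": ["circulatory system", "brain"],
    "circulatory system": ["heart"],
    "heart": ["heart valve"],
    "brain": ["neural circuit"],
    "neural circuit": ["neuron"],
}


def containing_systems(part: str) -> list[str]:
    """Return the chain of ever-larger systems of which `part` is a subsystem."""
    # Invert the mapping so that each subsystem points to its immediate whole.
    whole_of = {sub: whole for whole, subs in HIERARCHY.items() for sub in subs}
    chain: list[str] = []
    while part in whole_of:
        part = whole_of[part]
        chain.append(part)
    return chain


print(containing_systems("heart valve"))    # ['heart', 'circulatory system', 'organism']
print(containing_systems("circuit board"))  # ['CD player', 'hi-fi system']
```

Any node in the structure is at once a system, relative to the parts listed under it, and a subsystem, relative to the whole above it. This is the coupling of the two notions noted above.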

This way of viewing a system as itself a subsystem of a larger system need not stop

with medium-sized objects such as hi-fi systems and organisms. A galaxy is a system

composed of stars and planets, and galaxies are in turn subsystems of the universe.

Similarly, atoms are systems composed of subatomic particles, but atoms are also

subsystems of molecules.

Decompositional analysis is a powerful and widely used method in many sciences for

explaining the properties and capacities of complex systems. Its central ideas underpinned

the development of the mechanical and engineering sciences, and its use is especially

evident in the biological and psychological sciences (Bechtel & Richardson, 1993; Wimsatt,

1976). Indeed, it was in the mechanical and engineering sciences that decompositional

analysis was first crystallized and most fully developed. Not only was the behaviour of machines, and

later, electronic computers, more easily disclosed and understood from the perspective of

this mode of explanation, but it was also the basic principle upon which these machines were

designed (e.g., Wimsatt, 1976). So to the extent that this explanatory strategy has been

central to cognitive psychology, the engineering and computing sciences have been

significant historical contributors to that discipline (e.g., Knapp, 1986). Indeed, cognitive

psychological explanation, qua decompositional analysis, is sometimes described as a case

of 'reverse engineering' (e.g., Dennett, 1990, 1994, 1995). Engineers construct machines

from a plan or design that specifies what the machine is to do and how it is to do it,

including what components it will have such that it is able to do those things. A reverse

engineer, in contrast, tries to work out the largely or entirely unknown design of a machine

by analyzing or decomposing it. A reverse engineer supposes that the machine in question is

composed of parts, and that those parts realize certain functions. She attempts to explain

how it is that the system does what it does in terms of its parts and/or their respective

functions.

Via decompositional analysis, one can explain how it is that a system exhibits certain

dispositional regularities, that is, explain what it is in virtue of which a system has certain

capacities and properties. The central idea of this scheme is that characteristics of whole

systems can be explained in terms of characteristics of parts of those whole systems.

(Similarly, performances of whole tasks can be explained in terms of performances of parts

of those tasks, and complex events can be understood as series of connected simpler events.)

This explanatory task is described by Cummins (1983) as consisting of an analysis of a

system, S, that explains what it is for that system to have a capacity or property, P, "by

appeal to the properties of S's components and their mode of organization" (p.15). Cummins

continues (1983, p.15):

"The process often has as a preliminary stage an analysis of P itself into properties of S or S's

components. This step will loom large ... [in cases where the Ps are] complex dispositional

properties such as information-processing capacities."

My task in this section is to elucidate the way this explanatory methodology is employed in

cognitive psychology.

In what follows, I shall use the term 'cognitive psychology' to refer to that branch of

psychology that is characterized by the information-processing approach. This is a

mechanistic approach to psychology whose primary object of study is the nervous system,

and in particular, the brain, viewed as an abstract information-processing system. As such,

the information-processing approach should be distinguished from another strand of

cognitive psychology, which is the study of cognition and perception, strictly so called. What

characterizes the second strand is the emphasis on psychological capacities and properties of

persons, such as perception, thinking, reasoning and problem solving. Cognitive psychologists of

this second stripe seek explanations of such properties and capacities of people in terms of other

capacities and properties of those people. So, for example, Tversky and Kahneman (1974)

investigated how people reason under uncertainty. They sought to explain how "people

assess the probability of an uncertain event or the value of an uncertain quantity" (p.1124).

Tversky and Kahneman suggested that

"people rely on a limited number of heuristic principles which reduce the complex tasks of

assessing probabilities and predicting values to simpler judgmental operations" (p.1124).

One such proposed heuristic is that of "availability", according to which

"people assess the frequency of a class or the probability of an event by the ease with which

instances or occurrences can be brought to mind" (p.1127).

(The distinction between the level at which persons are characterized and the level at which

parts of persons, such as the brain and its components, are characterized, will be discussed

in Section 4.)

In contrast to proposals of this sort, information-processing psychologists seek to

explain how it is that humans have certain capacities and properties and can engage in

certain activities, including those capacities, properties and activities studied by cognitive

psychologists of the second stripe (hence the two strands of cognitive psychology are

somewhat interrelated). Instead of asking questions about what people do, such as what

strategies are used when reasoning under uncertainty, information-processing psychologists

ask questions such as: What faculties or mechanisms underlie the ability to reason? How is it

that humans (and other animals) can see, hear, feel, taste and smell the world in the way that

they do? How is it that humans so readily develop the ability to speak and understand, and

usually read and write, language? How is it that people can recognize the faces of family and

friends?

The information-processing psychologist answers such 'How is it that ...?' questions by

saying roughly this: "People have these capacities and properties in virtue of having a brain

that is principally an information processor. The brain enables a person to have these

capacities and properties in virtue of its having such-and-such information-processing

components operating in such-and-such a way." Answers of this sort are proposals about the

functional architecture of the mind or brain. While the brain is the central object of study of

the information-processing approach, psychologists who take this approach tend not to look

at the structures and operations of the brain directly. Rather, they proffer theories about the

information-processing architecture of the brain that are somewhat abstracted from

neuroanatomy and neurophysiology. The thought is that a legitimate and useful form of

psychological theory is one that attempts to explain the capacities and behaviour of humans

in terms of a view of the brain as a complex of subsystems that are defined by what they do,

not by what they are made of.

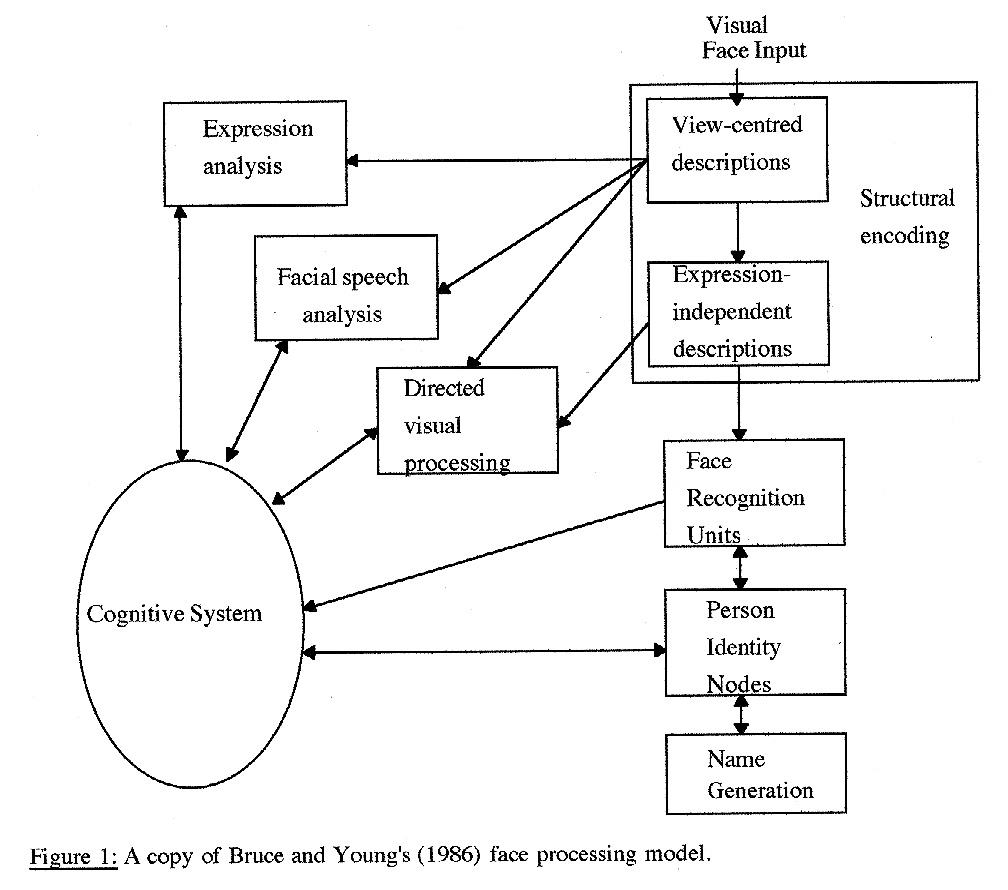

Consider one well-known model of face processing from the mid-1980s (Bruce &

Young, 1986). The authors depict this model as a 'box-and-arrow' diagram (Figure 1).

Box-and-arrow diagrams or flowcharts are very useful devices for depicting the individual stages

of a process, and the order or flow of these stages. The boxes depict operations, processes or

functions, but the theorist typically makes few if any commitments as to what it is that

performs those functions. When such diagrams are used to depict the operations of

information-processing systems, the boxes represent the functions of information processors,

memory stores, sensory transducers, output mechanisms, and the like. For example, the

'expression analysis' box and the 'facial speech analysis' box of Figure 1 are functionally

defined information processors, the 'face recognition units' and 'person identity nodes' boxes

are essentially functionally defined memory mechanisms, and the 'name generation' box is a

functionally defined output mechanism. Accordingly, the arrows in this type of model

represent the flow of information between information-processing components, that is, they

specify which outputs get to be which inputs. In other words, arrows represent the order in

which the identified processes or functions are or can be done: one process comes after

another because it depends on the output of the other process.
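The idea that arrows specify "which outputs get to be which inputs" can be made concrete by treating a box-and-arrow model as a directed graph and deriving an admissible processing order from it. The sketch below uses a simplified, partial subset of box labels from Bruce and Young's (1986) model. The particular connections are an illustrative assumption and do not reproduce the full model in Figure 1. The sketch uses `TopologicalSorter` from Python's standard `graphlib` module (Python 3.9+), which takes a mapping from each node to its predecessors.

```python
from graphlib import TopologicalSorter

# Each box maps to the set of boxes whose outputs it takes as input
# (its incoming arrows). The connections are a simplified, assumed subset.
MODEL: dict[str, set[str]] = {
    "structural encoding": set(),
    "expression analysis": {"structural encoding"},
    "facial speech analysis": {"structural encoding"},
    "face recognition units": {"structural encoding"},
    "person identity nodes": {"face recognition units"},
    "name generation": {"person identity nodes"},
}


def processing_order(model: dict[str, set[str]]) -> list[str]:
    """Return one order in which the boxes can operate, such that each box
    comes after every box whose output it depends on."""
    return list(TopologicalSorter(model).static_order())


print(processing_order(MODEL))
```

Only the dependencies are fixed. Boxes with no arrow between them, such as expression analysis and facial speech analysis, may come in either order. This matches the reading of arrows given above: one process comes after another only because it depends on that process's output. Nothing in the graph says what physically performs each box.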

In short, cognitive psychology, in the sense intended here, is information-processing psychology. On this view, the

canonical conception of the internal structure and operation of the brain as an information

processor is of a structured system of component information processors, memory stores,

sensory transducers and the like. What I shall now do is elucidate my view that this

explanatory strategy in cognitive psychology consists of the use of three types of

decompositional analysis. [5] In doing so, I shall highlight the dominant role of one of these

varieties, namely, functional analysis.

3. VARIETIES OF DECOMPOSITIONAL ANALYSIS

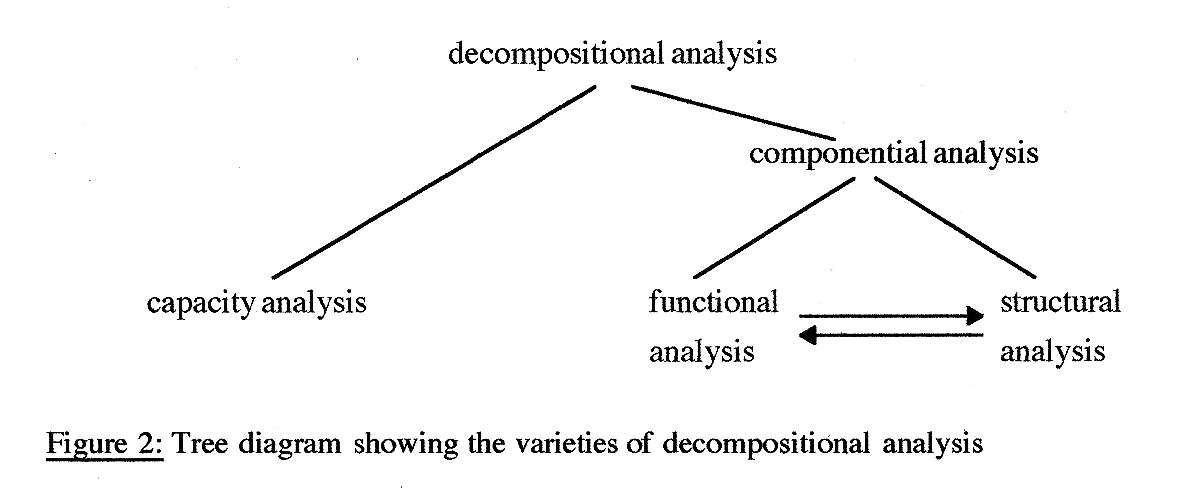

There are two (ideally interacting) explanatory paths within decompositional analysis, as

follows (see Figure 2).

(1) Capacity analysis. A capacity analysis involves the specification of a capacity of a whole

system, and the parsing of this capacity into subcapacities of the whole system. A capacity

analysis is an attempt to answer the question, How does a creature perform a complex task?

The general form of the answer provided by a capacity analysis is that the whole creature

performs the complex task by virtue of performing a set of simpler tasks. In short, a task that

a whole system has the ability to perform is decomposed into subtasks that that whole

system has the ability to perform. So, for instance, the task of baking a cake might get

decomposed into a number of more specific tasks that the cake baker performs, such as

measuring the ingredients and then mixing them. Another example: An organism's capacity

to secure a mate might get broken down into the capacities of producing a mating call,

showing off some plumage, and fending off rivals.

How do you bake a cake? You read and follow a recipe which tells you to mix

such-and-such ingredients in such-and-such quantities and then put the mixture in an oven at

such-and-such a temperature. This is a story about you, about you as a reader and follower of a

recipe, about you as a baker of cakes. The details of that story are not parasitic upon an

account of the information-processing mechanisms in your brain that enable you to read the

recipe and bake the cake. That is, although there must be some story to be told about the

information-processing machinery that enables you to do these tasks, the system-level story

does not necessitate any particular account of the information-processing substrate.

Similarly for other examples of capacity analyses: in general, a capacity analysis is a

descriptive and explanatory story about a system, without reference to that system's

subsystems. That is, capacity analyses are neutral with respect to mechanism. As Cummins

says (1983, pp.29-30):

"Since we do this sort of analysis [i.e., capacity analysis, in my terminology] without reference to

an instantiating system, the analysis is evidently not an analysis of an instantiating system. The

analyzing capacities are conceived as capacities of the whole system. ... My capacity to multiply

27 times 32 analyzes into the capacity to multiply 2 times 7, to add 5 and 1, etc. These capacities

are not (as far as is known) capacities of my components; indeed, this analysis seems to put no

constraints at all on my componential analysis."

(2) Componential analysis. The starting point for a componential analysis is some

capacity or set of capacities of a whole system, and the goal is to explain how it is that the

whole system has that capacity or set of capacities in virtue of having certain component

mechanisms with a particular structure and operation. So a componential analysis starts from

a look at a whole system and moves to hypotheses about that system's parts. At the same

time, causal explanation is seen to proceed in the opposite direction, from parts to wholes.

That is to say, the analysis of a system into subsystems is grounded on the assumption that

those subsystems act and interact to cause the whole system's behaviour.

There are two types of componential analysis (see Figure 2). To attempt to delineate a

complex of processes or functions performed by one or more as yet unspecified material

components of a system is to engage in (2a) functional analysis. And to attempt to delineate

the material components or substrate of a system is to engage in (2b) structural analysis.

Although these two types of componential analysis are distinct, there is, in practice, a close

interplay between them (as indicated by the arrows in Figure 2).

Functional analysis is the type of decompositional analysis that produces the standard

form of box-and-arrow diagrams in cognitive psychology. A functional analysis is a

specification of (i) what gets done by one or more components of a system, without

commitment as to the material composition of those components, and (ii) the order of and

relations between these functions or effects (i.e., a process, where the specified functions or

effects are stages in that process). As such, a functional analysis can serve as an explanation

of how it is that the system under investigation has a certain capacity or property. More

specifically, a functional analysis provides an explanation of a system-level capacity or

property insofar as it proposes that the system of interest has a certain functional architecture

that enables it to have the identified capacity or property.

A structural analysis, in contrast, involves breaking down a system into subsystems

defined by their material structure, and specifying the organization of and relations between

these subsystems. Structural analyses yield descriptions of the material composition of a

system, and it is to such descriptions that one must turn if one wants to know what material

apparatus implements the functions and processes detailed in a functional analysis, and how

it does so. (Bearing in mind, of course, that a structural analysis might cut at

very different boundaries from a functional analysis, a point taken up below.) Neuroscientists are in the business of

carrying out structural analyses of the brain. (Though most are in the business of carrying out

functional analyses too; that is, structural and functional analyses tend to be intertwined in

neuroscientific research.) For example, many of the structures that underlie vision have been

identified, including separate cortical areas specialized for such visual capacities as the

perception of depth, form, colour and movement (see e.g., Cowey, 1985; Van Essen &

Deyoe, 1995; Zeki, 1992, 1993, for reviews).

Cognitive psychologists are in the business of carrying out functional analyses, insofar

as they proffer box-and-arrow models as explanations of the capacities of humans, where

those models specify functionally defined components of the brain's information-processing

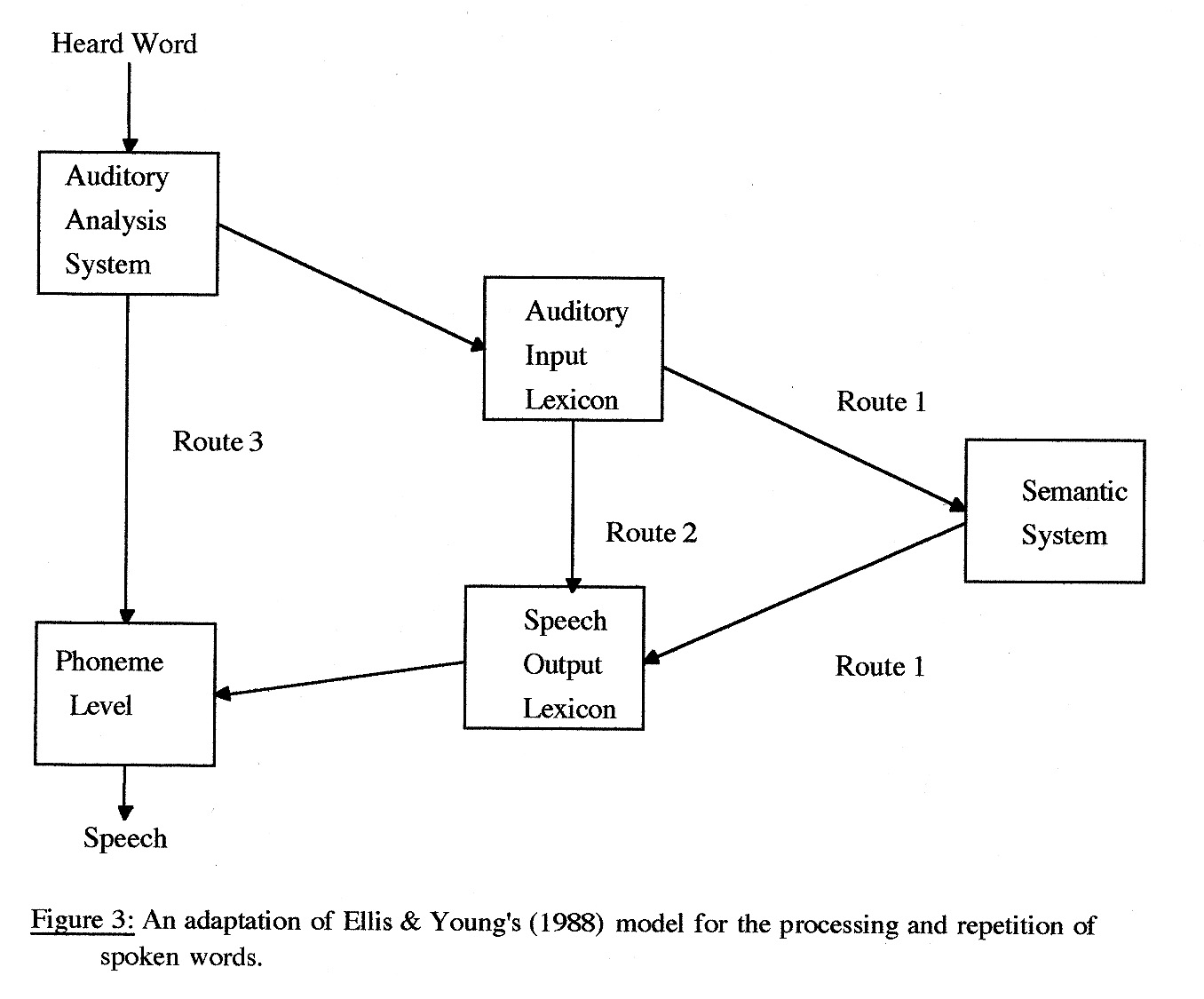

machinery. Consider, for example, the human ability to shadow speech (i.e., the ability to

repeat immediately what is spoken to one). Cognitive psychologists have proposed a

rudimentary functional analysis of this system-level capacity according to which there are

three routes from auditory input to speech output (see Ellis & Young, 1988; and Figure 3).

Each route consists of a small series of distinct information-processing operations. One

route, for example ('Route 2' in Figure 3), consists in the following four operations: (a) the

extraction of individual speech sounds (e.g., phonemes) from the sound wave, (b) the

registration of familiar spoken words, (c) the conversion of the representations of the

auditory form of words to representations of the spoken form of words, and (d) the

segmentation of the representations of the spoken form of whole words into representations

of individual speech sounds (e.g., phonemes). The general proposal is that the system-level

capacity of shadowing speech is realized in virtue of the performance of the

information-processing functions specified in any one of the three routes. It is assumed that the

distinction between these three routes will be reflected in the neural substrate, but that, for

the purposes of the functional analysis, it does not matter exactly what that substrate is.
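The four operations of Route 2 can be sketched as a composition of functions in which each operation takes the previous operation's output as its input. Everything below is a toy assumption for illustration. Speech sounds are represented as phoneme symbols, the incoming 'sound wave' is simulated as an already segmented list, and the two stores of familiar words are tiny dictionaries. The function and lexicon names are hypothetical and are not taken from Ellis and Young (1988).

```python
# Toy stand-ins for stored knowledge of familiar words (hypothetical contents).
AUDITORY_INPUT_LEXICON = {("k", "a", "t"): "CAT", ("d", "o", "g"): "DOG"}
SPEECH_OUTPUT_LEXICON = {"CAT": "k-a-t", "DOG": "d-o-g"}


def extract_speech_sounds(sound_wave: list[str]) -> tuple[str, ...]:
    """(a) Extract individual speech sounds; here the 'wave' is pre-segmented."""
    return tuple(sound_wave)


def register_familiar_word(phonemes: tuple[str, ...]) -> str:
    """(b) Register a familiar spoken word (KeyError if the word is unfamiliar)."""
    return AUDITORY_INPUT_LEXICON[phonemes]


def to_spoken_form(word: str) -> str:
    """(c) Convert the auditory form of the word to its spoken form."""
    return SPEECH_OUTPUT_LEXICON[word]


def segment_spoken_form(spoken: str) -> list[str]:
    """(d) Segment the spoken form of the whole word into individual speech sounds."""
    return spoken.split("-")


def shadow_via_route_2(sound_wave: list[str]) -> list[str]:
    """Shadow one word by running operations (a) to (d) in order."""
    phonemes = extract_speech_sounds(sound_wave)  # (a)
    word = register_familiar_word(phonemes)       # (b)
    spoken = to_spoken_form(word)                 # (c)
    return segment_spoken_form(spoken)            # (d)


print(shadow_via_route_2(["k", "a", "t"]))  # ['k', 'a', 't']
```

The sketch is neutral about substrate in the way the functional analysis is. Each function is defined only by what it does and where its output goes. In this toy, an unfamiliar input simply fails at step (b). Such inputs would be a matter for the other routes, which are not sketched here.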

Moreover, the functionally defined components produced by a functional analysis need

not match up, one-to-one, with the anatomically defined components produced by a

structural analysis. The positing of functionally defined components is not meant to imply

spatial localization; the 'components' featuring in such accounts are not geographical units,

but rather, are units defined solely by some function. Of course it might be the case that some

functional components map neatly onto individual geographical components or locations, but

this need not be so for all cases. It is entirely possible for a distinct functional component to

be implemented by widely dispersed (and possibly diverse) physical components.

Conversely, it is possible for a single physical component to implement more than one

distinct function. To take some simple examples: birds' wings can act as thermo-regulators as

well as instruments of flight, the human tongue has roles in eating, tasting and talking, and a

knife can be used for both cutting and spreading. And in a system as complex as the brain,

there is likely to be much "multiple, superimposed functionality", as Dennett (1991, p.273)

puts it. That is, it is likely to be the case that the mapping of functions to neural structures

will be a complex, multi-layered affair.
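The many-to-many relation between functions and physical components can be illustrated by recording which functions each component serves, and then inverting that record. The first three entries below are the examples just given. "spatula" is a hypothetical extra entry, added only so that one function ends up with more than one implementer. The mapping format and function names are illustrative assumptions.

```python
from collections import defaultdict

# Which functions each physical component serves (first three entries from the text;
# "spatula" is a hypothetical addition).
FUNCTIONS_OF: dict[str, set[str]] = {
    "bird's wing": {"flight", "thermoregulation"},
    "human tongue": {"eating", "tasting", "talking"},
    "knife": {"cutting", "spreading"},
    "spatula": {"spreading"},
}


def implementers(functions_of: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert a component -> functions mapping into a function -> components mapping."""
    result: defaultdict[str, set[str]] = defaultdict(set)
    for component, functions in functions_of.items():
        for function in functions:
            result[function].add(component)
    return dict(result)


def is_one_to_one(functions_of: dict[str, set[str]]) -> bool:
    """True only if every component has one function and every function one component."""
    by_function = implementers(functions_of)
    return all(len(fs) == 1 for fs in functions_of.values()) and all(
        len(cs) == 1 for cs in by_function.values()
    )


print(sorted(implementers(FUNCTIONS_OF)["spreading"]))  # ['knife', 'spatula']
print(is_one_to_one(FUNCTIONS_OF))                       # False
```

A functional analysis and a structural analysis of the same system would line up neatly only if `is_one_to_one` held. The examples above already show that it need not.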

So functional analysis is to be clearly distinguished from structural analysis. Functional

analysis, like structural analysis, involves parsing a system into constituent mechanisms. In

structural analysis, mechanisms are individuated along the boundaries of physically defined

units (e.g., anatomically distinct neural circuits), whereas in functional analysis, mechanisms

are individuated along boundaries that distinguish individual processes or functions.

Functional analysis is also to be clearly distinguished from capacity analysis.

Functional analyses are accounts of processes, of things done and of the order in which they

are done, and so bear some resemblance to capacity analyses. But whereas capacity analysis

is concerned with what it is that whole systems do or can do, functional analysis is concerned

with the things that parts of whole systems do that enable those whole systems to have

certain capacities and properties. In short, capacity analysis is concerned with the

dispositions of whole systems, whereas functional analysis is concerned with the bases of

those dispositions. (Structural analysis is also often concerned with the bases of

dispositions.)
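Cummins's multiplication example, quoted in the discussion of capacity analysis above, can be sketched in code to show what it means for a capacity analysis to be neutral with respect to mechanism. The function below multiplies two non-negative integers using only the subcapacities of a pencil-and-paper method: single-digit products, additions, and shifts by place value. Carrying is folded into ordinary addition for brevity. The decomposition is an illustrative assumption about one way of multiplying. The names are hypothetical, and nothing in the code says which components, neural or otherwise, carry out the steps.

```python
def single_digit_product(a: int, b: int) -> int:
    """Subcapacity: multiply two single digits, e.g. by recalling a times table."""
    if not (0 <= a <= 9 and 0 <= b <= 9):
        raise ValueError("single_digit_product expects digits 0-9")
    return a * b


def long_multiply(x: int, y: int) -> int:
    """Capacity: multiply two non-negative integers using only single-digit
    products, additions, and shifts by place value (powers of ten)."""
    total = 0
    for i, digit_y in enumerate(reversed(str(y))):      # digits of y, units first
        partial = 0
        for j, digit_x in enumerate(reversed(str(x))):  # digits of x, units first
            partial += single_digit_product(int(digit_x), int(digit_y)) * 10 ** j
        total += partial * 10 ** i                      # shift the partial product left
    return total


print(long_multiply(27, 32))  # 864
```

Every subtask here is something the whole multiplier does. The sketch fixes which simpler tasks are performed and in what order, but it is silent about the mechanisms that perform them. That silence is the sense in which such an analysis places few if any constraints on a componential analysis.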

4. A MISTAKE TO AVOID, AND A POSSIBLE LIMITATION

To recapitulate: Given that an organism (or more generally, a whole system) has a certain

property or capacity, two questions that one can ask of it are these: (1) What other properties

or capacities does the whole organism have in virtue of which it has the first property or

capacity? (2) What is the material and/or functional composition of the whole organism in

virtue of which it has the specified property or capacity; that is, roughly, what structures and

processes inside the organism enable that whole organism to do the things that it can do?

Questions of type (1) can be answered by conducting capacity analyses, while questions of

type (2) can be answered by conducting componential analyses (i.e., functional and

structural analyses).

When we are doing decompositional analysis, especially functional analysis, there is

a general error that we need to take particular care to avoid, but which is not a mistake that

could be pinned to particular claims about subsystems. The error is simply assuming,

without evidence, that there is a box for every capacity of a whole system. In other words, it

is a mistake to assume that, for any capacity of a whole system, one can posit a

corresponding information-processing subsystem that purportedly underpins that capacity.

We can perceive espresso machines, coffee cups and cakes, for example, but there is

abundant evidence showing that we do not have separate espresso machine, coffee cup and

cake perceptual systems. [6]

Capacity analyses are neutral with respect to mechanism. As we have seen (Section

3), this is a point that Cummins (1983) is well aware of. When we conduct a capacity

analysis, Cummins says, we do so "without reference to an instantiating system" (p.29). The

capacities featuring in the analysis are ascribed to the whole system, not to parts of that

system; "indeed, this analysis seems to put no constraints at all on ... componential analysis."

(p.30). A given componential analysis will quite probably be consistent with various

capacity analyses, and a given capacity analysis will allow for various componential

analyses. Thus, the claim that, for a given capacity or property specified in a capacity

analysis, there is a corresponding subsystem identified in the componential analysis that

subserves that capacity or property, is bold and quite likely false. That is, it is quite probable

that the boundaries of many of the capacities of certain whole systems, such as the human

organism or its brain, do not line up with the boundaries of the subsystems specified in

functional (or structural) analyses of those systems.

Is Cummins right to claim that a capacity "analysis seems to put no constraints at all on

... [a] componential analysis"? While I agree that this is true in general, it is nevertheless my

view that a certain type of capacity analysis can constrain componential analyses. Although I

shall not present the argument here, it is my view that accurate information-processing

theories are more easily obtainable, and mistaken theories more easily avoided, if

decompositional analyses are motivated by evolutionary considerations. In short, the view is

that evolutionary biology is an important, or even crucial, source of guidance and constraint

for componential analyses in psychology (see e.g., Atkinson, 1997; Cosmides, Tooby &

Barkow, 1992; Cosmides & Tooby, 1994).

I finish the paper by briefly considering a possible limitation on the explanatory

scope of decompositional analyses in psychology. This limitation centres upon what has

become known as the personal/subpersonal distinction, that is, the distinction between the

level at which we talk of the individual human being or person, and the level at which we

talk of organs and other body parts, especially the brain. This distinction was vividly

captured by Dennett (1969, pp.93-94):

So functional analysis is to be clearly distinguished from structural analysis. Functional

analysis, like structural analysis, involves parsing a system into constituent mechanisms. In

structural analysis, mechanisms are individuated along the boundaries of physically defined

units (e.g., anatomically distinct neural circuits), whereas in functional analysis, mechanisms

are individuated along boundaries that distinguish individual processes or functions.

Functional analysis is also to be clearly distinguished from capacity analysis.

Functional analyses are accounts of processes, of things done and of the order in which they

are done, and so bear some resemblance to capacity analyses. But whereas capacity analysis

is concerned with what it is that whole systems do or can do, functional analysis is concerned

with the things that parts of whole systems do that enable those whole systems to have

certain capacities and properties. In short, capacity analysis is concerned with the

dispositions of whole systems, whereas functional analysis is concerned with the bases of

those dispositions. (Structural analysis is also often concerned with the bases of

dispositions.)

4. A MISTAKE TO AVOID, AND A POSSIBLE LIMITATION

To recapitulate: Given that an organism (or more generally, a whole system) has a certain

property or capacity, two questions that one can ask of it are these: (1) What other properties

or capacities does the whole organism have in virtue of which it has the first property or

capacity? (2) What is the material and/or functional composition of the whole organism in

virtue of which it has the specified property or capacity; that is, roughly, what structures and

processes inside the organism enable that whole organism to do the things that it can do?

Questions of type (1) can be answered by conducting capacity analyses, while questions of

type (2) can be answered by conducting componential analyses (i.e., functional and

structural analyses).

When we are doing decompositional analysis, especially functional analysis, there is

a general error that we need to take particular care to avoid, one that cannot be pinned to

any particular claim about subsystems. The error is simply assuming,

without evidence, that there is a box for every capacity of a whole system. In other words, it

is a mistake to assume that, for any capacity of a whole system, one can posit a

corresponding information-processing subsystem that purportedly underpins that capacity.

We can perceive espresso machines, coffee cups and cakes, for example, but there is

abundant evidence showing that we do not have separate espresso machine, coffee cup and

cake perceptual systems. [6]

Capacity analyses are neutral with respect to mechanism. As we have seen (Section

3), this is a point that Cummins (1983) is well aware of. When we conduct a capacity

analysis, Cummins says, we do so "without reference to an instantiating system" (p.29). The

capacities featuring in the analysis are ascribed to the whole system, not to parts of that

system; "indeed, this analysis seems to put no constraints at all on ... componential analysis"

(p.30). A given componential analysis will quite probably be consistent with various

capacity analyses, and a given capacity analysis will allow for various componential

analyses. Thus, the claim that, for a given capacity or property specified in a capacity

analysis, there is a corresponding subsystem identified in the componential analysis that

subserves that capacity or property, is bold and quite likely false. That is, it is quite probable

that the boundaries of many of the capacities of certain whole systems, such as the human

organism or its brain, do not line up with the boundaries of the subsystems specified in

functional (or structural) analyses of those systems.
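The point that capacity analyses are neutral with respect to mechanism can be put schematically. The Python sketch below is a toy illustration only, not a method proposed by Cummins or in this paper. The three capacities come from the espresso example, but the subsystem names ("edges", "shape", "colour", "object recognition") and the helper functions are hypothetical placeholders, not claims about how the visual system is actually organized. Two componential analyses are checked against the same capacity analysis. Both are consistent with it, but only one posits a box for every capacity.

```python
from collections import defaultdict

# Capacity analysis: capacities ascribed to the whole system, with no
# reference to any instantiating mechanism.
CAPACITIES = {"perceive espresso machine", "perceive coffee cup", "perceive cake"}

# Two hypothetical componential analyses. Each maps every capacity to the set
# of subsystems that jointly subserve it. All subsystem names are placeholders.
ONE_BOX_EACH = {c: {c + " system"} for c in CAPACITIES}
SHARED = {
    "perceive espresso machine": {"edges", "shape", "colour", "object recognition"},
    "perceive coffee cup": {"edges", "shape", "object recognition"},
    "perceive cake": {"edges", "colour", "object recognition"},
}


def consistent_with(analysis, capacities):
    """True if the analysis covers exactly these capacities, each realized by something."""
    return set(analysis) == set(capacities) and all(analysis.values())


def dedicated_subsystems(analysis):
    """For each capacity, the subsystems that serve that capacity and no other."""
    served = defaultdict(set)
    for capacity, subsystems in analysis.items():
        for s in subsystems:
            served[s].add(capacity)
    return {c: {s for s in subs if served[s] == {c}} for c, subs in analysis.items()}


def box_for_every_capacity(analysis):
    """The assumption warned against: every capacity has a subsystem of its own."""
    return all(dedicated_subsystems(analysis).values())


for name, analysis in [("one box each", ONE_BOX_EACH), ("shared", SHARED)]:
    print(name, consistent_with(analysis, CAPACITIES), box_for_every_capacity(analysis))
```

Run as a script, it prints `one box each True True` and then `shared True False`. Nothing in the capacity analysis decides between the two mappings; whether a given capacity has a dedicated subsystem is a further, empirical question about the componential analysis.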

Is Cummins right to claim that a capacity "analysis seems to put no constraints at all on

... [a] componential analysis"? While I agree that this is true in general, I nevertheless hold

that a certain type of capacity analysis can constrain componential analyses. Although I

shall not present the argument here, I contend that accurate information-processing

theories are more easily obtainable, and mistaken theories more easily avoided, if

decompositional analyses are motivated by evolutionary considerations. In short, the view is

that evolutionary biology is an important, or even crucial, source of guidance and constraint

for componential analyses in psychology (see e.g., Atkinson, 1997; Cosmides, Tooby &

Barkow, 1992; Cosmides & Tooby, 1994).

I finish the paper by briefly considering a possible limitation on the explanatory

scope of decompositional analyses in psychology. This limitation centres upon what has

become known as the personal/subpersonal distinction, that is, the distinction between the

level at which we talk of the individual human being or person, and the level at which we

talk of organs and other body parts, especially the brain. This distinction was vividly

captured by Dennett (1969, pp.93-94):

"When we have said that a person has a sensation of pain, locates it and is prompted to react in a

certain way, we have said all there is to say within the scope of this vocabulary. We can demand

further explanation of how a person happens to withdraw his hand from the hot stove, but we

cannot demand further explanations in terms of 'mental processes'. Since the introduction of

unanalysable mental qualities leads to a premature end to explanation, we may decide that such

introduction is wrong, and look for alternative modes of explanation. If we do this we must

abandon the explanatory level of people and their sensations and activities and turn to the sub-

personal level of brains and events in the nervous system. But when we abandon the personal level

in a very real sense we abandon the subject matter of pains as well. When we abandon mental

process talk for physical process talk we cannot say that the mental process analysis [my

emphasis] of pain is wrong, for our alternative analysis [my emphasis] cannot be an analysis of

pain at all, but rather of something else - the motions of human bodies or the organization of the

nervous system."

To a first approximation, then, personal-level phenomena are those picked out in the

conceptual scheme of commonsense or folk psychology (mental states and processes, in

short). Subpersonal-level phenomena, in contrast, are those picked out by the conceptual

scheme of the biological sciences, and in particular, by the brain and cognitive sciences.

Notice that, in the final sentence of the above passage, Dennett identifies (and, it

seems, runs together) two different types of analysis. One of these is conceptual analysis, the

other is what I call componential analysis. Conceptual analysis involves philosophical or

conceptual definition. The idea is that a concept is constituted by, or defined in terms of, a

set of different concepts. For example, the concept of bachelor is defined in terms of the

concepts of unmarried and of man. Componential analysis, in contrast, implies material or

functional constitution, as we have seen (Section 3).

Dennett points out that there is a limit on the scope or depth of the conceptual

analysis of mental state types. The thought is that there is only so far one can go with a

conceptual analysis of, for example, pain; a point will be reached where all that is said is all

that can be said at that level (i.e., the personal level). And the above passage suggests that,

for Dennett, there is more to a complete explanatory story of a personal-level capacity or

property than a conceptual analysis can provide.

The overall explanatory story is to be made more complete by the provision of a

componential analysis. The performance of a componential analysis is the performance of a

very different type of explanatory project, which requires the abandonment of one level of

description and explanation - the personal level - for another, very different level - the

subpersonal level.

What can subpersonal sciences, such as information-processing psychology, tell us

about persons? It is thought by some (e.g., Hornsby, 1997; McDowell, 1994a, 1994b; Sellars,

1963) that our everyday, folk psychological understanding of humans qua persons cannot be

assimilated into a scientific understanding of humans qua natural systems. On this view,

cognitive psychology and neuroscience may be able to inform the study of persons -

namely, by specifying the neurological and information-processing entities and events that

enable humans to have the capacities and properties that feature in folk psychological

discourse - but no natural science can tell us what it is to be a person.

McDowell (1994a) applauds Dennett's distinction between the personal and

subpersonal levels, but suggests that the essence of the distinction, as it should be drawn, is

one between organisms and their parts, not between persons and non-persons. For

McDowell, what marks the distinction between an organism and its parts is that an organism

is a system that we describe as "more or less competently inhabiting an environment"

(p.196). "In this context," McDowell continues (1994a, p.196):

"we ask questions like the following: what features of the environment would a creature need to

become informed of, in order to live in it with precisely the competence that ... [those particular

creatures] display?"

Questions of this sort are to be distinguished from questions of a second kind that can be

asked about an organism that competently inhabits an environment, namely, questions about

the nature and operation of the organism's parts that enable the organism to live competently

in an environment. So, for example, what enables an organism to become informed

of features of its environment is its perceptual equipment. And the question of

how it is that an organism is informed of features of its environment will be answered by

accounts of how that perceptual equipment works.

Questions of the first kind are examples of what McDowell calls "constitutive"

questions. Questions of the second kind are examples of what he calls "enabling condition"

questions. As I see this distinction, constitutive questions are to be answered by conceptual

analysis, whereas enabling condition questions are to be answered by componential analysis.

In advancing this distinction between constitutive and enabling condition accounts,

McDowell has given us reason to separate clearly talk of organisms from talk of their parts.

On his view, to characterize organisms, including human organisms, as "more or less

competently inhabiting an environment" (1994a, p.196) is to characterize them as minded

creatures, that is, in folk psychological terms. What McDowell's view amounts to, then, is

that the personal or 'organismal' level is set apart from subpersonal or 'suborganismal' levels

of description and explanation - that is, from the system and subsystem levels - to the

extent that consummate constitutive accounts of these 'higher-level' phenomena do not

require the inclusion of facts about, for example, the brain. Indeed, while McDowell admits

that system-level and subsystem-level facts about the brain and its evolution may well help

shed light upon personal-level phenomena, he nevertheless holds that such facts are

irrelevant to constitutive accounts of those phenomena. On his view, enabling condition

theories, including cognitive scientific theories, do not tell us what it is to be a minded

creature; only constitutive accounts can tell us that. In sum, componential analyses cannot be

used to explain truly personal-level phenomena, that is, what is picked out by the concepts that feature in conceptual

analyses of mental states and processes. There are two distinct types of analysis, and one

cannot subsume the other.

One might take McDowell's view as a serious challenge to the explanatory scope and

power of cognitive psychology. I do not. There is a clear distinction between the sorts of

question one can ask at the personal and subpersonal levels, as McDowell is at pains to

emphasize. Consider, for example, attempts to explain consciousness. Questions such as

'What is it for a person to be conscious, or to be in conscious states?' are clearly personal-level

questions. Cognitive psychologists do not attempt to answer questions of this sort. But

they do attempt to answer questions concerning the enabling conditions for consciousness,

such as 'What is it in virtue of which a person can be conscious, or be in conscious states?'

REFERENCES

Atkinson, A. P. (1997). Consciousness boxes and cognitive psychological explanation.

Unpublished doctoral thesis, Oxford University.

Bechtel, W., & Richardson, R. C. (1993). Discovering complexity: Decomposition and

localization as strategies in scientific research. Princeton, NJ: Princeton University

Press.

Bruce, V., & Young, A. (1986). Understanding face recognition. British Journal of

Psychology, 77, 305-27.

Churchland, P. S. (1986). Neurophilosophy: Toward a unified science of the mind/brain.

Cambridge, MA: MIT Press/ Bradford Books.

Cosmides, L., & Tooby, J. (1994). Beyond intuition and instinct blindness: Toward an

evolutionarily rigorous cognitive science. Cognition, 50, 41-77.

Cosmides, L., Tooby, J., & Barkow, J. H. (1992). Introduction: Evolutionary psychology and

conceptual integration. In J. H. Barkow, L. Cosmides, and J. Tooby (Eds.), The

adapted mind: Evolutionary psychology and the generation of culture (pp.3-15). New

York: Oxford University Press.

Cowey, A. (1985). Aspects of cortical organization related to selective attention and

selective impairments of visual perception: A tutorial review. In M. I. Posner and

O. S. M. Marin (Eds.), Attention and Performance, XI. Hillsdale, NJ: Lawrence

Erlbaum.

Crane, T. (Ed.) (1996). Dispositions: A debate. London and New York: Routledge.

Cummins, R. (1983). The nature of psychological explanation. Cambridge, MA: MIT Press/

Bradford Books.

Dennett, D. C. (1969). Content and consciousness. London and New York: Routledge.

Dennett, D. C. (1978). Toward a cognitive theory of consciousness. In C. W. Savage (Ed.),

Minnesota studies in the philosophy of science. Minneapolis: University of Minnesota

Press.

Dennett, D. C. (1990). The interpretation of texts, people, and other artifacts. Philosophy and

Phenomenological Research, 50, 177-194.

Dennett, D. C. (1991). Consciousness explained. Boston: Little, Brown & Co.

Dennett, D. C. (1994). Cognitive science as reverse engineering: Several meanings of 'top-

down' and 'bottom-up'. In D. Prawitz, B. Skyrms, and D. Westerstahl (Eds.),

Proceedings of the 9th international congress of logic, methodology and philosophy

of science. Amsterdam: North-Holland.

Dennett, D. C. (1995). Darwin's dangerous idea: Evolution and the meanings of life. New

York: Simon & Schuster.

Ellis, A., & Young, A. W. (1988). Human cognitive neuropsychology. Hove, Sussex:

Lawrence Erlbaum.

Fodor, J. A. (1974). Special sciences (or: The disunity of science as a working hypothesis).

Synthese, 28, 97-115.

Fodor, J. A. (1989). Making mind matter more. Philosophical Topics, 17, 59-80.

Hornsby, J. (1997). Simple mindedness: In defense of naive naturalism in the philosophy of

mind. Cambridge, MA and London: Harvard University Press.

Knapp, T. J. (1986). The emergence of cognitive psychology in the latter half of the

twentieth century. In T. J. Knapp and L. C. Robertson (Eds.), Approaches to cognition:

Contrasts and controversies (pp.13-35). Hillsdale, NJ: Lawrence Erlbaum.

Levine, J. (1983). Materialism and qualia: The explanatory gap. Pacific Philosophical

Quarterly, 64, 354-61.

Levine, J. (1993). On leaving out what it's like. In M. Davies and G. W. Humphreys (Eds.),

Consciousness: Psychological and philosophical essays. Oxford: Basil Blackwell.

Marr, D. (1977). Artificial intelligence - a personal view. Artificial Intelligence, 9, 37-48.

Marr, D. (1982). Vision. New York: W. H. Freeman & Co.

McDowell, J. (1994a). The content of perceptual experience. The Philosophical Quarterly,

44, 190-205.

McDowell, J. (1994b). Mind and world. Cambridge, MA and London: Harvard University

Press.

Pietroski, P., & Rey, G. (1995). When other things aren't equal: Saving ceteris paribus laws

from vacuity. British Journal for the Philosophy of Science, 46, 81-110.

Sellars, W. (1963). Philosophy and the scientific image of man. In W. Sellars, Science,

perception and reality. London: Routledge & Kegan Paul.

Van Essen, D., & Deyoe, E. (1995). Concurrent processing in the primate visual cortex. In

M. Gazzaniga (Ed.), The cognitive neurosciences. Cambridge, MA: MIT Press/

Bradford Books.

Wimsatt, W. C. (1976). Reductionism, levels of organization, and the mind-body problem.

In G. G. Globus, G. Maxwell, and I. Savodnik (Eds.), Consciousness and the brain:

A scientific and philosophical inquiry (pp.205-267). New York and London: Plenum

Press.

Zeki, S. (1992). The visual image in mind and brain. Scientific American, 267 (3), 69-76.

Zeki, S. (1993). A vision of the brain. Oxford: Blackwell Scientific Publications.

FOOTNOTES

1. This is the extended text of a paper presented at the 6th Annual Conference of the European Society for

Philosophy and Psychology, Padua, Italy, 1997. A draft of this paper appeared in Connexions, an electronic

journal in cognitive science ( http://www.shef.ac.uk/~phil/connex/journal/atkinson.html ). Earlier

versions of this paper were presented at the Joint Session of the Aristotelian Society and the Mind Association,

Dublin, 1996; and the Wolfson Philosophy Society, Oxford, 1996. My thanks to all those who commented on

these presentations. I am indebted to Matthew Elton, Christoph Hoerl, Neil Manson, Paul Pietroski and Tony

Stone for their advice and for many helpful conversations related to the material presented here, and to the

wider project from which it is drawn, i.e., my D.Phil. thesis in the University of Oxford. I would like to extend

a special thank you to my supervisor, Martin Davies, for countless hours of tuition, incisive advice and

stimulating philosophical discussion.

2. A difference in our views that I shall not be explaining here is this: Both Cummins and I agree that it can be

very illuminating to decompose a disposition of a system into simpler dispositions, and in so doing to hold off

questions about the material substrate of that system. However, whereas Cummins eschews all reference to

teleology in his conception of 'function', I accept a 'teleo-evolutionary' conception, and argue that cognitive

psychological theories should be grounded upon this notion of 'function' rather than Cummins' more austere

notion (see Atkinson, 1997).

3. A potentially fatal dilemma faces this standard view of ceteris paribus ('cp') laws: either such laws are merely

vacuous, or, if all the 'other things being equal' clauses are filled in, then the laws become extremely complex

and unwieldy, with little or no application to conditions in the real world. Pietroski and Rey (1995) attempt to

surmount this dilemma by way of an advocacy of a somewhat different view of cp-laws. On the more usual

reading, when a cp-law is found not to apply, one has to drop to a more basic-level science to explain why.

On Pietroski and Rey's view, in contrast, one does not need to drop to a lower level of description and

explanation for an explanation of why a cp-law did not apply on a certain occasion. Exceptions to such laws

"are to be explained as the result of interference from independent systems" (p.87), that is, roughly, as the result

of interactions of one or more of the systems specified or implied in the cp-law with one or more systems not

covered by the law (where both sets of systems are specified at the same level). Pietroski and Rey (1995, p.91):

"On our view, ... a chemist holding that cp(PV = nRT), is committed to the following: if a gas sample G is such

that PV ≠ nRT, there are independent factors (e.g., electrical attraction) that explain why PV ≠ nRT with respect

to G."

4. This way of putting the point is merely for ease of expression. Of course there might well be further neural

circuits intermediate between a 'small scale' neural circuit and the whole brain; it all really depends on how one

carves up the brain's - or for that matter, any given system's - subsystems.

5. It will become apparent that there is much overlap between the tri-level view of cognitive psychological

explanation as I shall present it (viz., capacity analysis, functional analysis and structural analysis) and Marr's

(1977, 1982) scheme of three levels of analysis (viz., the "computational" or ecological, "algorithmic" and

implementational levels). There is one important difference between Marr's scheme and mine, however, which

is that I emphasize the shift from the system level to the subsystem level whereas Marr does not.

6. Notice that this is a more general error than the familiar one discussed by, for example, Churchland (1986),

viz., the mistake of accepting, as a general inferential scheme, the following: a particular brain region, A, is the

'centre' for some capacity, C, since (1) A is lesioned in patient Y, and (2) Y no longer has C. In any given case,

such an inference might be more or less true (perhaps more correctly expressed as 'A underpins or subserves, or

has elements that underpin or subserve, C'). But its general application "will yield a bizarre catalogue of centers

- including, for example, a center for inhibiting religious fanaticism, since lesions in certain areas of the

temporal lobe sometimes result in a patient's acquiring a besotted religious zeal. Add to this centers for being

able to make gestures on command, for prevention of halting speech, for inhibition of cursing and swearing,

and so on ..." (Churchland, 1986, p.164).

© Anthony Atkinson
