D5.1orig-2 Application Subsystem

Warning: this is a copy of the D5.1 document as originally submitted to the EC.


The Application subsystem is the core of the eChase architecture.

It represents the layer in which all the eChase services take place. This subsystem is logically placed between the Data Management and Access Control layers, and it consists of a set of modules, each providing one main service to external customers.

The Application layer interacts with the Content Provider subsystem via the Metadata Import Service. Through this channel, Content Providers can upload and update the metadata describing the content they want to publish through the eChase platform.

The Application subsystem interacts with the Data Management Control subsystem and with the Presentation subsystem.

It requests from the former the data it needs to arrange and aggregate, and, once the data has been processed, it sends the information the user requested to the latter.

The modules are the following:

- Search engine

- Content Based Analysis

- Thesaurus engine

- Multilingual engine

- Aggregation & Annotation Tools

- Ontology and Mapping

- Metadata Import Service

- Media Importer

- User Management

- Log engine

- Accounting engine

Contents

- 1 Search Engine and Applications

- 2 Aggregation and Annotation Tools

- 3 eLearning Tool

- 4 Metadata Importer and Repository

- 4.1 Overview

- 4.2 Format Importer

- 4.3 Schema Description

- 4.4 Translation Templates

- 4.5 Legacy database builder

- 4.6 Cleaner

- 4.7 Indexing Module

- 4.8 Mapper

- 4.9 Multilingual Translation

- 4.10 Natural Language

- 4.11 Thesauri and Dictionary Knowledge Bases

- 4.12 Metadata Repository

- 4.13 Multilingual Support

- 4.14 Thesaurus Engine

- 5 Media Engine

- 6 User Management

- 7 Accounting and Log engine

Search Engine and Applications

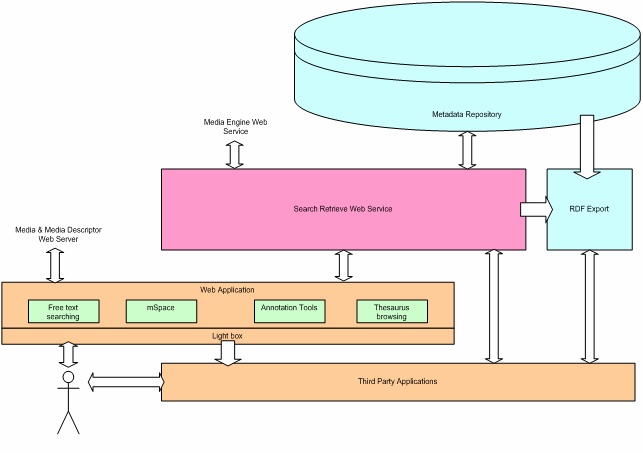

Overview

This section describes the software components that allow a user to search, navigate, visualize and export the data stored in the databases. Together, these components provide powerful search and navigation capabilities for users who create content packages. The key components are:

- Search and Retrieve Web Service

- Semantic Web Search Engine

- Web application providing users with a graphical user interface to the search engines

- Light box for saving, organizing and exporting content from the unified database and media repository

- Third party applications

Section gives a brief description of the main aspects that have to be considered while designing and developing the visualization and navigation tools of the eCHASE system.

describes an existing search engine system. Certain aspects of this system should be taken into consideration when designing the eCHASE architecture.

Search Engine

The purpose of the search engine module is to assist authors and experts in developing, managing, visualizing, navigating, searching and exploiting valuable digital resources in the eCHASE repository. The search engine will provide a mechanism for searching and retrieving large multimedia collections that can be accessed remotely by third-party applications.

The search engine will support several different methods of searching that include:

- Searching of multimedia content (for example, finding 2D images with a similar colour or pattern to a query image)

- Searching of textual metadata (for example, finding all media that depicts a particular person)

- Searching by concept (for example, querying by who: people; what: art objects and representations; when: events and activities; where: places; how: methods and techniques)

The search engine will support a search and retrieval protocol to allow external systems and tools to query the unified database using the different search modalities outlined above. The objective is to provide interoperability between the unified database and external applications.

The protocol will be based on the SRW (Search and Retrieve Web Service) specification developed by the Z39.50 community, and will provide a way for external systems to query the contents of an eCHASE system by textual metadata and by multimedia content.

Search Retrieve Web Service (SRW)

The SRW executes the database searches for metadata queries, content queries, and combined metadata and content queries. It is the main web service that external systems interact with. It supports the SRW 1.1 specification and provides three operations: a search operation to handle Common Query Language (CQL) queries, an explain operation that tells external systems which schemas are supported, and a getResults operation to access the results of a query.
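The sketch below shows the kind of CQL query and search request an external system might send. SRW itself is a SOAP binding. To keep the example short, it builds the equivalent SRU 1.1 URL instead (SRU is the URL-based sibling of SRW in the same specification family). The endpoint URL and the index names (`dc.creator`, `dc.subject`) are hypothetical, and eCHASE's extended CQL is not modelled.

```python
from urllib.parse import urlencode

def cql_term(index, relation, term):
    """Build one CQL search clause, quoting the term and escaping embedded quotes."""
    escaped = term.replace("\\", "\\\\").replace('"', '\\"')
    return f'{index} {relation} "{escaped}"'

def cql_and(*clauses):
    """Join clauses with CQL's boolean AND, bracketing each to keep precedence explicit."""
    return " and ".join(f"({c})" for c in clauses)

def sru_search_url(base_url, query, start=1, maximum=10, schema=None):
    """Build an SRU 1.1 searchRetrieve URL (the URL form of an SRW search request)."""
    params = {
        "operation": "searchRetrieve",
        "version": "1.1",
        "query": query,
        "startRecord": start,
        "maximumRecords": maximum,
    }
    if schema:
        params["recordSchema"] = schema
    return f"{base_url}?{urlencode(params)}"

# Hypothetical endpoint and index names, for illustration only.
query = cql_and(cql_term("dc.creator", "=", "Leonardo"),
                cql_term("dc.subject", "any", "portrait"))
url = sru_search_url("http://example.org/echase/sru", query, maximum=20)
```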

The SRW component of the system will be a stateless Web Service. It will be designed so that it is not tightly coupled to the web application and therefore has strong potential for reuse by third parties.

The search engine Web Service will adopt a layered approach to interoperability based on a series of Web and cultural heritage domain standards. The ’nuts and bolts’ of interoperability are provided by:

- XML for the syntactic structure of data.

- Web Service standards for the access mechanism that physically allows exchange of XML data over the Internet

- SRW (http://www.loc.gov/z3950/agency/zing/srw/) to provide a search and retrieval protocol that allows one party to request information from another party.

- CQL (http://www.loc.gov/z3950/agency/zing/cql/) as a query language in which to express the information that is desired

These ’nuts and bolts’ allow the syntactic exchange of information in a structured way, but do not say anything about the meaning of the information, i.e. the semantics.

This is adequate as long as the data is used only by people who can read additional user manuals and descriptions of the semantics of the specific service and data they are using.

Applications that process the data automatically, however, need the semantics to be made explicit in terms of:

- Semantics of fields (the meaning of each field or attribute)

- Semantics of content (what controlled vocabularies and languages are used)

- Supported search capabilities (which parts of the collection can be queried, and in what way)

The search engine ’stack’ is shown below:

TBD

Search and retrieval process

The search and retrieval via the SRW will involve a four-stage process.

- A remote application can discover information about the schemas and thesauri that can be used when querying the unified collection. It does this through the SRW explain operation, which returns information on the supported schemas.

- The remote application then creates a machine-readable query in a language that supports textual, content-based and conceptual queries. The expressivity of the query language needs to match that of the data model used to structure the data being searched. In our case, we use an extended version of CQL.

- The results of a query are provided to the remote application as a machine readable result set structure. The result set is stored temporarily on the server for subsequent retrieval by the client (all in one go, or through successive retrieval of subsets). The result set can be referenced by future queries, so further searches can be done within the result set, or the result set can be used to constrain another query.

- The results are physically transported to the application. The transport mechanism allows the transport of digital object representations, and multiple data types for both 2D images and video must be supported (e.g. JPEG, TIFF, QuickTime and WMF). Whether this data is passed by reference (for example, as a URI) or contained in the body of the message is an implementation decision.
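The third stage (result sets kept on the server, paged through by ID and refined by later queries) can be sketched as a small in-memory store. This is a minimal sketch only. The class and method names are hypothetical, and a production service would also expire stored sets after a time-to-live rather than keep them forever.

```python
import itertools
from dataclasses import dataclass, field

@dataclass
class ResultSetStore:
    """Keeps query results on the server so clients can page through them by ID."""
    _sets: dict = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1))

    def store(self, record_ids):
        """Save a result set and return the ID the client uses to reference it."""
        set_id = f"rs{next(self._ids)}"
        self._sets[set_id] = list(record_ids)
        return set_id

    def get_results(self, set_id, start, page_size):
        """Return one page of a stored set; `start` is 1-based, like SRW's startRecord."""
        records = self._sets[set_id]
        return records[start - 1:start - 1 + page_size]

    def refine(self, set_id, predicate):
        """Search within an existing result set and store the matching subset."""
        return self.store(r for r in self._sets[set_id] if predicate(r))

store = ResultSetStore()
rs = store.store(range(1, 26))                      # 25 hits from an initial query
page2 = store.get_results(rs, 11, 10)               # records 11..20
evens = store.refine(rs, lambda r: r % 2 == 0)      # a further search within the set
```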

Content-based Searching with the SRW

The SRW will accept content search requests, but all business logic related to content-based searching will be encapsulated in a web service exposed by the Media Engine. The SRW will call this service to execute content query requests and then aggregate the results for delivery to the client.
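How the SRW aggregates results is not specified here. The following is one possible fusion rule for a combined metadata-and-content query. It assumes both the metadata search and the Media Engine return similarity scores in [0, 1], keeps only records matched by both (a logical AND), and ranks them by a weighted sum. The weight and the function name are hypothetical.

```python
def merge_results(metadata_hits, content_hits, content_weight=0.5):
    """Combine metadata and content-based scores for a combined query.

    Both inputs map record IDs to scores in [0, 1]. Only records matched by both
    searches are kept, ranked by a weighted sum (ties broken by record ID).
    """
    common = metadata_hits.keys() & content_hits.keys()
    scored = {
        rid: (1 - content_weight) * metadata_hits[rid] + content_weight * content_hits[rid]
        for rid in common
    }
    return sorted(scored, key=lambda rid: (-scored[rid], rid))

# Example: scores from the metadata search and from the Media Engine service.
ranked = merge_results({"a": 1.0, "b": 0.6, "c": 0.9}, {"b": 0.9, "c": 0.2, "d": 1.0})
```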

SRW Specification

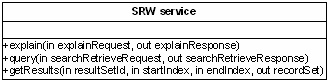

The Sculpteur search and retrieval web service implements part of the Z39.50 SRW and CQL specifications.

The basic service interface is shown in the figure above and is modelled on the SRW v1.1 specification. For further information on the SRW service interface, please refer to SCULPTEUR deliverable D7.6, Interoperability Protocol.

Each operation has a distinct role:

- The explain operation returns details of what the service can do.
- The Search/Retrieve operation executes a query and returns a result set together with an ID for it.
- The getResults operation retrieves results from an existing result set when passed its ID, a start position and a page size.

Framework

The SRW service will be hosted on Apache Axis, a Java platform for creating and deploying web service applications. Apache Axis is an implementation of SOAP (Simple Object Access Protocol), a lightweight protocol for exchanging information in a decentralized, distributed environment. SOAP is an XML-based protocol that consists of three parts:

- an envelope that defines a framework for describing what is in a message and how to process it
- a set of encoding rules for expressing instances of application-defined data types
- a convention for representing remote procedure calls and responses
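In practice Axis generates the SOAP messages from the service's WSDL. The sketch below only illustrates the envelope structure described above (Envelope, then Body, then the request element) for a search request. The SOAP 1.1 envelope namespace is standard. The SRW namespace URI and element names reflect our understanding of SRW 1.1 and are assumptions to verify against the official WSDL.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
SRW_NS = "http://www.loc.gov/zing/srw/"  # assumed SRW namespace; verify against the SRW 1.1 WSDL

def search_retrieve_envelope(query, start=1, maximum=10):
    """Wrap a searchRetrieve request in a SOAP 1.1 envelope (Envelope > Body > request)."""
    ET.register_namespace("SOAP-ENV", SOAP_ENV)
    ET.register_namespace("srw", SRW_NS)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    request = ET.SubElement(body, f"{{{SRW_NS}}}searchRetrieveRequest")
    # One child element per request parameter, all carried as text.
    for name, value in (("version", "1.1"), ("query", query),
                        ("startRecord", str(start)), ("maximumRecords", str(maximum))):
        ET.SubElement(request, f"{{{SRW_NS}}}{name}").text = value
    return ET.tostring(envelope, encoding="unicode")

message = search_retrieve_envelope('dc.title = "vase"', maximum=5)
```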

RDF Export: Semantic Repository and Inference Engine

Semantic web technologies can be used to perform complex querying and reasoning of the content held in the eCHASE metadata repositories. For instance, the thesauri querying functionality described in the Section can be performed using semantic web query languages accessing triple store repositories. Reasoning over the semantic relationships in thesauri permits imprecise matching between query and index terms, allowing the ranking of matching items in a result list or providing methods to find similar but not necessarily identically indexed items.

There are also reasoning techniques that can be applied to the metadata to expose patterns and relationships that are either hidden or have been unfeasible to explore due to the scale of the datasets. There is also the possibility of exposing the metadata to semantic web services and agents, which can be used to process and reason over the data in novel ways. Another benefit of supplying the data in a semantic web repository is that novel semantic web visualisation techniques could be provided on the eCHASE application layer.

This would require the eCHASE datasets to be made available through semantic web repositories. To achieve this, an export process is needed that converts the data into a semantic web format such as RDF. The RDF can then be served by semantic web triple stores such as 3Store or Sesame. The consolidation into the unified database performed by the eCHASE system will make the RDF conversion straightforward, because it solves the complex problem of linking overlapping information together. As the CIDOC CRM informs the design of the unified database schema, there should be a very clear mapping that yields good-quality RDF output.

The RDF being exported will be structured using the CRM ontology. There are two ways to perform the mapping from the unified database to RDF:

- Using RDF mapping tools on the unified database

- Using the SRW mechanism to obtain CRM-structured XML and applying XSLT transforms to obtain RDF
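A minimal sketch of the first option (mapping directly from the unified database) shows one object row being written as CRM-structured N-Triples. The class and property identifiers (E22, E12, E21, P108i, P14) are CIDOC CRM names. Several parts are assumptions:

- the namespace URI
- the URI base
- the record fields (`id`, `title`, `creator_id`)

A real mapping would be driven by the unified database schema.

```python
CRM = "http://www.cidoc-crm.org/cidoc-crm/"  # assumed CRM namespace URI
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
BASE = "http://example.org/echase/"  # hypothetical URI base for unified-database records

def literal(text):
    """N-Triples string literal with backslash, quote and newline escaping."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'

def object_to_ntriples(row):
    """Map one unified-database object row (a dict) to CRM-structured N-Triples lines."""
    obj_uri = f"<{BASE}object/{row['id']}>"
    prod_uri = f"<{BASE}production/{row['id']}>"
    person_uri = f"<{BASE}person/{row['creator_id']}>"
    triples = [
        (obj_uri, f"<{RDF_TYPE}>", f"<{CRM}E22_Man-Made_Object>"),
        (obj_uri, f"<{RDFS_LABEL}>", literal(row["title"])),
        # The object was produced by a production event carried out by a person.
        (obj_uri, f"<{CRM}P108i_was_produced_by>", prod_uri),
        (prod_uri, f"<{RDF_TYPE}>", f"<{CRM}E12_Production>"),
        (prod_uri, f"<{CRM}P14_carried_out_by>", person_uri),
        (person_uri, f"<{RDF_TYPE}>", f"<{CRM}E21_Person>"),
    ]
    return [f"{s} {p} {o} ." for s, p, o in triples]

lines = object_to_ntriples({"id": 7, "title": 'Vase "A"', "creator_id": 3})
```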

As semantic web repository technologies are still in development, there may be performance issues to resolve given the scale of the eCHASE datasets. The eCHASE architecture should therefore not rely on semantic web storage for basic thesauri functionality, in case that functionality cannot be delivered this way. The functionality of the semantic web interface will thus depend on the performance that can be achieved and optimised.

Aggregation and Annotation Tools

For eChase, a "content product" can be seen as "…an aggregation of one or more narrative threads that link multiple items of content together into some overall context."

General description of the component functionalities

At this stage, only a very general process for creating such a product can be described.

The production can be described in terms of:

- process

- activities

- people involved

The type of products that eChase will manage in the long term scenario is still a point of discussion. For this short introduction, the content product will be a static thematic web site.

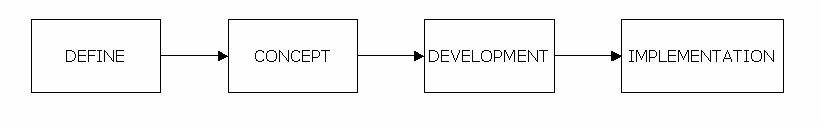

Process

Different processes can be adopted for the creation of a static web site. The process used can be described using the following diagram:

Define

The starting point of the product definition is an editorial project. All the editorial aspects are taken into account in order to prepare a "proof of concept".

Some of the main questions this step must answer are:

- why do we want to make such product

- what is the target

- what is the business scenario (if there is one)

- what kind of content do we aggregate within the product

- do we already have this content, or does it have to be produced

- what results do we expect to reach and … do we have enough money to do it

The realization of the proof of concept is essential. It gives important indications for the subsequent production activities, the most obvious being "we can/cannot do it".

At the end of the define phase, all the requirements must have been gathered, discussed and well understood. This is needed to decide whether the project will be carried out internally, outsourced to another partner or provider, or whether it has no future!

Activities

The main activities, tasks or documents to prepare in this step are:

- editorial project

- feasibility of the project or proof of concept

Concept

The main purpose of the concept phase is to define what will be done, how and when. It is the focal point of the project and the phase in which interactions between editorial staff and the production team are most relevant.

At the end of this step, editorial staff will know exactly what kind of product will be created, what look and feel and functionality it will have, and when it will be ready. At the same time, the production team will know what to do, who will be involved in the project, what material will have to be produced or gathered, and what software will be developed or integrated.

Activities

The main activities, tasks or documents to prepare in this step are:

- web site navigation tree/site map

- user interface layout

- graphical proposal

- technical specification

- production plan

Development

In the development phase, all the technical aspects of building the product are analyzed. During this step, the activities can vary greatly, depending on the technologies that will be used and the type of content and functionality the product will have.

For example, a large web site could require the content to be organized in databases, the creation of dynamic pages, or the development of a custom process for the automatic generation of HTML pages. By contrast, a small static web site might require just a good HTML developer and an authoring tool like Dreamweaver.

The process can be described by the following diagram.

Activities

The main activities, tasks or documents to prepare in this step are:

- content preparation (both technical and editorial activities)

- database creation

- additional content creation

- multimedia object preparation

- template preparation

- software development

Implementation

The activities of the last phase, implementation, depend on the type of product being made and the technologies and processes that have been chosen.

In this step all the content is ready, the templates have been designed, and the software needed for the final integration has been developed. The final web site is built in this step.

So, if the product is simple and has been produced by hand, the HTML developers will take the templates and use them to generate the web pages according to the content and the navigation tree.

If a large web site is being developed using, for instance, the process described in the previous section, the activities will be:

- data extraction and conversion into XML documents

- development of XSL templates

- generation of HTML pages using XSLT transformation

- final debug and integration activities
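The generation steps listed above (data extraction to XML, templates, HTML page generation) can be sketched with the Python standard library. The standard library has no XSLT processor, so a `string.Template` page template stands in for the XSL templates here. With a third-party package such as lxml, the same step would use `lxml.etree.XSLT`. The element names and record fields are hypothetical.

```python
import xml.etree.ElementTree as ET
from html import escape
from string import Template

# Stand-in for an XSL template: one HTML page per <item>.
PAGE = Template("<html><head><title>$title</title></head>"
                "<body><h1>$title</h1><p>$description</p></body></html>")

def records_to_xml(records):
    """Extract records (dicts) into an XML document."""
    root = ET.Element("items")
    for rec in records:
        item = ET.SubElement(root, "item", id=str(rec["id"]))
        for key in ("title", "description"):
            ET.SubElement(item, key).text = rec[key]
    return ET.tostring(root, encoding="unicode")

def xml_to_pages(xml_text):
    """Apply the page template to each <item>, returning {filename: html}."""
    pages = {}
    for item in ET.fromstring(xml_text).iter("item"):
        pages[f"item-{item.get('id')}.html"] = PAGE.substitute(
            title=escape(item.findtext("title", "")),
            description=escape(item.findtext("description", "")),
        )
    return pages

pages = xml_to_pages(records_to_xml(
    [{"id": 1, "title": "Amphora", "description": "Greek & Roman"}]))
```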

People

Several professionals are involved during the production lifecycle of a content product.

For the creation of the editorial project, a team of editorial "experts" will be assembled. This could include:

- experts that understand the subject area of the content product

- analysts that understand the underlying business model or the type of audience the product is made for

- a project manager with editorial competences

Once the editorial project has been written, the proof of concept must be prepared. To do this, the "editorial" project manager must work together with the project manager of the technical team. From the very beginning of the project, the technical project manager must be able to find the right solutions to the editorial needs, keeping in mind the underlying technologies the project will use and the skills of the technical team.

For the technical team, the professionals involved, depending on the type of product and the chosen technologies, could be:

- project manager

- knowledge engineer

- database designer

- database administrator

- software developer with different skills, depending on the type of product, on the web site generation process, and on the need to build custom tools for managing/converting the content, for example:

  - web client scripting languages (JavaScript, Flash)

  - XSL template developer

  - VB, PL/SQL developer

  - …

- HTML developer

- designer/art director

- web producer

Obviously, the number of professionals involved in the project will depend on the size of the project itself. We could say that the minimum team should be composed of:

- editorial project manager with strong expertise in the subject area

- technical project manager with thorough knowledge of the production process and of available technologies, and with experience in content product making

- web designer

- web producer

Use cases

In the long term scenario, authoring tools and functionalities will be developed in order to give eChase users the ability to build their own "content product".

At the moment, it is still a point of discussion who will create content products, and whether this could be part of a long-term business. For this reason, the use cases that can be described at this level can only be very general ones. They could lead both to the development of online/offline functionalities and to services that the eChase consortium will offer to eChase users.

The general use cases about content aggregation, packaging and content annotation are briefly described in the following sections.

All the other technical aspects we need for the development of the right pieces of software will be explained in future releases of the document.

The actors involved in the described use cases are:

- User: the eChase User

UC 1: Content aggregation

| Name | Content Aggregation |
| --- | --- |
| Actors | User |
| Summary description | Lets the user aggregate content for his own purposes: * Organize content for later re-use * Aggregate content for "content product" preparation * Make a "shopping cart" for later business transactions on the selected content |
| Pre-conditions | * The user has reached a content item (from a previous browse or search action) * The user has the rights to organize his own content (see User profiles use case, not included in the present contribution) |
| Main success scenario | The user can: * Put the selected content into personal collections * Manage collections (add/move/remove content, add/move/remove collections) * Write personal comments on the selected content for personal purposes |
| Extensions | |
| Errors | |
| Post-conditions | |
| Related use cases | |
| Open points | * The content selected by the user can be organized in collections. How these collections will be implemented is an open point. Collections could be: folders with a hierarchical structure; a shopping cart; pre-defined templates for "content product" creation; … * Each type of content aggregation leads to different functionalities that will have to be integrated or developed: a shopping cart implies business transactions; a collection for content product production implies authoring tools. All these functionalities are open points. |

UC 2: Packaging

| Name | Content Packaging |
| --- | --- |
| Actors | User |
| Summary description | Lets the user obtain a packaged version of his selected content |
| Pre-conditions | * The user has selected the type of content product he wants to build * The user has aggregated his own content * The user has built his content product (using eChase tools or not) * The user, according to his user profile and to the selected content rights, has performed all the business transactions needed to obtain the content in the selected format for the selected purpose |
| Main success scenario | According to the content product type and to the user preferences (see User profile use case, not included in the present contribution), the user can: * Choose the format he wants his content in * Choose how he wants to receive the product content * Download the aggregated content |
| Extensions | |
| Errors | |
| Post-conditions | |
| Related use cases | |
| Open points | The format of the packaged content, the type of content product and the tools that eChase will provide to users are open points. |

UC 3: Annotation

| Name | Content Annotation |
| --- | --- |
| Actors | User |
| Summary description | Lets the user write comments on, or add further information to, the content he selected for content product production |
| Pre-conditions | * The user has aggregated content for his own purposes |
| Main success scenario | The user can: * Add/remove annotations on the selected content (when a comment is added, the content owner should be alerted in order to review and manage the proposed comment). Annotations could include: comments about the content; suggestions about the content metadata; links to products that have been made using the selected content; links to other resources on the web that are related to the content; links to other content inside eChase that is related to the content |
| Extensions | |
| Errors | |
| Post-conditions | |
| Related use cases | |
| Open points | * One of the added values that eChase wants to offer content owners is end users adding data, information and comments to the content itself. How to encourage users to add this data, and the right tools for doing so, are open points. * Several other aspects of content annotation have to be investigated, for example: tools for content annotation; whether annotations should be moderated in some way by the content owners or follow some workflow mechanism; whether annotations should be stored inside eChase or sent directly to content owners |

eLearning Tool

Content to be provided

Metadata Importer and Repository

Overview

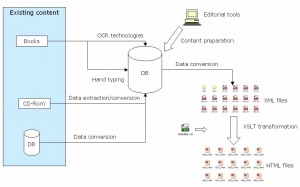

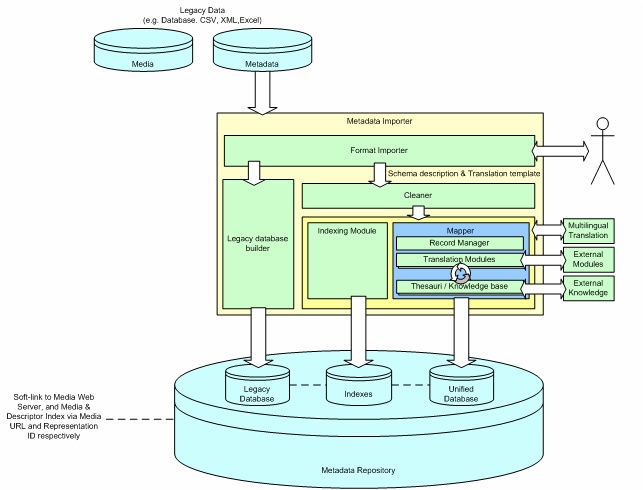

The metadata importer aggregates a large number of datasets from content providers into a well structured unified database. It includes all tools that are involved in converting legacy metadata provided by content holders into a Metadata Repository. These tools encapsulate the following functionality:

- Converting between data formats.

- Storing original legacy data in a database

- Cleaning up dirty data

- Indexing unstructured fields

- Mapping data to thesauri and common structures/dictionaries

- Mapping data to a common schema/ontology

- Building the Unified Database

Format Importer

There is a need to transform a range of formats into a common format. This transformation inherently requires specific code to process each legacy dataset and, as a result, does not fit an idealized generic system architecture. However, by separating this transformation from the other modular components, the majority of the eCHASE system can be designed in a more generic manner, following good software engineering practice. Example formats that content providers may supply are XML, Excel spreadsheets, CSV and MySQL database dumps.

The Format Importer transforms exports from legacy systems into a common structure. This structure is used for three purposes:

- to load the legacy data into a legacy database
- to create indexes over the unstructured data
- to provide a common input that the Mapper can process

This simplifies these components and isolates them from the diverse legacy formats that will be imposed on the system.
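The sketch below shows a format importer reducing two of the example formats (CSV and XML) to one common structure. The structure is assumed to be a list of `(record_id, {field: value})` pairs. That choice and the function names are hypothetical. Excel files and MySQL dumps would each need a further reader producing the same structure.

```python
import csv
import io
import xml.etree.ElementTree as ET

def from_csv(text, id_field):
    """Read a CSV export into the common structure: [(record_id, {field: value}), ...]."""
    rows = csv.DictReader(io.StringIO(text))
    return [(row[id_field], dict(row)) for row in rows]

def from_xml(text, record_tag, id_attr):
    """Read an XML export in which each record is an element with one child per field."""
    records = []
    for el in ET.fromstring(text).iter(record_tag):
        fields = {child.tag: (child.text or "").strip() for child in el}
        records.append((el.get(id_attr), fields))
    return records

csv_records = from_csv("id,title,date\n1,Amphora,c. 500 BC\n2,Fresco,1450\n", "id")
xml_records = from_xml(
    '<export><obj ref="A7"><title>Vase</title><maker> Unknown </maker></obj></export>',
    "obj", "ref")
```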

In the future, the process of obtaining data from content holders, with documentation and in appropriate formats, could be standardized. This could either eliminate the need for this layer or simplify the import process. Other approaches to gathering data may also be considered at this level of the architecture to expand the capabilities of the eCHASE system. For example, OAI (Open Archives Initiative) harvesting is a mechanism by which metadata can be pulled from a content holder automatically, as an alternative to the content provider compiling exports of data manually.
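OAI-PMH harvesting works in pages. A `ListRecords` request returns a batch of records plus a `resumptionToken`, which the harvester sends back to get the next batch. An empty token marks the end of the list. The sketch below builds the request URLs and parses one response page, without the network call (`urllib.request.urlopen` would fetch each URL). The repository base URL is hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

OAI = "{http://www.openarchives.org/OAI/2.0/}"

def list_records_url(base_url, resumption_token=None, metadata_prefix="oai_dc"):
    """The first request carries metadataPrefix; follow-ups carry only the resumptionToken."""
    if resumption_token:
        params = {"verb": "ListRecords", "resumptionToken": resumption_token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    return f"{base_url}?{urlencode(params)}"

def parse_list_records(xml_text):
    """Return (record identifiers, next resumption token or None) for one response page."""
    root = ET.fromstring(xml_text)
    ids = [h.findtext(f"{OAI}identifier") for h in root.iter(f"{OAI}header")]
    token_el = root.find(f".//{OAI}resumptionToken")
    # A missing or empty resumptionToken means the list is complete.
    token = token_el.text if token_el is not None and token_el.text else None
    return ids, token

page = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/"><ListRecords>
<record><header><identifier>oai:museum:1</identifier></header></record>
<record><header><identifier>oai:museum:2</identifier></header></record>
<resumptionToken>abc123</resumptionToken></ListRecords></OAI-PMH>"""
ids, token = parse_list_records(page)
```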

Schema Description

The common structure output by the Format Importer is described by the Schema Description. The Schema Description explains the legacy data so that the Mapper and Indexer can iterate across records and extract the appropriate fields for processing. For example, because the description specifies how tables are interlinked, the Mapper can automatically join the relevant tables when mapping a record. The user then does not need to write low-level linking constructs targeted at the underlying storage system (e.g. SQL join statements). Information about data types and unique identifiers is included in the description to facilitate any processing required.

The schema description describes the schema of the legacy systems that are imported, and includes information about the structure of the data, the data types of fields and how data is interlinked.
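As an illustration of how declared links let the Mapper join tables without hand-written SQL, here is a sketch in which the schema description is a plain dictionary. The structure and all table and field names are hypothetical. The recursion assumes the declared links contain no cycles.

```python
# Hypothetical schema description for one content provider's legacy export:
# each table declares its unique key and which fields link to other tables.
SCHEMA = {
    "objects": {"key": "obj_id", "links": {"maker_id": ("makers", "maker_id")}},
    "makers": {"key": "maker_id", "links": {}},
}

def resolve(schema, tables, table, record):
    """Follow the links declared in the schema description, replacing each linking
    field with the linked record, so the Mapper never writes joins itself."""
    resolved = dict(record)
    for link_field, (target_table, target_key) in schema[table]["links"].items():
        value = record.get(link_field)
        match = next((r for r in tables[target_table] if r[target_key] == value), None)
        if match is not None:
            resolved[link_field] = resolve(schema, tables, target_table, match)
    return resolved

tables = {
    "objects": [{"obj_id": 1, "title": "Vase", "maker_id": 10}],
    "makers": [{"maker_id": 10, "name": "Exekias"}],
}
joined = resolve(SCHEMA, tables, "objects", tables["objects"][0])
```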

Translation Templates

The output of the Format Importer cannot be processed without an accompanying description stating what the semantics of each field are and how they should be translated into the common unified database.

Translation templates provide a mechanism to instruct the Mapper and the Indexer how to deal with records coming in from the Format Importer. Translation templates define:

- Input field(s) from the legacy schema

- Target output field(s) in the unified database schema

- Any context information required for translation

- Target translation module(s) to be used

Translation templates allow workflows through various translation modules to be constructed, so that simple, reusable components can be brought together.

Translation templates could be specified in XML, with a desired feature set similar to that of script-like systems such as Apache Ant or Automator in Mac OS X. We are also investigating workflow orchestration systems, such as Taverna, to act as the framework that manages the translation modules.
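To make the four parts of a template concrete, here is a sketch of what an XML translation template might look like, together with a parser for it. The template covers input fields, target field, context and module chain. The XML vocabulary (`template`, `rule`, `input`, `context`, `modules`) is entirely hypothetical, since the format has not been defined.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Hypothetical template format: one <rule> per target field in the unified schema.
TEMPLATE_XML = """
<template source="museum_a">
  <rule target="production.date" modules="trim,parse_date">
    <input field="date_made"/>
    <context name="calendar" value="gregorian"/>
  </rule>
  <rule target="actor.name" modules="trim,split_name">
    <input field="artist"/>
  </rule>
</template>
"""

@dataclass
class Rule:
    inputs: list   # input field(s) from the legacy schema
    target: str    # target field in the unified database schema
    context: dict  # context information needed by the modules
    modules: list  # translation modules, applied in order

def parse_template(xml_text):
    """Parse a translation template into a list of Rule objects."""
    rules = []
    for r in ET.fromstring(xml_text.strip()).iter("rule"):
        rules.append(Rule(
            inputs=[i.get("field") for i in r.iter("input")],
            target=r.get("target"),
            context={c.get("name"): c.get("value") for c in r.iter("context")},
            modules=r.get("modules").split(","),
        ))
    return rules

rules = parse_template(TEMPLATE_XML)
```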

Legacy database builder

The Legacy database builder will transform the common structure provided by the Format Importer into a MySQL database that stores the original data before any mapping or cleaning has been applied. This is required so that it is always possible to return to the original record. The web application described in Section will provide the functionality to display the original record if required. A Legacy database will be created for each content provider and acts as a sub-component of the overall Metadata Repository.
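The sketch below shows the "always return to the original record" requirement. It uses Python's built-in `sqlite3` as a stand-in for MySQL, with one connection per content provider to mirror one Legacy database per provider. It assumes records arrive from the Format Importer as `(record_id, {field: value})` pairs. It also stores each record as JSON, a simplification: a real builder would recreate the provider's tables using the Schema Description. The table and function names are hypothetical.

```python
import json
import sqlite3

def build_legacy_db(conn, records):
    """Store each original record verbatim (as JSON), before any cleaning or mapping."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS legacy_record ("
        "record_id TEXT PRIMARY KEY, original TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO legacy_record (record_id, original) VALUES (?, ?)",
        [(rid, json.dumps(fields, ensure_ascii=False)) for rid, fields in records],
    )
    conn.commit()

def original_record(conn, record_id):
    """Return the untouched legacy record, e.g. for display in the web application."""
    row = conn.execute(
        "SELECT original FROM legacy_record WHERE record_id = ?", (record_id,)
    ).fetchone()
    return json.loads(row[0]) if row else None

conn = sqlite3.connect(":memory:")  # one database per content provider
build_legacy_db(conn, [("1", {"title": "Amphore", "date": "c. 500 av. J.-C."})])
```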

Cleaner

The Cleaner performs basic cleaning operations on legacy metadata that can be done at this level with little supporting information. Cleaning processes may include:

- Correcting spelling mistakes

- Correcting illegal values

- Breaking up fields, e.g. normalizing token-separated lists of values, or separating person names from birth dates

- Expanding shorthand notations to full text, such as converting country identifiers (e.g. UK) to actual names (e.g. United Kingdom). This may be useful for converting notations specific to a legacy schema, so that the more general translation modules can easily be applied.
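Three of the cleaning operations listed above can be sketched as small functions: splitting token-separated lists, separating names from life dates, and expanding country codes. The separators, the `Name (birth-death)` pattern and the country table are illustrative assumptions. Spelling correction and illegal-value checks would need provider-specific rules and are not shown.

```python
import re

COUNTRY_NAMES = {"UK": "United Kingdom", "IT": "Italy", "FR": "France"}  # illustrative subset

def split_values(field, separators=";|/"):
    """Normalise a token-separated list of values into a clean Python list."""
    parts = re.split(f"[{re.escape(separators)}]", field)
    return [p.strip() for p in parts if p.strip()]

def split_name_dates(field):
    """Separate 'Name (1775-1851)' into the name and its life dates, if present."""
    m = re.fullmatch(r"\s*(.*?)\s*\((\d{3,4})?\s*-\s*(\d{3,4})?\)\s*", field)
    if not m:
        return field.strip(), None, None
    return m.group(1), m.group(2), m.group(3)

def expand_country(code):
    """Expand a shorthand country code to its full name; unknown codes pass through."""
    return COUNTRY_NAMES.get(code.strip().upper(), code.strip())
```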

Indexing Module

The Indexing Module illustrated in has the task of indexing the metadata as it is imported into the Metadata Repository. This indexing allows the search engine to perform fast Google-style free text searching. Several approaches to indexing the metadata will be considered for implementation within the eCHASE demonstrator. This section outlines the possible approaches that could be implemented. There are multilingual issues to be considered when implementing the various indexing methods. These will also be discussed in this section.

Latent semantic indexing

There has been a lot of research into advanced indexing techniques, some of which could be integrated into the eCHASE architecture. Latent semantic indexing uses statistical techniques to extract and represent the similarity of meaning of words and passages by analysing large bodies of text. The technique can improve search results because documents related to the query terms are matched even when they do not contain those exact terms, overcoming issues such as synonymy and polysemy.
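The core of latent semantic indexing is a rank-k decomposition of the term-document matrix A. The steps are:

- compute the right singular vectors of A, i.e. the eigenvectors of AᵀA;
- represent each document by its row of V_k;
- fold the query in as S_k⁻¹ U_kᵀ q, which equals S_k⁻² V_kᵀ Aᵀ q;
- compare query and documents by cosine similarity.

The toy sketch below uses power iteration with deflation so that it needs only the standard library. It is only adequate for tiny matrices, and it assumes k does not exceed the rank of A. A real indexer would use a sparse truncated SVD from a numerical library.

```python
import math

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _normalize(v):
    n = math.sqrt(_dot(v, v))
    return [x / n for x in v]

def _matvec(m, v):
    return [_dot(row, v) for row in m]

def top_eigenpairs(m, k, iterations=500):
    """Top-k eigenpairs of a symmetric positive semi-definite matrix,
    found by power iteration with deflation (toy sizes only)."""
    m = [row[:] for row in m]
    pairs = []
    for _ in range(k):
        v = _normalize([1.0 + i for i in range(len(m))])
        for _ in range(iterations):
            v = _normalize(_matvec(m, v))
        lam = _dot(v, _matvec(m, v))
        pairs.append((lam, v))
        # Remove this component so the next pass converges to the next eigenvector.
        m = [[m[i][j] - lam * v[i] * v[j] for j in range(len(v))] for i in range(len(v))]
    return pairs

def cosine(a, b):
    na, nb = math.sqrt(_dot(a, a)), math.sqrt(_dot(b, b))
    return _dot(a, b) / (na * nb) if na and nb else 0.0

def lsi_scores(docs, query, k=2):
    """Score each document against the query in a rank-k latent semantic space."""
    vocab = sorted({w for d in docs for w in d.split()})
    a = [[d.split().count(t) for d in docs] for t in vocab]  # terms x documents
    n = len(docs)
    # Eigenvectors of A^T A are A's right singular vectors V; eigenvalues are sigma^2.
    ata = [[sum(row[i] * row[j] for row in a) for j in range(n)] for i in range(n)]
    pairs = top_eigenpairs(ata, k)
    doc_vecs = [[v[i] for _, v in pairs] for i in range(n)]  # row i of V_k
    # Fold the query in: q_hat = S_k^-2 V_k^T A^T q.
    q = [query.split().count(t) for t in vocab]
    atq = [sum(a[t][i] * q[t] for t in range(len(vocab))) for i in range(n)]
    q_hat = [_dot(v, atq) / lam for lam, v in pairs]
    return [cosine(q_hat, d) for d in doc_vecs]

scores = lsi_scores(["car engine", "automobile engine", "flower garden"], "car")
```

Here "automobile engine" scores close to 1 for the query "car" despite not containing it, because both documents share "engine".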

Latent semantic indexing may also offer a way of performing multilingual searching. By training on a corpus of bilingual document pairs, a representation of words in both languages can be obtained and then used to perform basic multilingual searching of other documents.

Keywords and Synonyms

As free text is passed through the indexer, it is tokenised into words or phrases. These words and phrases are added to the index and associated with the identifier of the metadata record the text belongs to. Synonyms for each indexed word can also be associated with the record. This allows, for example, a person searching for the word "spade" to find records containing the term "shovel". Indexing with synonyms can greatly increase the chance of finding records when the user does not specify a term exactly as it appears in the record. However, it can also have the reverse effect, returning a larger set of results that are less specific than the user intended. One technique to resolve this is to mark the index entries that were generated from synonyms. The system can then either rank matches on the original terms higher than synonym matches, or let the user switch synonym searching on and off.
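The marking technique just described can be sketched as an inverted index whose postings record whether they came from a synonym. Exact matches then rank first, and synonym matches can be switched off. The synonym table is illustrative, and the tokeniser (lower-casing and whitespace splitting) is deliberately naive.

```python
from collections import defaultdict

SYNONYMS = {"spade": ["shovel"], "shovel": ["spade"]}  # illustrative synonym table

def build_index(records, synonyms):
    """Inverted index: term -> {record_id: is_synonym_match}."""
    index = defaultdict(dict)
    for rid, text in records.items():
        for word in text.lower().split():
            index[word][rid] = False  # term occurs in the original text
            for syn in synonyms.get(word, []):
                index[syn].setdefault(rid, True)  # flag postings generated from synonyms
    return index

def search(index, term, use_synonyms=True):
    """Exact matches rank before synonym matches; synonym matching can be disabled."""
    postings = index.get(term.lower(), {})
    hits = [(is_syn, rid) for rid, is_syn in postings.items() if use_synonyms or not is_syn]
    return [rid for is_syn, rid in sorted(hits)]

idx = build_index({"r1": "a rusty shovel", "r2": "garden spade"}, SYNONYMS)
```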

Indexing with unilingual thesauri

An enhancement to keyword and synonym indexing is to index the text according to the terms within thesauri. In this way, an index for a specific purpose can be created from unstructured free text. For example, an index of places could be generated using a thesaurus of places.

Sometimes the thesaurus used to index the text is a unilingual thesaurus in a different language from that of the text being indexed. In that case, the indexer should handle the translation. Two approaches could be employed to solve the problem:

- Translate the metadata text to the language of the thesaurus and then continue indexing

- Translate the thesaurus terms into the language of the metadata, then index the original thesaurus terms against the metadata using the translated terms.
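The second approach can be sketched as follows. Each thesaurus term is translated into the metadata language and searched for in the text, but the hit is recorded against the original thesaurus concept. The place thesaurus, its concept IDs and the English-Italian dictionary are hypothetical stand-ins for a real thesaurus and the translation module. Only single-word terms are handled.

```python
import re

PLACE_THESAURUS = {"florence": "place:001", "venice": "place:002"}  # hypothetical concept IDs
EN_TO_IT = {"florence": "firenze", "venice": "venezia"}  # hypothetical bilingual dictionary

def thesaurus_index(records, thesaurus, translate=None):
    """Build a concept index {concept_id: [record_ids]} from free text.

    If `translate` maps thesaurus terms into the metadata language, the translated
    term is searched for, but the hit is recorded against the original concept.
    """
    index = {}
    for rid, text in records.items():
        words = set(re.findall(r"\w+", text.lower()))
        for term, concept in thesaurus.items():
            probe = translate.get(term, term) if translate else term
            if probe in words:
                index.setdefault(concept, []).append(rid)
    return index

records = {"m1": "Veduta di Venezia dal Canal Grande", "m2": "Ritratto, Firenze 1505"}
places = thesaurus_index(records, PLACE_THESAURUS, EN_TO_IT)
```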

The translation module provides the services to translate between languages. See Section for further details on multilingual support and the translation service.

Indexing with multilingual thesauri

If a multilingual thesaurus exists, the text can be indexed in the same way as with a unilingual thesaurus, but without the need to translate between languages. This is described further in the Multilingual Support section.

Third party indexers

In addition to the techniques discussed above, the eCHASE system could interface with off-the-shelf software to index the records, since indexing techniques could become a project in themselves. One free package eCHASE could use is Lucene, a Java-based search engine API.

Mapper

The Mapper performs mappings based on translation templates and schema descriptions. Currently, the Mapper process consists of two types of transforms:

- Complex data cleaning

- Mapping of fields from the legacy systems to the unified database schema

The Mapper component contains the Record Manager and the Translation Modules, which are described in the following subsections. These components import the data and map it to the unified database.

The Mapper is designed as a component-based framework that will allow for configurability and extensibility as the system grows.

Record Manager

The Record Manager applies translation templates to database records by iterating through the records and delegating tasks in turn to translation modules. The manager chains these modules together into a workflow that maps the legacy instance data.

Translation Modules

Translation Modules are reusable components that can be plugged into the Record Manager to perform specific mapping tasks. They translate records from legacy fields into fields that map onto the unified database schema. Examples include modules for processing people, places and dates, and language translation modules.

The interface between the modules and the Manager could be extended to SOAP, so that currently available external services can be used. The Taverna workflow engine supports the integration and orchestration of external web services; this functionality is desirable and will be investigated.
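The Record Manager and pluggable modules described above can be pictured as a simple pipeline. This is a minimal sketch, not the eCHASE implementation. The module signature (legacy record plus the unified record built so far) is an assumption. The legacy field names `datum` and `ort` and both example modules are hypothetical.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, str]
# A translation module receives the legacy record and the unified record built
# so far, and returns the updated unified record.
TranslationModule = Callable[[Record, Record], Record]


def date_module(legacy: Record, unified: Record) -> Record:
    # Hypothetical: copy the legacy "datum" field into the unified "date" field.
    if "datum" in legacy:
        unified["date"] = legacy["datum"].strip()
    return unified


def place_module(legacy: Record, unified: Record) -> Record:
    # Hypothetical: normalise the legacy "ort" field into the unified "place" field.
    if "ort" in legacy:
        unified["place"] = legacy["ort"].strip().title()
    return unified


class RecordManager:
    """Iterates over legacy records, delegating to each plugged-in module in turn."""

    def __init__(self, workflow: List[TranslationModule]):
        self.workflow = workflow

    def run(self, records: Iterable[Record]) -> List[Record]:
        results = []
        for legacy in records:
            unified: Record = {}
            for module in self.workflow:
                unified = module(legacy, unified)
            results.append(unified)
        return results
```

New behaviour is added by writing another function with the same signature and inserting it into the workflow list; the manager itself does not change.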

Contextual information may be used to help map the values onto the unified database schema, for example mapping Manchester to Manchester, UK instead of Manchester, US.

Modules may use different knowledge bases suited to a particular application or institution. For example, if an institution (e.g. ORF) creates content internally, many of its content creators (e.g. journalists, producers) are unlikely to be indexed in a universal resource such as ULAN. In this case it may be necessary to author a specific knowledge base for that institution’s creators.

A detailed specification of the required translation modules is impossible without access to the full datasets from the content partners. However, from our experience in other projects we can foresee the need and likely requirements for some general components. Note that this is not a complete list and that a main feature of the eCHASE architecture is the creation and application of new modules so this list is likely to grow.

Person and Institution

It is necessary to look up people and institution names to deal with ambiguous spellings, ordering of first and last names and so on.

This module is likely to require a knowledge base that depends on the source, such as the institution-specific personnel example above. There may also be a need to target personalities closely involved in the subject of the eCHASE demonstrator, "Wandering Borders", such as Eastern European politicians.

There may also be a need for additional context (categories - e.g. artists versus sculptors, dates, places, etc.) to disambiguate between people.

Place

It is necessary to identify place names described in the legacy systems beyond simple syntactic matching.

There may be ambiguities in spellings, and different sources may use various names to describe the same place (e.g. Saint Petersburg – Leningrad – Petrograd). This issue may also be related to multilingual sources that need to be translated.

Another important feature is determining which place is actually being referred to. For example, "Southampton" has multiple matches: one in Hampshire, UK and one in Virginia, US.
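The Southampton case can be sketched as a context-overlap lookup. This is an assumption-laden sketch. `GAZETTEER` is a hypothetical fragment; in practice a resource such as TGN would supply candidate places and their broader context. The context terms are assumed to come from other fields of the same record. Ties are deliberately left unresolved for manual review rather than guessed.

```python
# Hypothetical gazetteer fragment; in practice a resource such as TGN would
# supply candidate places and their broader context.
GAZETTEER = {
    "southampton": [
        {"id": "southampton-hampshire-uk", "context": {"hampshire", "england", "uk"}},
        {"id": "southampton-virginia-us", "context": {"virginia", "usa", "us"}},
    ],
}


def resolve_place(name, record_context):
    """Choose the candidate sharing the most context terms with the record.
    Returns None when the name is unknown or the best candidates tie."""
    candidates = GAZETTEER.get(name.lower(), [])
    if not candidates:
        return None
    context = {term.lower() for term in record_context}
    scored = sorted(((len(c["context"] & context), c["id"]) for c in candidates),
                    reverse=True)
    if len(scored) > 1 and scored[0][0] == scored[1][0]:
        return None  # still ambiguous: leave for manual review
    return scored[0][1]
```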

Dates

Different legacy systems will have their own formats for handling dates and it is useful to translate to a consistent format for efficient querying.

There may also be a need to handle free text descriptions for dates:

- Pure free text fields, e.g. "25th November 1980" is equivalent to "November 25 '80"

- Approximation, e.g. "circa 1980", "the eighties", "1980"

- Qualifiers, e.g. "after 1980"
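The three cases above can be normalised into a common (earliest, latest) date range that supports efficient range queries. This is a minimal sketch, and every numeric rule in it is an assumption rather than part of the specification:

- "circa" widens a year by five years on each side.
- Decade words and two-digit years refer to the twentieth century.
- "after" runs to an arbitrary upper bound.

Unrecognised text returns `None` so that the legacy value can be kept for manual review.

```python
import re
from datetime import date
from typing import Optional, Tuple

MONTHS = {name: i for i, name in enumerate(
    ["january", "february", "march", "april", "may", "june", "july", "august",
     "september", "october", "november", "december"], start=1)}
# Assumption: decade words refer to the twentieth century.
DECADES = {"twenties": 1920, "thirties": 1930, "forties": 1940, "fifties": 1950,
           "sixties": 1960, "seventies": 1970, "eighties": 1980, "nineties": 1990}
CIRCA_MARGIN = 5  # assumption: "circa" widens the year by five years either side
MAX_YEAR = 2100   # assumption: upper bound used for open-ended "after" dates
YEAR = r"('\d{2}|\d{4})"
DAY = r"(\d{1,2})(?:st|nd|rd|th)?"


def _year(token: str) -> int:
    # Two-digit years such as '80 are assumed to mean 19xx.
    return int(token) if len(token) == 4 else 1900 + int(token.lstrip("'"))


def normalise_date(text: str) -> Optional[Tuple[date, date]]:
    """Map a free-text date onto an (earliest, latest) range for range queries."""
    t = text.lower().strip()
    m = re.fullmatch(DAY + r"\s+([a-z]+),?\s+" + YEAR, t)        # 25th November 1980
    if m and m.group(2) in MONTHS:
        d = date(_year(m.group(3)), MONTHS[m.group(2)], int(m.group(1)))
        return d, d
    m = re.fullmatch(r"([a-z]+)\s+" + DAY + r",?\s+" + YEAR, t)  # November 25 '80
    if m and m.group(1) in MONTHS:
        d = date(_year(m.group(3)), MONTHS[m.group(1)], int(m.group(2)))
        return d, d
    m = re.fullmatch(r"(?:circa|c\.|ca\.)\s*(\d{4})", t)         # circa 1980
    if m:
        y = int(m.group(1))
        return date(y - CIRCA_MARGIN, 1, 1), date(y + CIRCA_MARGIN, 12, 31)
    m = re.fullmatch(r"(?:the\s+)?([a-z]+)", t)                  # the eighties
    if m and m.group(1) in DECADES:
        y = DECADES[m.group(1)]
        return date(y, 1, 1), date(y + 9, 12, 31)
    m = re.fullmatch(r"after\s+(\d{4})", t)                      # after 1980
    if m:
        return date(int(m.group(1)) + 1, 1, 1), date(MAX_YEAR, 12, 31)
    if re.fullmatch(r"\d{4}", t):                                # 1980
        y = int(t)
        return date(y, 1, 1), date(y, 12, 31)
    return None  # unrecognised: keep the legacy text for manual review
```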

Dimensions

Different legacy systems may use different formats for dimensions describing museum objects. It is also useful to translate dimension units (cm, inches) into a common unit so that efficient measurement searching and comparisons can be performed. As with the Dates module, there also may be a need to handle free text in dimension fields.

We are less familiar with the issues involved in consolidating dimensions from different picture library systems, but similar problems may arise.
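Converting dimension units into a common unit, as suggested above, might look like this. The sketch assumes dimensions arrive as free text of the form "30 x 40 cm" and that centimetres are the common unit. Anything the simple pattern does not recognise is returned as `None` rather than guessed.

```python
import re

# Conversion factors to centimetres.
TO_CM = {"mm": 0.1, "cm": 1.0, "m": 100.0, "in": 2.54, "inch": 2.54, "inches": 2.54}
NUMBER = r"\d+(?:\.\d+)?"
PATTERN = re.compile(
    rf"\s*({NUMBER}(?:\s*[x×]\s*{NUMBER})*)\s*(mm|cm|m|inches|inch|in)\s*")


def dimensions_cm(text):
    """Parse '30 x 40 cm' or '12 x 16 inches' into a tuple of values in cm.
    Returns None for text this simple pattern does not recognise."""
    m = PATTERN.fullmatch(text.lower())
    if not m:
        return None
    factor = TO_CM[m.group(2)]
    values = re.split(r"\s*[x×]\s*", m.group(1))
    return tuple(round(float(v) * factor, 2) for v in values)
```

With all values in centimetres, a query such as "objects wider than 50 cm" becomes a simple numeric comparison, whatever unit the legacy system used.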

Categorization

There is a need to match the various categorization standards (e.g. painting styles, categories, keyword lists) used in each of the legacy systems with a set of consistent standards specified in the unified database schema.

Various categorization schemas may be used: the AAT, the Italian National Thesaurus, various proprietary systems and so on. It is likely that some of the categorization schemas used in the legacy systems will be integrated into the architecture as knowledge bases, as described in the Thesauri and Dictionary Knowledge Bases section.

There is much ongoing work on ontology and categorization matching, and it may be possible to incorporate existing algorithms or even whole systems. However, the datasets must first be examined to gain a clearer idea of the problems to be overcome. For many categorization matching algorithms, obtaining the structure of the categorization systems is crucial.

It may be that in some of the datasets the thesaurus standards are available but not directly enforced. In these cases, natural language information extraction may be more suitable.

Multilingual Translation

The aim of multilingual translation in eCHASE is to provide automatic tools for importing material in different languages from legacy systems. This is a complex problem, and it is hard to know how much of it can be tackled in eCHASE without examining the datasets. The Multilingual Support section explains the general multilingual issues and design in more detail.

There may be approaches to reduce the complexity of the problem, such as using categorization matching if suitable multilingual thesauri are provided and these are strongly enforced in the data.

Natural Language

Natural language information extraction is likely to have various applications in consolidating data from the legacy systems. However, without examining the datasets it is hard to predict the problems that may be encountered and which techniques should be considered. Any natural language processing performed in eCHASE is likely to be built on top of existing systems, such as GATE.

There may be aspects of natural language in certain fields, such as the use of words such as "circa" or "after" in describing dates.

Some legacy systems may use controlled list fields whose data entry system did not directly enforce the controlled lists. As a result, conformance to the controlled list varies with the people who entered the values. Conversely, there may be free text fields where cataloguers often looked up values in a widely used dictionary or thesaurus such as the AAT. In both cases, the natural language extraction technique may be targeted at certain controlled lists or thesauri, but it must also cope with more general text.

There may be completely unstructured free text fields, such as image captions or object titles. These fields can be crucial, as there may be little other supporting metadata.

Thesauri and Dictionary Knowledge Bases

There are various requirements for background knowledge when performing mappings from the legacy systems.

It can be useful to have a specific schema or thesaurus to target. For example, if the legacy systems include details on artists, a universal resource for describing artists such as ULAN should be used in the unified database. A resource such as ULAN may also help resolve ambiguities and identify duplicate entries, for example by using further information about artists such as their dates of birth and death.

Background knowledge can also assist mapping. When matching across different categorization schemes, WordNet can be used to find synonyms of words and so determine more relevant matches. It is also important to model and store proprietary information such as thesauri and controlled lists, so that category matching to a common thesaurus can be performed.

Different representations and tools may be used for accessing background knowledge when performing the mappings, depending on the datasets provided and the translation modules being developed. For instance, simple look-up lists may be stored in a flat file or in database tables. Complex thesaurus structures are best suited to semantic web technologies, where complex rules and inference techniques can be applied.

There are various existing knowledge bases that can be used within eCHASE, some of which have been described in the Technological Baseline. These may include:

- ULAN

- TGN

- AAT

- WordNet

The eCHASE demonstrator subject, "Wandering Borders", is likely to drive some of the background knowledge required. Background repositories may therefore need to be created to support the content, such as information on Eastern European politicians. This knowledge could be obtained from repositories such as Wikipedia, either manually or with the aid of crawling and export tools.

Some institution-specific repositories may also be created by examining an institution’s legacy systems (see the ORF example under Translation Modules). Alternatively, existing knowledge bases may be enhanced using institution-specific data.

Metadata Repository

The results of the mapping and import translation modules are stored in a MySQL database that is structured with a schema created with the requirements of eCHASE in mind.

The Metadata Repository consists of 3 sub-repositories for each content provider. These are:

- Legacy database

- Index

- Unified database

The Legacy database will store the original data that was supplied by the content provider to allow the system to display and search legacy content if required by the user. It may also be necessary to store this information to show how the mapping process translated the original content to the unified model.

The Index stores representations of the metadata for searching the content at speed and with enhanced accuracy for searching unstructured content. The index will correlate with records from both the legacy and unified databases.

The design of the Unified database schema will be strongly influenced by the CIDOC Conceptual Reference Model (CRM), a reference model for the interchange of information in the cultural heritage domain. More details on the CRM are described in the technological baseline document. Although the CRM is an ontological model, it can inform the design of the database schema to guarantee high quality metadata structures that are able to cope with the wide range of information sources that the eCHASE architecture is expected to accommodate. This structure will be flexible and extensible for the requirements of integrating new systems used by content providers joining eCHASE in the future, and is well suited for interoperability with other systems.

The unified database schema will define identifiers that allow metadata records to reference media content, such as images and video files, in the repository supported by the media engine. URLs will identify media served by the Media and Media Descriptor Web Server (see the Media Engine section). Reference identifiers will link each record to its media for content-based searching (see the Query Processor description).

Multilingual Support

Many approaches to automatic multilingual translation exist, and each is a project in its own right; it is therefore impractical for eCHASE to implement its own natural language translation. This section discusses the practical approaches that could be implemented within the eCHASE project timeframe.

Deciding on the appropriate approaches requires further knowledge of the content providers’ metadata, which is not available at the time of writing. The Multilingual Translation module will therefore be treated as a black box, and only its interface is defined in this document.

The eCHASE system will build its own corpus of translated terms as it translates new words and phrases. Therefore it will be possible to reverse the translation if required.

An important point is that translation between languages always involves some loss of accuracy, and the more contextual information is provided, the better the result. For example, the English word "bank" has several meanings, including "river bank" and "financial institution". If the translator were simply asked to translate "bank" into French, it would have to pick one of the meanings at random. However, if the term "money" were supplied along with "bank", the translator could make a better guess that the word refers to a financial institution.
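The "bank"/"money" example can be illustrated with a simplified overlap (Lesk-style) heuristic. This technique is swapped in for illustration; the document does not prescribe it. `SENSES` is a hypothetical sense inventory with invented cue words. When the context offers no clue, the first listed sense is used as a fixed default instead of a random pick.

```python
# Hypothetical sense inventory: each sense of an English word has a French
# rendering and a set of cue words that tend to co-occur with that sense.
SENSES = {
    "bank": [
        {"fr": "rive", "cues": {"river", "water", "shore", "stream"}},
        {"fr": "banque", "cues": {"money", "account", "loan", "finance"}},
    ],
}


def translate_with_context(word, context_terms):
    """Pick the sense whose cue words overlap most with the context terms."""
    senses = SENSES.get(word.lower())
    if not senses:
        return None
    context = {t.lower() for t in context_terms}
    # max() returns the first best-scoring sense, so with no useful context the
    # first listed sense acts as a fixed default rather than a random pick.
    best = max(senses, key=lambda s: len(s["cues"] & context))
    return best["fr"]
```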

Multilingual translation service interface

The interface to the Multilingual Translation service contains a single method as shown in .

The method translate() receives four parameters:

- phrase (String): The text to be translated.

- langFrom (String): The language that "phrase" is written in.

- langTo (String): The language to translate to.

- context (Object): Extra context information, such as extra terms to help identify the true meaning of the word. In the case of translation with thesauri the context object will specify the specific thesaurus to use.
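The interface above could be expressed as follows. This is a sketch, not a definitive binding. The parameter names follow the specification (hence the camelCase) rather than Python conventions. `DictionaryTranslator` is a toy backend that treats a dict passed as `context` as the thesaurus to consult. `RecordingTranslator` is a hypothetical wrapper that builds the corpus of translated terms, so a translation can later be reversed as described earlier.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Optional, Tuple


class MultilingualTranslationService(ABC):
    """Black-box interface with the single translate() method."""

    @abstractmethod
    def translate(self, phrase: str, langFrom: str, langTo: str,
                  context: Optional[Any] = None) -> str:
        ...


class DictionaryTranslator(MultilingualTranslationService):
    """Toy backend. If `context` is a dict it is used as the thesaurus to
    consult, mirroring the thesaurus case in the specification."""

    def __init__(self, table: Dict[Tuple[str, str, str], str]):
        self.table = table

    def translate(self, phrase, langFrom, langTo, context=None):
        table = context if isinstance(context, dict) else self.table
        return table.get((phrase, langFrom, langTo), phrase)


class RecordingTranslator(MultilingualTranslationService):
    """Wraps any backend and records each translation, building the corpus of
    translated terms so that a translation can later be reversed."""

    def __init__(self, backend: MultilingualTranslationService):
        self.backend = backend
        self.corpus: Dict[Tuple[str, str, str], str] = {}

    def translate(self, phrase, langFrom, langTo, context=None):
        result = self.backend.translate(phrase, langFrom, langTo, context)
        # Key: (translated text, its language, language of the original phrase).
        self.corpus[(result, langTo, langFrom)] = phrase
        return result

    def reverse(self, translated: str, langFrom: str, langTo: str) -> Optional[str]:
        """Return the recorded original of `translated` (written in langFrom)."""
        return self.corpus.get((translated, langFrom, langTo))
```

Because callers depend only on `MultilingualTranslationService`, the backend can later be replaced by an external service or a thesaurus-based translator without changing them.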

Possible approaches

Existing language translation services

Several services exist for translating free text between languages, e.g. Google Translate and AltaVista Babel Fish. These external services can be used when no specific dictionaries are available for the translation.

Multilingual thesauri and dictionaries

A general multilingual resource such as EuroWordNet, a system of semantic networks for European languages, can be used to perform straightforward translations of a wide range of terms. Such systems can form the basis of translation systems or aid in the indexing and mapping of a specific set of terms.

More specific multilingual thesauri may be obtained for particular subjects and can be useful for translating certain database fields. In fact, some eCHASE partners already use multilingual thesauri to structure their metadata. If this thesaurus information can be obtained, very satisfactory translations can probably be achieved with category matching techniques, as described in the Categorization translation module. However, this requires the metadata systems to have strongly enforced the thesaurus structures; otherwise complex natural language techniques are likely to be required.

Content partners could provide manually authored translations for small subsets of data, from which a custom multilingual thesaurus could be created. This would be used for mapping specific controlled fields.

Thesaurus Engine

Advanced search mechanisms can be useful when searching large datasets. These may involve queries on controlled lists or thesauri data, and query expansion based on complex categorisation data. For example, when searching for all objects related to a country such as England, one would wish to obtain matches for all places in that country, such as London, Southampton and so on. This is generally not possible with traditional text-based database querying, where values are stored individually (e.g. "London", "Southampton") and matching is purely syntactic.

The eCHASE architecture must therefore provide functionality to perform these sorts of queries, i.e. query expansion through thesauri. One approach might be semantic web technologies, which provide mechanisms for satisfying such complex queries. However, semantic web technologies may not perform well at the scale of the eCHASE datasets, resulting in unsatisfactory query times and unresponsive user interfaces.

In eCHASE, basic thesauri functionality will be provided by the main architecture, in particular by the design of the schemas in the indexes and unified database that are exposed by the SRW. Advanced querying, including inference and reasoning, may be achievable through the export of the unified database to RDF and served by a semantic web triple store as discussed in Section . However, due to performance issues it is crucial that the eCHASE architecture does not rely on this layer for query expansion through thesauri data.

The core functionality that should be provided by the eCHASE architecture includes:

- Providing a mechanism for the web application to access thesauri data for visualisation. The thesauri navigation and visualisation component is described in Section .

- Queries based on thesauri information. For example, if a user requests objects in England, all records related to places within England need to be returned rather than simple syntactic matching.

- Broadening and narrowing of search terms. For example, if a user searches for objects in Southampton and the system returns few results, the term Hampshire should be suggested for obtaining values close to Southampton.

- Query expansion through the use of synonyms and related terms.
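The England and Southampton examples above amount to walking a term hierarchy in two directions. This minimal sketch assumes a hypothetical broader-term table, as might be derived from a Zthes or ISO 2788 style thesaurus. It expands a query term to all transitively narrower terms and suggests the next broader term.

```python
from collections import defaultdict

# Hypothetical broader-term relations from a place thesaurus (term -> broader term).
BROADER = {
    "London": "England",
    "Hampshire": "England",
    "Southampton": "Hampshire",
    "Winchester": "Hampshire",
    "England": "United Kingdom",
}

# Invert the relation once so narrower terms can be looked up directly.
NARROWER = defaultdict(list)
for narrower_term, broader_term in BROADER.items():
    NARROWER[broader_term].append(narrower_term)


def expand(term):
    """Query expansion: the term plus every transitively narrower term."""
    seen, stack = set(), [term]
    while stack:
        current = stack.pop()
        if current not in seen:        # guards against cycles in bad data
            seen.add(current)
            stack.extend(NARROWER.get(current, []))
    return sorted(seen)


def broaden(term):
    """Suggest the next broader term, e.g. when a search returns few results."""
    return BROADER.get(term)
```

The expanded list could then be turned into an ordinary query on the place field (for example an SQL `IN` clause). The database itself still performs only syntactic matching.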

Note that there is no specific thesaurus engine component in the eCHASE architecture. Thesaurus-related functionality is distributed between the design of the metadata repository schemas and the implementation of the SRW. There are specifications for implementing Z39.50 thesaurus SRW services, such as the Zthes profile. This profile describes an abstract model for representing and searching thesauri (semantic hierarchies of terms as described in ISO 2788) and specifies how the model may be implemented using the Z39.50 and SRW protocols. It also suggests how the model may be implemented using other protocols and formats. This specification, along with other existing database thesaurus implementations, needs to be investigated.

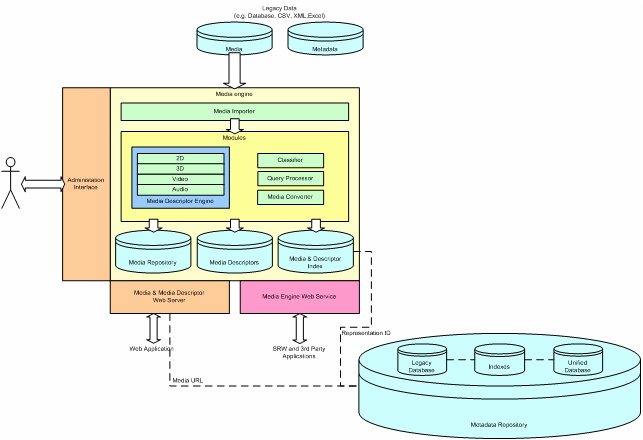

Media Engine

The media engine handles the consolidation of the multimedia collections provided by the content partners. Besides importing, managing and serving the multimedia content so that it can be displayed to users, it supports state-of-the-art content-based retrieval queries. These mechanisms can be extended to classify multimedia content based on low-level content features such as color or texture.

The overview of the media engine subsystem is shown below, and each of the components is also described below.

Media Importer

This importer module consists of tools and scripts that automate the batch importing of large collections of multimedia content into the structure used by the media repository. The importer is able to use the media converter component of the media engine to apply transformations of the source media into a desired format or to generate thumbnails.

The results of the import process are placed in the media repository in the correct format, following the structure described under Media Repository.

Media Administration Interface

The media administrator component provides a user interface for automated as well as manual management of the media and descriptor repositories. It provides mechanisms to support the event-driven processing of descriptors for new images or the regeneration of descriptors due to software updates.

The media administrator uses the media descriptor engine functions for generating descriptors. It provides feedback on the progress of processing tasks, as well as reporting on the success or failure of a running scheduled task.

Media Modules

The media engine is composed of the components described below and is responsible for storing the actual media content, i.e. the image, audio and video files. The metadata in the unified database is linked to media stored in the media engine through reference identifiers.

The media engine also provides background batch processing facilities that can be applied to some of the components performing time consuming tasks, such as generating feature vectors or batch converting a large collection of images. These processes can be set up to run in the background when the server running the media engine is under less strain (e.g. at night), or so that they do not use up the entire server’s processing resources.

Media Descriptor Engine

The media descriptor engine handles the low level interfaces to the descriptor generation and comparison functions. The generation functions will generate suitable descriptors from media objects in the media repository and store the result in the media descriptor repository. It will create the appropriate indexes in the descriptor index to preserve links between the original media object and the generated descriptors.

The comparison functions will compare descriptors of the same type and return similarity or dissimilarity scores. These functions will form the basis of the descriptor-based queries decomposed in the Query Processor. The engine will also keep track of descriptor version numbers, so that descriptors can easily be regenerated following software upgrades.
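The generate, compare and version-tracking roles above can be illustrated with a toy descriptor. The grey-level histogram and histogram intersection are stand-ins chosen for brevity. They are not the Sculpteur or Artiste descriptors, and `CURRENT_VERSION` is a hypothetical software version number.

```python
from dataclasses import dataclass
from typing import List

CURRENT_VERSION = 2  # hypothetical version of the descriptor-generation code


@dataclass
class Descriptor:
    kind: str            # descriptor type, e.g. "grey-histogram"
    version: int         # version of the software that generated it
    values: List[float]


def grey_histogram(pixels: List[int], bins: int = 4) -> Descriptor:
    """Toy generation function: normalised histogram of 0-255 intensities."""
    counts = [0] * bins
    for p in pixels:
        counts[p * bins // 256] += 1
    total = len(pixels) or 1
    return Descriptor("grey-histogram", CURRENT_VERSION, [c / total for c in counts])


def similarity(a: Descriptor, b: Descriptor) -> float:
    """Histogram intersection: 1.0 for identical normalised histograms, 0.0 for disjoint ones."""
    if a.kind != b.kind:
        raise ValueError("only descriptors of the same type can be compared")
    return sum(min(x, y) for x, y in zip(a.values, b.values))


def needs_regeneration(d: Descriptor) -> bool:
    """True if the descriptor was produced by an older software version."""
    return d.version < CURRENT_VERSION
```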

We are intending to reuse media descriptor components developed in the Sculpteur and Artiste projects, although we would like to incorporate other content based systems such as the ones described in .

Classifier

The classifier can use clustering techniques to help speed up retrieval. The query object is compared with a representative object of each cluster, and only the objects in the nearest clusters are then considered. This can significantly reduce the total number of comparisons.
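The cluster-pruning idea can be sketched as follows. The sketch assumes that clusters and their centroids (the "representative objects") have already been computed, for example by k-means, which is not shown. The search is approximate: a true nearest neighbour lying in an unvisited cluster can be missed, and `n_clusters` trades speed for accuracy.

```python
import math


def nearest_cluster_search(query, clusters, n_clusters=1):
    """clusters: list of (centroid, members), members being (media_id, vector).

    The query is compared with each cluster's representative (its centroid) and
    only the members of the n_clusters closest clusters are compared exhaustively.
    This is approximate: a true nearest neighbour in another cluster can be missed.
    """
    ranked = sorted(clusters, key=lambda c: math.dist(query, c[0]))
    candidates = [m for _, members in ranked[:n_clusters] for m in members]
    candidates.sort(key=lambda m: math.dist(query, m[1]))
    return [media_id for media_id, _ in candidates]
```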

The classifier may also be able to create associations between metadata and concepts in the ontology and media objects using their associated descriptors. Using existing information as training data, metadata can automatically be assigned to new objects entering the system.

Query Processor

The Query Processor interacts with the Search Engine to enable content-based search. The processor is responsible for carrying out complete descriptor-based queries, and uses the Media Descriptor Engine and Descriptor Index to accomplish this.

The Query Processor can accept lists of media resource identifiers that restrict the search to certain media entities. This enables optimized combined searches, such as searching for images that are similar to a user-provided query image and were created by artists whose names begin with ’A’. The Search Engine first finds all images by artists whose names begin with ’A’. It then passes this result set to the Query Processor together with the query image. The content-based search then only needs to consider the images in the result set.
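The combined search above can be sketched as a restriction step before ranking. This is a minimal sketch under stated assumptions. Descriptors are plain feature vectors compared by Euclidean distance, and the function name and parameters are hypothetical rather than the Query Processor's actual interface.

```python
import math


def content_based_search(query_vec, descriptors, restrict_to=None, top_k=10):
    """Rank media by descriptor distance to the query (smaller is more similar).

    descriptors: dict mapping media id -> feature vector.
    restrict_to: optional ids from a prior metadata search; only these are compared.
    """
    if restrict_to is None:
        ids = descriptors.keys()
    else:
        ids = set(restrict_to).intersection(descriptors)
    ranked = sorted((math.dist(query_vec, descriptors[i]), i) for i in ids)
    return [media_id for _, media_id in ranked[:top_k]]
```

In the artist example, the Search Engine would first produce something like `[m for m, name in artists.items() if name.startswith("A")]` and pass it as `restrict_to`. The distance computation then runs only over that subset.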

Results from the Query Processor are returned to the Search Engine.

Media Converter

The media converter provides functionality for converting media content into different formats. This includes generating image thumbnails, converting between media formats, creating images at different resolutions and extracting key frames from videos.

The functionality of the media converter is called from the importer and the media administrator.

Media Repository

The media repository stores media files in a file system hierarchy. The file system path to the media file is a function of the media file’s resource identifier (unique id) in the unified database.

Descriptor Repository

The Descriptor Repository stores multimedia descriptor files in a file system. The file system hierarchy is the same as in the Media Repository. Each of the media entities from the Media Repository is represented as a directory in the Descriptor Repository with the same name. The directories contain the versioned media descriptors.
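The path scheme described for both repositories can be sketched as follows. The specification only says that the path is a function of the resource identifier. The two levels of hash-derived sub-directories used here are an assumption, chosen to keep directory sizes small. The descriptor file naming (`<kind>.v<version>`) is likewise hypothetical.

```python
import hashlib
from pathlib import PurePosixPath


def _shard(resource_id):
    """Two levels of sub-directories derived from a hash of the identifier
    (an assumption; it keeps the number of entries per directory small)."""
    digest = hashlib.sha256(str(resource_id).encode("utf-8")).hexdigest()
    return digest[:2], digest[2:4]


def media_path(root, resource_id, extension):
    """Path of a media file, computed purely from its unified-database id."""
    return PurePosixPath(root, *_shard(resource_id), f"{resource_id}.{extension}")


def descriptor_path(root, resource_id, kind, version):
    """Same hierarchy as the media repository: one directory per media entity,
    named after its id, holding versioned descriptor files."""
    return PurePosixPath(root, *_shard(resource_id), str(resource_id), f"{kind}.v{version}")
```

Because both paths are computed from the identifier alone, the descriptor root can live on a different physical disk without any lookup table linking the two repositories.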

The Descriptor Repository could reside on a different physical disk to the Media Repository. This would enable the overall system to continue to work (albeit without content-based search) in case of failure or maintenance.

Media and Descriptor Index

The Media and Descriptor Index allows high performance content-based searching. The Index provides indexes for some or all of the media descriptors to avoid having to perform slow, brute-force descriptor-by-descriptor comparisons. The individual indexes could be in a database table or a custom format (e.g. multidimensional index, or inverted files), depending on the requirements of the descriptor being indexed.

The Media and Descriptor Index is particularly important for media descriptors that contain temporal and/or spatial localization information. The index should probably reside on a separate physical disk to the repositories for performance purposes.

Media and Media Descriptor Web Server

Multimedia imported by the Media Engine will be hosted by the Media Engine and served up to applications through a web server. The Unified database will store URLs to the media on the web server that will be provided to the user’s browser by the SRW (see Section ).

Media Engine Web Service

The Media Engine is designed as a component loosely coupled to the main SRW search engine, so that the SRW implementation is independent of how content-based queries are implemented. The Media Engine will expose its content-based functionality through a web service interface for searching, thumbnail generation and media descriptor generation.

User Management

Provisioning Module

Provisioning is the automation of all the procedures and tools to manage the lifecycle of an identity: creation of the identifier for the Identity; linkage to the authentication provider; setting and changing attributes and privileges; and decommissioning the identity. This module allows some form of self-service for the creation and ongoing maintenance of an identity that belongs to the general subscriber users.

This module provides the interfaces to create, modify, and delete a user identity. These functionalities are implemented using the API published by the User Data Repository.

This module is used by the self-service module to create a user account and to change or reset a user’s password.

This module is also used by Administrator module to manage the identity lifecycle of users that have particular roles in the eChase System and need special privileges.

At present eChase does not envisage the need for a metadirectory system to manage users coming from external systems.
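The create, modify, change-password, reset and delete operations above can be sketched against a stand-in repository. This is a minimal sketch under stated assumptions. `UserDataRepository` is a hypothetical in-memory substitute for the real User Data Repository API. Passwords are stored as salted PBKDF2 hashes, with an assumed iteration count. A reset generates a temporary password that is returned for out-of-band delivery.

```python
import hashlib
import hmac
import os
import secrets

PBKDF2_ITERATIONS = 100_000  # assumption; tune to the deployment hardware


class UserDataRepository:
    """In-memory stand-in for the User Data Repository API (hypothetical)."""

    def __init__(self):
        self.users = {}


class ProvisioningModule:
    def __init__(self, repo: UserDataRepository):
        self.repo = repo

    @staticmethod
    def _hash(password: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)

    def create(self, username, password, roles=("subscriber",)):
        if username in self.repo.users:
            raise ValueError(f"identity {username!r} already exists")
        self.repo.users[username] = {"roles": set(roles), "attributes": {}}
        self.change_password(username, password)

    def modify(self, username, **attributes):
        self.repo.users[username]["attributes"].update(attributes)

    def change_password(self, username, new_password):
        salt = os.urandom(16)
        self.repo.users[username].update(salt=salt, hash=self._hash(new_password, salt))

    def reset_password(self, username):
        """Set a random temporary password and return it for out-of-band delivery."""
        temporary = secrets.token_urlsafe(12)
        self.change_password(username, temporary)
        return temporary

    def verify(self, username, password):
        user = self.repo.users.get(username)
        return user is not None and hmac.compare_digest(
            user["hash"], self._hash(password, user["salt"]))

    def delete(self, username):
        """Decommission the identity."""
        self.repo.users.pop(username, None)
```

Both the self-service and the administrator paths would call the same module, so password rules and storage format are enforced in one place.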

Self service Module

This module enables users to self-register for access to eChase services and to manage their profile information without administrator intervention. It also allows users to manage their authentication credentials by setting and resetting passwords. Self-service reduces IT operation costs, improves customer service, and improves information consistency and accuracy.

It interacts with Visualization and Navigation modules and with the provisioning module.

Accounting and Log engine

Overview

These modules provide APIs for gathering data about transaction activities for different purposes.

The eChase System will exchange information with external systems and will be able to collect information about these exchanges in both directions. In addition, eChase will have to keep track of all internal activities.

This information may be used, later or in real time, for different purposes such as:

- Logging and Navigation on the system

- Evidence of specific transactions (internal and external)

- Reports and Statistics

- Tracking of Payments

- Etc.

The Accounting Engine, together with the Log Engine, is mainly responsible for processing and managing this kind of information.

The Log Engine module, in particular, provides the mechanism to track how information in the repository is created, modified and used.

This module may be based on the customization of an open source module (e.g. Log4j).

It provides an API to trace and record important information at different levels of severity:

- Error: situations that prevent the normal behavior of the system.

- Warning: situations that are abnormal but do not prevent the system from working, e.g. disk space falling below a fixed threshold.

- Information: important system events.

- Verbose: detailed information that is very useful for system troubleshooting.

The module is configured by choosing a particular level. Once a level has been configured, messages at that level and at every more severe level are recorded; for example, configuring Warning records both Warning and Error messages.

The configuration changes are dynamic; they do not require a system restart to take effect.
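The level semantics and dynamic reconfiguration described above can be shown with Python's standard `logging` module, used here as an analogue of Log4j. Mapping Verbose to `DEBUG` is an assumption, and the logger name is hypothetical.

```python
import logging

# The four levels named above, mapped onto Python's standard levels
# (Verbose is treated as DEBUG; this mapping is an assumption).
LEVELS = {
    "Error": logging.ERROR,
    "Warning": logging.WARNING,
    "Information": logging.INFO,
    "Verbose": logging.DEBUG,
}

logger = logging.getLogger("echase.log_engine")  # hypothetical logger name


def set_log_level(name: str) -> None:
    """Change the threshold at run time; the next logging call honours it."""
    logger.setLevel(LEVELS[name])
```

After `set_log_level("Warning")`, a call such as `logger.warning("Disk space below threshold: %d MB free", 512)` is recorded, while `logger.info(...)` calls are dropped. No restart is needed to switch to `"Verbose"` for troubleshooting.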

Typical examples of reports extracted from a log file are the following:

| Report | Period |
|---|---|
| Directory hits | Weekly |
| Top requested pages | Weekly |
| Top requested pages | Monthly |
| Browser requests | Weekly |
| Browser requests | Monthly |
| External domains (from) | Weekly |
| External domains (from) | Monthly |

In particular, the following sections examine the main features related to Reports and Statistics; these general considerations could also be applied to similar tasks.

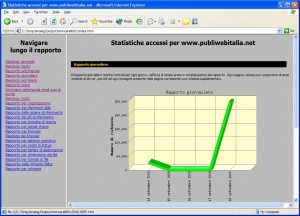

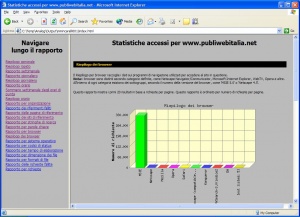

Reports and Statistics

Collecting the information and producing the reports (for the various purposes) is generally a multi-step process. It is based on storing and processing the log files produced by the different Web Servers that normally manage the HTTP traffic of the Web Site/Portal.

A generic job flow may be represented as follows:

- An application running on the Report and Accounting Server copies the log files of day N-1 (the previous day) from the front-end Web Servers to a dedicated File Server.

- On the Report Server, an application joins all the log files (one per front-end Web Server).

- On the Report Server, an application extracts the required information from the full day's log file and compresses the result with zip, whose checksums allow the file's integrity to be verified on decompression. It then sends the compressed file via FTP to the Accounting platform (or to another application on the same machine).

- The Accounting file (a subset of the information in the log file) could be a plain ASCII text file with a generic classification name. For traffic information its structure could be:

- Request time: yyyymmddhhmmss

- Browser IP address

- Username or "-" in case of anonymous access to the web page

- Requested web page

- Outbound HTTP traffic (Bytes transmitted to the browser)

- Inbound HTTP traffic (Bytes received from browser)

- Accessed Domain (e.g. www.publiwebitalia.net)

- HTTP status code of the request
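A record with the traffic fields listed above might be parsed and written back as follows. This is a sketch only. The tab separator is an assumption, since the text does not specify one. The field order follows the list, and the request time uses the yyyymmddhhmmss format given there.

```python
from dataclasses import dataclass
from datetime import datetime

TIME_FORMAT = "%Y%m%d%H%M%S"  # the yyyymmddhhmmss request time
SEPARATOR = "\t"              # assumption: the field separator is not specified


@dataclass
class AccountingRecord:
    request_time: datetime
    browser_ip: str
    username: str      # "-" for anonymous access
    page: str
    bytes_out: int     # outbound HTTP traffic (bytes sent to the browser)
    bytes_in: int      # inbound HTTP traffic (bytes received from the browser)
    domain: str
    status: int        # HTTP status code

    @classmethod
    def parse(cls, line: str) -> "AccountingRecord":
        f = line.rstrip("\n").split(SEPARATOR)
        return cls(datetime.strptime(f[0], TIME_FORMAT), f[1], f[2], f[3],
                   int(f[4]), int(f[5]), f[6], int(f[7]))

    def to_line(self) -> str:
        return SEPARATOR.join([
            self.request_time.strftime(TIME_FORMAT), self.browser_ip, self.username,
            self.page, str(self.bytes_out), str(self.bytes_in), self.domain,
            str(self.status)])

    @property
    def anonymous(self) -> bool:
        return self.username == "-"
```

Parsing into typed fields lets the downstream report applications aggregate traffic (for example bytes per domain per week) without re-parsing the text format each time.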

The resulting accounting data could then be stored in a dedicated repository (e.g. a Log and/or Accounting/Billing Repository).

The subsequent workflow may be represented as a group of specialized Applications that produce specific output reports complying with the particular input request.

In a Billing scenario the Accounting information could be the input data to this Subsystem for subsequent market analysis as represented in the following schema:

Possible Software solutions

This section proposes some software tools that have been used in similar projects.

Analog

Statistics may be produced using free tools such as Analog (http://www.analog.cx).

This is well-known software that runs on different platforms (Win32, Linux, etc.) and comes with its source code; it is free even for commercial use.

Report Magic

Analog output is converted into HTML reports using another free tool, Report Magic (http://www.reportmagic.org). Like Analog, it is well-known software that runs on different platforms (Win32, Linux, etc.), comes with its source code and is free even for commercial use.

AnalogX QuickDNS

Although Analog processes log files very efficiently, it is not well suited to resolving IP addresses into DNS names (required for domain reporting).

QuickDNS (http://www.analogx.com/contents/download/network/qdns.htm) is a freeware utility that performs this task and integrates well with Analog.

Its main task is to populate, from the Web Server log files, a DNS lookup file in the format expected by Analog.
