Friday, October 27. 2006

Paragraph-Based Quotation in Place of PDF/Page-Based

In the online age, page/line-based quotation is obsolete (for current and forward-going text). Pages are and have always been arbitrary entities. A document's natural landmarks are sections, paragraphs and sentences. That is how quotations and passages should be cited, not by page numbers (though page numbers can be added in parens as a courtesy and curiosity, for continuity, for the time being, while pages -- and PDF -- scroll inexorably toward their natural demise).

It goes without saying that all quotations, citations and references should be hyperlinked. I am sure that XML documents will be tagged for section number, paragraph number and sentence number, so that it will be natural not only to pinpoint the passage to which one wishes to refer but to hyperlink directly to it (especially for quote/commenting).

This answers, in passing, one faint concern about the self-archiving of authors' final refereed drafts instead of the published PDF: "How will I specify the location of passages I wish to single out or quote?" The answer is paragraph numbers (or, if you want to be even more precise, section numbers, paragraph numbers and sentence spans). They have the virtue not only of being autonomous and ascertainable from the document itself, but also of being independent of arbitrary pagination and PDF. (They will also be useful for digitometric analyses.)
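The paragraph-and-sentence locators described above can be sketched in a few lines of Python. This is a minimal illustration, not an XML tagging standard. It assumes plain text whose paragraphs are separated by blank lines and whose sentences end in '.', '!' or '?'. The locator format (`p2.s1-s2`) and the function names `index_passages` and `cite` are hypothetical. Section numbers are left out for brevity; they would add one more level of prefix.

```python
from __future__ import annotations

import re

# Naive sentence boundary: whitespace preceded by ., ! or ? (an assumption;
# a properly tagged document would carry explicit sentence markup instead).
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")
# Paragraph boundary: one or more blank lines.
PARAGRAPH_BREAK = re.compile(r"\n\s*\n")


def index_passages(text: str) -> dict[str, str]:
    """Map hypothetical locators such as 'p2' and 'p2.s1' to their text."""
    index: dict[str, str] = {}
    paragraphs = [p.strip() for p in PARAGRAPH_BREAK.split(text) if p.strip()]
    for p_num, para in enumerate(paragraphs, start=1):
        index[f"p{p_num}"] = para
        sentences = [s for s in SENTENCE_END.split(para) if s]
        for s_num, sentence in enumerate(sentences, start=1):
            index[f"p{p_num}.s{s_num}"] = sentence
    return index


def cite(url: str, paragraph: int, first: int | None = None,
         last: int | None = None) -> str:
    """Build a link to a whole paragraph or to a span of its sentences."""
    if first is None:
        return f"{url}#p{paragraph}"
    if last is None:
        last = first
    return f"{url}#p{paragraph}.s{first}-s{last}"


doc = "Pages are arbitrary. Paragraphs are not.\n\nCite by paragraph! Or by sentence span."
idx = index_passages(doc)
print(idx["p2.s2"])                                # Or by sentence span.
print(cite("https://example.org/paper", 2, 1, 2))  # https://example.org/paper#p2.s1-s2
```

The URL is a placeholder. A punctuation-based splitter like this one will mis-split abbreviations such as "e.g.", which is one reason explicit tagging of paragraphs and sentences in the source document is preferable to inferring them afterwards.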

(I proposed this rather trivial and obvious online solution in Psycoloquy in the early 90's -- though I'm sure I wasn't the first -- and APA at last began recommending it in 2001 or so.)

American Scientist Open Access Forum


Stevan Harnad

Harnad, S. (1995) Interactive Cognition: Exploring the Potential of Electronic Quote/Commenting. In: B. Gorayska & J.L. Mey (Eds.) Cognitive Technology: In Search of a Humane Interface. Elsevier. Pp. 397-414.

Light, P., Light, V., Nesbitt, E. & Harnad, S. (2000) Up for Debate: CMC as a support for course related discussion in a campus university setting. In: R. Joiner (Ed.) Rethinking Collaborative Learning. London: Routledge.

Harnad, S. (2003/2004) Back to the Oral Tradition Through Skywriting at the Speed of Thought. Interdisciplines. French version: Retour à la tradition orale: écrire dans le ciel à la vitesse de la pensée. In: Salaün, Jean-Michel & Vandendorpe, Christian (Eds.) Les défis de la publication sur le web: hyperlectures, cybertextes et méta-éditions. Presses de l'enssib.


Thursday, October 26. 2006

October 2006: OA Self-Archiving Mandate Month

If (with Peter Suber, in today's Open Access News), we count October's full mandates adopted (BBSRC, ESRC, MRC, NERC, PPARC), expanded (Wellcome Trust), and proposed (Canada's CIHR, US's HHMI) -- plus Austria's FWF's and UK's CCLRC's "semi-mandates" -- that would make ten in one month (even more than Peter's eight, which missed PPARC and CCLRC!). (There might even be an 11th expanded October mandate from Australia's University of Tasmania, just registered by Arthur Sale in ROARMAP.)

The adoption of two big proposed mandates is still being breathlessly awaited: FRPAA in the US and the European Commission's Recommendation (A1), but there's no need for other institutions, funders or nations to wait to adopt, register and announce their own!


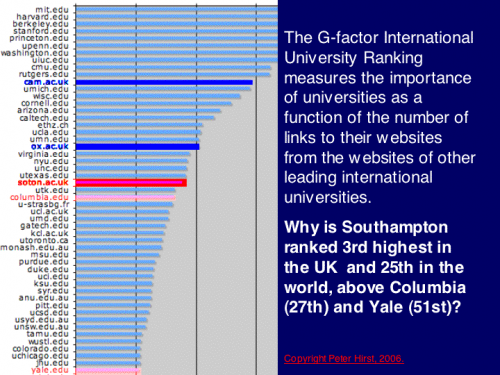

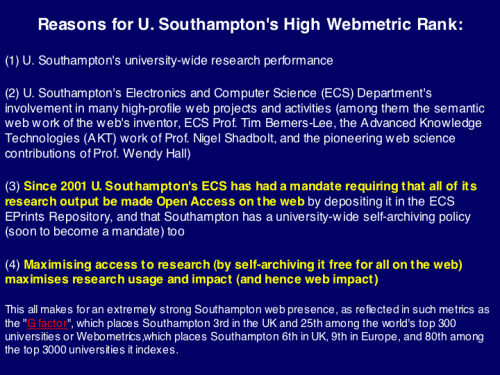

Why is Southampton's G-Factor (web impact metric) so high?

Why is U. Southampton's rank so remarkably high (second only to Cambridge and Oxford in the UK, and out-ranking the likes of Yale, Columbia and Brown in the US)?

Long practising what it has been preaching -- about maximising research impact through Open Access Self-Archiving -- is a likely factor. (This is largely a competitive advantage: Southampton invites other universities to come and level the playing field -- by likewise self-archiving their own research output!)

How OA is related to OS and FS

Open Access (OA), Open Source (OS), Free Software (FS) and Creative Commons (CC) share commonalities but also have some differences: there are genuine convergences and genuine divergences. Forcing them all into a single procrustean mold does them all a disservice. I agree that they should not do one another harm, and they do not and will not. But it would also do a kind of harm to conflate things that are logically and practically distinct. And it doesn't work, in concrete terms, no matter how much abstract discourse it may generate:

Here is the heart of the matter. Let me try to convey it purely by analogy first: I am personally in favour of open-code pharmacology ("OP"): The formula for potential cures should not be kept secret, or prevented from being used to sell or even give away the medicine.

It does not follow from this, however, that if a commercial pharmaceutical company develops a non-OP cure for AIDS today, I will refuse to use it or promote it! Nor will I try to suppress or refuse to cooperate with non-OP research or non-OP researchers while there are still diseases, and patients needing to be cured now.

To take this allegory further: OA is like Médecins Sans Frontières (MSF). All those doctors have already dedicated themselves to providing their services for free, but it does not follow that they should use only OP drugs, or collaborate only with OP suppliers. They should use whatever they can get, and favor OP whenever they can do so, but not at the expense of the immediate needs of their patients.

That's how it is with OA and OS/FS/CC: I am personally 100% in favour of OA, FS, and CC for my own output (though I think I shall write little software in this lifetime!) and for the output of others of a like mind on OA/FS/CC. But I would be against imposing OS/FS/CC (or OP) by fiat, for fear of losing potential creativity and productivity. (This is a point on which otherwise like-minded people can and do disagree.)

And the immediate situation for OA is this: 100% OA is within reach now. It needs OAI-compliant OA Institutional Repository (IR) software. I would personally prefer that all OA IR software (indeed all software) were GPL, but I would not mandate it, nor would I refuse the immediate help of non-GPL software in reaching 100% OA, any more than an MSF doctor would refuse the immediate help of a non-OP drug for treating AIDS, now.

(Maximizing research impact is not as pressing a problem as saving people from AIDS; so this analogy, like all analogies, has its limitations. But it's not a bad idea to ask ourselves quite explicitly whether we take research so lightly that we agree with the above reasoning for AIDS, but not for research.)


Wednesday, October 25. 2006

PPARC: 13th Self-Archiving Mandate, 6th from a funder, 7th from UK

Saturday, October 21. 2006

Arthur Sale: On the Effectiveness and Time-Course of Open Access Self-Archiving Mandates

ABSTRACT: The behavior of researchers when self-archiving in an institutional repository has not been previously analyzed. This paper uses available information for three repositories, analyzing when researchers (as authors) deposit their research articles. The three repositories have variants of a mandatory deposit policy. It is shown that it takes several years for a mandatory policy to be institutionalized and routinized, but that once it has been, the deposit of articles takes place in a remarkably short time after publication, or in some cases even before. Authors overwhelmingly deposit well before six months after publication date. The OA mantra of ‘deposit now, set open access when feasible’ is shown to be not only reasonable, but fitting what researchers actually do.

See also: Sale, Arthur (2006) Comparison of content policies for institutional repositories in Australia. First Monday 11(4) April.

ABSTRACT: Seven Australian universities have established institutional repositories (containing research articles, also known as eprints) that can be analyzed for content and which were in operation during 2004 and 2005. This short paper analyses their content and shows that a requirement to deposit research output into a repository coupled with effective author support policies works in Australia and delivers high levels of content. Voluntary deposit policies do not, regardless of any author support by the university. This is consistent with international data.

Tuesday, October 17. 2006

Premature Rejection Slip

Richard Poynder, in "Open Access: death knell for peer review?", has written yet another thoughtful, stimulating essay. But I think that he, and many of the scholars and scientists he cites, are quite baldly wrong on this one!

SUMMARY: Richard Poynder's essay on peer review is thoughtful and stimulating but quite wrong! Peer review is like water-quality control: No one should have to risk doing it all for himself (or relying on those who do it for themselves). (And it has nothing to do with OA, which is about making the filtered, quality-controlled water free for all.)

What is peer review? Nothing more nor less than qualified experts vetting the work of their fellow specialists to make sure it meets certain established standards of reliability, quality and usability -- standards that correspond to the quality level of the journal whose name and track-record certifies the outcome.

Peer review is dynamic and answerable: Dynamic, because it is not just an "admit/eject" decision by a gate-keeper or an "A/B/C/D" mark assigned by a schoolmarm, but an interactive process of analysis, criticism and revision that may take several rounds of successive revisions and re-refereeing. And answerable, because the outcome must meet the requirements set out by the referees as determined by the editor, sometimes resulting in an accepted final draft that is very different from the originally submitted preprint -- and sometimes in no accepted draft at all.

Oh, and like all exercises in human judgment, even expert judgment, peer review is fallible, and sometimes makes errors of both omission and commission (but neither machinery nor anarchy can do better). It is also approximate rather than exact; and, as noted, quality-standards differ from journal to journal, but are generally known from the journal's public track record. (The only thing that does resemble an A/B/C/D marking system is the journal-quality hierarchy itself: Meeting the quality-standards of the best journals is rather like receiving an A+, and the bottom rung is not much better than a vanity press.)

But here are some other home truths about peer review (from an editor of 25 years' standing who alas knows all too well whereof he speaks): Qualified referees are a scarce, over-harvested resource. It is hard to get them to agree to review, and hard to get them to do it within a reasonable amount of time. And it is not easy to find the right referees; ill-chosen referees (inexpert or biassed) can suppress a good paper or admit a bad one; they can miss detectable errors, or introduce gratuitous distortions.

Those who think spontaneous, self-appointed vetting can replace the systematic selectivity and answerability of peer review should first take on board the ineluctable fact of referee scarcity, reluctance and tardiness, even when importuned by a reputable editor, with at least the prospect that their efforts, though unpaid, will be heeded. (Now ask yourself the likelihood that the right umpires will do their duty on their own, and not even sure of being heeded for their pains.)

Friends of self-policed vetting should also sample for a while the raw sludge that first makes its way to the editor's desk, and ask themselves whether they would rather everyone had to contend directly with that commodity for themselves, instead of having it filtered for them by peer review, as now. (Think of it more as a food-taster for the emperor at risk of being poisoned -- rather than as an elitist "gate-keeper" keeping the hoi polloi out of the club -- for that is closer to what a busy researcher faces in trying to decide what work to risk some of his scarce reading time on, or (worse) his even scarcer and more precious research time in trying to build upon.)

And peer-review reformers or replacers should also reflect on whether they think that those who have nothing better to do with their time than to wade through this raw, unfiltered sludge on their own recognizance -- posting their take-it-or-leave-it "reviews" publicly, for authors and users to heed as they may or may not see fit -- are the ones they would like to trust to filter their daily sludge for them, instead of answerable editors' selected, answerable experts.

Or whether they would like to see the scholarly milestones, consisting of the official, certified, published, answerable versions, vanish in a sea of moving targets, consisting of successive versions of unknown quality, crisscrossed by a tangle of reviews, commentaries and opinions of equally unknown quality.

Not that all the extras cannot be had too, alongside the peer-reviewed milestones: In our online age, no gate-keeper is blocking the public posting of unrefereed preprints, self-appointed commentaries, revised drafts, and even updates and upgrades of the published milestones -- alongside the milestones themselves. What is at issue here is whether we can do without the filtered, certified milestones themselves (until we once again reinvent peer review).

The question has to be asked seriously; and if one hasn't the imagination to pose it from the standpoint of a researcher trying to make tractable use of the literature, let us pose it more luridly, from the standpoint of how to treat a family member who is seriously ill: navigate the sludge directly, to see for oneself what's on the market? ask one's intrepid physician to try to sort out the reliable cure from the surrounding ocean of quackery? And if you think this is not a fair question, do you really think science and scholarship are that much less important than curing sick kin?

Eppur, eppur... what tugs at me on odd days of the week is the undeniable fact that most research is not cited, nor worth citing, anyway, so why bother with peer review [or, horribile dictu, OA!] for all of that? And at the other end, the few authors of the very, very best work are virtually peerless, and can exchange their masterworks amongst themselves, as in Newton's day. So is all this peer review just to keep the drones busy? I can't say. (I used to mumble things in reply to the effect that "we need to countenance the milk in order to be ensured of the cream rising to the top" or "we need to admit the chaff in order to sift out its quota of wheat" or "we need to screen the Gaussian noise if we want to ensure our ration of signal.") [1], [2], [3]

But I can say that none of this has anything to do with Open Access, now (except that it can be obtruded, along with so many other irrelevant things, to slow OA's progress). If self-archiving mandates were adopted universally, all of this would be mooted. The current peer-reviewed literature, such as it is, would at long last be OA -- which is the sole goal of the OA movement, and the end of the road for OA advocacy, the rest being about scholars and scientists making use of this newfound bonanza, whilst other processes (digital preservation, journal reform, copyright reform, peer review reform) proceed apace.

As it is, however, second-guessing the future course of peer review is still one of the at least 34 pieces of irrelevance distracting us from getting round to doing the optimal and inevitable at long, long last...

Stevan Harnad

Harnad, S. (1986) Policing the Paper Chase. (Review of S. Lock, A Difficult Balance: Peer Review in Biomedical Publication.) Nature 322: 24-25.

Harnad, S. (1990) Scholarly Skywriting and the Prepublication Continuum of Scientific Inquiry. Psychological Science 1: 342-343.

Harnad, S. (1998) The invisible hand of peer review. Nature [online] (c. 5 Nov. 1998) Exploit Interactive version

Peer Review Reform Hypothesis-Testing (started 1999)

A Note of Caution About "Reforming the System" (2001)

Self-Selected Vetting vs. Peer Review: Supplement or Substitute? (2002)

Peer Review: Streamlining It vs. Sidelining It

American Scientist Open Access Forum

Monday, October 16. 2006

The Bangalore Commitment: “Self-Archive Unto Others as You Would Have Others Self-Archive Unto You”

Appeared in [...], Wednesday Nov 1, 2006.

SUMMARY: There is no need for developing countries to wait for the developed countries to mandate Open Access (OA) self-archiving: They have more to gain because currently both their access and their impact are disproportionately low, relative to their actual and potential research productivity and influence. Lately there have been many abstract avowals of support for the Principle of OA, but what the world needs now is concrete commitments to its Practice. Under the guidance of India’s tireless OA advocate, Subbiah Arunachalam, there will be a two-day workshop on research publication and OA at the Indian Institute of Science in Bangalore on November 2-3, at which the three most research-active developing countries – India, China and Brazil – will frame the “Bangalore Commitment”: a commitment to mandate OA self-archiving in their own respective countries and thereby set an example for emulation by the rest of the world.

Most of the 2.5 million articles published yearly in our planet's 24,000 research journals are inaccessible to a large portion of their potential users worldwide, especially in the developing world. One might think that the reason for this is that no research institution can afford to subscribe to all 24,000 journals and that most can only afford a fraction of them -- and this is true, but it is not the whole story, nor the main part of it: For even if all those journals were sold at cost -- not a penny of profit -- they would still remain unaffordable for many of the research institutions worldwide. The only way to make all those articles accessible to all their potential users is to provide “Open Access” to them on the Web, so anyone can access and use them, anywhere in the world, at any time, for free.

One could have said the same of food, medicine, and all other human essentials, of course, but one cannot eat digital food or cure diseases with strings of 0's and 1's. Nor, alas, are all the producers of digital products -- let alone of physical food or medicine -- interested in giving away their products for free. So what makes research different (if it is different) and why is it urgent for all of its potential users to have access to it?

Research is the source from which future improvements in the quality, quantity and availability of food, medicine, technology, and all other potential benefits to mankind will come, if it is to come at all. And researchers -- unlike the producers of commercial products -- give their findings away: Unlike writers or journalists, researchers do not seek or get fees or royalties for their articles. They give them to their journals for free, and they even mail (and these days email) free copies to any potential user who writes to ask for one.

Why do researchers give their articles away for free? Partly for the same reason they are researchers rather than businessmen: They want to make a contribution to knowledge, to research progress. Partly also because that is the nature of the reward structure of science and scholarship: Research is funded, and researchers are employed and paid, on the strength of their "research impact." This used to mean how much they publish, but these days it also means how much their publications are read, used, and built upon, to generate further research and applications, to the benefit of the tax-paying society that funds their research and their institutions.

And now we can see why researchers give away their articles and why it is so important that all their potential users should be able to access and use them: Because all access-barriers are barriers to research progress and its benefits (as well as to the advancement of researchers' careers and productivity): If you cannot access a research finding, you cannot use, apply or build upon it.

Researchers are not businessmen, but they are not always very practical either. The reason publications need to be counted and rewarded by their employers and funders -- "publish or perish" -- is that otherwise many researchers would just put their findings in a desk drawer and move on to do the next piece of research. (That is part of the price that humanity must pay for nurturing a sector that is curiosity-driven rather than profit-driven.) So, since researchers do need to fund their research and to feed themselves and their families, their publications are counted and then rewarded proportionately. But counting publications is not enough: It has to be determined whether the research was important enough to have been worth doing and publishing in the first place; its "research impact" has to be measured: What was its uptake, usage, influence? How many pieces of further research and applications did it generate? Although the measure is crude, and far richer measures are under development, citation counts -- the number of times an article is cited by other articles -- are an indicator of research impact.

So, along with publications, citations are counted, in paying researchers and funding their research. And recent studies have shown that the citation counts of articles that are freely available on the web (Open Access) are 25%-250% higher than the citation counts of articles that are only available to those researchers whose institutions can afford a subscription to the journal in which they were published.

One would think, in view of these findings, and of the fact that researchers give away their articles anyway, that researchers would all be making their published articles Open Access by now -- by "self-archiving" them in their own institution's online repositories, free for all. Ninety-four percent of journals already endorse self-archiving by their authors. Yet in fact only about 15% of researchers are self-archiving their publications spontaneously today. Perhaps that is about the same percentage of researchers that would be publishing at all, if it were not for the "publish or perish" mandate. So it is obvious what the natural solution is, for research and researchers worldwide, in the online era: the existing publish-or-perish mandate has to be extended to make it into a "publish and self-archive" mandate.

International surveys have shown that 95% of researchers would comply with a self-archiving mandate. This has since been confirmed by seven research institutions worldwide (two in Australia, two in Switzerland [one of them CERN], one in Portugal, one in the UK and one in India [National Institute of Technology, Rourkela]) that have already mandated self-archiving: their self-archiving rates are indeed rapidly climbing from the 15% baseline towards 100%.

But those are spontaneous institutional mandates, and there are only seven of them so far. There are also a few systematic national mandates: four of the eight UK research funding councils and the Wellcome Trust have now mandated self-archiving. And there are several other national proposals to mandate self-archiving, by the European Commission, a Canadian research council (CIHR) and all of the major US funding agencies (FRPAA).

There is no need, however, for developing countries to wait for the developed countries to mandate self-archiving. Developing countries have even more to gain -- for the impact of their own research on the research of others and for their own access to the research of others – because currently both their access and their impact are disproportionately low, relative to their actual and potential research productivity and influence.

In the past few years there have been many abstract avowals of support for the Principle of Open Access (e.g., the Bethesda and Berlin and Valparaiso and Goettingen and Scottish and Buenos Aires and Messina and Vienna and Salvador and WSIS and Riyadh Declarations), but these have all merely declared that providing Open Access is a "good thing" and "should be done" -- without saying exactly what should be done, and without committing themselves to doing it!

This is rather as if there were a global warming problem, and region after region kept making pious pronouncements to the effect that "something should be done about the global warming problem" instead of affirming that they have actually implemented a concrete emission policy locally, and are now inviting others to do likewise.

What the whole world needs now is concrete commitments to the Practice of Open Access. Under the guidance of India’s tireless Open Access advocate, Subbiah Arunachalam, there will be a two-day workshop on research publication and Open Access at the Indian Institute of Science in Bangalore on November 2-3, at which representatives from the three most research-active developing countries – India, China and Brazil – will confer in order to frame the “Bangalore Commitment”: a commitment to mandate Open Access self-archiving in their own respective countries and thereby set an example for emulation by the rest of the world: “Self-archive unto others as you would have others self-archive unto you.”

Stevan Harnad

American Scientist Open Access Forum

Saturday, October 14. 2006

Canada's SSHRC Just Keeps Spinning Its Wheels

Comments on Richard Akerman's blogged notes on David Moorman's SSHRC talk at Institutional Repositories: The Next Generation (Ottawa, October 10, 2006)

"SSHRC has embraced OA in principle, but [it's] a big challenge going from principle to action"

Five out of the eight UK Research Councils (BBSRC, CCLRC, EPSRC, MRC, NERC) nevertheless seem to have managed to go from principle to action...

"Does SSHRC have a policy? No. There is more to this than just mandating OA."

Four out of the eight UK Research Councils (BBSRC, EPSRC, MRC, NERC) nevertheless seem to have managed to mandate it, and Canada's CIHR seems to have managed to propose to mandate it...

"Figure out how to support OA journals. Conducting experiments to figure out best approach."

Why is SSHRC fussing about supporting OA journals instead of mandating the self-archiving of SSHRC research output? Is SSHRC a research funder or a journal funder? The OA mandate pertains to the former, not the latter: to maximizing the access and impact of SSHRC research output, not to the budgeting of SSHRC's journal subsidies. Journals SSHRC may happen to be subsidising have nothing to do with the mandate in question.

"look at University of California system"

Why not look instead at a system with a mandate, hence one that works -- as voluntarism, demonstrably, does not?

"[Create an] SSHRC IR?"

An SSHRC Central Repository (CR)? What on earth for? Mandate that SSHRC fundees self-archive their SSHRC-funded research output in their own institutions' IRs. SSHRC does not need a CR of its own in the distributed, interoperable OAI age.

"Official Languages Act - websites must be in both languages: how to handle research? 15,000 objects would have to be translated every year"

This is such nonsense as to take one's breath away: Can SSHRC not have a library? Are only bilingual or translated journals admissible? Does SSHRC stipulate which language(s) its fundees must publish in? (Besides, SSHRC does not need a CR of its own: Just mandate self-archiving in the fundees' own institutions' IRs.)

"grant[s] can't have post-award conditions, e.g. can't require article deposit"

Nonsense. If publishing the research in a peer-reviewed journal can be a grant fulfillment condition, so can self-archiving the article.

SSHRC is spinning its wheels; it just keeps citing problems in principle while others are busily putting OA into practice.

Stevan Harnad

"SSHRC Open Access Consultation (Canada)" (Aug 2005)

Replies for SSHRC Consultation on Open Access (Nov 2005)

Canada's SSHRC lacks leader, hence leadership, on OA (May 2006)

The Special Case of Astronomy

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

SUMMARY: Astronomy is unusual among research fields in that all research-active astronomers already have full online access to all relevant journal articles via institutional subscriptions (because astronomy has only a small closed circle of core journals). Many astronomy articles are also self-archived as preprints prior to peer review and publication, but usage all shifts to the published version as soon as it is available. Self-archiving, even where it is at or near 100%, has no effect at all on subscriptions or cancellations. The Open Access (OA) citation advantage hence reduces to merely an "Early Access Advantage" in astronomy, because all postprints are accessible to everyone. There is also the much-reported positive correlation between the citation counts of articles and the proportion of them that were self-archived. This is no doubt partly a self-selection effect or "Quality Bias" -- with the better articles more likely to be self-archived. But this is unlikely to be all or most of the source of the OA advantage even in astronomy -- let alone in most other fields, where the postprints are not all accessible to all active researchers. The most important component of the OA advantage in general is that OA removes the access and usage barriers that prevent the better work from having its full potential impact (Quality Advantage). In astronomy, where those access barriers hardly exist, there is still a measurable OA advantage, but mostly just because of Early Advantage (and self-selection). With all postprints accessible, Competitive Advantage is restricted to the prepublication phase; Usage Advantage (downloads) can be estimated: downloads are doubled by universal online accessibility. And the Quality Advantage no doubt persists (though it is difficult to estimate independently).

Michael Kurtz (Harvard-Smithsonian Center for Astrophysics) has provided some (as always) very interesting and informative data on the special case of research access and self-archiving practices in Astronomy. His data show that:

(1) In astronomy, where all active, publishing researchers already have online access to all relevant journal articles (a very special case!), researchers all use the versions "eprinted" (self-archived) in Arxiv first, because those are available first; but they all switch to using the journal version, instead of the self-archived one, as soon as the journal version is available.

That is interesting, but hardly surprising, in view of the very special conditions of astronomy: If I only had access to a self-archived preprint or postprint first, I'd use that, faute de mieux. And as soon as the official journal version was accessible -- assuming that it's equally accessible -- I'd use that.

But these conditions -- (i) open accessibility of the eprint before publication, (ii) in one longstanding central repository (Arxiv), for many and in some cases most papers, and (iii) open accessibility of the journal version of all papers upon publication -- are simply not representative of most other fields! In most other fields, (i') only about 15% of papers are available early as preprints or postprints, (ii') they are self-archived in distributed IRs and websites, not one central one (Arxiv), and (iii') the journal versions of many papers are not accessible at all to many of the researchers after publication.

That's a very different state of affairs (outside astronomy and some areas of physics).

(2) Kurtz's data showing that astronomy journals are not cancelled despite 100% OA are very interesting, but they too follow almost tautologically from (1): If virtually all researchers have access to the journal version, and virtually all of them prefer to use that rather than the eprint, it stands to reason that the journals are not being cancelled! (What is cause and what is effect there is another question -- i.e., whether preference is driving subscriptions or subscriptions are driving preference.)

(3) In astronomy, as indicated by Kurtz, there is a small, closed circle of core journals, and all active researchers worldwide already have access to all of them. But in many other fields there is not a closed circle of core journals, and/or not all researchers have access. Hence access to a small set of core journals is not a precondition for being an active researcher in many fields -- which does not mean that lacking that access does not weaken the research (and that is the point!).

(4) I agree completely that there is a component of self-selection Quality Bias (QB) in the correlation between self-archiving and citations. The question is (4a) how much of the higher citation count for self-archived articles is due to QB (as opposed to Early Advantage, Competitive Advantage, Quality Advantage, Usage Advantage, and Arxiv (Central) Bias)? And (4b) does self-selection QB itself have any causal consequences (or are authors doing it purely superstitiously, since it has no causal effects at all)? The effects of course need not be felt in citations; they could be felt in downloads (usage) or in other measures of impact (co-citations, influence on research direction, funding, fame, etc.).

The most important thing to bear in mind is that it would be absurd to imagine that somehow OA guarantees a quality-blind linear increment to the usage of any article, regardless of its quality. It is virtually certain that OA will benefit the better articles more, because they are more worth using and trying to build upon, hence more handicapped by access-barriers (which do exist in fields other than astro). That's QA, not QB. No amount of accessibility will help unciteable papers get used and cited. And most papers are uncited, hence probably unciteable, no matter how visible and accessible you may try to make them!

(5) I think we agree that the basic challenge in assessing causality here is that we have a positive correlation (between proportion of papers self-archived and citation-counts) but we need to analyze the direction of the causation. The fact that more-cited papers tend to be self-archived more, and less-cited papers less, is merely a restatement of the correlation, not a causal analysis of it: The citations, after all, come after the self-archiving, not before!

The only methodologically irreproachable way to test causality would be to randomly choose a (sufficiently large, diverse, and representative) sample of N papers at the time of acceptance for publication (postprints -- no previous preprint self-archiving) and randomly impose self-archiving on N/2 of them, and not on the other N/2. That way we have random selection and not self-selection. Then we count citations for about 2-3 years, for all the papers, and compare them.
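The logic of the randomized design can be illustrated with a toy simulation. Everything in it is an assumption for illustration only: the latent "quality" scores, their skewed (exponential) distribution, and the hypothetical `OA_EFFECT` multiplier are invented, not measured. The sketch contrasts random assignment of self-archiving with extreme self-selection, in which only the better half of the papers is self-archived (pure Quality Bias).

```python
import random
from statistics import mean

# Toy model (an illustrative assumption, not real data): each paper has a
# latent "quality" score, and its citation count is that quality, multiplied
# by a hypothetical OA_EFFECT if the paper is self-archived.
OA_EFFECT = 1.5


def citations(quality, archived):
    return quality * (OA_EFFECT if archived else 1.0)


def randomized_ratio(qualities, seed=0):
    """Impose self-archiving on a random half of the papers and return
    mean citations (archived) / mean citations (not archived)."""
    rng = random.Random(seed)
    order = list(range(len(qualities)))
    rng.shuffle(order)
    half = len(order) // 2
    archived = [citations(qualities[i], True) for i in order[:half]]
    not_archived = [citations(qualities[i], False) for i in order[half:]]
    return mean(archived) / mean(not_archived)


def self_selected_ratio(qualities):
    """Extreme Quality Bias: only the better half of the papers is
    self-archived, so the ratio mixes the OA effect with the quality gap."""
    ranked = sorted(qualities, reverse=True)
    half = len(ranked) // 2
    archived = [citations(q, True) for q in ranked[:half]]
    not_archived = [citations(q, False) for q in ranked[half:]]
    return mean(archived) / mean(not_archived)


rng = random.Random(42)
qualities = [rng.expovariate(1.0) for _ in range(10_000)]  # skewed, like citations
print(f"random assignment: {randomized_ratio(qualities):.2f}")   # near OA_EFFECT
print(f"self-selection:    {self_selected_ratio(qualities):.2f}")  # much larger
```

Under random assignment the ratio recovers roughly the assumed multiplier; under self-selection it is far larger, because it confounds the OA effect with the quality difference between the two halves. Because the multiplier is proportional, better papers also gain more citations in absolute terms, a crude stand-in for Quality Advantage as distinct from Quality Bias.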

No one will do that study, but an approximation to it can be done (and we are doing it) by comparing (a) citation counts for papers that are self-archived in IRs that have a self-archiving mandate with (b) citation counts for papers in IRs without mandates and with (c) papers (in the same journal and year) that are not self-archived.

It is not a perfect method: there are problems with small Ns, short available time-windows, and admixtures of self-selection and imposed self-archiving even with mandates. But it is an approximation nonetheless. And other metrics -- downloads, co-citations, hub/authority scores, endogamy scores, growth-rates, funding, etc. -- can be used to triangulate and disambiguate. Stay tuned.
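The three-way comparison can be sketched as a within-stratum calculation: for each journal and year, divide the mean citations of mandated and of unmandated self-archived papers by the mean citations of papers in the same journal and year that were not self-archived. The record layout, the group labels, and the use of simple ratios of means are assumptions for illustration; the toy records are invented.

```python
from collections import defaultdict
from statistics import mean
from typing import NamedTuple


class Paper(NamedTuple):
    journal: str
    year: int
    group: str  # hypothetical labels: "mandated", "unmandated", "not_archived"
    citations: int


def within_stratum_ratios(papers):
    """Within each (journal, year) stratum, divide the mean citation count of
    mandated and of unmandated self-archived papers by the mean of the papers
    in the same stratum that were not self-archived."""
    strata = defaultdict(lambda: defaultdict(list))
    for p in papers:
        strata[(p.journal, p.year)][p.group].append(p.citations)

    ratios = {}
    for stratum, groups in strata.items():
        baseline = groups.get("not_archived")
        if not baseline or mean(baseline) == 0:
            continue  # no usable comparison group in this stratum
        base = mean(baseline)
        ratios[stratum] = {
            g: mean(groups[g]) / base
            for g in ("mandated", "unmandated")
            if groups.get(g)
        }
    return ratios


# Invented records, only to show the call:
toy = [
    Paper("J1", 2004, "mandated", 6), Paper("J1", 2004, "mandated", 4),
    Paper("J1", 2004, "unmandated", 8),
    Paper("J1", 2004, "not_archived", 2), Paper("J1", 2004, "not_archived", 4),
]
print(within_stratum_ratios(toy))
```

The reasoning behind the comparison, roughly: mandated self-archiving is imposed rather than chosen, so it is closer to the random assignment described above, and an advantage for mandated papers comparable to that for unmandated ones would be hard to attribute mostly to self-selection. Strata with only a few papers make these ratios noisy, which is the small-N problem noted above.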

Now some comments:

On Tue, 10 Oct 2006, Michael Kurtz wrote:

"Recently Stevan has copied me on two sets of correspondence concerning the OA citation advantage; I thought I would just briefly respond to both.

"Besides our IPM article: http://adsabs.harvard.edu/abs/2005IPM....41.1395K we have recently published two short papers, both with graphs you might find interesting.

"The preprint will appear in Learned Publishing http://adsabs.harvard.edu/abs/2006cs........9126H E-prints and Journal Articles in Astronomy: a Productive Co-existence

"and this is in the J. Electronic Publishing http://adsabs.harvard.edu/abs/2006JEPub...9....2H Effect of E-printing on Citation Rates in Astronomy and Physics

"There is a point I would like to emphasize from these papers. Figure 2 of the Learned Publishing paper shows that the number of ADS users who read the preprint version once the paper has been released drops to near zero. This shows that essentially every astronomer has subscriptions to the main journals, as ADS treats both the arXiv links and the links to the journals equally; also it shows that astronomers prefer the journals."

And it also shows how anomalous Astronomy is, compared to other fields, where it is certainly not true that every researcher has subscriptions to the main journals...

"Figure 5 of the J Electronic Publishing paper also shows that there is no effect of cost on the OA reads (and thus by extension citation) differential. Note in the plot that there is no change in slope for the obsolescence function of the reads (either of preprinted or non-preprinted) at 36 months. At 36 months the 3 year moving wall allows the papers to be accessed by everyone, this shows clearly that there is no cost effect portion of the OA differential in astronomy. This confirms the conclusion of my IPM article."

And it underscores, again, how unrepresentative astronomy is of research as a whole.

"Citations are probably the least sensitive measure to see the effects of OA. This is because one must be able to read the core journals in order to write a paper which will be published by them. It is really not possible for a person who has not been regularly reading journal articles on, say, nuclear physics, to suddenly be able to write one, and cite the OA articles which enabled that writing. It takes some time for a body of authors who did not previously have access to form and write acceptable papers."

That is true in astronomy, where the core journals are few and form a closed circle, and all active researchers have access to them. But it is not true of research as a whole, across disciplines (or around the world). Researchers in most fields are no doubt handicapped by having less than full access, but that does not prevent them from doing and publishing research altogether.

"Any statistical analysis of the causal/bias distinction must take into account the actual distribution of citations among articles. This is why I made the monte carlo analysis in the IPM paper. As a quick example for papers published in the Astrophysical Journal in 2003: The most cited 10% have 39% of all citations, and are 96% in the arXiv; the lowest cited 10% have 0.7% of all citations and are 29% in the arXiv. Showing the causal hypothesis is true will be very difficult under these conditions."

(i) Since all of the published postprints in all these journals are accessible to all research-active astronomers as of their date of publication, we are of necessity speaking here mostly about an Early Access effect (preprints). Most of the other components of the Open Access Advantage (Competitive Advantage, Usage Advantage, Quality Advantage) are minimized here by the fact that everything in astronomy is OA from the date of publication onward. The remaining components are either Arxiv-specific (the Arxiv Bias -- the tradition of archiving and hence searching in one central repository) or self-selection [Quality Bias] influencing who does and does not self-archive early, with their prepublication preprint.

Since most fields don't post pre-refereeing preprints at all, this comparison is mostly moot. For most fields, the question about citation advantage concerns the postprint only, and as of the date of acceptance for publication, not before.

(ii) In other fields too, there is the very same correlation between citation counts and percentage self-archived, but it is based on postprints, self-archived at publication, not pre-refereeing preprints self-archived much earlier. And, most important, it is not true in these fields that the postprint is accessible to all researchers via subscription: Many potential users cannot access the article at all if it is not self-archived -- and that is the main basis for the OA impact advantage.
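Kurtz's Astrophysical Journal figures (the most cited 10% of 2003 papers hold 39% of all citations and are 96% in the arXiv; the least cited 10% hold 0.7% and are 29% in the arXiv) are decile summaries of per-paper data. A minimal sketch of how such a summary can be computed follows, assuming a hypothetical input of (citation count, in arXiv) pairs; the toy numbers are invented and are not Kurtz's data.

```python
def decile_summary(papers):
    """papers: list of (citation_count, in_arxiv) pairs.

    Returns two (citation_share, fraction_in_arxiv) pairs: one for the
    most-cited 10% of papers and one for the least-cited 10%. Ties at the
    cut-off are resolved by input order (Python's sort is stable)."""
    ranked = sorted(papers, key=lambda p: p[0], reverse=True)
    k = max(1, len(ranked) // 10)
    total = sum(c for c, _ in ranked)
    if total == 0:
        raise ValueError("no citations to apportion")

    def summarize(group):
        share = sum(c for c, _ in group) / total
        in_arxiv = sum(1 for _, a in group if a) / len(group)
        return share, in_arxiv

    return summarize(ranked[:k]), summarize(ranked[-k:])


# Ten invented papers (citations, in arXiv?), totalling 100 citations:
toy = [(40, True), (20, True), (10, True), (8, False), (6, True),
       (5, False), (4, True), (3, False), (3, True), (1, False)]
print(decile_summary(toy))
```

A summary like this restates the correlation between citations and self-archiving; as argued above, it cannot by itself say which way the causation runs.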

"Perhaps the journal which is most sensitive to cancellations due to OA archiving is Nuclear Physics B; it is 100% in arXiv, and is very expensive. I have several times seen librarians say that they would like to cancel it. One effect of OA on Nuclear Physics B is that its impact factor (as we measure it, I assume ISI gets the same thing) has gone up, just as we show in the J E Pub paper for Physical Review D. Whether Nuclear Physics B has been cancelled more than Nuclear Physics A or Physics Letters B must be well known at Elsevier."

It is an interesting question whether NPB is being cancelled, but if it is, it is clearly not because of self-archiving, nor because of astronomy's special "universal paid OA" to the published version: if NPB is being cancelled, it is for the usual reason, namely that it is not good enough to justify its share of the institution's journal budget.

Harnad, S. (2005) OA Impact Advantage = EA + (AA) + (QB) + QA + (CA) + UA

Stevan Harnad

American Scientist Open Access Forum

The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.

The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access Provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)
