Wednesday, December 17. 2014

The Only Substitute for Metrics is Better Metrics

Comment on: Mryglod, Olesya, Ralph Kenna, Yurij Holovatch and Bertrand Berche (2014) Predicting the results of the REF using departmental h-index: A look at biology, chemistry, physics, and sociology. LSE Impact Blog 12(6)

"The man who is ready to prove that metaphysical knowledge is wholly impossible… is a brother metaphysician with a rival theory” Bradley, F. H. (1893) Appearance and Reality


The topic of using metrics for research performance assessment in the UK has a rather long history, beginning with the work of Charles Oppenheim. The solution is neither to abjure metrics nor to pick and stick to one unvalidated metric, whether it’s the journal impact factor or the h-index.

The solution is to jointly test and validate, field by field, a battery of multiple, diverse metrics (citations, downloads, links, tweets, tags, endogamy/exogamy, hubs/authorities, latency/longevity, co-citations, co-authorships, etc.) against a face-valid criterion (such as peer rankings).
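As a rough illustration of what "jointly test and validate" could mean in practice, the sketch below regresses peer rankings on a battery of metrics within a single field and reports the fitted weights and the proportion of variance explained (R²). Everything here is an assumption for illustration: the function names, the toy data, and the use of plain least-squares regression. A real validation would use many more units per field, standardized metrics, and out-of-sample checks.

```python
# Sketch: validating a battery of metrics against peer rankings, one field at a time.
# Assumptions: plain ordinary least squares, toy data, hypothetical function names.


def solve_linear_system(a, b):
    """Solve a x = b for a square matrix a by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def validate_battery(metric_rows, peer_scores):
    """Regress peer scores on several metrics jointly.

    metric_rows: one row per unit assessed (e.g. a department), one column per metric.
    Returns (weights, r_squared); weights[0] is the intercept.
    """
    X = [[1.0] + list(row) for row in metric_rows]  # add intercept column
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(X, peer_scores)) for i in range(k)]
    weights = solve_linear_system(xtx, xty)  # solve the normal equations
    predicted = [sum(w * x for w, x in zip(weights, r)) for r in X]
    mean_y = sum(peer_scores) / len(peer_scores)
    ss_res = sum((y - p) ** 2 for y, p in zip(peer_scores, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in peer_scores)
    return weights, 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Toy data for one hypothetical field; metric columns are (citations, downloads).
    fields = {
        "physics": ([(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)],
                    [4.5, 5.5, 10.5, 11.5, 15.5]),
    }
    for name, (rows, peer) in fields.items():
        w, r2 = validate_battery(rows, peer)
        print(name, [round(v, 3) for v in w], round(r2, 3))
```

Because the weights are fitted separately for each field, the same metric can carry a large weight in one discipline and almost none in another, which is the point of validating field by field rather than picking one metric for all.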

See also: "On Metrics and Metaphysics" (2008)

Oppenheim, C. (1996). Do citations count? Citation indexing and the Research Assessment Exercise (RAE). Serials: The Journal for the Serials Community, 9(2), 155-161.

Oppenheim, C. (1997). The correlation between citation counts and the 1992 research assessment exercise ratings for British research in genetics, anatomy and archaeology. Journal of Documentation, 53(5), 477-487.

Oppenheim, C. (1995). The correlation between citation counts and the 1992 Research Assessment Exercise Ratings for British library and information science university departments. Journal of Documentation, 51(1), 18-27.

Oppenheim, C. (2007). Using the h-index to rank influential British researchers in information science and librarianship. Journal of the American Society for Information Science and Technology, 58(2), 297-301.

Harnad, S. (2001) Research access, impact and assessment. Times Higher Education Supplement 1487: p. 16.

Harnad, S. (2003) Measuring and Maximising UK Research Impact. Times Higher Education Supplement. Friday, June 6 2003

Harnad, S., Carr, L., Brody, T. & Oppenheim, C. (2003) Mandated online RAE CVs Linked to University Eprint Archives: Improving the UK Research Assessment Exercise whilst making it cheaper and easier. Ariadne 35.

Hitchcock, Steve; Woukeu, Arouna; Brody, Tim; Carr, Les; Hall, Wendy and Harnad, Stevan. (2003) Evaluating Citebase, an open access Web-based citation-ranked search and impact discovery service Technical Report, ECS, University of Southampton.

Harnad, S. (2004) Enrich Impact Measures Through Open Access Analysis. British Medical Journal BMJ 2004; 329:

Harnad, S. (2006) Online, Continuous, Metrics-Based Research Assessment. Technical Report, ECS, University of Southampton.

Brody, T., Harnad, S. and Carr, L. (2006) Earlier Web Usage Statistics as Predictors of Later Citation Impact. Journal of the American Society for Information Science and Technology (JASIST) 57(8) pp. 1060-1072.

Brody, T., Carr, L., Harnad, S. and Swan, A. (2007) Time to Convert to Metrics. Research Fortnight 17-18.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8 (11) doi:10.3354/esep00088 (Theme Section: The Use And Misuse Of Bibliometric Indices In Evaluating Scholarly Performance)

Harnad, S. (2008) Self-Archiving, Metrics and Mandates. Science Editor 31(2) 57-59

Harnad, S., Carr, L. and Gingras, Y. (2008) Maximizing Research Progress Through Open Access Mandates and Metrics. Liinc em Revista 4(2).

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1). Also in Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1), pp. 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds. (2007)

Harnad, S. (2009) Multiple metrics required to measure research performance. Nature (Correspondence) 457 (785) (12 February 2009)

Harnad, S; Carr, L; Swan, A; Sale, A & Bosc H. (2009) Maximizing and Measuring Research Impact Through University and Research-Funder Open-Access Self-Archiving Mandates. Wissenschaftsmanagement 15(4) 36-41

Wednesday, May 28. 2014

Progressive vs Treadwater Fields


There are many reasons why grumbling about attempts to replicate is unlikely in the physical or even the biological sciences, but the main reason is that in most other sciences research is cumulative: Experimental and observational findings that are worth knowing are those on which further experiments and observations can be built, for an ever fuller and deeper causal understanding of the system under study, whether the solar system or the digestive system. If the finding is erroneous, the attempts to build on it collapse. Cumulative replication is built into the trajectory of research itself -- for those findings that are worth knowing.

In contrast, if no one bothers to build anything on it, chances are that a finding was not worth knowing (and so it matters little whether it would replicate or not, if tested again).

Why is it otherwise in many areas of Psychology? Why do the outcomes of so many one-shot, hit-and-run studies keep being reported in textbooks?

Because so much of Psychology is not cumulative explanatory research at all. It is helter-skelter statistical outcomes that manage to do two things: (1) meet a criterion for statistical significance (i.e., a low probability that they occurred by chance) and (2) lend themselves to an attention-catching interpretation.
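The arithmetic behind "a low probability that they occurred by chance" shows why one-shot significance is a weak filter on its own. The sketch below assumes the conventional significance level of 0.05 (an assumption, not a figure from the text) and independent replication attempts, and counts how many studies of effects that do not in fact exist would still come out "significant".

```python
def expected_chance_findings(n_null_studies, alpha=0.05, replications=0):
    """Expected number of studies of a nonexistent effect that reach significance
    at level `alpha` in the original test AND in each of `replications`
    independent re-tests (each test passes by chance with probability alpha)."""
    return n_null_studies * alpha ** (1 + replications)


if __name__ == "__main__":
    # 1000 hypothetical one-shot studies, none of which is testing a real effect.
    for reps in (0, 1, 2):
        print(reps, "replications:", expected_chance_findings(1000, replications=reps))
```

About 50 of 1,000 such hypothetical studies pass at 0.05; requiring a single independent replication cuts that to about 2.5. Cumulative research applies this filter automatically, because attempts to build on a chance finding fail.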

No wonder that their authors grumble when replicators spoil the illusion.

Yes, open access, open commentary and crowd-sourcing are needed in all fields, for many reasons, but for one reason more in hit-and-run fields.

Friday, October 11. 2013

Spurning the Better to Keep Burning for the Best


Björn Brembs (as interviewed by Richard Poynder) is not satisfied with "read access" (free online access: Gratis OA): he wants "read/write access" (free online access plus re-use rights: Libre OA). The problem is that we are nowhere near having even the read-access that Björn is not satisfied with.

So his dissatisfaction is not only with something we do not yet have, but with something that is also an essential component of, and prerequisite for, read/write access. Björn wants more, now, when we don't even have less.

And alas Björn does not give even a hint of a hint of a practical plan for getting read/write access instead of "just" the read access we don't yet have.

All he proposes is that a consortium of rich universities should cancel journals and take over.

Before even asking what on earth those universities would/should/could do, there is the question of how their users would get access to all those cancelled journals (otherwise this "access" would be even less than less!). Björn's reply -- doubly alas -- uses the name of my eprint-request Button in vain:

The eprint-request Button is only legal, and only works, because authors are providing access to individual eprint requestors for their own articles. If the less-rich universities who were not part of this brave take-over consortium of journal-cancellers were to begin to provide automatic Button-access to all those extra-institutional users, their institutional license costs (subscriptions) would sky-rocket, because their Big-Deal license fees are determined by publishers on the basis of the size of each institution's total usership, which would now include all the users of all the cancelling institutions, on Björn's scheme.

So back to the work-bench on that one.

Björn seems to think that OA is just a technical matter, since all the technical wherewithal is already in place, or nearly so. But in fact, the technology for Green Gratis ("read-only") OA has been in place for over 20 years, and we are still nowhere near having it. (We may, optimistically, be somewhere between 20% and 30%, though certainly not even at the 50% that Science-Metrix has optimistically touted recently as the "tipping point" for OA -- because much of that is post-embargo, hence Delayed Access (DA), not OA.)

Björn also seems to have proud plans for post-publication "peer review" (which is rather like finding out whether the water you just drank was drinkable on the basis of some crowd-sourcing after you drank it).

Post-publication crowd-sourcing is a useful supplement to peer review, but certainly not a substitute for it.

All I can do is repeat what I've had to say so many times across the past 20 years, as each new generation first comes in contact with the access problem, and proposes its prima facie solutions (none of which are new: they have all been proposed so many times that they -- and their fatal flaws -- have each already had their own FAQs for over a decade). The watchword here, again, is that the primary purpose of the Open Access movement is to free the peer-reviewed literature from access-tolls -- not to free it from peer-review. And before you throw out the peer review system, make sure you have a tried, tested, scalable and sustainable system with which to replace it, one that demonstrably yields at least the same quality (and hence usability) as the existing system does.

Till then, focus on freeing access to the peer-reviewed literature such as it is.

And that's read-access, which is much easier to provide than read-write access. None of the Green (no-embargo) publishers are read-write Green: just read-Green. Insisting on read-write would be an excellent way to get them to adopt and extend embargoes, just as the foolish Finch preference for Gold did (and just as Rick Anderson's absurd proposal to cancel Green (no-embargo) journals would do).

And, to repeat: after 20 years, we are still nowhere near 100% read-Green, largely because of phobias about publisher embargoes on read-Green. Björn is urging us to insist on even more than read-Green. Another instance of letting the (out-of-reach) Best get in the way of the (within-reach) Better. And that, despite the fact that it is virtually certain that once we have 100% read-Green, the other things we seek -- read-write, Fair-Gold, copyright reform, publishing reform, perhaps even peer review reform -- will all follow, as surely as day follows night.

But not if we contribute to slowing our passage to the Better (which there is already a tried and tested means of reaching, via institutional and funder mandates) by rejecting or delaying the Better in the name of holding out for a direct sprint to the Best (which no one has a tried and tested means of reaching, other than to throw even more money at publishers for Fool's Gold). Björn's speculation that universities should cancel journals, rely on interlibrary loan, and scrap peer-review for post-hoc crowd-sourcing is certainly not a tried and tested means!

As to journal ranking and citation impact factors: They are not the problem. No one is preventing the use of article- and author-based citation counts in evaluating articles and authors. And although the correlation between journal impact factors and journal quality and importance is not that big, it's nevertheless positive and significant. So there's nothing wrong with libraries using journal impact factors as one of a battery of many factors (including user surveys, usage metrics, institutional fields of interest, budget constraints, etc.) in deciding which journals to keep or cancel. Nor is there anything wrong with research performance evaluation committees using journal impact factors as one of a battery of many factors (alongside article metrics, author metrics, download counts, publication counts, funding, doctoral students, prizes, honours, and peer evaluations) in assessing and rewarding research progress.

The problem is neither journal impact factors nor peer review: The only thing standing between the global research community and 100% OA (read-Green) is keystrokes. Effective institutional and funder mandates can and will ensure that those keystrokes are done. Publisher embargoes cannot stop them: With immediate-deposit mandates, 100% of articles (final, refereed drafts) are deposited in the author's institutional repository immediately upon acceptance for publication. At least 60% of them can be made immediately OA, because at least 60% of journals don't embargo (read-Green) OA; access to the other 40% of deposits can be made Restricted Access, and it is there that the eprint-request Button can provide Almost-OA with one extra keystroke from the would-be user to request it and one extra keystroke from the author to fulfill the request.
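The deposit-and-access logic of an immediate-deposit mandate can be sketched as a small model: every accepted final draft is deposited, and only the access setting depends on whether the publisher embargoes Green OA. The class and method names below are hypothetical (they do not describe any particular repository software), and the two "keystrokes" of the eprint-request Button are represented as two method calls.

```python
from dataclasses import dataclass, field


@dataclass
class Deposit:
    """Final refereed draft, deposited immediately upon acceptance (hypothetical model)."""
    title: str
    author_email: str
    publisher_embargo: bool  # True if the journal embargoes Green (read-only) OA

    @property
    def access(self) -> str:
        # Deposit is unconditional; only the access setting depends on the embargo.
        return "Restricted" if self.publisher_embargo else "OA"


@dataclass
class Repository:
    deposits: list = field(default_factory=list)
    pending_requests: list = field(default_factory=list)

    def deposit(self, item: Deposit) -> None:
        self.deposits.append(item)

    def open_access_share(self) -> float:
        """Fraction of deposits that are immediately OA."""
        oa = sum(1 for d in self.deposits if d.access == "OA")
        return oa / len(self.deposits)

    def request_copy(self, item: Deposit, requester: str) -> str:
        """Keystroke 1: the would-be user clicks the eprint-request Button."""
        if item.access == "OA":
            return "download"  # no request needed
        self.pending_requests.append((item, requester))
        return f"request emailed to {item.author_email}"

    def fulfil(self, item: Deposit, requester: str) -> str:
        """Keystroke 2: the author approves, and a single copy goes to that requester."""
        self.pending_requests.remove((item, requester))
        return f"eprint of '{item.title}' emailed to {requester}"
```

With 6 unembargoed and 4 embargoed deposits, `open_access_share()` gives 0.6: all ten are deposited, six are immediately OA, and the other four are Almost-OA through the request-and-fulfil round trip.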

Once that is done globally, we can leave it to nature (and human nature) to ensure that the "Best" (100% immediate OA, subscription collapse, conversion to Fair Gold, all the re-use rights users need, and even peer-review reform) will soon follow.

But not as long as we continue spurning the Better and just burning for the Best.

Stevan Harnad

Saturday, April 6. 2013

Paid-Gold OA, Free-Gold OA & Journal Quality Standards


Peter Suber has pointed out that "About 50% of articles published in peer-reviewed OA journals are published in fee-based journals" (as reported by Laakso & Bjork 2012). Laakso & Bjork also report that "[12% of] articles published during 2011 and indexed in the most comprehensive article-level index of scholarly articles (Scopus) are available OA through journal publishers... immediately...".

That's 12% immediate Gold-OA for the (already selective) SCOPUS sample. The percentage is still smaller for the more selective Thomson-Reuters/ISI sample.

I think it cannot be left out of the reckoning about paid-Gold OA vs. free-Gold OA that:

(#1) most articles are not published as Gold OA at all today (neither paid-Gold nor free-Gold),

(#2) the articles of the quality that users need and want most are much less likely to be published as Gold OA (whether paid-Gold or free-Gold) today, and, most important,

(#3) the Gold OA articles of the quality that users need and want most today are less likely to be the free-Gold ones than the paid-Gold ones (even though the junk journals on Jeffrey Beall's "predatory" Gold OA journal list are all paid-Gold).

#2 and #3 are hypotheses, but I think they can be tested objectively.

A test for #2 would be to compare the download and citation counts (not the journal impact factors) for Gold OA (including hybrid Gold) articles vs non-Gold subscription journal articles (excluding the ones that have been made Green OA) within the same subject (and language!) area.

A test for #3 would be to compare the download and citation counts (not the journal impact factors) for paid-Gold (including hybrid Gold) vs free-Gold articles within the same subject (and language!) area.
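Both tests amount to the same stratified comparison: group articles by (subject, language) and compare mean download or citation counts only within strata where both groups occur, so that differences between fields and languages are not mistaken for differences between kinds of OA. The sketch below assumes each article is a dictionary with hypothetical keys `subject`, `language`, `oa` (one of the hypothetical labels `paid_gold`, `hybrid_gold`, `free_gold`, `green`, `subscription`) and a count such as `citations` or `downloads`.

```python
from collections import defaultdict
from statistics import mean

PAID_GOLD = {"paid_gold", "hybrid_gold"}  # hypothetical labels; hybrid counts as paid
ALL_GOLD = PAID_GOLD | {"free_gold"}


def within_stratum_differences(articles, in_group_a, in_group_b, measure="citations"):
    """Mean(measure | A) - mean(measure | B) for each (subject, language)
    stratum that contains articles from both groups."""
    strata = defaultdict(lambda: ([], []))
    for art in articles:
        a_vals, b_vals = strata[(art["subject"], art["language"])]
        if in_group_a(art):
            a_vals.append(art[measure])
        elif in_group_b(art):
            b_vals.append(art[measure])
    return {key: mean(a) - mean(b) for key, (a, b) in strata.items() if a and b}


def compare_gold_vs_subscription(articles, measure="citations"):
    """Test for #2: Gold (incl. hybrid) vs non-Gold subscription articles; Green OA excluded."""
    return within_stratum_differences(
        articles, lambda x: x["oa"] in ALL_GOLD, lambda x: x["oa"] == "subscription", measure)


def compare_paid_vs_free_gold(articles, measure="citations"):
    """Test for #3: paid-Gold (incl. hybrid) vs free-Gold articles."""
    return within_stratum_differences(
        articles, lambda x: x["oa"] in PAID_GOLD, lambda x: x["oa"] == "free_gold", measure)
```

Passing `measure="downloads"` runs the same comparison on download counts; strata containing only one of the two groups are dropped rather than compared across fields.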

I mention this because I think just comparing the number of paid-Gold vs. free-Gold journals without taking quality into account could be misleading.

Wednesday, October 24. 2012

Comparing Carrots and Lettuce

"The inexorable rise of open access scientific publishing".


Our (Gargouri, Lariviere, Gingras, Carr & Harnad) estimate (for publication years 2005-2010, measured in 2011, based on articles published in the c. 12,000 journals indexed by Thomson-Reuters ISI) is 35% total OA in the UK (10% above the worldwide total OA average of 25%): This is the sum of both Green and Gold OA. Our sample yields a Gold OA estimate much lower than Laakso & Björk's. Our estimate of about 25% OA worldwide is composed of 22.5% Green plus 2.5% Gold. And the growth rate of neither Gold nor (unmandated) Green is exponential.

There are a number of reasons why neither "carrots vs. lettuce" nor "UK vs. non-UK produce" nor L&B estimates vs. G et al estimates can be compared or combined in a straightforward way.

Please take the following as coming from a fervent supporter of OA, not an ill-wisher, but one who has been disappointed across the long years by far too many failures to seize the day -- amidst surges of "tipping-point" euphoria -- to be ready once again to tout triumph.

First, note that the hubbub is yet again about Gold OA (publishing), even though all estimates agree that there is far less of Gold OA than there is of Green OA (self-archiving), and even though it is Green OA that can be fast-forwarded to 100%: all it takes is effective Green OA mandates (I will return to this point at the end).

So Stephen Curry asks why there is a discrepancy between our (Gargouri et al) estimates of Gold OA -- in the UK and worldwide (c. <5%) -- and the estimates of Laakso & Björk (17%). Here are some of the multiple reasons (several of them already pointed out by Richard van Noorden in his comments too):

1. Thomson-Reuters ISI Subset: Our estimates are based solely on articles in the Thomson-Reuters ISI database of c. 12,000 journals. This database is more selective than the SCOPUS database on which L&B's sample is based. The more selective journals have higher quality standards and are hence the ones that both authors and users prefer.

(Without getting into the controversy about journal citation impact factors, another recent L&B study has shown that the higher the journal's impact factor, the less likely that the journal is Gold OA. -- But let me add that this is now likely to change, because of the perverse effects of the Finch Report and the RCUK OA Policy: Thanks to the UK's announced readiness to divert UK research funds to double-paying subscription journal publishers for hybrid Gold OA, most journals, including the top journals, will soon be offering hybrid Gold OA -- a very pricey way to add the UK's 6% of worldwide research output to the worldwide Gold OA total: The very same effect could be achieved free of extra cost if RCUK instead adopted a compliance-verification mechanism for its existing Green OA mandates.)

2. Embargoed "Gold OA": L&B included in their Gold OA estimates "OA" that was embargoed for a year. That's not OA, and it should certainly not be credited to the total OA for the year of publication -- when it was not yet openly accessible -- but to the following year. By that time, the Green OA embargoes of most journals have already expired. So, again, any OA purchased in this pricey way -- instead of for a few extra cost-free keystrokes by the author, for Green -- is more of a head-shaker than an occasion for heady triumph.
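The accounting rule argued for in point 2 (credit embargoed access to the year in which the full text actually becomes free, not to the year of publication) can be sketched as follows. The input format (publication year, publication month, embargo length in months) is an assumption made for illustration.

```python
from collections import Counter


def year_freely_available(pub_year, pub_month, embargo_months):
    """Calendar year in which the full text first becomes free to read.
    pub_month runs 1-12; embargo_months == 0 means immediate OA."""
    months_since_year_zero = pub_year * 12 + (pub_month - 1) + embargo_months
    return months_since_year_zero // 12


def oa_credited_by_year(articles):
    """Credit each article to the year it actually becomes OA.
    articles: iterable of (pub_year, pub_month, embargo_months) tuples."""
    return Counter(year_freely_available(y, m, e) for y, m, e in articles)


if __name__ == "__main__":
    sample = [(2010, 3, 0), (2010, 6, 12), (2010, 12, 6)]  # hypothetical articles
    print(oa_credited_by_year(sample))
```

Under this rule an article published in 2010 with a 12-month embargo always counts toward the 2011 total, never toward 2010.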

3. 1% Annual Growth: The 1% annual growth of Gold OA is not much headway either, if you do the growth curves for the projected date they will reach 100%! (The more heady Gold OA growth percentages are not Gold OA growth as a percentage of all articles published, but Gold OA growth as a percentage of the preceding year's Gold OA articles.)
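The difference between the two ways of stating growth, and what 1% a year implies, is simple arithmetic. The sketch below assumes, purely for illustration and not as a forecast, that Gold OA's share keeps growing by a constant 1 percentage point a year from the 2.5% Gold estimate for our ISI sample given earlier in this post.

```python
def years_to_reach(target_pct, start_pct, points_per_year):
    """Years for a share growing linearly by `points_per_year` percentage points
    a year to go from start_pct to target_pct."""
    return (target_pct - start_pct) / points_per_year


def relative_growth_pct(prev_share_pct, curr_share_pct):
    """Growth expressed as a percentage of the previous year's share (the 'heady'
    figure), as opposed to growth in percentage points of all articles published."""
    return 100.0 * (curr_share_pct - prev_share_pct) / prev_share_pct


if __name__ == "__main__":
    print(years_to_reach(100.0, 2.5, 1.0))   # years to 100% at 1 point a year
    print(relative_growth_pct(2.5, 3.5))     # the same 1-point gain, stated relatively
```

A one-point rise from 2.5% to 3.5% of all articles can be announced as 40% growth when stated relative to the previous year's Gold total, yet at that pace it would take 97.5 years to reach 100%.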

4. Green Achromatopsia: The relevant data for comparing Gold OA -- both its proportion and its growth rate -- with Green come from a source L&B do not study, namely, institutions with (effective) Green OA mandates. Here the proportions within two years of mandate adoption (60%+) and the subsequent growth rate toward 100% eclipse not only the worldwide Gold OA proportions and growth rate, but also the larger but still unimpressive worldwide Green OA proportions and growth rate for unmandated Green OA (which is still mostly all there is).

5. Mandate Effectiveness: Note also that RCUK's prior Green OA mandate was not an effective one (because it had no compliance verification mechanism), even though it may have increased UK OA (35%) by 10% over the global average (25%).

Stephen Curry: "A cheaper green route is also available, whereby the author usually deposits an unformatted version of the paper in a university repository without incurring a publisher's charge, but it remains to be seen if this will be adopted in practice. Universities and research institutions are only now beginning to work out how to implement the new policy (recently clarified by the RCUK)."

Well, actually RCUK has had Green OA mandates for over a half-decade now. But RCUK has failed to draw the obvious conclusion from its pioneering experiment -- which is that the RCUK mandates require an effective compliance-verification mechanism (of the kind that the effective university mandates have -- indeed, the universities themselves need to be recruited as the compliance-verifiers).

Instead, taking their cue from the Finch Report -- which in turn took its cue from the publisher lobby -- RCUK is doing a U-turn from its existing Green OA mandate, and electing to double-pay publishers for Gold instead.

A much more constructive strategy would be for RCUK to build on its belated grudging concession (that although Gold is RCUK's preference, RCUK fundees may still choose Green) by adopting an effective Green OA compliance verification mechanism. That (rather than the obsession with how to spend "block grants" for Gold) is what the fundees' institutions should be recruited to do for RCUK.

6. Discipline Differences: The main difference between the Gargouri, Lariviere, Gingras, Carr & Harnad estimates of average percent Gold in the ISI sample (2.5%) and the Laakso & Bjork estimates (10.3% for 2010) probably arises because L&B's sample included all ISI articles per year for 12 years (2000-2011), whereas ours was a sample of 1300 articles per year, per discipline, separately, for each of 14 disciplines, for 6 years (2005-2010: a total of about 100,000 articles).

7. Biomedicine Preponderance? Our sample was much smaller than L&B's because L&B were just counting total Gold articles, using DOAJ, whereas we were sending out a robot to look for Green OA versions on the Web for each of the 100,000 articles in our sample. It may be this equal sampling across disciplines that leads to our lower estimates of Gold: L&B's higher estimate may reflect the fact that certain disciplines are both more Gold and publish more articles (in our sample, Biomed was 7.9% Gold). Note that both studies agree on the annual growth rate of Gold (about 1%).
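The sampling effect suggested in points 6 and 7 can be illustrated with two made-up disciplines: when one discipline is both more Gold and far more prolific, pooling all articles gives a higher Gold share than averaging per-discipline shares with equal weight, as sampling the same number of articles per discipline does. The 7.9% Biomed figure is from our sample; the other share and both article counts are hypothetical.

```python
def pooled_gold_share(disciplines):
    """Gold share when all articles are pooled, i.e. weighted by output.
    disciplines: dict name -> (number_of_articles, gold_fraction)."""
    total = sum(n for n, _ in disciplines.values())
    return sum(n * share for n, share in disciplines.values()) / total


def equal_weight_gold_share(disciplines):
    """Mean of per-discipline Gold shares, each discipline weighted equally
    (as when the same number of articles is sampled per discipline)."""
    return sum(share for _, share in disciplines.values()) / len(disciplines)


if __name__ == "__main__":
    example = {
        "biomed": (900, 0.079),  # 7.9% Gold as in our sample; article count hypothetical
        "other": (100, 0.010),   # hypothetical low-Gold discipline
    }
    print(round(pooled_gold_share(example), 4), round(equal_weight_gold_share(example), 4))
```

With these hypothetical counts the pooled estimate is 7.21% and the equal-weight estimate 4.45%, from exactly the same per-discipline Gold rates.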

8. Growth Spurts? Our projection does not assume a linear year-to-year growth rate (1%); it detects it. There have so far been no detectable annual growth spurts (of either Gold or Green). (I agree, however, that Finch/RCUK could herald one forthcoming annual spurt of 6% Gold (the UK's share of world research output) -- but that would be a rather pricey (and, I suspect, unscalable and unsustainable) one-off growth spurt.)
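One simple way to let the data, rather than an assumption, decide between steady linear growth (a constant number of percentage points a year) and exponential growth (a constant relative rate) is to fit both and compare the residual error on the original scale. This is a sketch for illustration, not necessarily the method used in our study, and the yearly series in the example is hypothetical.

```python
import math


def least_squares_line(xs, ys):
    """Fit y = a + b*x by ordinary least squares; return (slope, intercept, SSE)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    sse = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    return b, a, sse


def compare_growth_models(years, pcts):
    """Residual error of a linear model vs an exponential model (fitted as a
    straight line to log-share, then evaluated back on the percentage scale)."""
    _, _, sse_linear = least_squares_line(years, pcts)
    b, a, _ = least_squares_line(years, [math.log(p) for p in pcts])
    sse_exp = sum((p - math.exp(a + b * y)) ** 2 for y, p in zip(years, pcts))
    return {"linear_sse": sse_linear, "exponential_sse": sse_exp}


if __name__ == "__main__":
    years = [2005, 2006, 2007, 2008, 2009, 2010]
    gold_pct = [1.9, 3.1, 4.0, 4.9, 6.1, 7.0]  # hypothetical yearly Gold shares (%)
    print(compare_growth_models(years, gold_pct))
```

The model with the clearly smaller residual error is the one the series supports; with only a handful of years, a formal comparison would also need to account for noise.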

9. RCUK Compliance Verification Mechanism for Green OA Deposits: I certainly hope Stephen Curry is right that I am overstating the ambiguity of the RCUK policy!

But I was not at all reassured at the LSHTM meeting on Open Access by Ben Ryan's rather vague remarks about monitoring RCUK mandate compliance, especially compliance with Green. After all that (and not the failure to prefer and fund Gold) was the main weakness of the prior RCUK OA mandate.

Stevan Harnad

Saturday, April 2. 2011

"The Sole Methodologically Sound Study of the Open Access Citation Advantage(!)"

It is true that downloads of research findings are important. They are being measured, and the evidence of the open-access download advantage is growing. See:

S. Hitchcock (2011) "The effect of open access and downloads ('hits') on citation impact: a bibliography of studies"

A. Swan (2010) "The Open Access citation advantage: Studies and results to date"

B. Wagner (2010) "Open Access Citation Advantage: An Annotated Bibliography"

But the reason it is the open-access citation advantage that is especially important is that refereed research is conducted and published so it can be accessed, used, applied and built upon in further research: Research is done by researchers, for uptake by researchers, for the benefit of the public that funds the research. Both research progress and researchers' careers and funding depend on research uptake and impact.

The greatest growth potential for open access today is through open access self-archiving mandates adopted by the universal providers of research: the researchers' universities, institutions and funders (e.g., Harvard and MIT). See the ROARMAP registry of open-access mandates.

Universities adopt open access mandates in order to maximize their research impact. The large body of evidence, in field after field, that open access increases citation impact, helps motivate universities to mandate open access self-archiving of their research output, to make it accessible to all its potential users -- rather than just those whose universities can afford subscription access -- so that all can apply, build upon and cite it. (Universities can only afford subscription access to a fraction of research journals.)

The Davis study lacks the statistical power to show what it purports to show, which is that the open access citation advantage is not causal, but merely an artifact of authors self-selectively self-archiving their better (hence more citable) papers. Davis's sample size was smaller than many of the studies reporting the open access citation advantage. Davis found no citation advantage for randomized open access. But that does not demonstrate that open access is a self-selection artifact -- in that study or any other study -- because Davis did not replicate the widely reported self-archiving advantage either, and that advantage is often based on far larger samples. So the Davis study is merely a small non-replication of a widely reported outcome. (There are a few other non-replications; but most of the studies to date replicate the citation advantage, especially those based on bigger samples.)

Davis says he does not see why the inferences he attempts to make from his results -- that the reported open access citation advantage is an artifact, eliminated by randomization, that there is hence no citation advantage, which implies that there is no research access problem for researchers, and that researchers should just content themselves with the open access download advantage among lay users and forget about any citation advantage -- are not welcomed by researchers.

These inferences are not welcomed because they are based on flawed methodology and insufficient statistical power and yet they are being widely touted -- particularly by the publishing industry lobby (see the spin FASEB is already trying to put on the Davis study: "Paid access to journal articles not a significant barrier for scientists"!) -- as being the sole methodologically sound test of the open access citation advantage! Ignore the many positive studies. They are all methodologically flawed. The definitive finding, from the sole methodologically sound study, is null. So there's no access problem, researchers have all the access they need -- and hence there's no need to mandate open access self-archiving.

No, this string of inferences is not a "blow to open access" -- but it would be if it were taken seriously.

What would be useful and opportune at this point would be meta-analysis.

Stevan Harnad

American Scientist Open Access Forum

EnablingOpenScholarship

The Sound of One Hand Clapping

Suppose many studies report that cancer incidence is correlated with smoking and you want to demonstrate in a methodologically sounder way that this correlation is not caused by smoking itself, but just an artifact of the fact that the same people who self-select to smoke are also the ones who are more prone to cancer. So you test a small sample of people randomly assigned to smoke or not, and you find no difference in their cancer rates. How can you know that your sample was big enough to detect the repeatedly reported correlation at all unless you test whether it's big enough to show that cancer incidence is significantly higher for self-selected smoking than for randomized smoking?

Many studies have reported a statistically significant increase in citations for articles whose authors make them OA by self-archiving them. To show that this citation advantage is not caused by OA but just a self-selection artifact (because authors selectively self-archive their better, more citeable papers), you first have to replicate the advantage itself, for the self-archived OA articles in your sample, and then show that that advantage is absent for the articles made OA at random. But Davis showed only that the citation advantage was absent altogether in his sample. The most likely reason for that is that the sample was much too small (36 journals, 712 articles randomly OA, 65 self-archived OA, 2533 non-OA).

In a recent study (Gargouri et al 2010) we controlled for self-selection using mandated (obligatory) OA rather than random OA. The far larger sample (1984 journals, 3055 articles mandatorily OA, 3664 self-archived OA, 20,982 non-OA) revealed a statistically significant citation advantage of about the same size for both self-selected and mandated OA.
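How small is too small? A rough power calculation makes the point concrete. The sketch below uses a normal approximation for a two-sided two-sample test and a hypothetical standardized effect size of 0.1; both are assumptions (citation counts are highly skewed, and the true size of the OA advantage is not known), so the results indicate orders of magnitude only. The group sizes are the ones given above for Davis's sample and for Gargouri et al (2010).

```python
from statistics import NormalDist


def approx_power(n1, n2, effect_size, alpha=0.05):
    """Approximate power of a two-sided two-sample z-test to detect a
    standardized mean difference `effect_size` between groups of size n1 and n2."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size * (n1 * n2 / (n1 + n2)) ** 0.5
    # Probability that the test statistic falls in either rejection region.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


if __name__ == "__main__":
    d = 0.1  # hypothetical small effect, in standard-deviation units
    comparisons = {
        "Davis: self-archived vs non-OA": (65, 2533),
        "Davis: randomized OA vs non-OA": (712, 2533),
        "Gargouri et al: self-archived vs non-OA": (3664, 20982),
    }
    for label, (n_oa, n_non) in comparisons.items():
        print(f"{label}: power ~ {approx_power(n_oa, n_non, d):.2f}")
```

Under these assumptions the 65-article self-archived group would detect an effect of this size only a small fraction of the time, so failing to find the self-archiving advantage there says little, whereas the much larger sample would detect it almost every time.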

If and when Davis's requisite self-selected self-archiving control is ever tested, the outcome will either be (1) the usual significant OA citation advantage in the self-archiving control condition that most other published studies have reported -- in which case the absence of the citation advantage in Davis's randomized condition would indeed be evidence that the citation advantage had been a self-selection artifact that was then successfully eliminated by the randomization -- or (more likely, I should think) (2) no significant citation advantage will be found in the self-archiving control condition either, in which case the Davis study will prove to have been just one non-replication of the usual significant OA citation advantage (perhaps because of Davis's small sample size, the fields, or the fact that most of the non-OA articles become OA on the journal's website after a year). (There have been a few other non-replications; but most studies replicate the OA citation advantage, especially the ones based on larger samples.)

Until that requisite self-selected self-archiving control is done, this is just the sound of one hand clapping.

Readers can be trusted to draw their own conclusions as to whether Davis's study, tirelessly touted as the only methodologically sound one to date, is that -- or an exercise in advocacy.

Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research (2010) PLOS ONE 5 (10) (authors: Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S.)

Thursday, March 31. 2011

On Methodology and Advocacy: Davis's Randomization Study of the OA Advantage

Open access, readership, citations: a randomized controlled trial of scientific journal publishing. doi:10.1096/fj.11-183988

Sorry to disappoint! Nothing new to cut-and-paste or reply to:

Philip M. Davis: "Published today in The FASEB Journal we report the findings of our randomized controlled trial of open access publishing on article downloads and citations. This study extends a prior study of 11 journals in physiology (Davis et al, BMJ, 2008) reported at 12 months to 36 journals covering the sciences, social sciences and humanities at 3yrs. Our initial results are generalizable across all subject disciplines: open access increases article downloads but has no effect on article citations... You may expect a routine cut-and-paste reply by S.H. shortly... I see the world as a more complicated and nuanced place than through the lens of advocacy."

Still no self-selected self-archiving control, hence no basis for the conclusions drawn (to the effect that the widely reported OA citation advantage is merely an artifact of a self-selection bias toward self-archiving the better, hence more citeable articles -- a bias that the randomization eliminates). The methodological flaw, still uncorrected, has been pointed out before.

If and when the requisite self-selected self-archiving control is ever tested, the outcome will either be (1) the usual significant OA citation advantage in the self-archiving control condition that most other published studies have reported -- in which case the absence of the citation advantage in Davis's randomized condition would indeed be evidence that the citation advantage had been a self-selection artifact that was then successfully eliminated by the randomization -- or (more likely, I should think) (2) there will be no significant citation advantage in the self-archiving control condition either, in which case the Davis study will prove to have been just a non-replication of the usual significant OA citation advantage (perhaps because of Davis's small sample size, the fields, or the fact that most of the non-OA articles become OA on the journal's website after a year).

Until the requisite self-selected self-archiving control is done, this is just the sound of one hand clapping.

Readers can be trusted to draw their own conclusions as to whether this study, tirelessly touted as the only methodologically sound one to date, is that -- or an exercise in advocacy.

Stevan Harnad

American Scientist Open Access Forum

EnablingOpenScholarship

Wednesday, October 20. 2010

Correlation, Causation, and the Weight of Evidence

Jennifer Howard ("Is there an Open-Access Advantage?," Chronicle of Higher Education, October 19 2010) seems to have missed the point of our article. It is undisputed that study after study has found that Open Access (OA) is correlated with higher probability of citation. The question our study addressed was whether making an article OA causes the higher probability of citation, or the higher probability of citation causes the article to be made OA.

The latter is the "author self-selection bias" hypothesis, according to which the only reason OA articles are cited more is that authors do not make all articles OA: only the better ones, the ones that are also more likely to be cited.

But almost no one finds that OA articles are cited more a year after publication. The OA citation advantage only becomes statistically detectable after citations have accumulated for 2-3 years.

Even more important, Davis et al. did not test the obvious and essential control condition in their randomized OA experiment: They did not test whether there was a statistically detectable OA advantage for self-selected OA in the same journals and time-window. You cannot show that an effect is an artifact of self-selection unless you show that with self-selection the effect is there, whereas with randomization it is not. All Davis et al showed was that there is no detectable OA advantage at all in their one-year sample (247 articles from 11 Biology journals); randomness and self-selection have nothing to do with it.
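The logic of the missing control condition can be illustrated with a toy simulation. This is a hypothetical sketch, not Davis et al.'s or our own analysis: the function name `oa_citation_ratio`, the latent-quality model, the logistic self-selection rule and the `causal_boost` parameter are all assumptions made for illustration. Within one simulated sample, it compares the OA/non-OA citation ratio when authors self-select what to make OA with the ratio when OA is assigned at random.

```python
import math
import random
from statistics import mean


def oa_citation_ratio(causal_boost: float, self_selected: bool,
                      n: int = 20000, seed: int = 1) -> float:
    """Mean expected citations of OA articles divided by that of non-OA articles.

    Toy model (all parameters hypothetical): each article has a latent quality
    q ~ Normal(0, 1) and expected citations exp(q), multiplied by
    (1 + causal_boost) if the article is OA.
    """
    rng = random.Random(seed)
    oa, non_oa = [], []
    for _ in range(n):
        q = rng.gauss(0.0, 1.0)
        if self_selected:
            # Self-selection: better articles are likelier to be made OA.
            p_oa = 1.0 / (1.0 + math.exp(-2.0 * q))
        else:
            # Randomized assignment: OA status is independent of quality.
            p_oa = 0.5
        is_oa = rng.random() < p_oa
        citations = math.exp(q) * ((1.0 + causal_boost) if is_oa else 1.0)
        (oa if is_oa else non_oa).append(citations)
    return mean(oa) / mean(non_oa)


# Two hypothetical worlds (pure artifact vs. real causal effect),
# each tested with both a self-selected and a randomized arm.
for boost in (0.0, 0.2):
    for selected in (True, False):
        ratio = oa_citation_ratio(boost, selected)
        print(f"causal_boost={boost}, self_selected={selected}: ratio={ratio:.2f}")
```

When `causal_boost` is 0.0 (a pure self-selection artifact), the self-selected arm shows a large apparent advantage while the randomized arm shows a ratio close to 1; only that pairing, in the same sample, would demonstrate an artifact. When `causal_boost` is 0.2, the randomized arm still shows a ratio near 1.2. If only the randomized arm is tested and it shows no advantage, there is no way to tell whether the self-selected advantage would have appeared in that sample at all.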

Davis et al released their results prematurely. We are waiting*,** to hear what Davis finds after 2-3 years, when he completes his doctoral dissertation. But if all he reports is that he has found no OA advantage at all in that sample of 11 biology journals, and that interval, rather than an OA advantage for the self-selected subset and no OA advantage for the randomized subset, then again, all we will have is a failure to replicate the positive effect that has now been reported by many other investigators, in field after field, often with far larger samples than Davis et al's.

Meanwhile, our study was similar to Davis et al.'s, except that it had a much bigger sample, across many fields, and a much larger time window -- and, most important, we did have a self-selective matched-control subset, which did show the usual OA advantage. Instead of comparing self-selective OA with randomized OA, however, we compared it with mandated OA -- which amounts to much the same thing, because the point of the self-selection hypothesis is that the author picks and chooses what to make OA, whereas if the OA is mandatory (required), the author is not picking and choosing, just as the author is not picking and choosing when the OA is imposed randomly.

And our finding is that the mandated OA advantage is just as big as the self-selective OA advantage.

*Note added October 31, 2010: Davis's dissertation turns out to have been posted on the same day as the present posting (October 20; thanks to Les Carr for drawing this to my attention on October 24!).

**Note added November 24, 2010: Phil Davis's results -- a replication of the OA download advantage and a non-replication of the OA citation advantage -- have since been published as: Davis, P. (2010) Does Open Access Lead to Increased Readership and Citations? A Randomized Controlled Trial of Articles Published in APS Journals. The Physiologist 53(6) December 2010.

Davis's results are welcome and interesting, and include some good theoretical insights, but insofar as the OA Citation Advantage is concerned, the empirical findings turn out to be just a failure to replicate the OA Citation Advantage in that particular sample and time-span -- exactly as predicted above. The original 2008 sample of 247 OA and 1372 non-OA articles in 11 journals one year after publication has now been extended to 712 OA and 2533 non-OA articles in 36 journals two years after publication. The result is a significant download advantage for OA articles but no significant citation advantage.

The only way to describe this outcome is as a non-replication of the OA Citation Advantage on this particular sample; it is most definitely not a demonstration that the OA Advantage is an artifact of self-selection, since there is no control group demonstrating the presence of the citation advantage with self-selected OA and the absence of the citation advantage with randomized OA across the same sample and time-span: There is simply the failure to detect any citation advantage at all.

This failure to replicate is almost certainly due to the small sample size as well as the short time-span. (Davis's a priori estimates of the sample size required to detect a 20% difference took no account of the fact that citations grow with time; and the a priori criterion is not even met for the self-selected subsample of 65.)

"I could not detect the effect in a much smaller and briefer sample than others" is hardly news! Compare the sample size of Davis's negative results with the sample sizes and time-spans of some of the studies that found positive results.
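The point about a priori sample sizes can be made concrete with a standard normal-approximation power calculation. This is a minimal sketch under assumptions not taken from Davis's study: citation counts are treated as Poisson (variance equal to the mean), the test is a two-sided z-test at alpha = 0.05 with 80% power, and the mean citation values are hypothetical. The function `articles_per_group` is illustrative. It shows that the number of articles needed to detect a 20% difference falls as mean citations per article grow, so a sample-size estimate that ignores citation growth is tied to one particular time-span.

```python
from math import ceil
from statistics import NormalDist


def articles_per_group(mean_citations: float, ratio: float = 1.2,
                       alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate articles needed per arm to detect OA mean = ratio * non-OA mean.

    Assumes Poisson citation counts (variance = mean) and a two-sided z-test
    on the difference of the two group means (normal approximation).
    """
    z = NormalDist()  # standard normal
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    mu_non_oa = mean_citations
    mu_oa = ratio * mean_citations
    # n = (z_alpha + z_beta)^2 * (mu1 + mu2) / (mu2 - mu1)^2
    n = (z_alpha + z_beta) ** 2 * (mu_non_oa + mu_oa) / (mu_oa - mu_non_oa) ** 2
    return ceil(n)


# Hypothetical mean citations per article as citations accumulate over time.
for mean_cites in (1.0, 3.0, 5.0):
    print(mean_cites, articles_per_group(mean_cites))
```

Under these assumptions the sketch gives 432 articles per arm for a mean of 1 citation per article, 144 for a mean of 3, and 87 for a mean of 5. Because real citation distributions are overdispersed, the true requirements would likely be larger.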

As we discussed in our article, if someone really clings to the self-selection hypothesis, there are some remaining points of uncertainty in our study that self-selectionists can still hope will eventually bear them out: Compliance with the mandates was not 100%, but 60-70%. So the self-selection hypothesis has a chance of being resurrected if one argues that now it is no longer a case of positive selection for the stronger articles, but a refusal to comply with the mandate for the weaker ones. One would have expected, however, that if this were true, the OA advantage would at least be weaker for mandated OA than for unmandated OA, since the percentage of total output that is self-archived under a mandate is almost three times the 5-25% that is self-archived self-selectively. Yet the OA advantage is undiminished with 60-70% mandate compliance in 2002-2006. We have since extended the window by three more years, to 2009; the compliance rate rises by another 10%, but the mandated OA advantage remains undiminished. Self-selectionists don't have to concede until the percentage is 100%, but their hypothesis gets more and more far-fetched...

The other way of saving the self-selection hypothesis despite our findings is to argue that there was a "self-selection" bias in terms of which institutions do and do not mandate OA: Maybe it's the better ones that self-select to do so. There may be a plausible case to be made that one of our four mandated institutions -- CERN -- is an elite institution. (It is also physics-only.) But, as we reported, we re-did our analysis removing CERN, and we got the same outcome. Even if the objection of eliteness is extended to Southampton ECS, removing that second institution did not change the outcome either. We leave it to the reader to decide whether it is plausible to count our remaining two mandating institutions -- University of Minho in Portugal and Queensland University of Technology in Australia -- as elite institutions, compared to other universities. It is a historical fact, however, that these four institutions were the first in the world to elect to mandate OA.

One can only speculate on the reasons why some might still wish to cling to the self-selection bias hypothesis in the face of all the evidence to date. It seems almost a matter of common sense that making articles more accessible to users also makes them more usable and citable -- especially in a world where most researchers are familiar with the frustration of arriving at a link to an article that they would like to read (but their institution does not subscribe), so they are asked to drop it into the shopping cart and pay $30 at the check-out counter. The straightforward causal relationship is the default hypothesis, based on both plausibility and the cumulative weight of the evidence. Hence the burden of providing counter-evidence to refute it is now on the advocates of the alternative.

Davis, PM, Lewenstein, BV, Simon, DH, Booth, JG, & Connolly, MJL (2008) Open access publishing, article downloads, and citations: randomised controlled trial. British Medical Journal 337: a568

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5(10): e13636

Harnad, S. (2008) Davis et al's 1-year Study of Self-Selection Bias: No Self-Archiving Control, No OA Effect, No Conclusion. Open Access Archivangelism July 31 2008

Tuesday, October 19. 2010

Comparing OA and Non-OA: Some Methodological Supplements

Response to Martin Fenner's comments on Gargouri Y, Hajjem C, Larivière V, Gingras Y, Carr L, Brody T, Harnad S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLoS ONE 5(10):e13636+. doi:10.1371/journal.pone.0013636.

(1) Yes, we cited the Davis et al study. That study does not show that the OA citation advantage is a result of self-selection bias. It simply shows (as many other studies have noted) that no OA advantage at all (whether randomized or self-selected) is detectable only a year after publication, especially in a small sample. It's since been over two years and we're still waiting to hear whether Davis et al's randomized sample still has no OA advantage while a self-selected control sample from the same journals and year does. That would be the way to show that the OA advantage is a self-selection bias. Otherwise it's just the sound of one hand clapping.

Harnad, S. (2008) Davis et al's 1-year Study of Self-Selection Bias: No Self-Archiving Control, No OA Effect, No Conclusion. Open Access Archivangelism. July 31 2008.

(2) No, we did not look only at self-archiving in institutional repositories. Our matched-control sample of self-selected self-archived articles came from institutional repositories, central repositories, and authors' websites. (All of that is "Green OA.") It was only the mandated sample that was exclusively from institutional repositories. (Someone else may wish to replicate our study using funder-mandated self-archiving in central repositories. The results are likely to be much the same, but the design and analysis would be rather more complicated.)

(4) Yes, we systematically excluded articles in Gold OA journals from our sample, not because we do not believe that they generate the OA advantage too, but because it is impossible to do matched-control comparisons between OA and non-OA articles in the same journal issue with Gold OA journals, since all their articles are OA. (It would for much the same reason be difficult to do this comparison in a field where 100% of the articles were OA, even if we were interested in unrefereed preprints; but we were not: we were interested in open access to refereed journal articles.)

(5) As to the 60% mandated self-archiving rates: The institutions we studied had mandated OA in 2003-2004. Our test time-span was 2002-2006. At least two of those institutions (Southampton ECS and CERN) and probably the other two also (Minho and QUT) have deposit rates of close to 100% by now. (We have since extended the analyses to 2009 and found exactly the same result.)

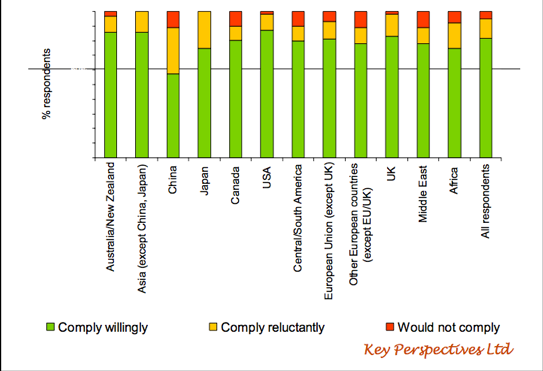

(6) "What is wrong if [OA] rates are 15%"? We leave that to the reader as an exercise. That, after all, is what OA is all about. But surveys have shown -- and outcome studies have confirmed -- that although most researchers do not self-archive spontaneously, 95% report that they would self-archive if their institutions or funders required it, over 80% of them saying they would do it willingly. (Most don't self-archive spontaneously because of worries -- groundless worries -- that it might be illegal or might entail a lot of work.)

(7) Yes, "there are many reasons other than citation rates that make OA worthwhile," but if most researchers will only provide OA if it is mandated, then it is important to demonstrate to researchers why it is worth their while.

(8) If we have given "the impression that mandatory self-archiving of post-prints in institutional repositories is the only reasonable Open Access strategy," then we have succeeded in conveying the implication of our findings.

Swan, A. (2006) The culture of Open Access: researchers' views and responses, in Jacobs, N. (Ed.) Open Access: Key Strategic, Technical and Economic Aspects. Chandos Publishing (Oxford) Limited.


The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.


The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access Provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)
