Tuesday, February 3. 2015

Peer-Review and Quality Control

Many physicists say — and some may even believe — that peer review does not add much to their work, that they would do fine with just unrefereed preprints, and that they only continue to submit to peer-reviewed journals because they need to satisfy their promotion/evaluation committees.

And some of them may even be right. Certainly the giants in the field don’t benefit from peer review. They have no peers, and for them peer-review just leads to regression on the mean.

But that criterion does not scale to the whole field, nor to other fields, and peer review continues to be needed to maintain quality standards. That’s just the nature of human endeavor.

And the quality vetting and tagging is needed before you risk investing the time into reading, using and trying to build on work -- not after. (That's why it's getting so hard to find referees, and why they're taking so long and often not doing a conscientious enough job, especially for journals whose quality standards are at or below the mean.)

Open Access means freeing peer-reviewed research from access tolls, not freeing it from peer review...

Harnad, S. (1998/2000/2004) The invisible hand of peer review. Nature [online] (5 Nov. 1998), Exploit Interactive 5 (2000): and in Shatz, D. (2004) (ed.) Peer Review: A Critical Inquiry. Rowman & Littlefield. Pp. 235-242. http://cogprints.org/1646/

Harnad, S. (2009) The PostGutenberg Open Access Journal. In: Cope, B. & Phillips, A. (Eds.) The Future of the Academic Journal. Chandos. http://eprints.soton.ac.uk/265617/

Harnad, S. (2010) No-Fault Peer Review Charges: The Price of Selectivity Need Not Be Access Denied or Delayed. D-Lib Magazine 16 (7/8). http://eprints.ecs.soton.ac.uk/21348/

Harnad, S. (2014) Crowd-Sourced Peer Review: Substitute or supplement for the current outdated system? LSE Impact Blog 8/21 August 21 2014 http://blogs.lse.ac.uk/impactofsocialsciences/2014/08/21/crowd-sourced-peer-review-substitute-or-supplement/

Tuesday, January 13. 2015

Journal publishing prices need to go down, not up

Today's transitional period for peer-reviewed journal publishing -- when both the price of subscribing to conventional journals and the price of publishing in open-access journals ("Gold OA") are grossly inflated by obsolete costs and services -- is hardly the time to inflate costs still further by paying peer reviewers.

Institutions and funders need to mandate the open-access self-archiving of all published articles first ("Green OA"). This will make subscriptions unsustainable, forcing journals to downsize to providing only peer review, leaving access-provision and archiving to the distributed global network of institutional repositories. The price per submitted paper of managing peer review -- since peers review, and have always reviewed, for free -- is low, fair, affordable and sustainable, on a no-fault basis (irrespective of whether the paper is accepted or rejected: accepted authors should not have to subsidize the cost of rejected papers).

Let's get there first, before contemplating whether we really want to raise that cost yet again, this time by paying peers.
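The no-fault arithmetic can be made concrete with a minimal sketch. Everything in it is an assumption for illustration: the function names, the cost of 100 (arbitrary units) per refereeing round, and the 25% acceptance rate are hypothetical, not figures from any journal. It only contrasts a model in which accepted papers carry the cost of all refereeing with one in which each submission pays for its own rounds.

```python
def subsidised_fee_per_accepted_paper(cost_per_round, acceptance_rate, rounds_per_submission=1):
    """Fee each accepted paper must carry when only accepted authors pay.

    The refereeing cost of every submission (accepted or rejected) is spread
    over the accepted papers alone, so the fee grows as the journal becomes
    more selective.
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    return cost_per_round * rounds_per_submission / acceptance_rate


def no_fault_fee(cost_per_round, rounds_used):
    """Fee for one submission when each round is paid for regardless of outcome."""
    return cost_per_round * rounds_used


# Hypothetical numbers: 100 units per round, one paper in four accepted.
print(subsidised_fee_per_accepted_paper(100, 0.25))  # accepted authors cover rejected papers too
print(no_fault_fee(100, 1))                          # a paper settled in one round
print(no_fault_fee(100, 2))                          # a paper needing revision and re-refereeing
```

Under these assumptions the no-fault fee depends only on how much refereeing a paper actually used, whereas the subsidised fee rises with selectivity, which is the cross-subsidy the no-fault basis is meant to avoid.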

Harnad, S. (2010) No-Fault Peer Review Charges: The Price of Selectivity Need Not Be Access Denied or Delayed. D-Lib Magazine 16 (7/8).

Harnad, S. (2014) The only way to make inflated journal subscriptions unsustainable: Mandate Green Open Access. LSE Impact of Social Sciences Blog 4/28

Thursday, August 21. 2014

Crowd-Sourced Peer Review: Substitute or Supplement?

Harnad, S. (2014) Crowd-Sourced Peer Review: Substitute or supplement for the current outdated system? LSE Impact Blog 8/21

If, as rumoured, Google builds a platform for depositing unrefereed research papers for “peer-reviewing” via crowd-sourcing, can this create a substitute for classical peer-review or will it merely supplement classical peer review with crowd-sourcing?

In classical peer review, an expert (presumably qualified, and definitely answerable), an "action editor," chooses experts (presumably qualified, and definitely answerable), "referees," to evaluate a submitted research paper in terms of correctness, quality, reliability, validity, originality, importance and relevance in order to determine whether it meets the standards of a journal with an established track-record for correctness, reliability, originality, quality, novelty, importance and relevance in a certain field.

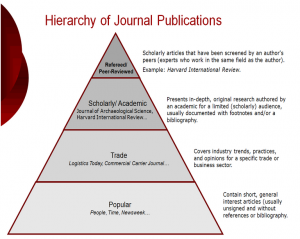

In each field there is usually a well-known hierarchy of journals, hence a hierarchy of peer-review standards, from the most rigorous and selective journals at the top all the way down to what is sometimes close to a vanity press at the bottom. Researchers use the journals' public track-records for quality standards as a hierarchical filter for deciding which papers to invest their limited reading time in, and which findings to risk investing their even more limited and precious research time in trying to use and build upon.

Authors' papers are (privately) answerable to the peer-reviewers, the peer-reviewers are (privately) answerable to the editor, and the editor is publicly answerable to users and authors via the journal's name and track-record.

Both private and public answerability are fundamental to classical peer review. So is their timing. For the sake of their reputations, many (though not all) authors don't want to make their papers public before they have been vetted and certified for quality by qualified experts. And many (though not all) users do not have the time to read unvetted, uncertified papers, let alone to risk trying to build on unvalidated findings. Nor are researchers eager to self-appoint themselves to peer-review arbitrary papers in their fields, especially when the author is not answerable to anyone for following the freely given crowd-sourced advice (and there is no more assurance that the advice is expert advice rather than idle or ignorant advice than there is any assurance that a paper is worth taking the time to read and review).

The problem with classical peer review today is that there is so much research being produced that there are not enough experts with enough time to peer-review it all. So there are huge publication lags (because of delays in finding qualified, willing referees, and getting them to submit their reviews in time) and the quality of peer-review is uneven at the top of the journal hierarchy and minimal lower down, because referees do not take the time to review rigorously.

The solution would be obvious if each unrefereed, submitted paper had a reliable tag marking its quality level: Then the scarce expertise and time for rigorous peer review could be reserved for, say, the top 10% or 30% and the rest of the vetting could be left to crowd-sourcing. But the trouble is that papers do not come with a-priori quality tags: Peer review determines the tag.

The benchmark today is hence the quality hierarchy of the current, classically peer-reviewed research literature. And the question is whether crowd-sourced peer review could match, exceed, or even come close enough to this benchmark to continue to guide researchers on what is worth reading and safe to trust and use at least as well as they are being guided by classical peer review today.

And of course no one knows whether crowd-sourced peer-review, even if it could work, would be scalable or sustainable.

The key questions are hence:

1. Would all (most? many?) authors be willing to post their unrefereed papers publicly (and in place of submitting them to journals!)?

2. Would all (most? many?) of the posted papers attract referees? competent experts?

3. Who/what decides whether the refereeing is competent, and whether the author has adequately complied? (Relying on a Wikipedia-style cadre of 2nd-order crowd-sourcers who gain authority recursively in proportion to how much 1st-order crowd-sourcing they have done -- rather than on the basis of expertise -- sounds like a way to generate Wikipedia quality, but not peer-reviewed quality…)

4. If any of this actually happens on any scale, will it be sustainable?

5. Would this make the landscape (unrefereed preprints, referee comments, revised postprints) as navigable and useful as classical peer review, or not?

My own prediction (based on nearly a quarter century of umpiring both classical peer review and open peer commentary) is that crowdsourcing will provide an excellent supplement to classical peer review but not a substitute for it. Radical implementations will simply end up re-inventing classical peer review, but on a much faster and more efficient PostGutenberg platform. We will not realize this, however, until all of the peer-reviewed literature has first been made open access. And for that it is not sufficient for Google merely to provide a platform for authors to post their unrefereed papers, because most authors don’t even post their refereed papers in their institutional repositories until it is mandated by their institutions and funders.

Harnad, S. (1998/2000/2004) The invisible hand of peer review. Nature [online] (1998), Exploit Interactive 5 (2000): and in Shatz, D. (2004) (ed.) Peer Review: A Critical Inquiry. Rowman & Littlefield. Pp. 235-242.

Harnad, S., Carr, L., Brody, T. & Oppenheim, C. (2003) Mandated online RAE CVs Linked to University Eprint Archives: Improving the UK Research Assessment Exercise whilst making it cheaper and easier. Ariadne 35.

Harnad, S. (2010) No-Fault Peer Review Charges: The Price of Selectivity Need Not Be Access Denied or Delayed. D-Lib Magazine 16 (7/8).

Harnad, S. (2011) Open Access to Research: Changing Researcher Behavior Through University and Funder Mandates. JEDEM Journal of Democracy and Open Government 3 (1): 33-41.

Harnad, Stevan (2013) The Postgutenberg Open Access Journal. In, Cope, B and Phillips, A (eds.) The Future of the Academic Journal (2nd edition). Chandos.

Saturday, November 3. 2012

Accessibility, Affordability and Quality: Think It Through

Reply to Ross Mounce:

1. The affordability problem loses all of its importance and urgency once globally mandated Green OA has its dual effect of (i) making peer-reviewed journal articles free for all (not just subscribers), thereby (ii) making it possible for institutions to cancel subscriptions if they can no longer afford -- or no longer wish -- to pay for them:

2. "If and when global Green OA should go on to make subscriptions unsustainable (because users are satisfied with just the Green OA versions) that will in turn induce journals to cut costs (print edition, online edition, access-provision, archiving), downsize to just providing the service of peer review, and convert to the Gold OA cost-recovery model. Meanwhile, the subscription cancellations will have released the funds to pay these residual service costs."

3. In other words, it is global Green OA itself that will "decouple the scholarly journal, and separate the peer-review process from the integrated set of services that traditional journals provide." (And then the natural way to charge for the service of peer review will be on a "no-fault basis," with the author's institution or funder paying for each round of refereeing, regardless of outcome [acceptance, revision/re-refereeing, or rejection], minimizing cost while protecting against inflated acceptance rates and decline in quality standards.)

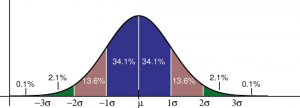

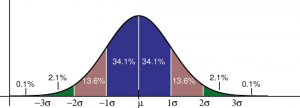

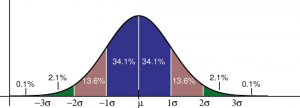

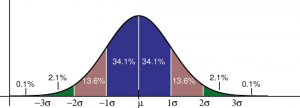

4. Because of the Gaussian distribution of virtually all human qualities and quantities, research quality and quality-assessment is not just a 0/1, pass/fail matter. Research and researchers need the much more nuanced and informative hierarchy of quality levels that journals afford.

5. Please don't conflate the simplistic reliance on the journal impact factor (the journal's articles' average citation counts) -- which is not, by the way, an OA issue -- with the much more substantive and important fact that the existing journal hierarchy does represent a vertical array of research quality levels, corresponding to different standards of peer-review rigour and hence selectivity.

6. This hierarchy is already provided by the known track-records of journals, and can and will be enhanced by a growing set of new, rich and diverse metrics of journal and article quality, importance, usage and impact. What authors and users need is not just a "Gold OA plot" but a clear sense of the quality standards of journals.

7. I think it is exceedingly unrealistic and counterproductive to advise researchers to simply give up the journal with the known and established quality standards and track-record for their work in favour of another journal just for the sake of making their paper Gold OA (let alone for the sake of freeing it from the tyranny of the impact factor) -- and especially at today's still vastly-inflated Gold OA "publishing fee," and whilst the money to pay for it is still locked into institutional subscriptions that cannot be cancelled until/unless those journal articles are accessible in some other way.

8. That other way is cost-free Green OA. And it is global Green OA self-archiving of all journal articles, published in the journal with the highest quality standards the author's work can meet -- not a pre-emptive switch to new journals just because they offer Gold OA today -- that will make those journal articles accessible in the "other way" that (i) solves the accessibility problem immediately, (ii) mitigates the affordability problem immediately, and (iii) eventually induces a transition to Gold OA at a fair and affordable price.

9. Today (i.e., pre-Green-OA), Gold OA means double-pay -- whether for hybrid Gold OA, or for pure Gold OA, as long as subscriptions must still be paid too.

10. It is short-sighted in the extreme to wish authors to renounce journals of established quality and pay extra pre-emptively to new Gold OA journals for an OA that they can already provide cost-free today through Green OA self-archiving, with the additional prospect of easing the affordability problem now, as well as preparing the road for an eventual liberation from subscriptions and a leveraged transition to affordable, sustainable Gold (and Libre) OA.

Harnad, S. (2007) The Green Road to Open Access: A Leveraged Transition. In: Anna Gacs. The Culture of Periodicals from the Perspective of the Electronic Age. L'Harmattan. 99-106.

Harnad, S. (2009) The PostGutenberg Open Access Journal. In: Cope, B. & Phillips, A (Eds.) The Future of the Academic Journal. Chandos.

Harnad, S. (2010) No-Fault Peer Review Charges: The Price of Selectivity Need Not Be Access Denied or Delayed. D-Lib Magazine 16 (7/8).

Harnad, S (2012) The Optimal and Inevitable outcome for Research in the Online Age. CILIP Update September 2012

Sunday, November 20. 2011

WikiWeakPoints: Notability vs. Noteworthiness, Anonymity vs. Answerability

Re: "Why are pornstars more notable than scientists on Wikipedia?" and "Wikipedian in Residence"

Just a small sample of the patently obvious and persistent fallacies in the notion that anonymous global cloud-writing can produce reliable information on anything that's more than skin-deep (I could go on and on and on):

(1) A neutral point of view on what is true?

(2) Expertise is no excuse?

(3) Expertise is elitism?

(4) Expertise is bias?

(5) Write on what you don't know?

(6) The longer your track-record of being a dilettante busybody, the more decision power you merit?

(7) Zipf's Law trumps the Matthew Effect?

(8) Notability, not noteworthiness, rules?

(9) Anonymous Gallup polls, not personal answerability, keep people honest and on their toes?

(10) Crowd-sourcing protects against regression on the mean?

(11) Porifera is on a par with porn?

The surprise is not when Wikipedia gets things wrong, but when it gets them right.

(And the only virtue of notability is that it reduces the motivation of most Wikipedia busybodies to bother with esoteric scientific and scholarly topics. The trouble is that it just takes one officious dilettante with a long wack-record to cast a contagious shadow of doubt over stuff he doesn't know, understand or care about.)

My guess is that the only reason any qualified experts even bother to have a go at writing in Wikipedia is Wikipedia's PageRank notoriety, which influences students and public opinion as their first (and often only) port of call.

The only hope is that Open Access to the primary scientific and scholarly literature will remedy that, leaving Wikipedia to rule where it really is the expert: Trivial Pursuit.

Stevan Harnad

Wednesday, September 7. 2011

Post-Peer-Review Open Access, Commentary and Metrics versus Post Hoc "Peer Review"

David Colquhoun (2011) is quite right on practically every point he makes: There is too much pressure to publish and too much emphasis on journal impact factors. Too many papers are published. Many are not worth publishing (trivial or wrong). Peer reviewers are overworked. Refereeing is not always reliable. There is a hierarchy of journal peer review quality. The lower levels of the quality hierarchy are practically unrefereed.

But the solution is not to post everything publicly first, and entrust the rest to post hoc "peer review," including anonymous peer review.

First, the situation is not new. Already a quarter century ago Stephen Lock (1985), former editor of the British Medical Journal, noted that everything was getting published, somewhere, in the hierarchy of journals.

And journals' positions in the hierarchy serve a purpose: Their names and public track-records for quality are important filters for potential users, helping them decide what to invest their limited time in reading, how much to trust it, and whether to risk trying to apply or build upon it. This is especially true in medicine, where it is not just researchers' time and careers that are at risk from invalid results.

Classical, prepublication peer review is answerable: The submitted paper is answerable to the referees. The referees are answerable to the editors. The editors and journal are answerable to the readership. In the higher quality journals, if revisions cannot be made to bring a paper up to its standards, it is rejected. Peer review is a means of raising paper quality, for authors, and of filtering paper quality, for users.

Self-appointed post hoc peer review is not answerable. No editor's or journal's public track record is at stake to ensure that qualified referees assess the papers, nor that their recommendations for revision are valid, heeded or followed.

And referee anonymity is a two-edged sword. Yes, it protects junior researchers and rivals from vindictiveness, but it also allows anyone to say anything about anything, immunized by anonymity. (Look at the unevenness in the quality of the comments on Professor Colquhoun's article here in the Guardian. This is not peer review.) Journal referees are anonymous to authors, but not to editors.

No, the solution is not that everything should be publicly posted, unrefereed, and then to hope that open commentary will somehow take care of the rest. The solution is to post all peer-reviewed, revised and accepted papers online, free for all (Open Access) and to allow postpublication open peer commentary (anonymous and onymous) to complement and supplement classical peer review.

And to end the arbitrary tyranny of journal impact factors (which just means the journal's average number of citations per article), let 1000 new Open Access metrics bloom -- a metric track-record, public and answerable.

Colquhoun, D. (2011) Publish-or-perish: Peer review and the corruption of science Guardian September 5 2011.

Harnad, S. (1997) Learned Inquiry and the Net: The Role of Peer Review, Peer Commentary and Copyright. Learned Publishing 11(4) 283-292.

Harnad, S. (1998) The invisible hand of peer review. Nature [Web Matters]

Harnad, S. (2003) Valedictory Editorial. Behavioral and Brain Sciences (Journal of Open Peer Commentary) 26(1): 1

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1)

Lock, S. (1985) A Difficult Balance: Editorial Peer Review in Medicine. London: Nuffield Provincial Hospitals Trust.

Wednesday, June 29. 2011

Megajournals, Quality Standards and Selectivity: Gaussian Facts of Life

SUMMARY: It is obvious that broad-spectrum, low-selectivity, pay-to-publish mega-journals -- whether Open Access or not Open Access -- can help meet many researchers' need to publish today, but it is certainly not true that that's the only way, the best way, or the most economical way to provide Open Access to their articles.

Like height, weight, and just about every other biological trait (including every field of human performance), scholarly/scientific quality is normally distributed (the "bell" curve), most of it around average, tapering toward the increasingly substandard in the lower tail of the bell curve and toward increasing excellence in the upper tail.

For some forms of human performance -- e.g., driving or doctoring -- we are satisfied with a pass/fail license cut-off.

For others, such as sports or musical performance, we prefer finer-grained levels, with a hierarchy of increasingly exacting -- hence selective -- performance standards.

But, as a matter of necessity with a finite (though growing) population and a bell curve with tapered tails, the proportion (and hence the number) of candidates and works that can meet higher and higher performance standards gets smaller and smaller.

Not only can everyone and everything not be in the top 10% or the top 1% or the top 0.1%, but because the bell curve's tail is tapered (it is a bell, not a pyramid), the proportion that can meet higher and higher standards shrinks even faster than a straight line.
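The shrinking of the upper tail can be checked numerically. The sketch below takes the premise above at face value and assumes quality follows an exactly normal distribution; the function name `upper_tail` and the cut-offs at 0, 1, 2 and 3 standard deviations are hypothetical choices for illustration, not standards used by any journal.

```python
import math


def upper_tail(z: float) -> float:
    """Proportion of a standard normal distribution lying more than z SDs above the mean."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical selectivity cut-offs, in standard deviations above the mean.
cutoffs = [0, 1, 2, 3]
tails = [upper_tail(z) for z in cutoffs]

for z, p in zip(cutoffs, tails):
    print(f"cut-off at +{z} SD: {p:.4%} of work clears it")

# Ratio of each tail to the one before it. The ratios themselves keep falling,
# so every equal step up in standard removes a larger share of what was left.
ratios = [later / earlier for earlier, later in zip(tails, tails[1:])]
print([round(r, 3) for r in ratios])
```

If the assumption of normality holds, each equal increase in the standard cuts the qualifying proportion by a larger factor than the previous one did, which is why the most selective levels are necessarily so sparsely populated.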

Scholars' and scientists' purpose in publishing in peer-reviewed journals -- indeed, the purpose of the "publish-or-perish" principle itself -- has always been two-fold: (1) to disseminate findings to potential users (i.e., mostly other scholars and scientists) and (2) to meet and mark a hierarchy of quality levels with each individual journal's name and its track-record for the rigor of its peer review standards (so users at different levels can decide what to read and trust and so quality can be assessed and rewarded by employers and funders).

In principle (though not yet in practice), journals are no longer needed for the first of these purposes, only the second -- but for that, they need to continue to be selective, ensuring that the hierarchy of quality standards continues to be met and marked.

It is obvious that broad-spectrum, low-selectivity, pay-to-publish mega-journals -- whether OA or not OA -- can help meet many researchers' need to publish today, but it is certainly not true that that's the only way, the best way, or the most economical way to provide OA for their articles:

Harnad, S. (2010) No-Fault Peer Review Charges: The Price of Selectivity Need Not Be Access Denied or Delayed. D-Lib Magazine 16 (7/8).

ABSTRACT: Plans by universities and research funders to pay the costs of Open Access Publishing ("Gold OA") are premature. Funds are short; 80% of journals (including virtually all the top journals) are still subscription-based, tying up the potential funds to pay for Gold OA; the asking price for Gold OA is still high; and there is concern that paying to publish may inflate acceptance rates and lower quality standards. What is needed now is for universities and funders to mandate OA self-archiving (of authors' final peer-reviewed drafts, immediately upon acceptance for publication) ("Green OA"). That will provide immediate OA; and if and when universal Green OA should go on to make subscriptions unsustainable (because users are satisfied with just the Green OA versions) that will in turn induce journals to cut costs (print edition, online edition, access-provision, archiving), downsize to just providing the service of peer review, and convert to the Gold OA cost-recovery model; meanwhile, the subscription cancellations will have released the funds to pay these residual service costs. The natural way to charge for the service of peer review then will be on a "no-fault basis," with the author's institution or funder paying for each round of refereeing, regardless of outcome (acceptance, revision/re-refereeing, or rejection). This will minimize cost while protecting against inflated acceptance rates and decline in quality standards.

Peer review itself, however, will, like homeostasis, always "defend" a level, whether that level is methodological soundness alone, methodological soundness and originality, methodological soundness, originality and importance, or what have you. The more exacting the standard, the fewer the papers that will be able to meet it. Perhaps the most important function of peer review is not the "marking" of a paper's having met the standard, but helping the paper to reach the standard, through referee feedback, adjudicated by an editor, sometimes involving several rounds of revision and re-refereeing.

Since peer review is an active, dynamical process of correction and improvement, it is not like the passive assignment of a letter grade to a finished work -- A, B, C, D. Rather, an author picks a journal that "defends" a target grade (A or B or C or D), submits the paper to that journal for refereeing, and then tries to improve the paper so as to meet the referees' recommendations (if any) by revising it.

There are, in other words, A, B, C and D journals, the A+ and A journals being the highest-standard and most selective ones, and hence the least numerous in terms of both titles and articles, for the Gaussian reasons described above.

A mega-journal, in contrast, is equivalent to one generic pass/fail grade (often in the hope that the "self-corrective" nature of science and scholarship will eventually take care of any further improvement and sorting that might be needed -- after publication, through "open peer review").

Maybe one day scholarly publication will move toward a model like that -- or maybe it won't (because users require more immediate quality markers, and/or because the post-publication marking is too uncertain and unreliable).

But what's needed today is open access to the peer-reviewed literature, published in A, B, C and D journals, such as it is, not to a pass/fail subset of it.

Hence pass/fail mega-journals are a potential supplement to the status quo, but not a substitute for it.

Stevan Harnad

EnablingOpenScholarship

Wednesday, June 16. 2010

Peer Review vs. Peer Ranking: Dynamic vs Passive Filtration

Chen & Konstan's (C & K) paper, "Conference Paper Selectivity and Impact" is interesting, though somewhat limited because it is based only on computer science and has fuller data on conference papers than on journal papers.

The finding is that papers from highly selective conferences are cited as much as (or even more than) papers from certain journals. (Journals of course also differ among themselves in quality and acceptance rates.)

Comparing the ceiling for citation counts for high- and low-selectivity conferences by analyzing only the equated top slice of the low-selectivity conferences, C & K found that the high-selectivity conferences still did better, suggesting that the difference was not just selectivity (i.e., filtration) but also "reputation." (The effect broke down a bit in comparing the very highest and next-to-highest selectivity conferences, with the next-to-highest doing slightly better than the very highest; plenty of post hoc speculations were ready to account for this effect too: excess narrowness, distaste for competition, etc. at the very highest level, but not the next-highest…)

Some of this smacks of over-interpretation of sparse data, but I'd like to point out two rather more general considerations that seem to have been overlooked or under-rated:

(1) Conference selectivity is not the same as journal selectivity: A conference accepts the top fraction of its submissions (whatever it sets the cut-off point to be), with no rounds of revision, resubmission and re-refereeing (or at most one cursory final round, when the conference is hardly in the position to change most of its decisions, since the conference looms and the program cannot be made much more sparse than planned). This is passive filtration. Journals do not work this way; they have periodic issues, which they must fill, but they can have a longstanding backlog of papers undergoing revision that are not published until and unless they have succeeded in meeting the referee reports' and editor's requirements. The result is that the accepted journal papers have been systematically improved ("dynamic filtration") through peer review (sometimes several rounds), whereas the conference papers have simply been statically ranked much as they were submitted. This is peer ranking, not peer review.

(2) "Reputation" really just means track record: How useful have papers in this venue been in the past? Reputation clearly depends on the reliability and validity of the selective process. But reliability and validity depend on more than the volume and cut-off point of raw submission rankings (passive filtration).

I normally only comment on open-access-related matters, so let me close by pointing out a connection:

There are three kinds of selectivity: journal selectivity, author selectivity and user selectivity. Journals (and conferences) select which papers to accept for publication; authors select which papers to submit, and which publication venue to submit them to; and users select which papers to read, use and cite. Hence citations are an outcome of a complex interaction of all three factors. The relevant entity for the user, however, is the paper, not the venue. Yes, the journal's reputation will play a role in the user's decision about whether to read a paper, just as the author's reputation will; and of course so will the title and topic. But the main determinant is the paper itself. And in order to read, use and cite a paper, you have to be able to access it. Accessibility trumps all the other factors: it is not a sufficient condition for citation, but it is certainly a necessary one.

Stevan Harnad

American Scientist Open Access Forum

Wednesday, May 12. 2010

PostGutenberg Peer Review

Joseph Esposito [JE] asks, in liblicense-l:

JE: “What happens when the number of author-pays open access sites grows and these various services have to compete with one another to get the finest articles deposited in their repositories?”

Green OA mandates require deposit in each author's own institutional repository. The hypothesis of Post-Green-OA subscription cancellations (which is only a hypothesis, though I think it will eventually prove to be right) is that the Green OA version will prove to be enough for users, leaving peer review as the only remaining essential publishing service a journal will need to perform.

Whether on the non-OA subscription model or on the Gold-OA author-pays model, the only way scholarly/scientific journals compete for content is through their peer-review standards: The higher-quality journals are the ones with more rigorous and selective criteria for acceptability. This is reflected in their track records for quality, including correlates of quality and impact such as citations, downloads and the many rich new metrics that the online and OA era will be generating.

JE: “What will the cost of marketing to attract the best authors be?”

It is not "marketing" but the journal's track record for quality standards and impact that attract authors and content in peer-reviewed research publication. Marketing is for subscribers (institutional and individual); for authors and their institutions it is standards and metrics that matter.

And, before someone raises the question: Yes, metrics can be manipulated and abused, in the short term, but cheating can also be detected, especially as deviations within a rich context of multiple metrics. Manipulating a single metric (e.g., robotically inflating download counts) is easy, but manipulating a battery of systematically intercorrelated metrics is not; and abusers can and will be named and shamed. In research and academia, this risk to track record and career is likely to counterbalance the temptation to cheat. (Let's not forget that metrics, like the content they are derived from, will be OA too...)
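As a rough illustration of what "deviations within a rich context of multiple metrics" could look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the metric names, the toy numbers, the function name `flag_inflated_metrics` and the threshold are hypothetical, and the heuristic (standardize each metric, then flag a paper whose score on one metric sits far above the median of its scores on the others) is only one simple way of checking one metric against its correlates, not an established detection method.

```python
from statistics import mean, median, pstdev


def z_scores(values):
    """Standardize a list of numbers (population z-scores)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s if s else 0.0 for v in values]


def flag_inflated_metrics(papers, threshold=2.0):
    """Return (paper, metric) pairs where one metric is far above its siblings.

    papers: {paper_id: {metric_name: raw_value}}; every paper must carry
    the same set of (at least two) metrics.
    """
    ids = list(papers)
    metrics = list(next(iter(papers.values())))
    # z[metric][i] is the standardized value of that metric for paper ids[i].
    z = {m: z_scores([papers[p][m] for p in ids]) for m in metrics}
    flagged = []
    for i, p in enumerate(ids):
        for m in metrics:
            others = [z[o][i] for o in metrics if o != m]
            # Only an excess (possible inflation) is flagged, not a deficit.
            if z[m][i] - median(others) > threshold:
                flagged.append((p, m))
    return flagged


# Hypothetical toy data: for papers A-E the three metrics rise together;
# paper F has many downloads but few citations or bookmarks.
papers = {
    "A": {"downloads": 100, "citations": 10, "bookmarks": 5},
    "B": {"downloads": 200, "citations": 20, "bookmarks": 10},
    "C": {"downloads": 300, "citations": 30, "bookmarks": 15},
    "D": {"downloads": 400, "citations": 40, "bookmarks": 20},
    "E": {"downloads": 500, "citations": 50, "bookmarks": 25},
    "F": {"downloads": 5000, "citations": 12, "bookmarks": 6},
}

print(flag_inflated_metrics(papers))
```

The point of the sketch is only that a download count out of line with a paper's other metrics stands out once the metrics are read together; a real check would need far more data and a more careful statistical model.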

JE: “I am not myself aware of any financial modeling that attempts to grapple with an environment where there are not a handful of such services but 200, 400, more.”

There are already at least 25,000 such services (journals) now! There will be about the same number post-Green-OA.

The only thing that would change (on the hypothesis that universal Green OA will eventually make subscriptions unsustainable) is that the 25,000 post-Green-OA journals would only provide peer review: no more print edition, online edition, distribution, archiving, or marketing (other than each journal's quality track record itself, and its metrics). Gone too would be the costs of these obsolete products and services, and their marketing.

(Probably gone too will be the big-bucks era of journal-fleet publishing. Unlike with books, it has always been the individual journal's name and track record that has mattered to authors and their institutions and funders, not their fleet-publisher's imprimatur. Software for implementing peer review online will provide the requisite economy of scale at the individual journal level: no need to co-bundle a fleet of independent journals and fields under the same operational blanket.)

JE: “As these services develop and authors seek the best one, what new investments will be necessary in such areas as information technology?”

The best peer review is provided by the best peers (for free), applying the highest quality standards. OA metrics will grow and develop (independent of publishers), but peer review software is pretty trivial and probably already quite well developed (hence hopes of "patenting" new peer review "systems" are probably pipe-dreams).

JE: “Will the fixed costs of managing such a service rise along with greater demands by the most significant authors?”

The journal quality hierarchy will remain pretty much as it is now, with the highest-quality (hence most selective) journals the fewest, at the top, grading down to the many average-level journals, and then the near-vanity press at the bottom (since just about everything eventually gets published somewhere, especially in the online era).

(I also think that "no-fault peer review" will evolve as a natural matter of course -- i.e., authors will pay a standard fee per round of peer review, independent of outcome: acceptance, revision/re-refereeing or rejection. So being rejected by a higher-level journal will not be a dead loss, if the author is ready to revise for a lower-level journal in response to the higher-level journal's review. Nor will rejected papers be an unfair burden, bundled into the fee of the authors of accepted papers.)

JE: “As more services proliferate, what will the cost of submitting material on an author-pays basis be?”

There will be no more new publishing services, apart from peer review (and possibly some copy-editing), and no more new journals either; 25,000 is probably enough already! And the cost per round of refereeing should not prove more than about $200.

JE: “Will the need to attract the best authors drive prices down?”

There will be no "need to attract the best authors," but the best journals will get them by maintaining the highest standards.

Since the peers review for free, the cost per round of refereeing is small and pretty fixed.

JE: “If prices are driven down, is there any way for such a service to operate profitably as the costs of marketing and technology grow without attempting to increase in volume what is lost in margin?”

Peer-reviewed journal publishing will no longer be big business; just a modest scholarly service, covering its costs.

JE: “If such services must increase their volume, will there be inexorable pressure to lower some of the review standards in order to solicit more papers?”

There will be no pressure to increase volume (why should there be?). Scholars try to meet the highest quality standards they can meet. Journals will try to maintain the highest quality standards they can maintain.

JE: “What is the proper balance between the right fee for authors, the level of editorial scrutiny, and the overall scope of the service, as measured by the number of articles developed?”

Much ado about little, here.

The one thing to remember is that there is a trade-off between quality-standards and volume: The more selective a journal, the smaller is the percentage of all articles in a field that will meet its quality standards. The "price" of higher selectivity is lower volume, but that is also the prize of peer-reviewed publishing: Journals aspire to high quality and authors aspire to be published in journals of high quality.

No-fault refereeing fees will help distribute the refereeing load (and cost) better than (as now) inflating the fees of accepted papers to cover the costs of rejected papers (rather like a shop-lifting surcharge!). Journals lower in the quality hierarchy will (as always) be more numerous, and will accept more papers, but authors are likely to continue to try a top-down strategy (as now), trying their luck with a higher-quality journal first.
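The arithmetic behind the "shop-lifting surcharge" can be sketched in a few lines of Python. The $200 cost per round is the rough figure suggested above; the submission count, acceptance count and average number of rounds are hypothetical numbers chosen only for illustration, and the `Journal` class and function names are assumptions of this sketch, not part of any actual fee proposal.

```python
from dataclasses import dataclass


@dataclass
class Journal:
    refereed: int            # papers given full refereeing (hypothetical)
    accepted: int            # papers eventually accepted (hypothetical)
    rounds_per_paper: float  # average refereeing rounds per refereed paper
    cost_per_round: float    # journal's cost for one round of refereeing


def total_refereeing_cost(j: Journal) -> float:
    """Everything the journal spends on refereeing, accepted or not."""
    return j.refereed * j.rounds_per_paper * j.cost_per_round


def fee_if_only_accepted_pay(j: Journal) -> float:
    """Pay-to-publish: accepted papers also carry the cost of the rejected."""
    return total_refereeing_cost(j) / j.accepted


def no_fault_fee_per_paper(j: Journal) -> float:
    """No-fault: every refereed paper pays for its own rounds, whatever the outcome."""
    return j.rounds_per_paper * j.cost_per_round


# A selective journal: 1000 papers refereed, 100 accepted, 1.5 rounds on average.
selective = Journal(refereed=1000, accepted=100,
                    rounds_per_paper=1.5, cost_per_round=200.0)

print(fee_if_only_accepted_pay(selective))  # 3000.0 per accepted paper
print(no_fault_fee_per_paper(selective))    # 300.0 per refereed paper
```

Under these assumed numbers the journal recovers the same total either way, but with no-fault fees the charge per paper no longer grows with the journal's rejection rate.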

There will no doubt be unrealistic submissions that can (as now) be summarily rejected without formal refereeing (or fee). The authors of papers that do merit full refereeing may elect to pay for refereeing by a higher-level journal, at the risk of rejection, but they can then use their referee reports to submit a more roadworthy version to a lower-level journal. With no-fault refereeing fees, both journals are paid for their costs, regardless of how many articles they actually accept for publication. (PostGutenberg publication means, I hasten to add, that accepted papers are certified with the name and track-record of the accepting journal, but those names just serve as the metadata for the Green OA version self-archived in the author's institutional repository.)

And let's not forget what peer-reviewed research publishing is about, and for: It is not about provisioning a publishing industry but about providing a service to research, researchers, their institutions and their funders. Gutenberg-era publication costs meant that the Gutenberg publisher evolved, through no fault of its own, into the tail that wagged the paper-trained research pooch; in the PostGutenberg era, things will at last rescale into more proper and productive anatomic proportions...

Harnad, S. (2009) The PostGutenberg Open Access Journal. In: Cope, B. & Phillips, A. (Eds.) The Future of the Academic Journal. Chandos.

Harnad, S. (2010) No-Fault Refereeing Fees: The Price of Selectivity Need Not Be Access Denied or Delayed. (Draft under refereeing).

Stevan Harnad

American Scientist Open Access Forum

Tuesday, April 27. 2010

Symptoms of Premature Gold OA -- and their Cure

"Gold" Open Access (OA) journals (especially high-quality, highly selective ones like PLOS Biology) were a useful proof of principle, but now there are far too many of them, and they are mostly not journals of high quality.

The reason is that new Gold OA journals are premature at this time. What is needed is more access to existing journals, not more journals. Everything already gets published somewhere in the existing journal quality hierarchy. The recent proliferation of lower-standard Gold OA journals arose out of the drive and rush to publish-or-perish, and pay-to-publish was an irresistible lure, both to authors and to publishers.

Meanwhile, authors have been sluggish about availing themselves of a cost-free way of providing OA for their published journal articles: "Green" OA self-archiving.

The simple and natural remedy for the sluggishness -- as well as the premature, low-standard Gold OA -- is now on the horizon: Green OA self-archiving mandates from authors' institutions and funders. Once Green OA prevails globally, we will have the much-needed access to existing journals for all would-be users, not just those whose institutions can afford to subscribe. That will remove all pretensions that the motivation for paying-to-publish in a Gold OA journal is to provide OA (rather than just to get published), since Green OA can be provided by authors by publishing in established journals, with their known track records for quality, and without having to pay extra -- while subscriptions continue to pay the costs of publishing.

If and when universal Green OA should eventually make subscriptions unsustainable -- because institutions cancel their subscriptions -- the established journals, with their known track records, can convert to the Gold OA cost-recovery model, downsizing to the provision of peer review alone (since access-provision and archiving will be done by the global network of Green OA Institutional Repositories), with the costs of peer review alone covered out of a fraction of the institutional subscription cancellation savings.

What will prevent pay-to-publish from causing quality standards to plummet under these conditions? It will not be pay-to-publish! It will be no-fault pay-to-be-peer-reviewed, regardless of whether the outcome is accept, revise, or reject. Authors will pay for each round of refereeing. And journals will (as now) form a (known) quality hierarchy, based on their track-record for peer-review standards and hence selectivity.

I'm preparing a paper on this now, provisionally entitled "No-Fault Refereeing Fees: The Price of Selectivity Need Not Be Access Denied or Delayed."

Stevan Harnad

American Scientist Open Access Forum

The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.

The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access Provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)
