Monday, February 8. 2010

Open Access: Self-Selected, Mandated & Random; Answers & Questions

Gargouri, Y., Hajjem, C., Larivière, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. (Submitted)

We are happy to have performed these further analyses, and we are very much in favor of this sort of open discussion and feedback on pre-refereeing preprints of papers that have been submitted and are undergoing peer review. They can only improve the quality of the eventual published version of articles.

However, having carefully responded to Phil's welcome questions, below, we will, at the end of this posting, ask Phil to respond in kind to a question that we have repeatedly raised about his own paper (Davis et al 2008), published a year and a half ago...

RESPONSES TO DAVIS'S QUESTIONS ABOUT OUR PAPER:

PD: "Stevan, Granted, you may be more interested in what the referees of the paper have to say than my comments; I'm interested in whether this paper is good science, whether the methodology is sound and whether you interpret your results properly."

We are very appreciative of your concern and hope you will agree that we have not been interested only in what the referees might have to say. (We also hope you will now in turn be equally responsive to a longstanding question we have raised about your own paper on this same topic.)

PD: "For instance, it is not clear whether your Odds Ratios are interpreted correctly. Based on Figure 4, OA article are MORE LIKELY to receive zero citations than 1-5 citations (or conversely, LESS LIKELY to receive 1-5 citations than zero citations). You write: "For example, we can say for the first model that for a one unit increase in OA, the odds of receiving 1-5 citations (versus zero citations) increased by a factor of 0.957 [re: Figure 4 (p.9)]"... I find your odds ratio methodology unnecessarily complex and unintuitive..."

Our article supports its conclusions with several different, convergent analyses. The logistic regression analysis with the odds ratio is one of them, and its results are fully corroborated by the other, simpler analyses we also reported, as well as by the supplementary analyses we append here now.

[Yassine has since added that your confusion was our fault because, by way of illustration, we had used the first model (0 citations vs. 1-5 citations), with its odds ratio of 0.957 ("For example, we can say for the first model that for a one unit increase in OA, the odds of receiving 1-5 citations (versus zero citations) increased by a factor of 0.957"). In the first model the value 0.957 is below 1, and too close to 1, to serve as a good illustration of the meaning of the odds ratio. We should have chosen a better example, one in which Exp(β) is clearly greater than 1. We should have said: "For example, we can say for the second model that for a one unit increase in OA, the odds of receiving 5-10 citations (versus 1-5 citations) increased by a factor of 1.323." This clearer example will be used in the revised text of the paper. (See Figure 4S, translated to display the deviations relative to an odds ratio of one rather than zero; Excel here insists on labelling the baseline "0" instead of "1"! This too will be fixed in the revised text.)]
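Exp(β) multiplies the odds, not the probability: a value below 1 (such as 0.957) lowers the odds of landing in the higher citation category, and a value above 1 (such as 1.323) raises them. A minimal Python sketch of that arithmetic, assuming a baseline probability of 0.5 chosen purely for illustration (it is not taken from our data); the helper names are hypothetical:

```python
import math

def odds(p: float) -> float:
    """Convert a probability p (0 < p < 1) into odds p / (1 - p)."""
    return p / (1.0 - p)

def prob_from_odds(o: float) -> float:
    """Convert odds back into a probability."""
    return o / (1.0 + o)

def apply_odds_ratio(p_baseline: float, odds_ratio: float) -> float:
    """Probability of the higher citation category after a one-unit
    increase in the predictor, given its odds ratio Exp(beta)."""
    return prob_from_odds(odds(p_baseline) * odds_ratio)

# Odds ratios quoted in the text; the 0.5 baseline is purely illustrative.
for label, ratio in [("model 1: 1-5 vs 0", 0.957), ("model 2: 5-10 vs 1-5", 1.323)]:
    beta = math.log(ratio)  # the regression coefficient whose exponent is the odds ratio
    print(f"{label}: beta = {beta:+.3f}, p = 0.50 -> {apply_odds_ratio(0.5, ratio):.3f}")
```

Under these assumptions the first ratio nudges an even chance slightly below 0.5 and the second raises it to about 0.57; in neither case is Exp(β) itself a probability.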

PD: "Similarly in Figure 4 (if I understand the axes correctly), CERN articles are more than twice as likely to be in the 20+ citation category than in the 1-5 citation category, a fact that may distort further interpretation of your data as it may be that institutional effects may explain your Mandated OA effect. See comments by Patrick Gaule and Ludo Waltman on the review"

Here is the analysis underlying Figure 4, re-done without CERN, and then again re-done without either CERN or Southampton. As will be seen, the outcome pattern, as well as its statistical significance, is the same whether or not we exclude these institutions. (Moreover, I remind you that these are multiple regression analyses in which the Beta values reflect the independent contributions of each of the variables: that means the significant OA advantage, whether or not we exclude CERN, is the contribution of OA independent of the contribution of each institution.)

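Why an OA coefficient survives the inclusion of an institution term can be seen in a toy example. This is a minimal sketch using ordinary least squares (a simpler stand-in for the logistic regressions actually reported), on hypothetical data built so that OA and institution each contribute independently and are deliberately correlated with each other; `ols`, `oa` and `inst` are illustrative names only:

```python
import numpy as np

def ols(columns, y):
    """Ordinary least squares with an intercept; returns [intercept, b1, b2, ...]."""
    X = np.column_stack([np.ones(len(y))] + [np.asarray(c, dtype=float) for c in columns])
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef

# Hypothetical toy articles (not our data): OA status and membership of one
# high-citation institution, correlated with each other.
oa = [0, 0, 0, 1, 1, 1]
inst = [0, 0, 1, 0, 1, 1]
# Citations built so that OA adds 2 and the institution adds 5, independently.
cites = [1 + 2 * o + 5 * i for o, i in zip(oa, inst)]

_, b_oa, b_inst = ols([oa, inst], cites)  # both predictors in the model
_, b_oa_alone = ols([oa], cites)          # institution left out

print(f"OA effect controlling for institution: {b_oa:.2f}")
print(f"OA effect ignoring institution:        {b_oa_alone:.2f}")
```

With the institution term included, the OA coefficient recovers the built-in contribution of 2; leaving it out lets the institution's effect leak into the OA estimate. Including each institution as its own predictor is what keeps the two apart.
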
PD: "Changing how you report your citation ratios, from the ratio of log citations to the log of citation ratios is a very substantial change to your paper and I am surprised that you point out this reporting error at this point."

As noted in Yassine's reply to Phil, that formula was incorrectly stated in our text, once; in all the actual computations, results, figures and tables, however, the correct formula was used.

PD: "While it normalizes the distribution of the ratios, it is not without problems, such as: 1. Small citation differences have very large leverage in your calculations. Example, A=2 and B=1, log (A/B)=0.3"

The log of the citation ratio was used only in displaying the means (Figure 2), presented for visual inspection. The paired-sample t-tests of significance (Table 2) were based on the raw citation counts, not on log ratios, hence had no leverage in our calculations or their interpretations. (The paired-sample t-tests were also based only on 2004-2006, because for 2002-2003 not all the institutional mandates were yet in effect.)

Moreover, both the paired-sample t-test results (2004-2006) and the pattern of means (2002-2006) converged with the results of the (more complicated) logistic regression analyses and subdivisions into citation ranges.
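A paired-sample t-test on raw counts needs no logarithms at all. A minimal sketch of the t statistic in plain Python, assuming each OA article is paired with a single control value from the same journal issue (for example, the mean of its non-OA controls); the numbers are hypothetical and the p-value lookup is left out:

```python
import math
from statistics import mean, stdev

def paired_t(xs, ys):
    """Paired-sample t statistic and degrees of freedom for matched pairs (xs[i], ys[i])."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two matched pairs of equal length")
    diffs = [x - y for x, y in zip(xs, ys)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    return mean(diffs) / se, len(diffs) - 1

# Hypothetical raw counts: each OA article paired with its same-issue control value.
oa_cites = [5, 3, 8, 2, 6]
control = [4, 3, 5, 2, 4]
t, df = paired_t(oa_cites, control)
print(f"t = {t:.2f}, df = {df}")
```

Because the test works on the differences of raw counts, a pair with zero citations on either side is kept rather than dropped. (If every difference were identical the standard error would be zero and the statistic undefined.)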

PD: "2. Similarly, any ratio with zero in the denominator must be thrown out of your dataset. The paper does not inform the reader on how much data was ignored in your ratio analysis and we have no information on the potential bias this may have on your results."

As noted, the log ratios were only used in presenting the means, not in the significance testing, nor in the logistic regressions.

However, we are happy to provide the additional information Phil requests, in order to help readers eyeball the means. Here are the means from Figure 2, recalculated by adding 1 to all citation counts. This restores all log ratios with zeroes in the numerator (sic); the probability of a zero in the denominator is vanishingly small, as it would require that all 10 same-issue control articles have no citations!

The pattern is again much the same. (And, as noted, the significance tests are based on the raw citation counts, which were not affected by the log transformations that exclude numerator citation counts of zero.)
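The effect of adding 1 can be shown on single hypothetical pairs. Without the adjustment, an OA article with zero citations yields log10(0) and is lost, and a pair is also lost if all of its controls are uncited; with it, both are retained. A minimal sketch, assuming base-10 logs (consistent with Phil's example log(2/1) = 0.3) and a control group given as a list of same-issue citation counts; `log_ratio` is a hypothetical helper, not our actual analysis code:

```python
import math
from statistics import mean

def log_ratio(oa_cites, control_cites, add_one=False):
    """log10 of an OA article's citations over the mean of its same-issue
    non-OA controls, optionally after adding 1 to every count.
    Returns None when the log ratio is undefined."""
    k = 1 if add_one else 0
    num = oa_cites + k
    den = mean(c + k for c in control_cites)
    if num == 0 or den == 0:
        return None  # log10(0) or division by zero: the pair would be dropped
    return math.log10(num / den)

controls = [1] * 10                              # ten hypothetical controls, one citation each
print(log_ratio(2, controls))                    # Phil's leverage example, about 0.30
print(log_ratio(0, controls))                    # uncited OA article: lost
print(log_ratio(0, controls, add_one=True))      # retained after adding 1
print(log_ratio(3, [0] * 10, add_one=True))      # all-zero controls: retained after adding 1
```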

This exercise suggested a further heuristic analysis that we had not thought of doing in the paper, even though the results had clearly suggested that the OA advantage is not evenly distributed across the full range of article quality and citeability: The higher quality, more citeable articles gain more of the citation advantage from OA.

In the following supplementary figure (S3), for exploratory and illustrative purposes only, we re-calculate the means in the paper's Figure 2 separately for OA articles in the citation range 0-4 and for OA articles in the citation range 5+.

The overall OA advantage is clearly concentrated on articles in the higher citation range. There is even what looks like an OA DISadvantage for articles in the lower citation range. This may be mostly an artifact (from restricting the OA articles to 0-4 citations and not restricting the non-OA articles), although it may also be partly due to the fact that when unciteable articles are made OA, only one direction of outcome is possible, in the comparison with citation means for non-OA articles in the same journal and year: OA/non-OA citation ratios will always be unflattering for zero-citation OA articles. (This can be statistically controlled for, if we go on to investigate the distribution of the OA effect across citation brackets directly.)

PD: "Have you attempted to analyze your citation data as continuous variables rather than ratios or categories?"

We will be doing this in our next study, which extends the time base to 2002-2008. Meanwhile, a preview is possible from plotting the mean number of OA and non-OA articles for each citation count. Note that zero citations is the biggest category for both OA and non-OA articles, and that the proportion of articles at each citation level decreases faster for non-OA articles than for OA articles; this is another way of visualizing the OA advantage. At citation counts of 30 or more, the difference is quite striking, although of course there are few articles with so many citations:

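The comparison described in the reply above amounts to computing, for each citation count, the proportion of OA and of non-OA articles at that count. A minimal sketch, assuming plain lists of per-article citation counts; pooling every count of 30 or more into one bin is an assumption that echoes the text's "30 or more", and the data are hypothetical:

```python
from collections import Counter

def citation_distribution(counts, cap=30):
    """Proportion of articles at each citation count; counts of `cap` or more are pooled."""
    if not counts:
        return {}
    binned = Counter(min(c, cap) for c in counts)
    n = len(counts)
    return {k: binned[k] / n for k in sorted(binned)}

# Hypothetical citation counts for illustration only.
oa_counts = [0, 0, 1, 2, 5, 12, 35]
non_oa_counts = [0, 0, 0, 1, 1, 2, 4]
for name, counts in [("OA", oa_counts), ("non-OA", non_oa_counts)]:
    print(name, citation_distribution(counts))
```

Working with proportions rather than raw frequencies keeps the two curves comparable even when there are many more non-OA than OA articles.
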
REQUEST FOR RESPONSE TO QUESTION ABOUT DAVIS ET AL'S (2008) PAPER:

Davis, P.M., Lewenstein, B.V., Simon, D.H., Booth, J.G. and Connolly, M.J.L. (2008) Open access publishing, article downloads, and citations: randomised controlled trial. British Medical Journal 337: a568.

Davis et al had taken a 1-year sample of biological journal articles and randomly made a subset of them OA, to control for author self-selection. (This is comparable to our mandated control for author self-selection.) They reported that after a year they found no significant citation advantage for the randomized OA articles (although they did find an OA Advantage for downloads), and concluded that this showed that the OA citation Advantage is just an artifact of author self-selection, now eliminated by the randomization.

Critique of Davis et al's paper: "Davis et al's 1-year Study of Self-Selection Bias: No Self-Archiving Control, No OA Effect, No Conclusion" BMJ Responses.

What Davis et al failed to do, however, was to demonstrate that -- in the same sample and time-span -- author self-selection does generate the OA citation Advantage. Without showing that, all they have shown is that in their sample and time-span, they found no significant OA citation Advantage. This is no great surprise, because their sample was small and their time-span was short, whereas many of the other studies that have reported finding an OA Advantage were based on much larger samples and much longer time spans.

The question raised was about controlling for self-selected OA. If one tests for the OA Advantage, whether self-selected or randomized, there is a great deal of variability, across articles and disciplines, especially for the first year or so after publication. In order to have a statistically reliable measure of OA effects, the sample has to be big enough, both in number of articles and in the time allowed for any citation advantage to build up to become detectable and statistically reliable.
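The point about sample size can be made concrete with the standard normal-approximation formula for the number of items per group needed to detect a difference in means: n = 2 * ((z(1 - alpha/2) + z(power)) * sd / effect)^2. A minimal Python sketch; it is a generic textbook calculation that treats citation counts as roughly normal (they are in fact highly skewed), and the effect size and standard deviation in the example are hypothetical:

```python
import math
from statistics import NormalDist

def n_per_group(effect, sd, alpha=0.05, power=0.8):
    """Approximate articles needed per group to detect a mean difference `effect`
    (two-sided test, equal group sizes, common standard deviation `sd`)."""
    z = NormalDist().inv_cdf  # standard normal quantile function
    n = 2 * ((z(1 - alpha / 2) + z(power)) * sd / effect) ** 2
    return math.ceil(n)

# Hypothetical: a 1-citation advantage against a standard deviation of 5 citations.
print(n_per_group(1, 5))
# Halving the detectable effect roughly quadruples the required sample.
print(n_per_group(0.5, 5))
```

The required sample grows with the square of the noise-to-effect ratio, which is why a small, early, highly variable citation difference can go undetected in a modest sample.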

Davis et al need to do with their randomization methodology what we have done with our mandating methodology, namely, to demonstrate the presence of a self-selected OA Advantage in the same journals and years. Then they can compare that with randomized OA in those same journals and years, and if there is a significant OA Advantage for self-selected OA and no OA Advantage for randomized OA then they will have evidence that -- contrary to our findings -- some or all of the OA Advantage is indeed just a side-effect of self-selection. Otherwise, all they have shown is that with their journals, sample size and time-span, there is no detectable OA Advantage at all.

What Davis et al replied in their BMJ Authors' Response was instead this:

PD: "Professor Harnad comments that we should have implemented a self-selection control in our study. Although this is an excellent idea, it was not possible for us to do so because, at the time of our randomization, the publisher did not permit author-sponsored open access publishing in our experimental journals. Nonetheless, self-archiving, the type of open access Prof. Harnad often refers to, is accounted for in our regression model (see Tables 2 and 3)... Table 2 Linear regression output reporting independent variable effects on PDF downloads for six months after publication Self-archived: 6% of variance p = .361 (i.e., not statistically significant)... Table 3 Negative binomial regression output reporting independent variable effects on citations to articles aged 9 to 12 months Self-archived: Incidence Rate 0.9 p = .716 (i.e., not statistically significant)..."

This is not an adequate response. If a control condition was needed in order to make an outcome meaningful, it is not sufficient to reply that "the publisher and sample allowed us to do the experimental condition but not the control condition."

Nor is it an adequate response to reiterate that there was no significant self-selected self-archiving effect in the sample (as the regression analysis showed). That is in fact bad news for the hypothesis being tested.

Nor is it an adequate response to say, as Phil did in a later posting, that even after another half year or more had gone by, there was still no significant OA Advantage. (That is just the sound of one hand clapping again, this time louder.)

The only way to draw meaningful conclusions from Davis et al's methodology is to demonstrate the self-selected self-archiving citation advantage, for the same journals and time-span, and then to show that randomization wipes it out (or substantially reduces it).

Until then, our own results, which do demonstrate the self-selected self-archiving citation advantage for the same journals and time-span (and on a much bigger and more diverse sample and a much longer time scale), show that mandating the self-archiving does not wipe out the citation advantage (nor does it substantially reduce it).

Meanwhile, Davis et al's finding that although their randomized OA did not generate a citation increase, it did generate a download increase, suggests that with a larger sample and time-span there may well be scope for a citation advantage as well: Our own prior work and that of others has shown that higher early download counts tend to lead to higher citation counts later.

Bollen, J., Van de Sompel, H., Hagberg, A. and Chute, R. (2009) A principal component analysis of 39 scientific impact measures. PLoS ONE 4(6): e6022.

Brody, T., Harnad, S. and Carr, L. (2006) Earlier Web Usage Statistics as Predictors of Later Citation Impact. Journal of the American Society for Information Science and Technology (JASIST) 57(8): 1060-1072.

Lokker, C., McKibbon, K. A., McKinlay, R. J., Wilczynski, N. L. and Haynes, R. B. (2008) Prediction of citation counts for clinical articles at two years using data available within three weeks of publication: retrospective cohort study. BMJ 336: 655-657.

Moed, H. F. (2005) Statistical Relationships Between Downloads and Citations at the Level of Individual Documents Within a Single Journal. Journal of the American Society for Information Science and Technology 56(10): 1088-1097.

O'Leary, D. E. (2008) The relationship between citations and number of downloads. Decision Support Systems 45(4): 972-980.

Watson, A. B. (2009) Comparing citations and downloads for individual articles. Journal of Vision 9(4): 1-4.

Sunday, January 17. 2010

Preference Surveys and Self-Fulfilling Prophecies: Do Users Prefer No Access To Postprint Access?

SM: "Stevan asserts that researchers who cannot afford access to the published version of articles are perfectly happy with the self-archived author's final version.

"Interestingly, in our survey of learned society members Sue Thorn and I found that most of our 1368 respondents did not, in fact, use authors' self-archived versions even when they had no access to the published version - 53% never did so, and only 16% did so whenever possible."

Sally does not always put her survey questions in the most transparent way.

If you really want to find out whether or not researchers are "happy" with the author's refereed, accepted final draft when they lack access to the published version you have to ask them that:

(1) "How often do you encounter online, in a search or otherwise, the author's free refereed, accepted final draft of a potentially relevant article to which you (or your institution) cannot afford paid full-text access?"

(2) "If you lack access to the published version of such a potentially relevant article, would you prefer to have no access at all, or access to the author's free refereed, accepted final draft?"

(3) "If you would prefer access to the author draft over no access at all, how strongly would you prefer it over no access at all?"

That's the forthright, transparent way to put the exact contingencies we are addressing. No equivocation or ambiguity.

In contrast, I am sure that Sally's question about "How often do you use author drafts?" was just that: "How often do you use author drafts?" Not "How often do you encounter a potentially relevant article, but decline to use it because you only have access to the author draft and not the published version?"

Sally's responses -- which seem to say that 47% do use the author draft and 53% do not -- fail to reveal why the 53% do not use it. Is it because, even though they have found a potentially relevant author draft free online and lack access to the published version, they prefer to ignore it (this would be very interesting and relevant news if it were indeed true)? Or is it simply because they happen to be among those who had never encountered a potentially relevant author draft free online when they had no access to the published version? (And could the 16% who did use the author draft "whenever possible" perhaps correspond to the well-known datum that only about 15% of all articles have freely accessible author drafts online?)

Surveys that obscure these fundamental details under a cloud of ambiguity are not revealing researchers' preferences but their own.

Stevan Harnad

American Scientist Open Access Forum

Thursday, January 7. 2010

Log Ratios, Effect Size, and a Mandated OA Advantage?

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

Phil Davis: "An interesting bit of research, although I have some methodological concerns about how you treat the data, which may explain some inconsistent and counter-intuitive results, see: http://j.mp/8LK57u A technical response addressing the methodology is welcome."

Thanks for the feedback. We reply to the three points of substance, in order of importance:

(1) LOG RATIOS: We analyzed log citation ratios to adjust for departures from normality. Logs were used to normalize the citations and attenuate distortion from high values. Moed's (2007) point was about (non-log) ratios that were not used in this study. We used log citation ratios. This approach loses some values when the log transformation makes the denominator zero, but despite these lost data, the t-test results were significant, and were further confirmed by our second, logistic regression analysis. It is highly unlikely that any of this would introduce a systematic bias in favor of OA, but if the referees of the paper should call for a "simpler and more elegant" analysis to make sure, we will be glad to perform it.
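Which pairs are lost depends on whether one takes a ratio of logs or a log of a ratio: log(1) = 0, so a ratio of logs fails whenever the denominator count is 1, whereas a log of a ratio fails only when a count is 0. A minimal sketch contrasting the two on single hypothetical citation counts, assuming base-10 logs; the function names are illustrative, not taken from our analysis code:

```python
import math

def ratio_of_logs(a, b):
    """log10(a) / log10(b); undefined if either count is 0 or if b == 1 (log10(1) = 0)."""
    if a <= 0 or b <= 0 or b == 1:
        return None
    return math.log10(a) / math.log10(b)

def log_of_ratio(a, b):
    """log10(a / b); undefined only if either count is 0."""
    if a <= 0 or b <= 0:
        return None
    return math.log10(a / b)

# Hypothetical (OA, non-OA) citation counts.
for a, b in [(2, 1), (4, 2), (0, 3)]:
    print(a, b, ratio_of_logs(a, b), log_of_ratio(a, b))
```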

(2) EFFECT SIZE: The size of the OA Advantage varies greatly from year to year and field to field. We reported this in Hajjem et al (2005), stressing that the important point is that there is virtually always a positive OA Advantage, absent only when the sample is too small or the effect is measured too early (as in Davis et al's 2008 study). The consistently bigger OA Advantage in physics (Brody & Harnad 2004) is almost certainly an effect of the Early Access factor, because in physics, unlike in most other disciplines (apart from computer science and economics), authors tend to make their unrefereed preprints OA well before publication. (This too might be a good practice to emulate, for authors desirous of greater research impact.)

(3) MANDATED OA ADVANTAGE? Yes, the fact that the citation advantage of mandated OA was slightly greater than that of self-selected OA is surprising, and if it proves reliable, it is interesting and worthy of interpretation. We did not interpret it in our paper, because it was the smallest effect, and our focus was on testing the Self-Selection/Quality-Bias hypothesis, according to which mandated OA should have little or no citation advantage at all, if self-selection is a major contributor to the OA citation advantage.

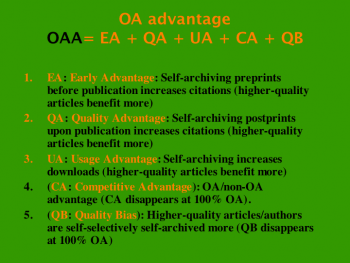

Our sample was 2002-2006. We are now analyzing 2007-2008. If there is still a statistically significant OA advantage for mandated OA over self-selected OA in this more recent sample too, a potential explanation is the inverse of the Self-Selection/Quality-Bias hypothesis (which, by the way, we do think is one of the several factors that contribute to the OA Advantage, alongside the other contributors: Early Advantage, Quality Advantage, Competitive Advantage, Download Advantage, Arxiv Advantage, and probably others).

The Self-Selection/Quality-Bias (SSQB) consists of better authors being more likely to make their papers OA, and/or authors being more likely to make their better papers OA, because they are better, hence more citeable. The hypothesis we tested was that all or most of the widely reported OA Advantage across all fields and years is just due to SSQB. Our data show that it is not, because the OA Advantage is no smaller when it is mandated. If it turns out to be reliably bigger, the most likely explanation is a variant of the "Sitting Pretty" (SP) effect, whereby some of the more comfortable authors have said that the reason they do not make their articles OA is that they think they have enough access and impact already. Such authors do not self-archive spontaneously. But when OA is mandated, their papers reap the extra benefit of OA, with its Quality Advantage (for the better, more citeable papers). In other words, if SSQB is a bias in favor of OA on the part of some of the better authors, mandates reverse an SP bias against OA on the part of others of the better authors. Spontaneous, unmandated OA would be missing the papers of these SP authors.

There may be other explanations too. But we think any explanation at all is premature until it is confirmed that this new mandated OA advantage is indeed reliable and replicable. Phil further singles out the fact that the mandate advantage is present in the middle citation ranges and not the top and bottom. Again, it seems premature to interpret these minor effects, whose reliability is unknown, but if forced to pick an interpretation now, we would say it was because the "Sitting Pretty" authors may be the middle-range authors rather than the top ones...

Yassine Gargouri, Chawki Hajjem, Vincent Larivière, Yves Gingras, Les Carr, Tim Brody, Stevan Harnad

Brody, T. and Harnad, S. (2004) Comparing the Impact of Open Access (OA) vs. Non-OA Articles in the Same Journals. D-Lib Magazine 10(6).

Davis, P.M., Lewenstein, B.V., Simon, D.H., Booth, J.G. and Connolly, M.J.L. (2008) Open access publishing, article downloads, and citations: randomised controlled trial. British Medical Journal 337: a568.

Hajjem, C., Harnad, S. and Gingras, Y. (2005) Ten-Year Cross-Disciplinary Comparison of the Growth of Open Access and How it Increases Research Citation Impact. IEEE Data Engineering Bulletin 28(4) 39-47.

Moed, H. F. (2007) The effect of 'Open Access' upon citation impact: An analysis of ArXiv's Condensed Matter Section. Journal of the American Society for Information Science and Technology 58(13) 2145-2156.

Saturday, June 13. 2009

On Proportion and Strategy: OA, Non-OA, Gold-OA, Paid-OA

As I do not have exact figures on most of the 12 proportions I highlight below, I am expressing them only in terms of "vast majority" (75% or higher) vs. "minority" (25% or lower) -- rough figures that we can be confident are approximately valid. They turn out to have at least one rather important implication about practical priorities for institutions and funders who wish to provide Open Access to their research output.

TWELVE OA STATISTICS AND THREE CONCLUSIONS

#1: The vast majority of current (peer-reviewed) journal articles are not OA (Open Access) (neither Green OA nor Gold OA).

A peer-reviewed journal article is Green OA if it has been made OA by its author, by self-archiving it in an Open Access Repository (preferably the author's own institution's OAI-compliant Institutional Repository) from which anyone can access it for free on the web.

A peer-reviewed journal article is Gold OA if it has been published in a Gold OA journal from which anyone can access it for free on the web.

There are at least 25,000 peer-reviewed journals, across all fields worldwide, publishing about 2.5 million articles per year.

#2: The vast majority of journals are not Gold OA.

#3: The vast majority of journals are Green OA.

Of the 10,000+ journals whose OA policies are indexed in SHERPA/Romeo, over 90% endorse immediate deposit and immediate OA by the author: 63% for the author's peer-reviewed final draft (the postprint) and a further 32% for the pre-refereeing preprint.

(Note that the c. 10,000 journals indexed in SHERPA/Romeo do not include most of the Gold OA journals, although these would all be classed as Green too, as all Gold OA journals also endorse Green OA self-archiving. Romeo does, however, index just about all of the top journals in just about every field.)

#4: The vast majority of citations are to the top minority of articles (the Pareto/Seglen 90/10 rule).

#5: The vast majority of journals (or journal articles) are not among the top minority of journals (or journal articles).

#6: The vast majority of the top journals are not Gold OA.

#7: The vast majority of the top journals are Green OA.

The relation between point #1 (the vast majority of articles are neither Green OA nor Gold OA) and point #7 (the vast majority of the top journals -- and indeed also the vast majority of all journals -- are Green OA) is this: Although the vast majority of journals endorse Green OA self-archiving by the author (and are hence Green) the vast majority of authors do not yet act upon this Green light to deposit. That is why the Green OA mandates by institutions and funders are needed.

#8: The vast majority of article authors would comply willingly with a Green OA mandate from their institutions and/or funders.

Ninety-five percent (95%) of authors surveyed (by Alma Swan of Key Perspectives, for JISC), in all fields and all countries, have stated that they would comply with a mandate to self-archive from their universities and/or their funders (over 80% of them say they would do it willingly). However, the vast majority do not self-archive spontaneously, without a mandate.

#9: The vast majority of institutions and funders do not yet mandate Green OA.

There are somewhere around 10,000 universities and research institutions worldwide. So far, 51 of them -- plus 36 research funders -- have mandated (i.e. required) their peer-reviewed research output to be made Green OA by depositing it in an OA repository.

#10: The vast majority of Gold OA journals are not paid-publication journals.

#11: The vast majority of the top Gold OA journals are paid-publication journals.

#12: The vast majority of institutions do not have the funds to subscribe to all the journals their users need.

Because of the serials crisis, institutional library acquisitions budgets are overstretched. The same is true of research funders' budgets.
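The verbal thresholds defined at the start of this post (75% or higher for "vast majority", 25% or lower for "minority") can be applied mechanically to the few figures the post does give. A minimal sketch; the "in between" label is an addition for completeness and is not one of the post's categories:

```python
def proportion_label(p):
    """Map a proportion onto the rough categories used in this post."""
    if p >= 0.75:
        return "vast majority"
    if p <= 0.25:
        return "minority"
    return "in between"  # not one of the post's categories; added for completeness

# Figures quoted in the post
romeo_endorse = 0.63 + 0.32            # postprint + preprint endorsement in SHERPA/Romeo
mandating_institutions = 51 / 10_000   # institutions with Green OA mandates, of ~10,000

print(proportion_label(romeo_endorse), proportion_label(mandating_institutions))
```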

I think two strong conclusions follow from this:

C1: The fact that the vast majority of Gold OA journals are not paid-publication journals is not relevant if we are concerned about providing OA to the articles in the top journals.

C2: Green OA, mandated by institutions and funders, is the vastly underutilized means of providing OA.

The implication is:

It is vastly more productive (of OA) for universities and funders to mandate Green OA than to fund Gold OA.

(Whether institutions and funders elect to fund Gold OA after they have mandated Green OA is of course an entirely different question, and not a matter of urgency one way or the other. These statistics and conclusions about practical priority are intended only to illustrate the short-sightedness of funding Gold OA pre-emptively, without mandating Green OA, if the goal of institutions and funders is to provide Open Access to their research output.)


Wednesday, February 25. 2009

Perils of Press-Release Journalism: NSF, U. Chicago, and Chronicle of Higher Education

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

In response to my critique of his Chronicle of Higher Education posting on Evans and Reimer's (2009) Science article (which I likewise critiqued, though much more mildly), I got an email from Paul Basken asking me to explain what, if anything, he had got wrong, since his posting was based entirely on a press release from NSF (which turns out to be a relay of a press release from the University of Chicago, E & R's home institution). Sure enough, the silly spin originated from the NSF/Chicago Press release (though the buck stops with E & R's own vague and somewhat tendentious description and interpretation of some of their findings). Here is the NSF/Chicago Press Release, enhanced with my comments, for your delectation and verdict:

NSF/U.CHICAGO: "If you offer something of value to people for free while someone else charges a hefty sum of money for the same type of product, one would logically assume that most people would choose the free option. According to new research in today's edition of the journal Science, if the product in question is access to scholarly papers and research, that logic might just be wrong. These findings provide new insight into the nature of scholarly discourse and the future of the open source publication movement [sic, emphasis added]."

(1) If you offer something valuable for free, people will choose the free option unless they've already paid for the paid option (especially if they needed -- and could afford -- it earlier).

(2) Free access after an embargo of a year or more is not the same "something" as immediate free access. Its "value" for a potential user is lower. (That's one of the reasons institutions keep paying for subscription/license access to journals.)

(3) Hence it is not in the least surprising that immediate (paid) print-on-paper access + online access (IP + IO) generates more citations than immediate (paid) print-on-paper access (IP) alone.

(4) Nor is it surprising that immediate (paid) print-on-paper access + online access + delayed free online access (IP + IO + DF) generates more citations than just immediate (paid) print-on-paper + online access (IP + IO) alone -- even if the free access is provided a year or longer after the paid access.

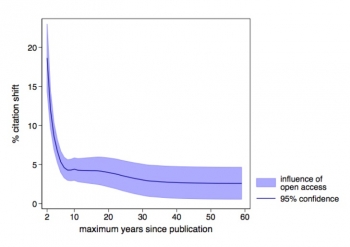

(5) Why on earth would anyone conclude that the fact that the increase in citations from IP to IP + IO is 12% and the increase in citations from IP + IO to IP + IO + DF is a further 8% implies anything whatsoever about people's preference for paid access over free access? Especially when the free access is not even immediate (IF) but delayed (DF) and the 8% is an underestimate based on averaging in ancient articles: see E & R's supplemental Figure S1(c), right [with thanks to Mike Eisen for spotting this one!].

NSF/U.CHICAGO:What on earth is an "open source outlet"? ("Open source" is a software matter.) Let's assume what's meant is "open access"; but then is this referring to (i) publishing in an open access journal, to (ii) publishing in a subscription journal but also self-archiving the published article to make it open access, or to (iii) self-archiving an unpublished paper?

"Most research is published in scientific journals and reviews, and subscriptions to these outlets have traditionally cost money--in some cases a great deal of money. Publishers must cover the costs of producing peer-reviewed publications and in most cases also try to turn a profit. To access these publications, other scholars and researchers must either be able to afford subscriptions or work at institutions that can provide access.

"In recent years, as the Internet has helped lower the cost of publishing, more and more scientists have begun publishing their research in open source [sic] outlets online. Since these publications are free to anyone with an Internet connection, the belief has been that more interested readers will find them and potentially cite them. Earlier studies had postulated that being in an open source [sic] format could more than double the number of times a journal article is used by other researchers."

What (many) previous studies had measured (not "postulated") was that authors (ii) publishing in a subscription journal (IP + IO) and also self-archiving their published article to make it Open Access (IP + IO + OA) could more than double their citations, compared to IP + IO alone.

NSF/U.CHICAGO:No, Evans & Reimer (E & R) did nothing of the sort; and no "theory" was tested (nor was there any theory).

"To test this theory, James A. Evans, an assistant professor of sociology at the University of Chicago, and Jacob Reimer, a student of neurobiology also at the University of Chicago, analyzed millions of articles available online, including those from open source publications [sic] and those that required payment to access."

E & R only analyzed articles from subscription access journals before and after the journals made them accessible online (to paid subscribers only) (i.e., IP vs IP + IO) as well as before and after the journals made the online version accessible free for all (after a paid-access-only embargo of up to a year or more: i.e., IP + IO vs IP + IO + DF). E & R's methodology was based on comparing citation counts for articles within the same journals before and after being made free online (by the journal) following delays of various lengths.

NSF/U.CHICAGO:In other words, the citation count increase from just (paid) IP to (paid) IP + IO was 12% and the citation count increase from just (paid) IP + IO to (paid) IP + IO + DF was a further 8%. Not in the least surprising: Making paid-access articles accessible online increases their citations, and making them free online (even if only after a delay of a year or longer) increases their citations still more.
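To make the arithmetic explicit: a minimal Python sketch, assuming (as the comment above reads the press release) that the 8% is measured relative to the already-increased IP + IO level, so the two relative increases compound multiplicatively. The baseline of 100 citations is an arbitrary illustrative value, not a figure from E & R.

```python
def compound(baseline: float, *relative_increases: float) -> float:
    """Apply successive relative increases (0.12 means +12%) to a baseline value."""
    value = baseline
    for r in relative_increases:
        value *= 1.0 + r
    return value


# Hypothetical baseline: 100 citations under print-only paid access (IP).
baseline_ip = 100.0
ip_io = compound(baseline_ip, 0.12)           # IP + IO: +12% over IP
ip_io_df = compound(baseline_ip, 0.12, 0.08)  # IP + IO + DF: a further +8% over IP + IO

total_gain = ip_io_df / baseline_ip - 1.0
print(f"IP + IO:      {ip_io:.1f}")
print(f"IP + IO + DF: {ip_io_df:.1f}")
print(f"Overall gain over IP alone: {total_gain:.1%}")
```

Under that reading, the two steps together come to roughly a 21% increase over IP alone. Both steps are increases; neither figure says anything about a preference for paid over free access.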

"The results were surprising. On average, when a given publication was made available online after being in print for a year, being published in an open source format [sic] increased the use of that article by about 8 percent. When articles are made available online in a commercial format a year after publication, however, usage increases by about 12 percent."

What is surprising is the rather absurd spin that this press release appears to be trying to put on this decidedly unsurprising finding.

NSF/U.CHICAGO:We already knew that OA increased citations, as the many prior published studies have shown. Most of those studies, however, were based on immediate OA (i.e., IF), not embargoed OA. What E & R do show, interestingly, is that even delaying OA for a year or more still increases citations, though (unsurprisingly) not as much as immediate OA (IF) does.

"'Across the scientific community,' Evans said in an interview, 'it turns out that open access does have a positive impact on the attention that's given to the journal articles, but it's a small impact.'"

NSF/U.CHICAGO:A large portion of the citation increase from (delayed) OA turns out to come from Developing Countries (refuting Frandsen's recent report to the contrary). This is a new and useful finding (though hardly a surprising one, if one does the arithmetic). A similar analysis within the US, comparing citations from America's own "Have-Not" universities (with their smaller journal subscription budgets) against those from its Harvards, might well reveal the same effect closer to home, though probably on a smaller scale.

"Yet Evans and Reimer's research also points to one very positive impact of the open source movement [sic] that is sometimes overlooked in the debate about scholarly publications. Researchers in the developing world, where research funding and libraries are not as robust as they are in wealthier countries, were far more likely to read and cite open source articles."

NSF/U.CHICAGO:And it will be interesting to test for the same effect comparing the Harvards and the Have-Nots in the US -- but a more realistic estimate might come from looking at immediate OA (IF) rather than just embargoed OA (DF).

"The University of Chicago team concludes that outside the developed world, the open source movement [sic] 'widens the global circle of those who can participate in science and benefit from it.'"

NSF/U.CHICAGO:It would be interesting to hear the authors of this NSF/Chicago press release -- or E & R, for that matter -- explain how this paradoxical "preference" for paid access over free access was tested during the access embargo period...

"So while some scientists and scholars may chose to pay for scientific publications even when free publications are available, their colleagues in other parts of the world may find that going with open source works [sic] is the only choice they have."

Stevan Harnad

American Scientist Open Access Forum

Tuesday, January 20. 2009

Learned Society Survey On Open Access Self-Archiving

On Mon, Jan 19, 2009 at 3:15 PM, Sally Morris [SM] (Morris Associates) wrote:

SM:This makes sense. The self-archived versions are supplements, for those who don't have subscription access.

"Sue Thorn and I will shortly be publishing a report of a research study on the attitudes and behaviour of 1368 members of UK-based learned societies in the life sciences.

"72.5% said they never used self-archived articles when they had access to the published version"

SM:This is an odd category: Wouldn't one have to know what percentage of those articles -- to which these respondents did not have subscription access -- in fact had self-archived versions at all? (The global baseline for spontaneous self-archiving is around 15%.)

"3% did so whenever possible, 10% sometimes and 14% rarely. When they did not have access to the published version, 53% still never accessed the self-archived version"

The way it is stated above, it sounds as if the respondents knew there was a self-archived version, but chose not to use it. I would strongly doubt that...

SM:That 16% sounds awfully close to the baseline 15% of articles for which self-archived access is possible at all, because a self-archived supplement exists. In that case, the right description would be that 100%, not 16%, did so. (But I rather suspect the questions were yet again posed in such an ambiguous way that it is impossible to sort any of this out.)

"16% did so whenever possible"

SM:To get responses on self-archived content, you have to very carefully explain to your respondents what is and is not meant by self-archived content: Free online versions, not those you or your institution have to pay subscription tolls to access.

"16% sometimes and 15% rarely. However, 13% of references were not in fact to self-archiving repositories - they included Athens, Ovid, Science Direct and ISI Web of Science/Web of Knowledge."

Stevan Harnad

American Scientist Open Access Forum

Sunday, October 5. 2008

Suber/Harnad statement in support of the investigative work of Richard Poynder

Richard Poynder, a distinguished scientific journalist specializing in online-era scientific/scholarly communication and publication, has been the ablest, most prolific and most probing chronicler of the open access movement from its very beginning. He is widely respected for his independence, even-handedness, analysis, careful interviews, and detailed research.

Richard is currently conducting a series of investigations on the peer review practices of some newly formed open access journals and their publishers. In one case, when a publisher would not talk to him privately, Richard made his questions public in the American Scientist Open Access Forum:

"Help sought on OA publisher Scientific Journals International"

That posting elicited public and private threats of a libel suit and accusations of racism.

"Lies, fear and smear campaigns against SJI and other OA journals"

Those groundless threats and accusations appear to us to be attempts to intimidate. Moreover, Richard is being portrayed as an opponent of open access, which he is not. He is an even-handed, critically minded analyst of the open access movement (among other things), and his critical investigations are healthy for open access.

He has interviewed us both, at length. While the resulting pictures were largely favorable, he didn't hesitate to probe our weaknesses and the objections others have raised to our respective methods or styles of work. This kind of critical scrutiny is essential to a new and fast-growing movement and does not imply hostility to the subjects of his investigation or opposition to open access.

Trying to suppress Richard Poynder's investigations through threats of legal action is contemptible. We hope that the friends of open access in the legal community will attest to the lawfulness of his inquiries and that all friends of open access will attest to the value and legitimacy of his investigative journalism.

Peter Suber and Stevan Harnad

Friday, August 29. 2008

On Eggs and Citations

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

Failing to observe a platypus laying eggs is not a demonstration that the platypus does not lay eggs.

You have to actually observe the provenance, ab ovo, of those little newborn platypuses, if you want to demonstrate that they are not being engendered by egg-laying.

Failing to observe a significant OA citation Advantage within a year of publication (or a year and a half -- or longer, as the case may be) with randomized OA does not demonstrate that the many studies that do observe a significant OA citation Advantage with nonrandomized OA are simply reporting self-selection artifacts (i.e., selective provision of OA for the more highly citable articles).

To demonstrate the latter, you first have to replicate the OA citation Advantage with nonrandomized OA and then demonstrate that randomized OA eliminates that OA citation Advantage, in both cases on the same or a comparable sample.

Otherwise, you are simply comparing apples and oranges (or eggs and expectations, as the case may be) in reporting a failure to observe a significant OA citation Advantage in a first-year (or first 1.5-year) sample with randomized OA -- along with a failure to observe a significant OA citation Advantage for nonrandomized OA either, for the same sample (on the grounds that the nonrandomized OA subsample was too small):

The many reports of the nonrandomized OA Citation Advantage are based on samples that were sufficiently large, and on a sufficiently long time-scale (almost never as short as a year) to detect a significant OA Citation Advantage.

A failure to observe a significant effect with small, early samples, on short time-scales -- whether randomized or nonrandomized -- is simply that: a failure to observe a significant effect. Keep testing till the size and duration of your sample of randomized and nonrandomized OA is big enough to test your self-selection hypothesis (i.e., comparable with the other studies that have detected the effect).

Meanwhile, note that (as other studies have likewise found), although a year proved too short to observe a significant OA citation Advantage for randomized (or nonrandomized) OA, it did prove long enough to observe a significant OA download Advantage for randomized OA -- and that other studies have also reported that early download advantages correlate significantly with later significant citation advantages.

Just as mating more is likely to lead to more progeny for platypuses (by whatever route) than mating less, so accessing and downloading more is likely to lead to more citations for papers than accessing and downloading less.

Stevan Harnad

American Scientist Open Access Forum

Saturday, August 9. 2008

Estimating Annual Growth in OA Repository Content

SUMMARY: Re: Deblauwe, F. (2008) OA Academia in Repose: Seven Academic Open-Access Repositories Compared: A useful way to benchmark OA progress would be to focus on OA's target content -- peer-reviewed scientific and scholarly journal articles -- and to indicate, year by year, the proportion of the total annual output of the content-providers, rather than just absolute annual deposit totals. The OA content-providers are universities and research institutions. The denominator for all measures should be the number of articles the institution publishes in a given year, and the numerator should be the number of articles published in that year (full-texts) that are deposited in that institution's Institutional Repository (IR). (If an institution does not know its own annual published articles output -- as is likely, since such record-keeping is one of the many functions that the OA IRs are meant to perform -- an estimate can be derived from the Institute of Scientific Information's (ISI's) annual data for that institution.)

This is a useful beginning in the analysis of the growth of Open Access (OA), but it is mostly based on central collections of many different kinds of content.

Deblauwe, Francis (2008) OA Academia in Repose: Seven Academic Open-Access Repositories Compared

A useful way to benchmark OA progress would be to focus on OA's target content -- this would be, first and foremost, peer-reviewed scientific and scholarly journal articles -- and to indicate, year by year, the proportion of the total annual output of the content-providers, rather than just absolute annual deposit totals.

The OA content-providers are universities and research institutions. The denominator for all measures should be the number of articles the institution publishes in a given year, and the numerator should be the number of articles published in that year (full-texts) that are deposited in that institution's Institutional Repository (IR).

Just counting total deposits, without specifying the year of publication, the year of deposit, and the total target output of which they are a fraction (as well as making sure they are article full-texts rather than just metadata) is only minimally informative.
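The ratio described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any repository's actual software: the `Deposit` record and its fields are hypothetical stand-ins for whatever metadata an IR exposes, and the annual output figures are invented. Only full-text journal articles are counted, by year of publication, and each year's count is divided by that year's total output.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Deposit:
    # Hypothetical repository record; field names are illustrative only.
    pub_year: int          # year the article was published
    full_text: bool        # True if the full text (not just metadata) is deposited
    journal_article: bool  # True if it is a peer-reviewed journal article


def annual_oa_share(deposits, articles_published_per_year):
    """Fraction of each year's published articles whose full texts are in the IR."""
    deposited = Counter(
        d.pub_year for d in deposits if d.full_text and d.journal_article
    )
    return {
        year: deposited[year] / total
        for year, total in articles_published_per_year.items()
        if total > 0
    }


deposits = [
    Deposit(2006, True, True),
    Deposit(2006, False, True),  # metadata only: not counted
    Deposit(2007, True, True),
    Deposit(2007, True, False),  # not a journal article: not counted
    Deposit(2007, True, True),
]
published = {2006: 4, 2007: 5}  # hypothetical annual article output
print(annual_oa_share(deposits, published))
```

Counting by publication year and dividing by annual output is what makes the figures comparable across institutions and years; an absolute deposit total would not show what fraction of the target content has been made OA.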

Absolute totals for Central Repositories (CRs), based on open-ended input from distributed institutions, are even less informative, as there is no indication of the size of the total output, hence what fraction of that has been deposited.

If an institution does not know its own annual published articles output -- as is likely, since such record-keeping is one of the many functions that the OA IRs are meant to perform -- an estimate can be derived from the Institute of Scientific Information's (ISI's) annual data for that institution. The estimate is then simple: Determine what proportion of the full-texts of the annual ISI items for that institution are in the IR. (ISI does not index everything, but it probably indexes the most important output, and this ratio is hence an estimate of what proportion of the most important output is being made OA annually by that institution).
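The ISI-based estimate amounts to a set intersection. A hedged Python sketch, assuming each ISI-indexed item and each IR full text can be matched on a shared identifier such as a DOI (in practice not every record has one, and matching may require titles or other metadata); the identifiers below are made up, and `isi_coverage` is a hypothetical helper name.

```python
def isi_coverage(isi_ids, ir_full_text_ids):
    """Share of one year's ISI-indexed items for an institution found as full texts in its IR."""
    isi = {i.strip().lower() for i in isi_ids}
    ir = {i.strip().lower() for i in ir_full_text_ids}
    if not isi:
        raise ValueError("no ISI-indexed items for this year")
    return len(isi & ir) / len(isi)


isi_2007 = ["10.1000/a1", "10.1000/a2", "10.1000/a3", "10.1000/a4"]
ir_2007 = ["10.1000/A2", "10.1000/a4", "10.1000/x9"]  # x9 is not ISI-indexed
print(f"{isi_coverage(isi_2007, ir_2007):.0%}")
```

DOIs are case-insensitive, which is why the sketch lowercases them before matching; IR items that ISI does not index are simply ignored by the ratio.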

This calculation could easily be done for the only university IR among the 7 analyzed above, Cambridge University's. It was probably chosen because it is the IR containing the largest total number of items (see ROAR) and one of the few IRs with a total item count big enough to be comparable with the total counts of the multi-institutional collections such as Arxiv. However, it is unclear what proportion of the items in Cambridge's IR are the full-texts of journal articles -- and what percentage of Cambridge's annual journal article output this represents.

CERN is an institution, but not a multidisciplinary university: High Energy Physics only. CERN has, however, done the recommended estimate of its annual OA growth in 2006 and found its IR "Three Quarters Full and Counting" (http://library.cern.ch/HEPLW/12/papers/2/).

CERN, moreover, is one of the 25 institutions, universities and departments that have mandated deposit in their IR. Those are also the IRs that are growing the fastest.

(Deblauwe notes that "Resources... remain a big issue, e.g., in 2006, after the initially-funded three years, DSpace@Cambridge's growth rate slowed down due to underestimation of the expenses and difficulty of scaling up." I would suggest that what Cambridge needs is not more resources for the IR but a deposit mandate, like Southampton's, QUT's, Minho's, CERN's, Harvard's, Stanford's, and the rest of the 25 mandates to date: See ROARMAP.)

Stevan Harnad

American Scientist Open Access Forum

Thursday, July 31. 2008

Davis et al's 1-year Study of Self-Selection Bias: No Self-Archiving Control, No OA Effect, No Conclusion

The following is an expanded, hyperlinked version of a BMJ critique of:

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

Davis, PN, Lewenstein, BV, Simon, DH, Booth, JG, & Connolly, MJL (2008) Open access publishing, article downloads, and citations: randomised controlled trial British Medical Journal 337: a568

Overview (by SH):

Davis et al.'s study was designed to test whether the "Open Access (OA) Advantage" (i.e., more citations to OA articles than to non-OA articles in the same journal and year) is an artifact of a "self-selection bias" (i.e., better authors are more likely to self-archive or better articles are more likely to be self-archived by their authors).

The control for self-selection bias was to select randomly which articles were made OA, rather than having the author choose. The result was that a year after publication the OA articles were not cited significantly more than the non-OA articles (although they were downloaded more).

The authors write:

"To control for self selection we carried out a randomised controlled experiment in which articles from a journal publisher’s websites were assigned to open access status or subscription access only"

The authors conclude:

"No evidence was found of a citation advantage for open access articles in the first year after publication. The citation advantage from open access reported widely in the literature may be an artefact of other causes."

Commentary:

To show that the OA advantage is an artefact of self-selection bias (or of any other factor), you first have to produce the OA advantage and then show that it is eliminated by eliminating self-selection bias (or any other artefact).

This is not what Davis et al. did. They simply showed that they could detect no OA advantage one year after publication in their sample. This is not surprising, since most other studies, some based on hundreds of thousands of articles, don't detect an OA advantage one year after publication either. It is too early.

To draw any conclusions at all from such a 1-year study, the authors would have had to do a control condition, in which they managed to find a sufficient number of self-selected, self-archived OA articles (from the same journals, for the same year) that do show the OA advantage, whereas their randomized OA articles do not. In the absence of that control condition, the finding that no OA advantage is detected in the first year for this particular sample of 247 out of 1619 articles in 11 physiological journals is completely uninformative.
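The control condition described above can be sketched as two OA-advantage ratios computed against the same non-OA baseline, one for randomized OA and one for self-selected OA. A minimal Python sketch, assuming the advantage is measured as a ratio of mean citations (one of several possible measures); the citation counts are hypothetical, and a real test would also need significance testing on samples large and old enough to show an effect.

```python
from statistics import mean


def oa_advantage(oa_citations, non_oa_citations):
    """Ratio of mean citations for OA vs non-OA articles (1.0 means no advantage)."""
    return mean(oa_citations) / mean(non_oa_citations)


# Hypothetical citation counts for one journal-year sample, invented for illustration.
non_oa = [2, 0, 3, 1, 4, 2]
randomized_oa = [3, 1, 4, 2, 5, 3]
self_selected_oa = [4, 2, 5, 3, 6, 4]

adv_random = oa_advantage(randomized_oa, non_oa)
adv_self = oa_advantage(self_selected_oa, non_oa)

# A self-selection artefact would show up as adv_self exceeding adv_random;
# the comparison only means something once adv_self is itself detectably above 1.0.
print(f"randomized OA advantage:    {adv_random:.2f}")
print(f"self-selected OA advantage: {adv_self:.2f}")
```

Only if the self-selected advantage is reliably above 1.0 and the randomized one is significantly smaller would the comparison support a self-selection artefact; with no detectable self-selected advantage, the comparison is uninformative.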

The authors did find a download advantage within the first year, as other studies have found. This early download advantage for OA articles has also been found to be correlated with a citation advantage 18 months or more later. The authors try to argue that this correlation would not hold in their case, but they give no evidence (because they hurried to publish their study, originally intended to run four years, three years too early).

(1) The Davis study was originally proposed (in December 2006) as intended to cover 4 years:

Davis, PN (2006) Randomized controlled study of OA publishing (see comment)

It has instead been released after a year.

(2) The Open Access (OA) Advantage (i.e., significantly more citations for OA articles, always comparing OA and non-OA articles in the same journal and year) has been reported in all fields tested so far, for example:

Hajjem, C., Harnad, S. and Gingras, Y. (2005) Ten-Year Cross-Disciplinary Comparison of the Growth of Open Access and How it Increases Research Citation Impact. IEEE Data Engineering Bulletin 28(4) pp. 39-47.

(3) There is always the logical possibility that the OA advantage is not a causal one, but merely an effect of self-selection: The better authors may be more likely to self-archive their articles and/or the better articles may be more likely to be self-archived; those better articles would be the ones that get more cited anyway.

(4) So it is a very good idea to try to control methodologically for this self-selection bias: The way to control it is exactly as Davis et al. have done, which is to select articles at random for being made OA, rather than having the authors self-select.

(5) Then, if it turns out that the citation advantage for randomized OA articles is significantly smaller than the citation advantage for self-selected-OA articles, the hypothesis that the OA advantage is all or mostly just a self-selection bias is supported.

(6) But that is not at all what Davis et al. did.

(7) All Davis et al. did was to find that their randomized OA articles had significantly higher downloads than non-OA articles, but no significant difference in citations.

(8) This was based on the first year after publication, when most of the prior studies on the OA advantage likewise find no significant OA advantage, because it is simply too early: the early results are too noisy! The OA advantage shows up in later years (1-4).

(9) If Davis et al. had been more self-critical, seeking to test and perhaps falsify their own hypothesis, rather than just to confirm it, they would have done the obvious control study, which is to test whether articles that were made OA through self-selected self-archiving by their authors (in the very same year, in the very same journals) show an OA advantage in that same interval. For if they do not, then of course the interval was too short, the results were released prematurely, and the study so far shows nothing at all: It is not until you have actually demonstrated an OA advantage that you can estimate how much of that advantage might in reality be due to a self-selection artefact!

(10) The study shows almost nothing at all, but not quite nothing, because one would expect (based on our own previous study, which showed that early downloads, at 6 months, predict enhanced citations at a year and a half or later) that Davis's increased downloads too would translate into increased citations, once given enough time.

Brody, T., Harnad, S. and Carr, L. (2006) Earlier Web Usage Statistics as Predictors of Later Citation Impact. Journal of the American Society for Information Science and Technology (JASIST) 57(8) pp. 1060-1072.

(11) The findings of Michael Kurtz and collaborators are also relevant in this regard. They looked only at astrophysics, which is special, in that (a) it is a field with only about a dozen journals, to which every research-active astronomer has subscription access -- these days they also have free online access via ADS -- and (b) it is a field in which most authors self-archive their preprints very early in arxiv -- much earlier than the date of publication.

Kurtz, M. J. and Henneken, E. A. (2007) Open Access does not increase citations for research articles from The Astrophysical Journal. Preprint deposited in arXiv September 6, 2007.

(12) Kurtz & Henneken, too, found the usual self-archiving advantage in astrophysics (i.e., about twice as many citations for OA papers as for non-OA), but when they analyzed its cause, they found that most of the cause was the Early Advantage of access to the preprint, as much as a year before publication of the (OA) postprint. In addition, they found a self-selection bias (for prepublication preprints -- which is all that were involved here, because, as noted, in astrophysics, after publication, everything is OA): The better articles by the better authors were more likely to have been self-archived as preprints.

(13) Kurtz's results do not generalize to all fields, because it is not true of other fields either that (a) they already have 100% OA for their published postprints, or that (b) many authors tend to self-archive preprints before publication.

(14) However, the fact that early preprint self-archiving (in a field that is 100% OA as of postprint publication) is sufficient to double citations is very likely to translate into a similar effect, in a non-OA, non-preprint-archiving field, if one reckons on the basis of the one-year access embargo that many publishers are imposing on the postprint. (The yearlong "No-Embargo" advantage provided by postprint OA in other fields might not turn out to be so big as to double citations, as the preprint Early Advantage in astrophysics does, because any potential prepublication advantage is lost, and after publication there is at least the subscription access to the postprint; but the postpublication counterpart of the Early Advantage for postprints that are either not self-archived or embargoed is likely to be there too.)

(15) Moreover, the preprint OA advantage is primarily Early Advantage, and only secondarily Self-Selection.

(16) The size of the postprint self-selection bias would have been what Davis et al. tested -- if they had done the proper control, and waited long enough to get an actual OA effect to compare against. (Their regression analyses simply show that exactly as they detected no citation advantage in their sample and interval for the random OA articles, they likewise detected no citation advantage for the self-selected self-archived OA articles in their sample and interval: this hardly constitutes evidence that the (undetected) OA advantage is in reality a self-selection artefact!)

(17) We had reported in an unpublished 2007 pilot study that there was no statistically significant difference between the size of the OA advantage for mandated (i.e., obligatory) and unmandated (i.e., self-selected) self-archiving:

Hajjem, C & Harnad, S. (2007) The Open Access Citation Advantage: Quality Advantage Or Quality Bias? Preprint deposited in arXiv January 22, 2007.

(18) We will soon be reporting the results of a 4-year study on the OA advantage in mandated and unmandated self-archiving that confirms these earlier findings: Mandated self-archiving is like Davis et al.'s randomized OA, but we find that it does not reduce the OA advantage at all -- once enough time has elapsed for there to be an OA Advantage at all.

Stevan Harnad

American Scientist Open Access Forum


The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.


The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access Provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)
