
Saturday, August 28. 2010

Testing Jan Velterop's Hunch About Green and Gold Open Access

Comparing Green and Gold

Yassine Gargouri

&

Stevan Harnad

Cognition/Communication Laboratory

Cognitive Sciences Institute

Université du Québec à Montréal

Jan Velterop has posted his hunch that, of the articles made OA each year today, most will prove to be Gold OA journal articles once those self-archived (Green OA) articles that also happen to be published in Gold OA journals are counted as Gold rather than Green:

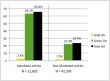

SUMMARY: Velterop (2010) has conjectured that more articles are being made Open Access (OA) by publishing them in an OA journal ("Gold OA") than by publishing them in a conventional journal and self-archiving them ("Green OA"), even where self-archiving is mandatory. Of our sample of 11,801 articles published 2002-2008 by authors at four institutions that mandate self-archiving, 65.6% were self-archived, as required (63.2% Green only, 2.4% both Green and Gold). For 42,395 keyword-matched, non-mandated control articles, the percentage OA was 21.9% Green and 1.5% Gold. Velterop’s conjecture is the wrongest of all precisely where OA is mandated.

JV: “Is anyone… aware of credible research that shows how many articles (in the last 5 years, say), outside physics and the Arxiv preprint servers, have been made available with OA exclusively via 'green' archiving in repositories, and how many were made available with OA directly ('gold') by the publishers (author-side paid or not)?

“The 'gold' OA ones may of course also be available in repositories, but shouldn't be counted for this purpose, as their OA status is not due to them being 'green' OA.

“It is my hunch (to be verified or falsified) that publishers (the 'gold' road) have actually done more to bring OA about than repositories, even where mandated (the 'green' road).”-- J. Velterop, American Scientist Open Access Forum, 25 August 2010

The results turn out to go strongly contrary to Velterop’s hunch.

Our ongoing project is comparing citation counts for mandated Green OA articles with those for non-mandated Green OA articles, all published in journals indexed by the Thomson Reuters ISI database (science and social-science/humanities). (We use only the ISI-indexed sample because the citation counts for our comparisons between OA and non-OA are all derived from ISI.)

The four mandated institutions were Southampton University (ECS), Minho, Queensland University of Technology and CERN.

Out of our total set of 11,801 mandated, self-archived OA articles, we first set aside all those (279) articles that had been published in Gold OA journals (i.e., the journals in the DOAJ-indexed subset of ISI-indexed journals) because we were primarily interested in testing the OA citation advantage, which is based on comparing the citation counts of OA articles versus non-OA articles published in the same journal and year. (This can only be done in non-OA journals, because OA journals have no non-OA articles.) This left only the Green OA articles published in non-Gold journals.

We then extracted, as control articles for each article in this purely Green OA subset, 10 keyword-matched articles published in the same journal and year. The total number of articles in this control sample for the years 2002-2008 was 41,755. (Our preprint for PLoS ONE, Gargouri et al. 2010, covers a somewhat smaller, earlier period: 2002-2006, with 20,982 control articles.)
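The control-selection step just described can be sketched roughly as follows. This is a minimal illustration only, assuming a hypothetical `Article` record and a simple shared-keyword count as the matching criterion; the text does not say how keyword matches were actually scored, so the ranking rule is an assumption. The sketch also applies the earlier exclusion of Gold OA journals, which have no non-OA articles to serve as controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    # Hypothetical record type; field names are illustrative only.
    article_id: str
    journal: str
    year: int
    keywords: frozenset


def select_controls(target, candidates, gold_journals, k=10):
    """Return up to k keyword-matched controls from the target's journal and year.

    Assumed matching rule: rank same-journal, same-year candidates by the
    number of keywords they share with the target (ties broken by id), and
    drop candidates that share no keyword at all.
    """
    if target.journal in gold_journals:
        # Gold OA journals have no non-OA articles to compare against.
        return []
    scored = []
    for c in candidates:
        if c.article_id == target.article_id:
            continue
        if c.journal != target.journal or c.year != target.year:
            continue
        shared = len(target.keywords & c.keywords)
        if shared > 0:
            scored.append((shared, c))
    # Most shared keywords first; ties broken deterministically by id.
    scored.sort(key=lambda pair: (-pair[0], pair[1].article_id))
    return [c for _, c in scored[:k]]


target = Article("m1", "J. Example", 2005, frozenset({"ocean", "salinity"}))
candidates = [
    Article("c1", "J. Example", 2005, frozenset({"ocean"})),
    Article("c2", "J. Example", 2005, frozenset({"ocean", "salinity"})),
    Article("c3", "J. Example", 2006, frozenset({"ocean", "salinity"})),  # wrong year
    Article("c4", "J. Example", 2005, frozenset({"genome"})),  # no shared keyword
]
print([c.article_id for c in select_controls(target, candidates, gold_journals=set())])
# prints ['c2', 'c1']
```

Ranking by overlap and then truncating at k means that when a journal-year has fewer than 10 suitable matches, fewer than 10 controls are returned, which is one plausible reason a control total can fall short of exactly ten per article.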

Next we used a robot to check what percentage of these unmandated control articles was OA (freely accessible on the web).

Of our total set of 11,801 mandated, self-archived articles, 279 articles (2.4%) had been published in the 63 Gold OA journals (2.6%) among the 2,391 ISI-indexed journals in which the authors from our four mandated institutions had published in 2002-2008. Both these estimates of percent Gold OA are about half as big as the total 5% proportion for Gold OA journals among all ISI-indexed journals (active in the past 10 years). To be conservative, we can use the higher figure of 5% as a first estimate of the Gold OA contribution to total OA among all ISI-indexed journals.
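The two Gold OA proportions in the preceding paragraph follow directly from the reported counts. A short arithmetic check, using nothing beyond the figures quoted in the text:

```python
def pct(part, whole):
    """Percentage of `whole` represented by `part`."""
    return 100.0 * part / whole


# Counts reported in the text (four mandated institutions, 2002-2008).
mandated_articles = 11_801
gold_articles = 279          # mandated articles that appeared in Gold OA journals
isi_journals_used = 2_391    # ISI-indexed journals these authors published in
gold_journals_used = 63      # of which Gold OA (DOAJ-indexed)
isi_wide_gold_pct = 5.0      # Gold OA share of all ISI-indexed journals, as quoted

article_gold_pct = pct(gold_articles, mandated_articles)
journal_gold_pct = pct(gold_journals_used, isi_journals_used)

print(f"Gold share of articles: {article_gold_pct:.1f}%")   # Gold share of articles: 2.4%
print(f"Gold share of journals: {journal_gold_pct:.1f}%")   # Gold share of journals: 2.6%
# Each estimate relative to the ISI-wide 5% figure ("about half"):
print(f"{article_gold_pct / isi_wide_gold_pct:.2f}, {journal_gold_pct / isi_wide_gold_pct:.2f}")  # 0.47, 0.53
```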


Now, in our sample, we find that out of the total number of articles published in ISI-indexed journals by authors from our four mandated institutions between 2002-2008 (11,801 articles), about 65.6% of them (7,736 articles) had indeed been made Green OA through self-archiving by their authors, as mandated (7,457 or 63.2% Green only, and 279 or 2.4% both Green and Gold). In contrast, for our 42,395 keyword-matched, non-mandated control articles, the percentage OA was 23.4% (21.9% Green and 1.5% Gold).
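The Green/Gold breakdown for the mandated sample can be recomputed from the counts given above. For the control sample only percentages are reported, so they are used as given rather than derived.

```python
def pct(part, whole):
    """Percentage of `whole` represented by `part`."""
    return 100.0 * part / whole


# Mandated sample (counts as reported in the text).
total_mandated = 11_801
green_only = 7_457
green_and_gold = 279
self_archived = green_only + green_and_gold   # every mandated OA article is Green

print(self_archived)                                                  # 7736
print(f"{pct(self_archived, total_mandated):.1f}% OA")                # 65.6% OA
print(f"{pct(green_only, total_mandated):.1f}% Green only")           # 63.2% Green only
print(f"{pct(green_and_gold, total_mandated):.1f}% Green and Gold")   # 2.4% Green and Gold

# Control sample: only percentages are reported, so they are used as given.
control_green_pct, control_gold_pct = 21.9, 1.5
control_oa_pct = round(control_green_pct + control_gold_pct, 1)
print(f"{control_oa_pct}% OA in controls")   # 23.4% OA in controls
```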


The difference between our figures and those of Björk et al. (2010) is probably due to different discipline blends in the samples (see Björk et al.'s Figure 4, where Gold exceeds Green in bio-medicine), but whichever overall results one chooses – whether our 21.9% Green and 1.5% Gold or Björk et al.’s 14% Gold and 6.6% Green (or even their extended 11.9% Green and 8.5% Gold), the figures fail to bear out Velterop’s hunch that:

“publishers (the 'gold' road) have actually done more to bring OA about than repositories, even where mandated (the 'green' road).”

Moreover (and this is really the most important point of all), Velterop's hunch is the wrongest of all precisely where OA is mandated, for there the percent Green is over 60%, and headed toward 100%. That is the real power of Green OA mandates.

Gargouri Y, Hajjem C, Larivière V, Gingras Y, Brody T, Carr L, Harnad S (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5(10): e13636.

Björk B-C, Welling P, Laakso M, Majlender P, Hedlund T, et al. (2010) Open Access to the Scientific Journal Literature: Situation 2009. PLOS ONE 5(6): e11273.

Wednesday, June 2. 2010

"The Age of Open Access" at Humanities and Social Science Congress in Montreal

The Congress of the Humanities and Social Sciences at Concordia University in Montreal on Monday May 31 featured a symposium on The Age of Open Access: New paradigm for universities and researchers. To see the web-stream of the session, click here.

John Willinsky (Stanford University) and Heather Joseph (Scholarly Publishing and Academic Resources Coalition, Washington) reported on the progress and promise of OA; Michael Geist (University of Ottawa) discussed the more general question of copyright in the digital age, and Gerald Beasley (Concordia University) reported on the very productive faculty consultations that led up to the adoption in April of the Concordia mandate, Canada's first university-wide Green Open Access mandate (motivated in part to coincide with this very Congress, and strongly encouraged by the Congress's Academic Convenor, Ronald Rudin, Professor of History at Concordia).

Most of the subsequent discussion from the audience focused on the funding of Gold OA publishing rather than on the mandating of Green OA self-archiving that has been spearheaded among US universities by Harvard's Robert Darnton (who also spoke at Congress) and Stuart Shieber and among Canadian universities by Concordia's Ronald Rudin and Gerald Beasley. Yet it is Green OA Mandates that will usher in "The Age of Open Access" -- which, all the presenters agreed, has not yet arrived!

On Wednesday, June 2, there are two further OA events at Congress:

"Open Access: Transforming research in the developing world"

Access to knowledge is fundamental to all aspects of human development, from health to food security, and from education to social capacity building. Yet access to academic publications is severely restricted for many developing countries. As well, the prohibitive cost of publishing and distributing journals in the developing world means much of the research done there remains ‘invisible’ to the rest of the world. This panel will bring together experts to explore the potential impact of Open Access on the developing world.

Moderated by Haroon Akram-Lodhi (Trent University), this panel will bring together Buhle Mbambo-Thata (UNISA Library, South Africa), Leslie Chan (University of Toronto) and Hebe Vessuri (Venezuelan Institute of Scientific Research). It will be followed by a celebration of Concordia University’s commitment to Open Access at the Libraries, 5pm - 7pm, at the Webster Library (LB Building 2nd Floor).

Tuesday, June 1. 2010

Three Caveats on Professor Darnton's Three Jeremiads

Professor Robert Darnton of Harvard University has given a splendid (if a trifle US-centric, indeed Harvard-centric!) talk at the Congress of the Humanities and Social Sciences at Concordia University.

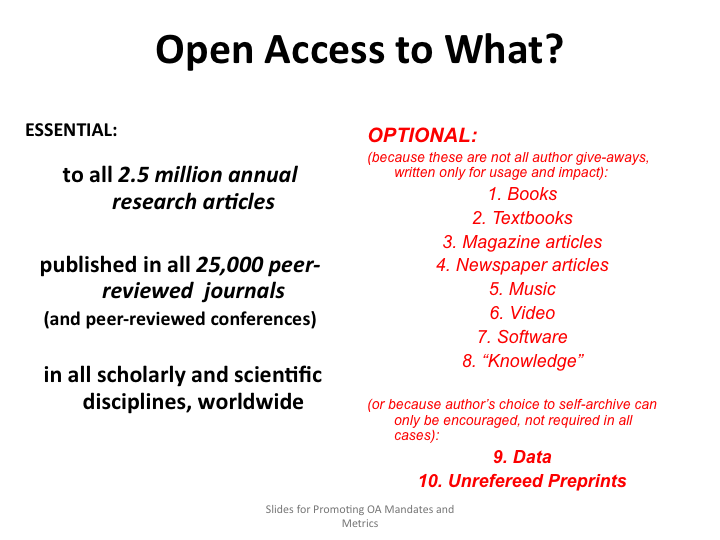

Caveat 1: Journal Articles Versus Books. Professor Darnton's three jeremiads (one on journal articles, two on books) are all spot-on -- but best kept separate, partly because only journal articles are, strictly speaking, Open Access (OA) issues, but mostly because the journal article access problem already has a straightforward solution -- and Harvard was the first university in the US (though only the 16th worldwide!) to adopt it: Mandating "Green" OA self-archiving of all journal articles published by its authors.

In contrast, book OA cannot be mandated. Journal article OA can be, for the simple reason that all journal article authors already wish to give away their articles free for all users online, rather than leaving them accessible only to users at universities that can afford to subscribe to the journal in which they were published; this is far from being true of the authors of all or even most books. Hence trying to treat or even conceptualize journal article access and book access as the same problem would handicap the journal article access problem -- which already has a simple, immediate, and complete solution (OA mandates) -- with the complications of the book access problem, which does not.

Caveat 2: The "Give-Away/Buy-Back" Argument. Nor are the importance and effectiveness of the mandate solution to the journal article access problem, which Harvard has so successfully championed, diminished by the fact that one of the arguments many (including Professor Darnton) have invoked in its favour happens to be specious. The need and wish on the part of the authors of refereed journal articles (for the sake of both research progress and the progress of their own careers) to make them accessible to all would-be users -- rather than leaving them accessible only to those users whose institutions can afford to subscribe -- is already decisive. There is no need to add to it the "we give it to publishers for free and then we have to buy it back" argument, because it's just not valid:

When a university subscribes to a journal, it does not do so in order to buy back its own published research articles: It does so in order to buy in the research articles published by other universities! In that respect, the transaction is the same as it is with books -- except that book authors may be seeking royalties whereas journal article authors are not.

So this specious argument -- though it does well express the frustrations of university libraries because of the way their swelling annual serials budgets keep cannibalizing their book budgets -- is not needed in order to make the case for mandating journal article OA: The fact that mandating journal article OA is feasible and effective, that it maximizes article usage and impact, and that it is beneficial and desirable to all journal article authors is already argument enough, and decisive.

Caveat 3: Funding Gold OA Without Mandating Green OA. Similarly unnecessary is continued worry about university journal budgets -- once universities universally follow Harvard's example and mandate Green OA self-archiving. For once all journal articles are freely accessible online, whether and when to cancel unaffordable journals is no longer the agonizing problem it is now: The inelastic need is satisfied by the Green OA version, hence the subscription demand (and the resultant cannibalization of book budgets by journal budgets) is no longer inelastic.

In addition to its Green OA self-archiving mandate, Harvard has also launched the Compact for Open-Access Publishing Equity (COPE), a commitment to provide some money to pay the costs of Harvard authors who wish to publish in "Gold" OA journals (journals that make their articles OA). The purpose of funding COPE is to encourage publishers to make a transition to Gold Open Access publishing. This is commendable, but it needs to be noted that it is not really urgent or necessary at this time, when journal publication is still being abundantly funded by universities' annual subscriptions -- which cannot be cancelled until and unless Green OA first prevails!

So committing spare funds that Harvard may have available to pay for Gold OA for some of Harvard's article output is of course welcome, given that Harvard has already mandated Green OA for all of its article output. But alas COPE has now inspired membership by other universities that are emulating Harvard only in committing funds to pay for Gold OA, not in mandating Green OA, thinking that they are thereby doing their bit for OA! The Harvard model would be much more useful for the universities worldwide that are keen to emulate it if it were made clear that COPE should only be committed as a supplement to a Green OA mandate, not as a substitute for it. (See some of my own jeremiads on this matter.)

Fortunately, Concordia University, the host for this Congress, has adopted a Green OA mandate before even thinking about whether or not it has any extra cash to commit to COPE!

(If and when universal Green OA frees universities to cancel journals, it will by the same token free that windfall cancellation cash to pay for Gold OA.)

Stevan Harnad

American Scientist Open Access Forum

Thursday, May 20. 2010

On Not Conflating Open Data (OD) With Open Access (OA)

Anon: "I hope you don’t mind my asking you for guidance – I follow the IR list and you are obviously expert in this area. I am having a debate with a colleague who argues that forcing researchers to give up their data to archives and repositories breaches their autonomy and control over intellectual property. He goes so far as to position the entire open access movement in the camp of the neoliberal agenda of commodifying knowledge for capitalist dominated state authority (at the expense of researchers – often very junior team members – who actually create the data)."


It is important to distinguish OA (Open Access to refereed research journal articles) from Open Data (Open Access to research data, OD). All researchers, without exception, want to maximise access to their refereed research findings as soon as they are accepted for publication by a refereed journal, in order to maximise their uptake, usage and impact. Otherwise they would not be providing access to them, by publishing them. The impact of their research findings is what their careers, as well as research progress, are all about.

But raw data are not research findings until they have been data-mined and analysed. Hence, by the same token (except in rare cases), researchers are not merely data-gatherers, collecting data so that others can go on to do the data-mining and analysis: In science especially, their data-collection is driven by their theories, and their attempts to test and validate them. In the humanities too, the intellectual contributions are rarely databases themselves; the scholarly contributions are the author's analysis and interpretation of their data -- and these are often reported in books (long in the writing), which are not part of OA's primary target content, because books are definitely not all or mostly giveaway content, written solely to maximise their uptake, usage and impact (at least not yet). [See Figure, below.]

In short, with good reason, OD is not immediate, exception-free author give-away content, whereas OA is. It may be reasonable, when data-gathering is funded, that the funders stipulate how long the data may be held for exclusive data-analysis by the fundee, before it must be made openly accessible. But, in general, primary research data -- just like books, software, audio, video, and unrefereed research -- are not amenable to OA mandates because there may be good reasons why their creators do not wish to make them OA, at least not immediately. Indeed, that is the reason that all OA mandates, whether by funders or universities, are very specifically restricted to refereed research journal publications.

In the new world of OA mandates, which is merely a PostGutenberg successor to the Gutenberg world of "publish-or-perish" mandates, it is critically important to distinguish carefully what is required (and why) from what is merely recommended (and why).

Anon: "I agree there is a risk of misuse and appropriation of the open access agenda, but that is true for any technology, or any social change more generally".

Researchers' unwillingness to make their laboriously gathered data immediately OA is not just out of fear of misuse and misappropriation. It is much closer to the reason that a sculptor does not do the hard work of mining rock for a sculpture only in order to put the raw rock on craigslist for anyone to buy and sculpt for themselves, let alone putting it on the street corner for anyone to take home and sculpt for themselves. That just isn't what sculpture is about. And the same is true of research (apart from some rare exceptions, like the Human Genome Project, where the research itself is the data-gathering, and the research findings are the data).

Anon: "And I believe researchers generally have more to gain than lose from sharing data but hard evidence on this point – again for data, not outputs, is almost non-existent so far. If you can direct me to any articles or arguments, I would be grateful."

There is no hard evidence on this because -- except in exceptional cases -- it is simply not true. The work of science and scholarship does not end with data-gathering, it begins with it, and motivates it. If funders and universities mandated away the motivation to gather the data, they would not be left with an obedient set of data-gatherers, duly continuing to gather data so that anyone and everyone could then go ahead and data-mine it immediately. They would simply be mandating away much of the incentive to gather the data in the first place.

To put it another way: The embargo on making refereed research articles immediately OA -- the access delay that publishers seek in order to protect their revenue -- is the tail wagging the dog: Research progress and researchers' careers do not exist in the service of publishers' revenues, but vice versa. In stark contrast to this, however, the "embargo" on making primary research data OD is necessary and justified (in most cases) if researchers are to have any incentive for gathering data (and doing research) at all.

The length of the embargo is another matter, and can and should be negotiated by research funders on a field by field or even a case by case basis.

So although it is crucial not to conflate OA and OD (thereby needlessly eliciting author resistance to OA when all they really want to resist is immediate OD), there is indeed a connection between OA and OD, and universal OA will undoubtedly encourage more OD to be provided, sooner, than the current status quo does.

Anon: "An important point in addition is that the archives I work with, while aspiring to openness, cannot adopt full and unqualified open access. Issues of sensitive and confidential data, and consent terms from human research subjects, have to be respected. We strive to make data as open and free as possible, subject to these limits. Typically, agreeing to a licence specifying legal and ethical use is all that is required. So in fact, researchers do retain control, to some extent, over the terms and conditions of reuse when they deposit their data for sharing in data archives."

Yes, of course even OD will need to have some access restrictions, but that is not the point, and that is not why researchers in general have good reason not to be favorably disposed to immediate mandatory OD -- whereas they have no reason at all not to be favorably disposed to immediate mandatory OA.

It is also important to bear in mind that the fundamental motivation for OA is research access and progress, not research archiving and preservation (although those are of course important too). Data must of course be archived and preserved as well, but that, again, is not OD. Closed Access data-archiving would serve that purpose -- and to the extent that researchers store digital data in any form, closed access digital archiving is what all researchers do already. Proposing to help them with data-preservation is not the same thing as proposing that they make their data immediately OD.

Stevan Harnad

American Scientist Open Access Forum

Saturday, April 24. 2010

Canada's 1st Institution-Wide Green OA Mandate; Planet's 90th: Concordia University

[This is Canada's 11th OA Mandate: 8 funder mandates and 2 departmental mandates, but it's Canada's first institution-wide one. Sweden's Blekinge Institute of Technology has also just adopted an institution-wide OA mandate, its second, alongside a funder mandate: See ROARMAP.]

Concordia University Opens its Research Findings to the World; Senate Supports Free Internet Access to Faculty and Student Research

MONTREAL, April 22 (AScribe Newswire) -- Concordia University's academic community has passed a landmark Senate Resolution on Open Access that [requires] all of its faculty and students to make their peer-reviewed research and creative output freely accessible via the internet. Concordia is the first major university in Canada where faculty have given their overwhelming support to a concerted effort to make the full results of their research universally available.

"Concordians have, once again, found a way to share their innovative findings and creativity with communities the world over", says Judith Woodsworth, President and Vice-Chancellor of Concordia. "As befits its role as host of the Congress of the Humanities and Social Sciences next month, our university is now leading the way on this year's Congress theme: Connected Understanding/le savoir branché."

Gerald Beasley, Concordia's University Librarian, was instrumental in the campus-wide dialogue on open access that began more than a year ago. "I am delighted that Senate voted to support the recommendations of all four Faculty Councils and the Council of the School of Graduate Studies. There are only a handful of precedents in North America for the kind of leadership that Concordia faculty have demonstrated by their determination to make publicly-funded research available to all rather than just the minority able to afford the rapidly rising subscription costs of scholarly databases, books and journals."

This past year, Concordia launched Spectrum, an open access digital repository that continues to grow beyond its initial 6,000 dissertations submitted at Concordia, and at its predecessors Sir George Williams University and Loyola College. [In addition to requiring deposit of peer-reviewed journal articles, the] Senate Resolution encourages all of Concordia's researchers to deposit their research and creative work in Spectrum.

Saturday, January 30. 2010

Arxiv Arcana

Nat Gustafson-Sundell wrote:

NGS: "I don't expect local repositories to ever offer quality control."

Of course not. They are merely offering a locus for authors to provide free access to their preprint drafts before submitting them to journals for peer review, and to their final drafts (postprints) after they have been peer-reviewed and accepted for publication by a journal.

Individual institutions cannot peer-review their own research output (that would be in-house vanity-publishing).

And global repositories like arxiv or pubmedcentral or citeseerx or google scholar cannot assume the peer-review functions of the thousands and thousands of journals that are actually doing the peer review today. That would add billions to their costs, making each into one monstrous (generic?) megajournal: near impossible in practice, even if it weren't also totally unnecessary (and irrelevant to OA and its costs).

NGS: "Also, users have said again and again that they prefer discovery by subject, which will be possible for semantic docs in local repositories or better indexes (probably built through better collaborations), but not now."

Search should of course be central and subject-tagged, over a harvested central collection from the distributed local IRs, not local, IR by IR.

(My point was that central deposit is neither necessary nor desirable any longer, either for content-provision or for search.) The optimal system is institutional deposit (mandated by institutions as well as funders) and then central harvesting for search.

NGS: "I agree that it would be great if local repositories were more used, and eventually, the systems will be in place to make it possible, but every study I've seen still shows local repository use to remain disappointingly low, although some universities are doing better than others."

"Use" is ambiguous, as it can refer both to author use (for deposit) and user use (for search and retrieval). We agree that IR-by-IR search makes no sense: users search at the harvester level, not the IR level.

But for the former (low author "use," i.e., low levels of deposit), the solution is already known: Unmandated IRs (i.e., most of the existing c. 1500 IRs) are near empty (of OA's target content, which is preprints and postprints of peer-reviewed journal articles) whereas mandated IRs (c. 150, i.e., 1%!) are capturing (or on the way to capturing) their full annual postprint output.

So the solution is mandates. And the locus of deposit for both institutional and funder mandates should be institutional, not central, so the two kinds of mandates converge rather than compete (requiring multiple deposit of the same paper).

For the special case of arxiv, with its long history of unmandated deposit, a university's IR could import its own remote arxiv deposits (or export its local deposits to arxiv) with software like SWORD, but eventually it is clear that institution-external deposit makes no sense:

Institutions are the universal providers of all peer-reviewed research, funded and unfunded, across all fields. One-stop/one-step local deposit (followed by automatic import, export, and harvesting to/from whatever central services are needed) is the only sensible, scalable and sustainable system, and also the one that is most conducive to the growth of universal OA deposit mandates from institutions, reinforced by funder mandates likewise requiring institutional deposit, rather than discouraged by gratuitously requiring institution-external deposit.
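The deposit-locally, harvest-centrally architecture argued for here can be illustrated with a minimal in-memory sketch. All class and method names (`InstitutionalRepository`, `CentralHarvester`, `deposit`, `harvest`, `search`) and the sample records are hypothetical; in practice repositories expose their metadata to harvesters through a protocol such as OAI-PMH, which this toy model does not implement. What it shows is structural: each paper is deposited once, in its institution's repository, while the central service only harvests and indexes by subject, so repeated harvesting never creates duplicate deposits.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    institution: str
    record_id: str
    title: str
    subjects: tuple


class InstitutionalRepository:
    """Hypothetical local IR: the one place an author deposits a paper."""

    def __init__(self, institution):
        self.institution = institution
        self._records = []

    def deposit(self, record_id, title, subjects):
        record = Record(self.institution, record_id, title, tuple(subjects))
        self._records.append(record)
        return record

    def export_records(self):
        # Stand-in for a metadata-harvesting endpoint; hands out a copy.
        return list(self._records)


class CentralHarvester:
    """Hypothetical cross-institutional service: harvests and indexes, hosts no deposits."""

    def __init__(self):
        # subject -> {(institution, record_id): Record}
        self._by_subject = defaultdict(dict)

    def harvest(self, repo):
        for record in repo.export_records():
            key = (record.institution, record.record_id)
            for subject in record.subjects:
                # Keying by (institution, record_id) makes re-harvesting idempotent.
                self._by_subject[subject.lower()][key] = record

    def search(self, subject):
        hits = self._by_subject.get(subject.lower(), {})
        return sorted(hits.values(), key=lambda r: (r.institution, r.record_id))


inst_a = InstitutionalRepository("inst-A")
inst_b = InstitutionalRepository("inst-B")
inst_a.deposit("a-1", "Mandates and deposit rates", ["Open Access", "repositories"])
inst_b.deposit("b-7", "Citation impact of self-archiving", ["open access"])

hub = CentralHarvester()
for repo in (inst_a, inst_b, inst_a):   # inst-A harvested twice on purpose
    hub.harvest(repo)

print([r.record_id for r in hub.search("open access")])   # ['a-1', 'b-7']
```

The design choice mirrors the argument: the harvester never accepts deposits itself, so a funder or subject service built on it adds search without asking authors to deposit the same paper a second time.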

NGS: "Inter-institutional repositories by subject area (however broadly defined) simply work better, such as arXiv or even the Princeton-Stanford repository for working papers in the classics."

"Work better" for what? Deposit or search? You are conflating the locus of search (which should, of course, be cross-institutional) with the locus of deposit, which should be institutional, in order to accelerate institutional deposit mandates and in order to prevent discouraging adoption and compliance because of the prospect of having to deposit the same paper in more than one place.

(Yes, automatic import/export/harvesting software is indifferent to whether it is transferring from local IRs to central CRs or from central CRs to local IRs, but the logistics and pragmatics of deposit and deposit mandates -- since the institution is always the source of the content -- make it obvious that one-time deposit institutionally fits all output, systematically and tractably, whereas willy-nilly IR/CR deposit, depending on fields' prior deposit habits or funder preferences is a recipe for many more years of the confusion, inaction, absence of mandates, and near-absence of OA content that we have now.)

NGS: "Currently, universities are paying external middlemen an outsized fee for validation and packaging services. These services can and should be brought "in-house" (at least as an ideal/ goal to develop toward whenever the opportunities can be seized) except in cases where prices align with value, which occurs still with some society and commercial publications."

I completely agree that along with hosting their own peer-reviewed research output, and mandating its deposit in their own IRs, institutions can also use their IRs (along with specially developed software for this purpose) to showcase, manage, monitor, and measure their own research output. That is what OA metrics (local and global) will make possible.

But not till the problem of getting the content into OA IRs is solved. And the solution is institutional and funder mandates -- for institutional (not institution-external) deposit.

NGS: "To the extent that an arXiv or the inter-institutional repository for humanities research which will be showing up in 3-7 years moves toward offering these services, they are clearly preferable to old fashioned subscription models (since the financial support is for actual services) and current local repositories which do not offer everything needed in the value chain (as listed in Van de Sompel et al. 2004)."

(1) The reason 99% of IRs offer no value is that 99% of IRs are at least 85% empty. Only the 1% that are mandated are providing the full institutional OA content -- funded and unfunded, across all disciplines -- that all this depends on.

(2) The central collections, as noted, are indispensable for the services they provide, but that does not include locus of deposit and hosting: There, central deposit is counterproductive, a disservice.

(3) With local hosting of all their research output, plus central harvesting services, institutions can get all they need by way of search and metrics, partly through local statistics, partly from central ones.
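The division of labour argued for here (deposit once, locally; search and aggregate centrally) can be sketched in a few lines. This is a minimal illustration under stated assumptions: every class and function name is hypothetical, and the in-memory `list_records` method merely stands in for a real metadata-harvesting protocol such as OAI-PMH, which it does not implement.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Record:
    """Hypothetical metadata record for one deposited article."""
    identifier: str
    title: str
    subject: str


@dataclass
class InstitutionalRepository:
    """Hypothetical IR: the institution is the single locus of deposit for its own output."""
    institution: str
    records: List[Record] = field(default_factory=list)

    def deposit(self, record: Record) -> None:
        self.records.append(record)

    def list_records(self, subject: Optional[str] = None) -> List[Record]:
        # Stand-in for an exposed metadata feed (in practice, e.g., OAI-PMH).
        return [r for r in self.records if subject is None or r.subject == subject]


def harvest(repositories: List[InstitutionalRepository], subject: str) -> Dict[str, Tuple[str, str]]:
    """Build a central subject collection from IR metadata; nothing is deposited centrally."""
    index: Dict[str, Tuple[str, str]] = {}
    for repo in repositories:
        for rec in repo.list_records(subject):
            # Point back to the hosting institution instead of holding a second copy.
            index[rec.identifier] = (repo.institution, rec.title)
    return index


# Usage with hypothetical institutions and papers.
ir_a = InstitutionalRepository("University A")
ir_b = InstitutionalRepository("University B")
ir_a.deposit(Record("a:1", "A physics paper", "physics"))
ir_a.deposit(Record("a:2", "A humanities paper", "humanities"))
ir_b.deposit(Record("b:1", "Another physics paper", "physics"))

physics_collection = harvest([ir_a, ir_b], "physics")
print(sorted(physics_collection))  # prints ['a:1', 'b:1']
```

Each paper is deposited exactly once, at its source institution; any number of central subject services can then be assembled by harvesting, without asking institutions to deposit institution-externally.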

NGS: " I remember when I first read an article quoting a researcher in an arXiv covered field who essentially said that journals in his field were just for vanity and advancement, since all the "action" was in arXiv (Ober et al. 2007 quoting Manuel 2001 quoting McGinty 1999) -- now think about the value of a repository that doesn't just store content and offer access."

This familiar slogan, often voiced by longstanding arxiv users, that "Journals are obsolete: They're only for tenure committees. We [researchers] only use the arxiv" is as false, empirically, as it is incoherent, logically: It is just another instance of the "Simon Says" phenomenon: (Pay attention to what Simon actually does, not to what he says.)

Although it is perfectly true that most arxiv users don't bother to consult journals any more -- using the OA version in arxiv only, and referring to the journal's canonical version-of-record only in citing -- it is equally (and far more relevantly) true that they all continue to submit all those papers to peer-reviewed journals, and to revise them according to the feedback from the referees, until they are accepted and published.

That is precisely the same thing that all other researchers are doing, including the vast majority that do not self-archive their peer-reviewed postprints (or, even more rarely, their unrefereed preprints) at all.

So journals are not just for vanity and advancement; they are for peer review. And arxiv users are just as dependent on that as all other researchers. (No one has ever done the experiment of trying to base all research usage on nothing but unrefereed preprints and spontaneous user feedback.)

So the only thing that is true in what "Simon says" is that when all papers are available, OA, as peer-reviewed final drafts (and sometimes also supplemented earlier by the prerefereeing drafts) there is no longer any need for users or authors to consult the journal's proprietary version of record. (They can just cite it, sight unseen.)

But what follows from that is that journals will eventually have to scale down to becoming just peer-review service-providers and certifiers (rather than continuing also to be access-providers or document producers, either on-paper or online).

Nothing follows from that about the value of repositories, except that they are useless if they do not contain the target content (at least after peer review, and, where possible and desired by authors, also before peer review).

Harnad, S. (1998/2000/2004) The invisible hand of peer review. Nature [online] (5 Nov. 1998); Exploit Interactive 5 (2000); and in Shatz, D. (2004) (ed.) Peer Review: A Critical Inquiry. Rowman & Littlefield. Pp. 235-242.

NGS: "Do I think the financial backing will remain in place? It depends on the services actually offered and to what extent subject repositories could replace a patchwork system of single titles offered by a patchwork of publishers."

At the moment the issue is whether arxiv, such as it is (a central locus for institution-external deposit of institutional research content in some fields, mostly physics, plus a search and alerting service), can be sustained by voluntary sub-sidy/scription -- not whether, if arxiv also somehow "took over" the function of journals (peer review), that too could be paid for by voluntary sub-sidy/scription...

NGS: "Universities could save a great deal by refusing to pay the same overhead over and over again to maintain complete collections in single subject areas (not to mention paying for other people's profits)."

I can't quite follow this: You mean universities can cancel journal subscriptions? How do those universities' users then get access to those cancelled journals' contents, unless they are all being systematically made OA? Apart from those areas of physics where it has already been happening since 1991, that isn't going to happen in most other fields till OA is mandated by the universal providers of that content, the universities (reinforced by mandates from their funders).

Then (but only then) can universities cancel their journal subscriptions and use (part of) their windfall saving to pay (journals!) for the peer-review of their own research output, article by article (instead of buying in other universities' output, journal by journal).

NGS: "More importantly, more could be done to make articles useful and discoverable in a collaborative environment, from metadata to preservation, so that the value chain is extended and improved (my sci-fi includes semantic docs, not just cataloged texts, and improved, or multi-stage, peer review, or peer review on top of a working papers repository)."

All fine, and desirable -- but not until all the OA content is being provided, and (outside of physics), it isn't being provided -- except when mandated...

So let's not build castles in Spain before we have their contents safely in hand.

NGS: "I think there's been plenty of 'chatter' to indicate that the basic assumptions in conversations between universities are changing (see recent conference agendas), so that we can expect to see more and more practical plans to collaborate on metadata, preservation, and, yes, publications."

I'll believe the "chatter" when it has been cashed into action (deposit mandates). Till then it's just distraction and time-wasting.

NGS: "My head spins to think of the amount of money to be saved on the development of more shared platforms, although, the money will only be saved if other expenditures are slowly turned off."

All this talk about money, while the target content -- which could be provided at no cost -- is still not being provided (or mandated)...

NGS: "Sandy mentioned in another post that she [he] would hope for arXiv like support for university monographs..."

Monographs (not even a clearcut case, like peer-reviewed articles, which are all, already, author give-aways, written only for usage and impact) are moot, while not even peer-reviewed articles are being deposited, or mandated...

NGS: "Open access and NFP publications which do offer the full value chain have been proven to have much lower production costs per page than FP publishers and they do not suffer any impact disadvantages -- and these are still operated on a largely stand-alone basis, without the advantages that can be gained by sharing overhead."

Cash castles in Spain again, while the free content is not yet being provided or mandated...

NGS: "Maybe local repositories really are the way to go, since then each institution has more control over its own contribution, but the collaboration and the support will still need to occur to support discovery (implying metadata, both in production and development of standards and tools) and preservation."

No, search and preservation are not the problem: content is.

NGS: "I suppose another problem with local repositories, however, is that a consensus is far less likely to unite around local repositories as a practical option at this juncture -- the case can't just be made with words, you need the numbers and arXiv has them -- and while I am interested to see strong local repositories emerge, there is greater sense in supporting what can be achieved, since we need more steps in the right direction."

"The numbers" say the following:

Physicists have been depositing their preprints and postprints spontaneously (unmandated) in arxiv since 1991, but in the ensuing 20 years this commendable practice has not been taken up by other disciplines. The numbers, in other words, are static, and stagnant. The only cases in which they have grown are those where deposit was mandated (by institutions and funders).

And for that, it no longer makes sense (indeed it goes contrary to sense) to deposit them institutional-externally, instead of mandating institutional deposit and then harvesting centrally.

And the virtue of that is that it distributes the costs of managing deposits sustainably, by offloading them onto each institution, for its own output, instead of depending on voluntary institutional sub-sidy/scription for obsolete and unnecessary central deposit.

(See also the "denominator fallacy," which arises when you compare the size of central repositories with the size of institutional repositories: The world's 25,000 peer-reviewed journals publish about 2.5 million articles annually, across all fields. A repository's success rate is the proportion of its annual target contents that are being deposited annually. For an institution, the denominator is its own total annual peer-reviewed journal article output across all fields. For a central repository, it is the total annual article output -- in the field(s) it covers -- from all the institutions in the world. Of course the central repository's numerator is greater than any single institutional repository's numerator. But its denominator is far greater still. Arxiv has famously been doing extremely well for certain areas of physics, unmandated, for two decades. But in other areas arxiv is not doing so well, relative to the field's true denominator; and most other central repositories are likewise not doing well. In fact, it is pretty certain that -- apart from physics, with its 2-decade tradition of deposit, plus a few other fields such as economics (preprints) and computer science -- unmandated central repositories are doing exactly as badly as unmandated institutional repositories are doing, namely, about 15%.)
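The arithmetic of the denominator fallacy can be made concrete with a short sketch. The figures below are hypothetical, chosen only to show that a much larger numerator can coexist with an identical success rate; they are not measurements of any real repository, and the `Repository` class is an illustrative name, not an existing tool.

```python
from dataclasses import dataclass


@dataclass
class Repository:
    """Hypothetical repository described only by its annual deposit numbers."""
    name: str
    deposited_per_year: int  # numerator: target articles actually deposited annually
    target_per_year: int     # denominator: annual peer-reviewed output it should contain

    def success_rate(self) -> float:
        """Fraction of the repository's annual target content that is being deposited."""
        return self.deposited_per_year / self.target_per_year


# Hypothetical figures, for illustration only.
institutional = Repository("institutional IR", deposited_per_year=300, target_per_year=2_000)
central = Repository("central subject repository", deposited_per_year=30_000, target_per_year=200_000)

for repo in (institutional, central):
    # Both lines report 15%, despite a hundredfold difference in raw deposits.
    print(f"{repo.name}: {repo.deposited_per_year} of {repo.target_per_year} "
          f"deposited ({repo.success_rate():.0%})")
```

Comparing only the raw deposit counts (300 vs. 30,000) would make the central repository look a hundred times more successful; dividing by each repository's own target output shows the two are performing identically.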

Stevan Harnad

American Scientist Open Access Forum

Wednesday, December 9. 2009

Comments on Raym Crow's (2002) SPARC position paper on institutional repositories

Comments on 2002 SPARC Position Paper on Institutional Repositories by Raym Crow

(Note: These comments were originally posted on Sun Aug 04 2002)

The SPARC position paper, "The Case for Institutional Repositories," by Raym Crow (2002), is timely and will serve a useful purpose in mapping out for universities exactly why it is in their best interests to self-archive their research output, and how they should go about doing so.

I will only comment on a few passages, having mostly to do with the topic of "certification" (peer review) in which SPARC's message may have become a little garbled along the same lines that like-minded precursor initiatives (notably E-biomed and Scholar's Forum) have likewise been a little garbled:

E-biomed: A Proposal for Electronic Publications in the Biomedical Sciences

Scholars' Forum: A New Model For Scholarly Communication

To overview the point in question very briefly:

To provide open access (i.e., free, online, full-text access) to the research output of universities and research institutions worldwide -- output that is currently accessible only by paying access-tolls to the 24,000 peer-reviewed journals in which their 2.5 million annual research papers are published -- does not call for or depend upon any changes at all in the peer review system. On the contrary, it would be a profound strategic (and factual) mistake to give the research community the incorrect impression that there is or ought to be any sort of link at all between providing open access to their own research literature by self-archiving it and any modification whatsoever in the peer review system that currently controls and certifies the quality of that research.

The question of peer-review modification has absolutely nothing to do with the institutional repositories and self-archiving that the SPARC paper is advocating. The only thing that authors and institutions need to be clearly and explicitly reassured about (because it is true) is that self-archiving in institutional Eprints Archives will preserve intact that very same peer-reviewed literature (2.5 million peer-reviewed papers annually, in 24,000 peer-reviewed journals) to which it is designed to provide open access.

Hence, apart from providing these reassurances, it is best to leave the certification/peer-review issue alone!

Here is where this potentially misleading and counterproductive topic is first introduced in the SPARC paper's section on "certification":

RC: "CERTIFICATION: Most of the institutional repository initiatives currently being developed rely on user (including author) communities to control the input of content. These can include academic departments, research centers and labs, administrative groups, and other sub-groups. Faculty and others determine what content merits inclusion and act as arbiters for their own research communities. Any certification at the initial repository submission stage thus comes from the sponsoring community within the institution, and the rigor of qualitative review and certification will vary."

There is a deep potential ambiguity here. The SPARC paper might merely be referring here to how much, and how, institutions might decide to self-vet their own research output when it is still in the form of pre-peer-review preprints, and that would be fine:

"1.5. Distinguish unrefereed preprints from refereed postprints"

But this institutional self-vetting of whatever of its own pre-refereeing research output a university decides to make public online should on no account be described as "qualitative review and certification"! That would instead be peer review, and peer review is the province of the qualified expert referees (most of them affiliated with other institutions, not the author's institution) who are called upon formally by the editors of independent peer-reviewed journals to referee the submissions to those journals; this quality-review is not the province of the institution that is submitting the research. Self-archiving is not self-publishing, and peer-review cannot be self-administered:

"1.4. Distinguish self-publishing (vanity press) from self-archiving (of published, refereed research)"

It merely invites confusion to equate whatever preliminary self-vetting an institution may elect to do on the contents of the unrefereed preprint sector of its Eprint Archives with what journals do when they implement peer review.

Worse, it might invite the conflation of self-archiving with self-publishing, if what the SPARC paper has in mind here is not just the unrefereed preprint sector of the institutional repository, but what would be its refereed postprint sector, consisting of those papers that are certified as having met a specific journal's established quality standards after classical peer review has taken its standard course:

"What is an Eprint Archive?"

"What is an Eprint?"

"What should be self-archived?"

"What is the purpose of self-archiving?"

"Is self-archiving publication?"

It is extremely important to clearly differentiate an institution's self-vetting of the unrefereed sector of its archive from the external quality control and certification provided by refereed journals that subsequently yields the refereed sector of its archive. Nothing is gained by conflating the two:

"Peer-review reform: Why bother with peer review?"

RC: "In some instances, the certification will be implicit and associative, deriving from the reputation of the author's host department. In others, it might involve more active review and vetting of the research by the author's departmental peers. While more formal than an associative certification, this certification would typically be less compelling than rigorous external peer review. Still, in addition to the primary level certification, this process helps ensure the relevance of the repository's content for the institution's authors and provides a peer-driven process that encourages faculty participation."

These are all reasonable possibilities for the preliminary self-selection and self-vetting of an institution's unrefereed preprints. But implying that they amount to anything more than that -- by using the term "peer" for both this internal self-vetting and external peer review, and suggesting that there is some sort of continuum of "compellingness" between the two -- is not helpful or clarifying but instead leads to (quite understandable) confusion and resistance on the part of researchers and their institutions:

For, having read the above, the potential user who previously knew the refereed journal literature -- consisting of 24,000 peer-reviewed journals, 2.5 million refereed articles per year, each clearly certified with each journal's quality-control label, and backed by its established reputation and impact -- now no longer has a clear idea what literature we might be talking about here! Are we talking about providing open access to that same refereed literature, or are we talking about substituting some home-grown, home-brew in its place?

Yet there is no need at all for this confusion: As correctly noted in the SPARC paper, University Eprint Archives ("Institutional Repositories") can have a variety of contents, but prominent among them will be the university's own research output (self-archived for the sake of the visibility, usage, impact, and their resulting individual and institutional rewards, as well described elsewhere in the SPARC paper). That institutional research output has, roughly, two embryonic stages: pre-peer-review (unrefereed) preprints and post-peer-review (refereed) postprints.

Now the pre-peer-review preprint sector of the archive may well require some internal self-vetting (this is up to the institution), but the post-peer-review postprint sector certainly does not, for the "vetting" there has been done -- as it always has been -- by the external referees and editors of the journals to which those papers were submitted as preprints, and by which they were accepted for publication (possibly only after several rounds of substantive revision and re-refereeing) once the refereeing process had transformed them into the postprints.

Nor is the internal self-vetting of the preprint sector any sort of substitute for the external peer review that dynamically transforms the preprints into refereed, journal-certified postprints.

In the above-quoted passage, the functions of the internal preprint self-vetting and the external postprint refereeing/certification are completely conflated -- and conflated, unfortunately, under what appears like an institutional vanity-press penumbra, a taint that the self-archiving initiative certainly does not need, if it is to encourage the opening of access to its existing quality-controlled, certified research literature, such as it is, rather than to some untested substitute for it.

RC: "It should be noted that to serve the primary registration and certification functions, a repository must have some official or formal standing within the institution. Informal, grassroots projects - however well-intentioned - would not serve this function until they receive official sanction."

Universities should certainly establish whatever internal standards they see fit for pre-filtering their pre-refereeing research before making it public. But the real filtration continues to be what it always was, namely, classical peer review, implemented and certified as it always was. This needs to be made crystal clear!

RC: "OVERLAY JOURNALS: Third-party online journals that point to articles and research hosted by one or more repositories provide another mechanism for peer review certification in a disaggregated model."

Unfortunately, the current user of the existing, toll-access refereed-journal literature is becoming more and more confused about just what is actually being contemplated here! Does institutional self-archiving mean that papers lose the quality-control and certification of peer-reviewed journals and have it replaced by something else? By what? And what is the evidence that we would then still have the same literature we are talking about here? Does institutional self-archiving mean giving up the established forms of quality control and certification and replacing them by untested alternatives?

There also seems to be some confusion between the more neutral concept of (1) "overlay journals" (OJs) (e.g., Arthur Smith), which merely use Eprint Archives for input (the online submission/refereeing of author self-archived preprints) and output (the official certification of author self-archived postprints as having been peer-reviewed, accepted and "published" by the OJ in question), but leave the classical peer review system intact; and the vaguer and more controversial notion of (2) "deconstructed journals" (DJs) on the "disaggregated model" (e.g., John W.T. Smith), in which (as far as I can ascertain) what is being contemplated is the self-archiving of preprints and their subsequent "submission" to one or many evaluating/certifying entities (some of which may be OJs, others some other unspecified kind of certifier) who give the papers their respective "stamps of approval."

"Re: Alternative publishing models - was: Scholar's Forum: A New Model..."

JWT Smith has made some testable empirical conjectures, which could eventually be tested in a future programme of empirical research on alternative research quality review and certification systems. But they certainly do not represent an already tested and already validated ("certified"?) alternative system, ready for implementation in place of the 2.5 million annual research articles that currently appear in the 24,000 established refereed journals!

As such, untested speculations of this kind are perhaps a little out of place in the context of a position paper that is recommending concrete (and already tested) practical steps to be taken by universities in order to maximize the visibility, accessibility and impact of their research output (and perhaps eventually to relieve their library serials budgetary burden too).

Author/institution self-archiving of research output -- both preprints and postprints -- is a tested and proven supplement to the classical journal peer review and publication system, but by no means a substitute for it. Self-archiving in Open Access Eprint Archives has now been going on for over a decade, and both its viability and its capacity to increase research visibility and impact have been empirically demonstrated.

Substitutes for the existing journal peer review and publication system, in contrast, require serious and systematic prior testing in their own right; there is nothing anywhere near ready there for practical recommendations other than the feasibility of Overlay Journals (OJs) as a means of increasing the efficiency and speed and lowering the cost of classical peer review. Almost no testing of any other model has been done yet; there are no generalizable findings available, and there are many prima facie problems with some of the proposed models (including JWT Smith's "disaggregated" model, [DJs]) that have not even been addressed:

See the discussion (and some of the prima facie problems) of JWT Smith's model under:

"Alternative publishing models - was: Scholar's Forum: A New Model..."

"Journals are Quality Certification Brand-Names"

"Central vs. Distributed Archives"

"The True Cost of the Essentials (Implementing Peer Review)"

"Workshop on Open Archives Initiative in Europe"

In contrast, there has been a recent announcement that the Journal of Nonlinear Mathematical Physics will become openly accessible as an "overlay journal" (OJ) on the Physics Archive.

This is certainly a welcome development -- but note that JNMP is a classically peer-reviewed journal, and hence the "overlay" is not a substitute for classical peer review: It merely increases the visibility, accessibility and impact of the certified, peer-reviewed postprints while at the same time providing a faster, more efficient and economical way of processing submissions and implementing [classical] peer review online.

Indeed, Overlay Journals (OJs) are very much like the Open-Access Journals that are the target of Budapest Open Access Strategy 2.

Deconstructed/Disaggregated Journals (DJs), in contrast, are a much vaguer, more ambiguous, and more problematic concept, nowhere near ready for recommendation in a SPARC position paper.

RC: "While some of the content for overlay journals might have been previously published in refereed journals, other research may have only existed as a pre-print or work-in-progress."

This is unfortunately beginning to conflate the notion of the "overlay" journal (OJ) with some of the more speculative hypothetical features of the "deconstructed" or "disaggregated" journal (DJ):

The (informal) notion of an overlay journal is quite simple: If researchers are self-archiving their preprints and postprints in Eprint Archives anyway, there is, apart from any remaining demand for paper editions, no reason for a journal to put out its own separate edition at all: Instead, the preprint can first be deposited in the preprint sector of an Eprint Archive. The journal can be notified by the author that the deposit is intended as a formal submission. The referees can review the archived preprint. The author can revise it according to the editor's disposition letter and the referee reports. The revised draft can again be deposited and re-refereed as a revised preprint. Once a final draft is accepted, that then becomes tagged as the journal-certified (refereed) postprint.

End of story. That is an "overlay" journal (OJ), with the postprint permanently "certified" by the journal-name as having met that journal's established quality standards. The peer review is classical, as always; the only thing that has changed is the medium of implementation of the peer review and the medium of publication (both changes being in the direction of greater efficiency, functionality, speed, and economy).
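The overlay workflow just described amounts to a small state machine in which only the medium changes while the peer review stays classical. What follows is a minimal sketch assuming hypothetical names throughout (`ArchivedPaper`, `Status`, the decision strings); it models the sequence of steps described above and is not the software of any actual archive or journal. Rejection is deliberately left out, since the description above does not cover it.

```python
from enum import Enum, auto
from typing import Optional


class Status(Enum):
    PREPRINT = auto()            # deposited in the archive's unrefereed sector
    UNDER_REVIEW = auto()        # author has notified the journal that the deposit is a formal submission
    REVISION_REQUESTED = auto()  # disposition letter and referee reports call for changes
    ACCEPTED = auto()            # final draft tagged as the journal-certified postprint


class ArchivedPaper:
    """Hypothetical model of one paper moving through an overlay journal's classical peer review."""

    def __init__(self, paper_id: str) -> None:
        self.paper_id = paper_id
        self.status = Status.PREPRINT
        self.version = 1
        self.journal: Optional[str] = None
        self.certified_by: Optional[str] = None

    def submit_to(self, journal: str) -> None:
        if self.status is not Status.PREPRINT:
            raise ValueError("only an unsubmitted preprint can be submitted")
        self.journal = journal
        self.status = Status.UNDER_REVIEW

    def record_decision(self, decision: str) -> None:
        if self.status is not Status.UNDER_REVIEW:
            raise ValueError("no submission is under review")
        if decision == "revise":
            self.status = Status.REVISION_REQUESTED
        elif decision == "accept":
            self.status = Status.ACCEPTED
            self.certified_by = self.journal  # the journal name is the quality-control label
        else:
            raise ValueError(f"unknown decision: {decision!r}")

    def deposit_revision(self) -> None:
        if self.status is not Status.REVISION_REQUESTED:
            raise ValueError("no revision was requested")
        self.version += 1
        self.status = Status.UNDER_REVIEW  # the revised preprint is re-refereed


# Usage: one round of revision, then acceptance.
paper = ArchivedPaper("hypothetical-0001")
paper.submit_to("Hypothetical Overlay Journal")
paper.record_decision("revise")
paper.deposit_revision()
paper.record_decision("accept")
print(paper.status.name, paper.version, paper.certified_by)  # prints ACCEPTED 2 Hypothetical Overlay Journal
```

Note that nothing in the sketch grades a raw preprint: the only certification is the journal name attached after the refereeing loop has turned the preprint into the accepted postprint.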

A deconstructed/disaggregated journal (DJ) is an entirely different matter. As far as I can ascertain, what is being contemplated there is something like an approval system plus the possibility that the same paper is approved by a number of different "journals." The underlying assumptions are questionable:

(1) Peer review is neither a static red-light/green-light process nor a grading system, singular or multiple: The preprint does not receive one or a series of "tags." Peer review is a dynamic process of mediated interactions between an author and expert referees, answerable to an expert editor who selects the referees for their expertise and who determines what has to be done to meet the journal's quality standards -- a process during which the content of the preprint undergoes substantive revision, sometimes several rounds of it. The "grading" function comes only after the preprint has been transformed by peer review into the postprint, and consists of the journal's own ranking in the established (and known) hierarchy of journal quality levels (often also associated with the journal's citation impact factor).

It is not at all clear whether and how having raw preprints certified as approved -- singly or many times over -- by a variety of "deconstructed journals" (DJs) can yield a navigable, sign-posted literature of the known quality and quality-standards that we have currently. (And to instead interactively transform them into postprints is simply to reinvent peer review.)

(2) Even more important: Referees are a scarce resource. Referees sacrifice their precious research time to perform this peer-reviewing duty for free, normally at the specific request of the known editor of a journal of known quality, and with the knowledge that the author will be answerable to the editor. The result of this process is the navigable, quality-controlled refereed research literature we have now, with the quality-grade certified by the journal label and its established reputation.

It is not at all clear (and there are many prima facie reasons to doubt) that referees would give of their time and expertise to a "disaggregated" system to provide grades and comments on raw preprints that might or might not be graded and commented upon by other (self-selected? appointed?) referees as well, and might or might not be responsive to their recommendations. Nor is it clear that a disaggregated system would continue to yield a literature that was of any use to other users either.

Classical peer review already exists, and works, and it is the fruits of that classical peer review that we are talking about making openly accessible through self-archiving, nothing more (or less)! Journals (more specifically, their editorial boards and referees) are the current implementers of peer review. They have the experience, and their quality-control "labels" (the journal-names) have the established reputations (and citation impact factors) on which such "metadata" tags depend for their informational value in guiding users. There is no need either to abandon journals or to re-invent them under another name ("DJ").

A peer-reviewed journal, medium-independently, is merely a peer-review service provider and certifier. That is what they are, and that is what they will continue to be. Titles, editorial boards and their referees may migrate, to be sure. They have done so in the past, between different toll-access publishers; they could do so now too, if/when necessary, from toll-access to open-access publishers. But none of this involves any change in the peer review system; hence there should be no implication that it does.

(JWT Smith also contemplates paying referees for their services, another significant and untested departure from classical peer review, with the potential for bias and abuse -- if only there were enough money available to make it worth referees' while, which there is not! At realistic rates, offering to pay a referee for stealing his research time to review a paper would risk adding insult to injury.)

So there is every reason to encourage institutions to self-archive their research output, such as it is, before and after peer review. But there is no reason at all to link this with speculative scenarios about new publication and/or peer review systems, which could well put the very literature we are trying to make more usable and used at risk of ceasing to be useful or usable to anyone.

The message to researchers and their institutions should be very clear:

The self-archiving of your research output, before (preprints) and after (postprints) peer-reviewed publication will maximize its visibility, usage, and impact, with all the resulting benefits to you and your institution. Self-archiving is merely a supplement to the existing system, an extra thing that you and your institution can do, in order to enjoy these benefits. You need give up nothing, and nothing else need change.

In addition, one possible consequence, if enough researchers and their institutions self-archive enough research long enough, is that your institutional libraries might begin to enjoy some savings on their serials expenditures, because of subscription cancellations. This outcome is not guaranteed, but it is a possible further benefit, and might in turn lead to further restructuring of the journal publication system under the cancellation pressure -- probably in the direction of cutting costs and downsizing to the essentials, which will probably reduce to just providing peer review alone. The true cost of that added value, per paper, will in turn be much lower than the total cost now, and it will make most sense to pay for it out of the university's annual windfall subscription savings as a service, per outgoing paper, rather than as a product, per incoming paper, as in toll-access days. This outcome too would be very much in line with the practice of institutional self-archiving of outgoing research that is being advocated by the SPARC position paper.

The foregoing paragraph, however, only describes a hypothetical possibility, and need not and should not be counted as among the sure benefits of author/institution self-archiving -- which are, to repeat: maximized visibility, usage, and impact for institutional research output, resulting from maximized accessibility.

RC: "As a paper could appear in more than one journal and be evaluated by more than one refereeing body, these overlays would allow the aggregation and combination of research articles by multiple logical approaches - for example, on a particular theme or topic (becoming the functional equivalent of anthology volumes in the humanities and social sciences); across disciplines; or by affiliation (faculty departmental bulletins that aggregate the research of their members)."

Here the speculative notion of substituting "disaggregated journals" (DJs) for classical peer review is being conflated with the completely orthogonal matter of collections and alerting: An open-access online research literature can certainly be linked and bundled and recombined in a variety of very useful ways, but this has nothing whatsoever to do with the way its quality is arrived at and certified as such. Until an alternative has been found, tested and proven to yield at least comparable sign-posted quality, the classical peer review system is the only game in town. Let us not delay the liberation of its fruits from access-barriers still longer by raising the spectre of freeing them not only from the access-tolls but also from the self-same peer review system that (until further notice) generated and certified their quality!

"Rethinking "Collections" and Selection in the PostGutenberg Age"

RC: "Such journals exist today - for example, the Annals of Mathematics overlay to arXiv and Perspectives in Electronic Publishing, to name just two - and they will proliferate as the volume of distributed open access content increases."

The Annals of Mathematics is an "overlay" journal (OJ) of the kind I described above, using classical peer review. It is not an example of the "disaggregated" quality control system (DJ).

Perspectives in Electronic Publishing, in contrast, is merely a collection of links to already published work.

It does not represent any sort of alternative to classical peer review and journal publication.

RC: "Besides overlay journals pointing to distributed content, high-value information portals - centered around large, sophisticated data sets specific to a particular research community - will spawn new types of digital overlay publications based on the shared data."

Journals that are overlays to institutional research repositories are merely certifying that papers bearing their tag have undergone their peer review and have met their established quality standards. This has nothing to do with alternative forms of quality control, disaggregated or otherwise.

Post hoc collections (link-portals) have nothing to do with quality control either, although they will certainly be valuable for other purposes.

RC: "Regardless of journal type, the basis for assessing the quality of the certification that overlay journals provide differs little from the current journal system: eminent editors, qualified reviewers, rigorous standards, and demonstrated quality."

Not only does it not differ: Overlay Journals (OJs) will provide identical quality and standards -- as long as "overlay" simply means having the implementation of peer review (and the certification of its outcome) piggy-back on the institutional archives, as it should.

Alternative forms of quality control (e.g., DJs), on the other hand, will first have to demonstrate that they work.

And neither of these is to be confused with the post-hoc function of aggregating online content, peer-reviewed or otherwise.

This should all be made crystal clear in the SPARC paper, partly by stating it in a clear, straightforward way, and partly by omitting the speculative options that only cloud the picture needlessly (and have nothing to do with institutional self-archiving and its rationale [open access], but simply risk confusing and discouraging would-be self-archivers and their institutions).

RC: "In addition to these analogues to the current journal certification system, a disaggregated model also enables new types of certification models. Roosendaal and Geurts have noted the implications of internal and external certification systems."

Please, let us distinguish the two by calling "internal certification" pre-certification (or "self-certification") so as not to confuse it with peer review, which is by definition external (except in that happy but rare case where an institution happens to house enough of the world's qualified experts on a given piece of research not to have to consult any outside experts).

A good deal of useful pre-filtering can be done by institutions on their own research output, especially if the institution is large enough. (CERN has a very rigorous internal review system that all outgoing research must undergo before it is submitted to a journal for peer review.)

But, on balance, "internal certification" rightly raises the spectre of vanity press publication. Nor is it a coincidence that when universities assess their own researchers for promotion and tenure, they tend to rely on the external certification provided by peer reviewed journals (weighted sometimes by their impact factors) rather than just internal review. The same is true of the external assessors of university research output.

So, please, let us not link the very desirable and face-valid goal of maximizing universities' research visibility and research impact through open access provided by institutional self-archiving with the much more dubious matter of institutional self-certification.

RC: "Certification may pertain at the level of internal, methodological considerations, pertinent to the research itself - the standard basis for most scholarly peer review. Alternatively, the work may be gauged or certified by criteria external to the research itself - for example, by its economic implications or practical applicability. Such internal and external certification systems would typically operate in different contexts and apply different criteria. In a disaggregated model, these multiple certification levels can co-exist."

This is all rather vague, and somewhat amateurish, and would (in my opinion) have been better left out of this otherwise clear and focussed call for institutional self-archiving of research output.

And the idea of expecting referees to spend their precious time refereeing already-refereed and already-certified (i.e., already-published) papers yet again is unrealistic in the extreme, especially considering the growing number of papers, the scarcity of qualified expert referees (who are otherwise busy doing the research itself), and the existing backlogs and delays in refereeing and publication.

Besides, as indicated already, refereeing is not passive tagging or grading: It is a dynamic, interactive, and answerable process in which the preprint is transformed into the accepted postprint, and certified as such. Are we to imagine each of these papers being re-written every time they are submitted to yet another DJ?

There is a lot to be said for postpublication revision and updating of the postprints ("post-postprints") in response to postpublication commentary (or to correct substantive errors that come to light later), but it only invites confusion to call that "disaggregated journal publication." The refereed, journal-certified postprint should remain the critical, canonical, scholarly and archival milestone that it is, perpetually marking the fact that that draft successfully met that journal's established quality standards. Further iterations of this refereeing/certification process make no sense (apart from being profligate with scarce resources) and should in any case be tested for feasibility and outcome before being recommended!

RC: "To support both new and existing certification mechanisms, quality certification metadata could be standardized to allow OAI-compliant harvesting of that information. This would allow a reader to determine whether there is any certification information about an article, regardless of where the article originated or where it is discovered."

Might I venture to put this much more simply (and restrict it to the refereed research literature, which is my only focus)? By far the most relevant and informative "metadatum" certifying the information in a research paper is the JOURNAL-NAME of the journal in which it was published (signalling, as it does, the journal's established reputation, quality level, and impact factor)! (Yes, the AUTHOR-NAME and AUTHOR-INSTITUTION metadata-tags may sometimes be useful too, but those cases do not, as they say, "scale" -- otherwise self-certification would have replaced peer review long ago. COMMENT-tags would be welcome too, but caveat emptor.)

"Peer Review, Peer Commentary, and Eprint Archive Policy"

Please let us not lose sight of the fact that the main purpose of author/institution self-archiving in institutional Eprint Archives is to maximize the visibility, uptake and impact of research output by maximizing its accessibility (by providing open access). It is not intended as an experimental implementation of speculations about untested new forms of quality control! That would be to put this all-important literature needlessly at risk (and would simply discourage researchers and their institutions from self-archiving it at all).

There is a huge amount of further guiding information that can be derived from the literature to help inform navigation, search and usage. A lot of it will be digitometric analysis based on usage measures such as citations, hits, and commentary.

But none of these digitometrics should be mistaken for certification, which, until further notice, is a systematic form of expert human interaction and judgement called peer review.

Harnad, S. & Carr, L. (2000) Integrating, Navigating and Analyzing Eprint Archives Through Open Citation Linking (the OpCit Project). Current Science 79(5): 629-638.

RC: "Depending on the goals established by each institution, an institutional repository could contain any work product generated by the institution's students, faculty, non-faculty researchers, and staff. This material might include student electronic portfolios, classroom teaching materials, the institution's annual reports, video recordings, computer programs, data sets, photographs, and art works - virtually any digital material that the institution wishes to preserve. However, given SPARC's focus on scholarly communication and on changing the structure of the scholarly publishing model, we will define institutional repositories here - whatever else they might contain - as collecting, preserving, and disseminating scholarly content. This content may include pre-prints and other works-in-progress, peer-reviewed articles, monographs, enduring teaching materials, data sets and other ancillary research material, conference papers, electronic theses and dissertations, and gray literature."

This passage is fine, and refocusses on the items of real value in the SPARC position paper.

RC: "To control and manage the accession of this content requires appropriate policies and mechanisms, including content management and document version control systems. The repository policy framework and technical infrastructure must provide institutional managers the flexibility to control who can contribute, approve, access, and update the digital content coming from a variety of institutional communities and interest groups (including academic departments, libraries, research centers and labs, and individual authors). Several of the institutional repository infrastructure systems currently being developed have the technical capacity to embargo or sequester access to submissions until the content has been approved by a designated reviewer. The nature and extent of this review will reflect the policies and needs of each individual institution, possibly of each participating institutional community. As noted above, sometimes this review will simply validate the author's institutional affiliation and/or authorization to post materials in the repository; in other instances, the review will be more qualitative and extensive, serving as a primary certification."

This is all fine, as long as it is specified that what is at issue is institutional pre-certification or self-certification of its unrefereed research (preprints).

For peer-reviewed research, the only institutional authentication required is at most that the AUTHOR-NAME and JOURNAL-NAME are indeed as advertised! (The integrity of the full text could be vetted too, but I'm inclined to suggest that that would be a waste of time and resources at this point.) What is needed right now is that institutions should create and fill their own Eprint Archives with their research output, pre- and post-refereeing, immediately. The "definitive" text, until journals really all become "overlay" journals, is currently in the hands of the publishers and subscribing libraries. For the time being, let authors "self-certify" their refereed, published texts as being what they say they are; let's leave worrying about more rigorous authentication for later. For now, the goal should be to self-archive as much research output as possible, as soon as possible, with minimal fuss. The future will take care of itself.

RC: "Institutional repository policies, practices, and expectations must also accommodate the differences in publishing practices between academic disciplines. The early adopter disciplines that developed discipline-specific digital servers were those with an established pre-publication tradition. Obviously, a discipline's existing peer-to-peer communication patterns and research practices need to be considered when developing institutional repository content policies and faculty outreach programs. Scholars in disciplines with no prepublication tradition will have to be persuaded to provide a prepublication version; they might fear plagiarism or anticipate copyright or other acceptance problems in the event they were to submit the work for formal publication. They might also fear the potential for criticism of work not yet benefiting from peer review and editing. For these non-preprint disciplines, a focus on capturing faculty post-publication contributions may prove a more practical initial strategy."

Agreed. And here are some prima facie FAQs for allaying each of these by now familiar prima facie fears:

Authentication

Corruption

Certification

Evaluation

Peer Review

Copyright