Thursday, October 23. 2014

The Access Gap at the Research Growth Tip

The EC-commissioned Science-Metrix study has a lot of interesting and useful information that I hope the EC will apply and use.

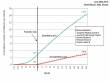

Access Timing. The fundamental problem highlighted by the Science-Metrix findings is timing: Over 50% of all articles published 2007-2012 are freely available today. But the trouble is that their percentage in the most critical years, namely, the 1-2 years following publication, is far lower than that! This is partly because of publisher OA embargoes, partly because of author fears and sluggishness, but mostly because not enough strong, effective OA mandates have as yet been adopted by institutions and funders. I hope the Science-Metrix study will serve to motivate and accelerate the adoption of strong, effective OA mandates worldwide. That will narrow the gap at the all-important growth tip of research, which is its first 1-2 years.

A few things to bear in mind:

1. Delayed Access. Publishers have essentially resigned themselves to Delayed Access, i.e., free online access 1-2 years after publication. They know they can’t stop it, and they know it doesn’t have a significant effect on subscription revenues. Hence the real battleground for OA is the growth region of research: the 1-2 years following publication. That’s why OA mandates are so important.

2. Embargoes. Most OA mandates allow an OA embargo during the first year following publication. But there are ways that immediate research needs can be fulfilled even during an OA embargo, namely, via institutional repositories’ semi-automatic copy-request Button. For this Button to fulfill its purpose, however, OA mandates must require deposit immediately upon acceptance for publication, not just after a 6-12-month OA embargo has elapsed. There are still too few such immediate-deposit mandates, but the Science-Metrix study will have missed the "almost-OA" access that they do provide unless it also measured Button-based copy-provision.

3. Green OA, Gold OA and Non-OA. It is incorrect to say that "Green OA" means only repository-based OA. Of course OA (free online access) provided on authors' websites is Green OA too. The best way to define Green OA is OA provided by other than the publisher: Gold OA is provided by the publisher (though often paid for by the author or the author's institution or funder). Green OA is provided by the author, wherever the author provides the free online access. (And, although it is not the kind of OA advocated or mandated by institutions and funders, 3rd-party "bootleg" OA, apart from being hard to ascertain, is also Green OA: it certainly doesn't merit a color of its own -- and probably a lot of the back access is 3rd-party-provided rather than author-provided.) So the Science-Metrix data would be more informative and easier to interpret if they were all clearly classified as either Green OA, Gold OA, or non-OA. That would give a clearer idea of the relative size and growth rate of the two roads to OA.

4. The OA Impact Advantage. I am sure that Gold OA would show the same OA impact advantage as Green OA if it were equally possible to measure it. The trouble is determining the non-OA baseline for comparison. Green OA impact studies can do this easily, by comparing OA and non-OA articles published in the same journal issue and year; Gold OA impact studies have the problem of equating OA and non-OA journals for content and quality. And although there are junk journals among both non-OA and Gold OA journals, it is undeniable that junk journals make up a higher proportion of Gold OA journals (see Beall's list), while Gold OA journals themselves are still a small proportion of all journals. So Gold OA impact estimates would be dragged down by the junk-Gold journals.

5. From Fools Gold to Fair Gold. The Science-Metrix study is right that toll-access publishing will prove unsustainable in the long run. But it is mandatory Green OA self-archiving that will drive the transition to Fair-Gold OA sooner rather than later.
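The within-journal comparison described in point 4 can be sketched in a few lines of Python. This is a simplified illustration, not the method of any particular study (published analyses typically use regression with additional controls): the record fields `journal`, `year`, `oa` and `citations` are hypothetical, and each journal-year cell is compared only with itself, so differences in content and quality between journals drop out of the ratio.

```python
from collections import defaultdict
from math import exp, log


def within_journal_oa_ratio(articles):
    """Geometric mean, over journal-year cells, of mean OA / mean non-OA citations.

    Each article is a dict with hypothetical keys: 'journal', 'year',
    'oa' (bool) and 'citations'. A cell lacking either OA or non-OA
    articles has no within-journal baseline, so it is skipped.
    """
    cells = defaultdict(lambda: {True: [], False: []})
    for a in articles:
        cells[(a["journal"], a["year"])][a["oa"]].append(a["citations"])

    log_ratios = []
    for groups in cells.values():
        oa, non_oa = groups[True], groups[False]
        if not oa or not non_oa:
            continue  # no same-journal, same-year comparison possible
        mean_oa = sum(oa) / len(oa)
        mean_non_oa = sum(non_oa) / len(non_oa)
        if mean_oa > 0 and mean_non_oa > 0:  # log ratio undefined at zero
            log_ratios.append(log(mean_oa / mean_non_oa))

    if not log_ratios:
        return None
    return exp(sum(log_ratios) / len(log_ratios))


# Hypothetical toy data: journal "C" has only an OA article, so it is ignored.
SAMPLE = [
    {"journal": "A", "year": 2008, "oa": True, "citations": 10},
    {"journal": "A", "year": 2008, "oa": True, "citations": 14},
    {"journal": "A", "year": 2008, "oa": False, "citations": 6},
    {"journal": "A", "year": 2008, "oa": False, "citations": 6},
    {"journal": "B", "year": 2008, "oa": True, "citations": 3},
    {"journal": "B", "year": 2008, "oa": False, "citations": 3},
    {"journal": "C", "year": 2008, "oa": True, "citations": 50},
]

print(round(within_journal_oa_ratio(SAMPLE), 3))  # 1.414
```

In the toy data, pooling all OA and all non-OA articles would instead give 19.25 / 5 = 3.85, inflated by journal C, which has no non-OA baseline at all. That is the between-journal problem in miniature.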

Harnad, S. (2014). The only way to make inflated journal subscriptions unsustainable: Mandate Green Open Access. LSE Impact of Social Sciences Blog, 28 April 2014.

Vincent-Lamarre, P., Boivin, J., Gargouri, Y., Lariviere, V., & Harnad, S. (2014). Estimating Open Access Mandate Effectiveness: I. The MELIBEA Score. arXiv preprint arXiv:1410.2926.

P.S. I learned from Richard Van Noorden's posting on this that the Open Access Button -- which names and shames publishers for embargoing OA whenever a user encounters a non-OA paper -- can now also email an automatic request to the author for a copy (if it can find the author's email address). This new capability complements the already existing copy-request Button implemented in many institutional repositories (see EPrints and DSpace), which is reliably linked to the author's email address. The purpose of the repositories' copy-request Button is to complement and reinforce institutional and funder OA mandates that require authors to deposit their final, refereed drafts immediately upon acceptance for publication rather than only after a publisher OA embargo has elapsed.

Tuesday, September 16. 2014

Algorithmic Almanacs: Lots, Bots and Nots

“The world's most prolific writer: Sverker Johansson has created more than three million Wikipedia articles, around one tenth of the entire content of the site. How, and why, does he do it?” -- Norwegian Inflight Magazine

The question's interesting, though the right description is not that Sverker Johansson “wrote” millions of Wikipedia articles but that he wrote an online search algorithm (a “bot”) that generated them automatically (otherwise the writer of a payroll algorithm would be the “author” of gazillions of paychecks…).

How and why indeed! It’s interesting, though, that so many Wikipedia entries can be generated algorithmically. The boundary between an encyclopedia and an almanac or even a chronicle of events has long been blurred by Wikipedia.

But it is a good idea to keep in mind that what is easy to generate algorithmically (and via its close cousin, crowdsourcing) in the googlized digital era is simple, 1st-order facts: answers to what?/when?/where? questions. Apples are red, the sky is blue, it rained in Burma on Tuesday, Chelsea beat Burnley 4-2.
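To make the point concrete, here is a minimal Python sketch of the kind of template filling a bot can do with 1st-order facts. It is not Johansson's bot; the record fields and sentence templates are hypothetical. However many records are fed in, every sentence it produces answers only a what?/when?/where? question.

```python
def match_report(record):
    """Turn one structured record of 1st-order facts into a sentence.

    'record' uses hypothetical keys: 'home', 'away', 'home_goals', 'away_goals'.
    Nothing here could say *why* the score came out the way it did.
    """
    home, away = record["home"], record["away"]
    h, a = record["home_goals"], record["away_goals"]
    if h > a:
        return f"{home} beat {away} {h}-{a}."
    if a > h:
        return f"{away} beat {home} {a}-{h}."
    return f"{home} and {away} drew {h}-{a}."


# One template, any number of records: this is where the "lots" come from.
RECORDS = [
    {"home": "Chelsea", "away": "Burnley", "home_goals": 4, "away_goals": 2},
]

for r in RECORDS:
    print(match_report(r))  # Chelsea beat Burnley 4-2.
```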

The real source of all this factual information is Google’s global digital database, and the fact that Google has reverse-indexed it all, making it searchable by Boolean (and/or/not) search algorithms as well as by more complex computational (Turing) algorithms.
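Reverse (or "inverted") indexing is what makes Boolean search cheap: instead of scanning every document, the engine looks up each word's set of documents and combines those sets with and/or/not. The toy Python sketch below shows the idea on the 1st-order facts above; it is a textbook illustration, not a description of how Google's index is actually built or queried, and the function names are hypothetical.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercased word to the set of ids of the documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            word = token.strip(".,;:!?")
            if word:
                index[word].add(doc_id)
    return index


def boolean_search(index, all_ids, all_of=(), any_of=(), none_of=()):
    """Evaluate (AND of all_of) AND (OR of any_of) AND NOT (any of none_of)."""
    result = set(all_ids)
    for word in all_of:
        result &= index.get(word, set())
    if any_of:
        result &= set().union(*(index.get(w, set()) for w in any_of))
    for word in none_of:
        result -= index.get(word, set())
    return result


DOCS = {
    1: "Apples are red.",
    2: "The sky is blue.",
    3: "It rained in Burma on Tuesday.",
    4: "Chelsea beat Burnley 4-2.",
}
INDEX = build_inverted_index(DOCS)

print(boolean_search(INDEX, DOCS, any_of=["red", "blue"], none_of=["sky"]))  # {1}
```

Everything such a search returns is a stored fact; combining index entries can answer "which documents say X and not Y?" but not why X is so.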

The questions that are much harder to answer algorithmically, even with all of the Google database and all the Boolean/Turing tools, are the higher-order how?/why? questions. If those could all be answered algorithmically, most of theoretical (i.e., non-experimental) science would be finished by now.

And the reason for that is probably that our brains don’t find all those how/why answers just algorithmically either, but also via dynamical (analog, sensorimotor) means that may prove accessible to future Turing-Test scale sensorimotor robots, but certainly not to today's purely symbolic bots, operating on purely symbolic databases.

In other words, it’s down to the symbol grounding problem again…

Stevan Harnad

Saturday, July 20. 2013

OA 2013: Tilting at the Tipping Point

Summary: The findings of Eric Archambault’s (2013) pilot study on the percentage of OA that is currently available are very timely, welcome and promising. The study finds that the percentage of articles published in 2008 that are OA in 2013 is between 42% and 48%. It does not estimate, however, when in that 5-year interval the articles were made OA. Hence the study cannot indicate what percentage of articles being published in 2013 is being made OA in 2013. Nor can it indicate what percentage of articles published before 2013 is OA in 2013. The only way to find that out is through a separate analysis of immediate Gold OA, delayed Gold OA, immediate Green OA, and delayed Green OA, by discipline, by publication year, and by OA year.

“This paper re-assesses OA availability in 2008”

For papers that were published in 2008 -- but when were those articles made available OA?

8% of all articles published in 2008 (or 1/5 of the 42% that were OA) were made OA via Gold, which means they were made available OA in 2008.

But 34% (or 4/5 of the 42% that were OA) were either Green or hybrid Gold or delayed Gold [it is not at all clear why these were all conflated in the analysis]:

Of this 4/5 of what was made OA, it is likely that the largest portion was Green. But when each article was made Green OA is unknown. Some was made Green OA immediately, in 2008, but some was delayed Green, made OA at any point in the interval between 2008 and the date the sampling was done.

For the proportion of the 4/5 OA that was delayed Gold OA (i.e., made OA by the subscription publisher after 6-12 months or longer), that delay has to be calculated. (Björk and Laakso found that the second largest portion of OA was delayed Gold. The names of the delayed Gold journals are known, and so are their delay periods.)

The portion of the 4/5 OA that was hybrid Gold was made Gold OA immediately in 2008 by the publisher, but hybrid Gold represents the smallest portion of the 4/5 OA. The names of the hybrid Gold journals are known. Whether the articles were hybrid Gold or Green needs to be ascertained and separate calculations need to be made.

Until all this is known, it is not known what proportion of 2008 articles was OA in 2008. The rest of the findings are not about OA at all, but about embargoed access through some indeterminate delay between 2008 and 2013 (5 years!).

“the tipping point for OA has been reached and… one can expect that, from the late 2000s onwards, the majority of published academic peer-reviewed journal articles were available for free to end-users.”

But when? What publication date was accessible as of what OA date for that publication? Otherwise this is not about OA (which means immediate online access) but about OA embargoes and delays. This is not what is meant by a “tipping point.”

“The paper presents the results for the pilot phase of a study that aims to estimate the proportion of peer-reviewed journal articles which are freely available, that is, OA for the last ten years (the pilot study is on OA availability in 2008).”

This study seems to be on OA availability of 2008 articles, not OA availability in 2008. And availability somewhere within 10 years is not OA.

“An effective definition of OA for this study is the following: ‘OA, whether Green or Gold, is about giving people free access to peer-reviewed research journal articles.’”

(Free online access. But to be OA, the access must be immediate, not delayed, and permanent, not temporary.)

“OA is rarely free”

This is not quite the point: It is publication that is not free. Its costs must be paid for -- either via subscriptions, subsidies or publication charges.

Subscription fees cover publication costs by charging subscribers, for access. Author publication fees cover publication costs by charging authors, for publication.

OA is simply toll-free online access, irrespective of whether publication is paid for via subscriptions, subsidies, or publication fees.

“Thus, the term toll-access, to distinguish the non-OA literature, is avoided here.”

Toll access is the correct term when access must be paid for. This should not be conflated with how publication costs are paid for.

“The core use of Ulrich in this project was to calibrate the proportion of papers from each of 22 disciplines used to present disaggregated statistics.”

But were the disciplines weighted also by their proportion of total annual article output?

(Treating disciplines as equal had been one of our mistakes, resulting in some discrepancies with Bjork & Laakso. When disciplines are properly weighted, our figures agree more with Bjork & Laakso’s. -- The remaining discrepancy is more challenging, and it is about the uncertainty of when articles were made OA, in the case of Green: the publication date is not enough; nor the sampling date, unless it is in the same year! We are now conducting a study on publication date vs OA date, to estimate the proportion of immediate-Green vs the average latency of delayed Green compared to immediate Gold and delayed Gold.)

“When selecting journals to be included for an article-level database such as Scopus, deciding whether to include a journal has a direct impact on production costs and partly because of this, database publishers tend to have a bias towards larger journals”

True, but WoS and SCOPUS also have quality criteria, whereas Ulrichs does not. Ulrichs can and does cover all.

“Despite a 50% increase in journal coverage, Scopus only has about 20% more articles. A sensitivity analysis was performed”

There is still the question of quality: Including more journals probably means lowering average quality.

And there is also the question of discipline size: Estimating overall and average %OA cannot treat 22 disciplines as equal if some publish many more articles than others.
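The difference discipline weighting makes is easy to see with a toy calculation. In the Python sketch below the two disciplines and their figures are purely hypothetical: a small field with high %OA and a large field with low %OA. Averaging the two percentages treats the fields as equal; weighting each by its article output gives the %OA of the articles themselves.

```python
def unweighted_mean_oa(pct_oa):
    """Average %OA treating every discipline as equal."""
    return sum(pct_oa.values()) / len(pct_oa)


def output_weighted_mean_oa(pct_oa, n_articles):
    """Average %OA weighting each discipline by its annual article output."""
    total = sum(n_articles[d] for d in pct_oa)
    return sum(pct_oa[d] * n_articles[d] for d in pct_oa) / total


# Hypothetical figures, for illustration only.
PCT_OA = {"small field": 60, "large field": 20}
N_ARTICLES = {"small field": 10_000, "large field": 90_000}

print(unweighted_mean_oa(PCT_OA))                   # 40.0
print(output_weighted_mean_oa(PCT_OA, N_ARTICLES))  # 24.0
```

With real data, the weights would be each discipline's actual annual article counts; the gap between the two averages grows with the imbalance in field sizes.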

“For gold articles, an estimate of the proportion of papers was made from the random sample by matching the journals that were known to be gold in 2008.”

This solves the problem of the potential discrepancy between publication date and OA date for Gold OA -- but not for Green OA, of which there is about 3 times as much as Gold. Nor for delayed Gold OA.

“[Articles] were selected by tossing the 100,000 a few more times using the rand() command in Excel, then proceeding to the selection of the required number of records.”

This presumably provided the articles in the 22 disciplines, but were they equal or proportionate?

“A test was then conducted with 20,000 records being provided to the Steven Harnad team in Montreal.”

But what was the test? To search for those 20,000 records on the web with our robot? And what was the date of this OA test (for articles published in 2008)?

“the team led by Harnad measured only 22% of OA in 2008 overall ‘out of the 12,500 journals indexed by Thomson Reuters using a robot that trawled the Web for OA full-texts’ (Gargouri et al., 2012)”

Our own study measured %OA for articles published in 2008 and indexed by WoS, as sampled in 2011; the Archambault pilot study was conducted two years later, and on articles indexed by SCOPUS. There may have been more 2008 articles made OA two years later, and more indexed by SCOPUS than by WoS.

It is also crucial to estimate both the %OA and the latency of the OA, in order to estimate the true annual %OA and also its annual growth rate (for both Green and delayed Gold). And it has to be balanced by discipline size, if it is to be a global average of total articles, rather than unweighted disciplines.

“a technique to measure the proportion of OA literature based on the Web of Science produces fairly low recall and seriously underestimates OA availability.”

Agreed, if the objective is a measure of %OA based indiscriminately on total quantity of articles.

But the WoS/SCOPUS/Ulrichs differences could also be differences in quality -- and definitely differences in the degree to which researchers need access to the journals in question. WoS includes all the "must have" (“core”) journals, and then some; SCOPUS still more; and Ulrichs still more. So these four layers and their %OA should be analyzed and interpreted separately too.

“This extensive analysis therefore suggests that 48% of the literature published in 2008 may be available for free.”

Yes, but when were those 2008 articles made available free?

“one can infer that OA availability very likely passed the tipping point in 2008 (or earlier) and that the majority of peer-reviewed/scholarly papers published in journals in that year are now available for free in one form or another to end-users.”

It's not clear what a "tipping point" is (50%?). And a tipping point for what: OA? Or eventual delayed OA after an N-year embargo?

What the pilot study’s result shows is not that OA reached the 50% point for 2008 in 2008! It reached the 50% point for 2008 somewhere between 2008 and when the sampling was done!

What we need to know now is how fast the %OA in each year, for articles published in that year (or the immediately preceding one), is approaching 50%.

“These results suggest that using Scopus and an improved harvester ‘to trawl the Web for OA full-texts’ could yield substantially more accurate results than the methods used by Björk et al. and Harnad et al.”

But why bundle hybrid and delayed Gold with Green, immediate and delayed? They are not at all the same thing!

Hybrid Gold is immediate Gold.

“Embargo” is ambiguous – it can be "delayed Gold," provided by the publisher after an embargo, or it can be embargoed Green, provided by the author after an embargo.

These mean different things for OA and need to be calculated separately.

For hybrid Gold and delayed Gold, the OA dates can be known exactly. For Green they cannot. This is a crucial difference, yet Green is the biggest category.

“Pay-per-article OA, journals with embargo periods and journals allowing partial indexing following granting agencies’ OA policies are considered hybrid, and these data are bundled here with green OA (self-archiving).”

"Journals with embargo periods" is ambiguous, because there are subscription journals that make their own articles free online after an embargo period (“delayed Gold”), and there are subscription journals that impose an embargo on how soon their authors can make their own papers Green OA.

And some authors do and some authors don't make their articles Green OA.

And some authors do and some authors don't comply with the Green embargoes.

And some authors are mandated to make their articles Green OA by their funders or institutions.

And allowable embargo lengths vary from mandate to mandate.

And mandates are growing with time.

What is needed is separate analysis (by discipline, weighted) for Gold, hybrid Gold, Delayed Gold and Green. And Green in particular needs to be separately analyzed for immediate-Green and delayed Green.
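The separation called for above can be made explicit as a per-article classification rule. The Python sketch below is one way to do it, under simplifying assumptions that are not the study's: each record carries hypothetical fields for who provides the free copy (`oa_provider`: the publisher, the author, or nobody), the journal's business model, the publication date, and the earliest date a free copy was found. "Immediate" is taken here to mean no later than the calendar month of publication, a threshold one could reasonably set differently. For Green OA, that OA date is precisely the figure that is hard to obtain in practice.

```python
from collections import Counter
from datetime import date


def months_between(start, end):
    """Whole calendar months from start to end (negative if end is earlier)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def classify(article, immediate_months=0):
    """Assign one article to Gold, hybrid Gold, delayed Gold, immediate/delayed Green, or non-OA."""
    provider = article.get("oa_provider")  # "publisher", "author", or None
    if provider is None:
        return "non-OA"
    lag = months_between(article["published"], article["oa_since"])
    if provider == "publisher":
        if article["journal_model"] == "gold":
            return "Gold"
        # Subscription journal freeing the article itself: immediately = hybrid, later = delayed.
        return "hybrid Gold" if lag <= immediate_months else "delayed Gold"
    if provider == "author":  # repository or author website: Green either way
        return "immediate Green" if lag <= immediate_months else "delayed Green"
    raise ValueError(f"unknown oa_provider: {provider!r}")


# Hypothetical sample of 2008 articles.
ARTICLES_2008 = [
    {"oa_provider": "publisher", "journal_model": "gold",
     "published": date(2008, 3, 1), "oa_since": date(2008, 3, 1)},
    {"oa_provider": "publisher", "journal_model": "subscription",
     "published": date(2008, 3, 1), "oa_since": date(2008, 3, 20)},
    {"oa_provider": "publisher", "journal_model": "subscription",
     "published": date(2008, 3, 1), "oa_since": date(2009, 3, 1)},
    {"oa_provider": "author", "journal_model": "subscription",
     "published": date(2008, 3, 1), "oa_since": date(2007, 12, 1)},
    {"oa_provider": "author", "journal_model": "subscription",
     "published": date(2008, 3, 1), "oa_since": date(2009, 11, 1)},
    {"oa_provider": None, "journal_model": "subscription",
     "published": date(2008, 3, 1), "oa_since": None},
]

print(Counter(classify(a) for a in ARTICLES_2008))
```

Tallying these categories separately, by weighted discipline, is what would allow the growth rates of each to be estimated rather than bundled.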

Only such an analysis will give an estimate of the true extent and growth rate for immediate Gold, immediate Green, and delayed Gold and delayed Green (per 6-month increment, say), by discipline.

“It seems that the tipping point has been passed (OA availability over 50%) in Biology, Biomedical Research, Mathematics & Statistics, and General Science & Technology”

Much as I wish it were so, I am afraid this is not yet true (or cannot be known on the basis of the results of this pilot study).

50% has only been reached for 2008 articles some time between 2008 and the time the study’s sample was collected. And the fields are of different sizes. And the dates are much surer for Gold and hybrid Gold than for delayed Gold and Green, both immediate and delayed.

“many previous studies might have included disembargoed papers and pay-per-article OA, which is not the case here”

But both hybrid Gold and Delayed Gold should be analyzed separately from Green because their OA dates are knowable. And as such, the results should be added to pure Gold, to estimate overall Gold OA, immediate and delayed.

Green OA, though bigger, needs estimates of Green OA latency, by field: i.e., the average delay between publication date and OA date, in order to estimate the percentage of immediate Green and of various degrees of delayed Green.
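Once OA dates can be estimated, these latency figures are straightforward to compute per field. The Python sketch below assumes hypothetical inputs: for each field, the lags in months between publication and first Green OA for the Green articles found, plus the total number of articles sampled in that field. Deposits made before publication (negative lags) are counted as zero delay. The cumulative percentages at several horizons (also hypothetical) are what separate immediate Green from the various degrees of delayed Green.

```python
def green_latency_summary(green_lags, n_articles, horizons=(0, 6, 12, 24)):
    """Per-field Green OA latency figures.

    green_lags: field -> list of lags in months (OA date minus publication
                date) for the Green OA articles found in that field.
    n_articles: field -> total articles sampled in that field, OA or not.
    """
    summary = {}
    for field, lags in green_lags.items():
        delays = [max(lag, 0) for lag in lags]  # pre-publication deposits count as 0
        n = n_articles[field]
        summary[field] = {
            "mean_delay_months": sum(delays) / len(delays) if delays else None,
            "immediate_share_of_green": (
                sum(1 for d in delays if d == 0) / len(delays) if delays else None
            ),
            # % of ALL sampled articles in the field that were Green OA within h months
            "pct_green_within": {
                h: 100 * sum(1 for d in delays if d <= h) / n for h in horizons
            },
        }
    return summary


# Hypothetical data for one field: 5 Green OA articles out of 10 sampled.
LAGS = {"field X": [-6, 0, 0, 3, 12]}
TOTALS = {"field X": 10}

print(green_latency_summary(LAGS, TOTALS)["field X"])
```

Here only 30% of the field's articles would count as immediately OA, even though 50% are Green OA within a year; a single snapshot percentage hides exactly that difference.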

“These data present the relative citation rate of OA publications overall, Gold OA and hybrid OA forms relative to publications in each discipline.”

First, it has to be repeated that it is a mistake to lump together Green with Hybrid and Delayed Gold, for the reasons mentioned earlier (regarding date of publication and date of OA), but also because the Gold OA vs non-OA citation comparison (for pure Gold as well as Delayed Gold) is a between-journal comparison -- making it hard to equate for content and quality -- whereas the Green OA vs non-OA citation comparison is a within-journal comparison (hence much more equivalent in content and quality).

(Hybrid Gold, in contrast, does allow within-journal comparisons, but the sample is very small and might also be biased in other ways.)

“many Gold journals are younger and smaller”

Yes, but even more important, many Gold OA journals are not of the same quality as non-OA journals. Journals are hard to equate for quality. That is why within-journal comparisons are more informative than between-journal comparisons for the citation advantage.

“Gold journals might provide an avenue for less mainstream, more revolutionary science.”

Or for junk science (as you note): This speculative sword can cut both ways; but today it's just speculation.

“the ARC [citation impact] is not scale-invariant, and larger journals have an advantage as this measure is not corrected sufficiently for journal size”

This is another reason the OA citation advantage is better estimated via within-journal comparisons rather than between-journal comparisons.

“the examination of OA availability per country”

Again, country differences would be much more informative if clearly separated by Gold, Hybrid Gold, Delayed Gold and Green, as well as by levels of journal quality, from WoS core, to rest of WoS, to SCOPUS, to Ulrichs.

“Finding that the tipping point has been reached in open access is certainly an important discovery”

If only it were sure!

By the way, "tipping point" is a pop expression, and it does not particularly mean 50%. It means something like: the point at which growth in a temporal process has become unstoppable in its trajectory toward 100%. This can occur well before 50% or even after. It requires other estimates rather than just one-off total percentages. It needs year to year growth curves. What we have here is the 50% point for 2008 papers (in some fields), reached some time between 2008 and today!

“This means that aggressive publishers such as Springer are likely to gain a lot in the redesigned landscape”

It is not at all clear how this pilot study finding of the 50% point for free access to 2008 articles (via Gold, and even more via Green OA) has now become a message about "aggressive" publishers (presumably regarding some form of Gold OA). The finding is not primarily about Gold OA publishing!

“green OA only appears to move slowly, whereas Gold OA and hybrid toll before the process as opposed to toll after are in the fast lane”

It is even less clear how these results -- concerning year 2008 articles, made OA some time between 2008 and now, about one third of them Gold OA and about 2/3 of them Green, with no year-by-year growth curves -- show that Green grows slowly and Gold is in the fast lane.

(There has probably indeed been a growth spurt in Gold in the past few years, most of it because of one huge Gold mega-journal, PLOS ONE: But how do the results of the present study support any conclusion on the relative growth rates of Gold and Green? Especially given that Green growth depends on mandate growth, and Green mandates are indeed growing, with 20 new major US funding agencies mandating Green just this year [2013]!)

“The market power will shift tremendously from the tens of thousands of buyers that publishers’ sales staff nurtured to the millions of researchers that will now make the atomistic decision of how best to spend their publication budget”

Where do all these market conjectures come from, in a study that has simply shown that 50% of 2008 articles are freely accessible online 5 years later, partly via Gold, but even more via Green?

Archambault, Eric (2013) The Tipping Point - Open Access Comes of Age. ISSI 2013: Proceedings of the 14th International Society of Scientometrics and Informetrics Conference, Vienna, Austria, 15-19 July 2013.

Wednesday, February 23. 2011

Critique of McCabe & Snyder: Online not= OA, and OA not= OA journal

Comments on: McCabe, MJ & Snyder, CM (2011) Did Online Access to Journals Change the Economics Literature?

Abstract: Does online access boost citations? The answer has implications for issues ranging from the value of a citation to the sustainability of open-access journals. Using panel data on citations to economics and business journals, we show that the enormous effects found in previous studies were an artifact of their failure to control for article quality, disappearing once we add fixed effects as controls. The absence of an aggregate effect masks heterogeneity across platforms: JSTOR boosts citations around 10%; ScienceDirect has no effect. We examine other sources of heterogeneity including whether JSTOR benefits "long-tail" or "superstar" articles more.

The following quotes are from McCabe, MJ (2011) Online access versus open access. Inside Higher Ed, February 10, 2011.

MCCABE: …I thought it would be appropriate to address the issue that is generating some heat here, namely whether our results can be extrapolated to the OA environment….

1. Selection bias and other empirical modeling errors are likely to have generated overinflated estimates of the benefits of online access (whether free or paid) on journal article citations in most if not all of the recent literature.

If "selection bias" refers to authors' bias toward selectively making their better (hence more citeable) articles OA, then this was controlled for in the comparison of self-selected vs. mandated OA, by Gargouri et al (2010) (uncited in the McCabe & Snyder (2011) [M & S] preprint, but known to the authors -- indeed the first author requested, and received, the entire dataset for further analysis: we are all eager to hear the results).

If "selection bias" refers to the selection of the journals for analysis, I cannot speak for studies that compare OA journals with non-OA journals, since we only compare OA articles with non-OA articles within the same journal. And it is only a few studies, like Evans and Reimer's, that compare citation rates for journals before and after they are made accessible online (or, in some cases, freely accessible online). Our principal interest is in the effects of immediate OA rather than delayed or embargoed OA (although the latter may be of interest to the publishing community).

MCCABE: 2. There are at least 2 “flavors” found in this literature: 1. papers that use cross-section type data or a single observation for each article (see for example, Lawrence (2001), Harnad and Brody (2004), Gargouri, et. al. (2010)) and 2. papers that use panel data or multiple observations over time for each article (e.g. Evans (2008), Evans and Reimer (2009)).

We cannot detect any mention or analysis of the Gargouri et al. paper in the M & S paper…
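The difference between the two "flavors" can be illustrated with a deliberately artificial panel. In the Python sketch below (hypothetical numbers, no noise, no time trend), each article's citations equal its latent quality plus a fixed boost of 1 when it is online, and only the high-quality articles go online in the second year. A cross-section comparison of online vs offline observations mixes the boost with the quality difference; the within-article (fixed effects) estimate, which compares each article only with itself, recovers the boost. This illustrates the econometric point only: it says nothing about OA vs non-OA, and a real analysis would also need year effects and noise.

```python
def make_panel(quality, boost, online_threshold):
    """Two-year panel: offline in year 0; online in year 1 only if quality >= threshold."""
    panel = {}
    for article, q in quality.items():
        online_later = 1 if q >= online_threshold else 0
        panel[article] = [(0, q), (online_later, q + boost * online_later)]
    return panel


def cross_section_difference(panel):
    """Mean citations of online observations minus mean of offline observations."""
    on = [c for obs in panel.values() for x, c in obs if x == 1]
    off = [c for obs in panel.values() for x, c in obs if x == 0]
    return sum(on) / len(on) - sum(off) / len(off)


def within_article_estimate(panel):
    """Fixed effects (within) slope: demean online status and citations per article."""
    num = den = 0.0
    for obs in panel.values():
        x_bar = sum(x for x, _ in obs) / len(obs)
        c_bar = sum(c for _, c in obs) / len(obs)
        for x, c in obs:
            num += (x - x_bar) * (c - c_bar)
            den += (x - x_bar) ** 2
    return num / den


# Hypothetical latent quality; only articles with quality >= 8 go online.
QUALITY = {"a1": 1, "a2": 2, "a3": 8, "a4": 9}
PANEL = make_panel(QUALITY, boost=1.0, online_threshold=8)

print(round(cross_section_difference(PANEL), 3))  # 5.667
print(within_article_estimate(PANEL))             # 1.0
```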

MCCABE: 3. In our paper we reproduce the results for both of these approaches and then, using panel data and a robust econometric specification (that accounts for selection bias, important secular trends in the data, etc.), we show that these results vanish.

We do not see our results cited or reproduced. Does "reproduced" mean "simulated according to an econometric model"? If so, that is regrettably too far from actual empirical findings to be anything but speculations about what would be found if one were actually to do the empirical studies.

MCCABE: 4. Yes, we “only” test online versus print, and not OA versus online for example, but the empirical flaws in the online versus print and the OA versus online literatures are fundamentally the same: the failure to properly account for selection bias. So, using the same technique in both cases should produce similar results.

Unfortunately this is not very convincing. Flaws there may well be in the methodology of studies comparing citation counts before and after the year in which a journal goes online. But these are not the flaws of studies comparing citation counts of articles that are and are not made OA within the same journal and year.

Nor is the vague attribution of "failure to properly account for selection bias" very convincing, particularly when the most recent study controlling for selection bias (by comparing self-selected OA with mandated OA) has not even been taken into consideration.

Conceptually, the question of whether online access increases citations over offline access is entirely different from the question of whether OA increases citations over non-OA, because (as the authors note) the online/offline effect concerns ease of access: Institutional users have either offline access or online access, and, according to M & S's results, in economics, the increased ease of accessing articles online does not increase citations.

This could be true, although the growth across those same years of the tendency in economics to make prepublication preprints OA [harvested by RePEc] through author self-archiving, much as the physicists had started doing a decade earlier in arXiv, and computer scientists started doing even earlier [later harvested by CiteSeerX], could be producing a huge background effect not taken into account at all in M & S's painstaking temporal analysis (which itself appears as an OA preprint in SSRN!).

But any way one looks at it, there is an enormous difference between comparing easy vs. hard access (online vs. offline) and comparing access with no access. For when we compare OA vs non-OA, we are taking into account all those potential users that are at institutions that cannot afford subscriptions (whether offline or online) to the journal in which an article appears. The barrier, in other words (though one should hardly have to point this out to economists), is not an ease barrier but a price barrier: For users at nonsubscribing institutions, non-OA articles are not just harder to access: They are impossible to access -- unless a price is paid.

(I certainly hope that M & S will not reply with "let them use interlibrary loan (ILL)"! A study analogous to M & S's online/offline study comparing citations for offline vs. online vs. ILL access in the click-through age would not only strain belief if it too found no difference, but it too would fail to address OA, since OA is about access when one has reached the limits of one's institution's subscription/license/pay-per-view budget. Hence it would again miss all the usage and citations that an article would have gained if it had been accessible to all its potential users and not just to those whose institutions could afford access, by whatever means.)

It is ironic that M & S frame their conclusions about OA in economic terms (predictably, as their interest is in modelling publication economics): the costs and benefits, for an author, of paying to publish in an OA journal. They conclude that since they have shown it will not generate more citations, it is not worth the money.

But the most compelling findings on the OA citation advantage come from OA author self-archiving (of articles published in non-OA journals), not from OA journal publishing. Those are the studies that show the OA citation advantage, and the advantage does not cost the author a penny! (The benefits, moreover, accrue not only to authors and users, but to their institutions too, as the economic analysis of Houghton et al shows.)

And the extra citations resulting from OA are almost certainly coming from users for whom access to the article would otherwise have been financially prohibitive. (Perhaps it's time for more econometric modeling from the user's point of view too…)

I recommend that M & S look at the studies of Michael Kurtz in astrophysics. Those, too, included sophisticated long-term studies of the effect of the wholesale switch from offline to online, and Kurtz found that total citations were in fact slightly reduced, overall, when journals became accessible online! But astrophysics, too, is a field in which OA self-archiving is widespread. Hence whether and when journals go online is moot, insofar as citations are concerned.

(The likely hypothesis for the reduced citations -- compatible also with our own findings in Gargouri et al -- is that OA levels the playing field for users: OA articles are accessible to all their potential users, not just to those whose institutions can afford toll access. As a result, users can self-selectively decide to cite only the best and most relevant articles of all, rather than having to make do with a selection among only the articles to which their institutions can afford toll access. One corollary of this [though probably also a spinoff of the Seglen/Pareto effect] is that the biggest beneficiaries of the OA citation advantage will be the best articles. This is a user-end -- rather than an author-end -- selection effect...)

MCCABE: 5. At least in the case of economics and business titles, it is not even possible to properly test for an independent OA effect by specifically looking at OA journals in these fields since there are almost no titles that switched from print/online to OA (I can think of only one such title in our sample that actually permitted backfiles to be placed in an OA repository). Indeed, almost all of the OA titles in econ/business have always been OA and so no statistically meaningful before and after comparisons can be performed.

The multiple conflation here is so flagrant that it is almost laughable. Online ≠ OA and OA ≠ OA journal.

First, the method of comparing the effect on citations before vs. after the offline/online switch will have to make do with its limitations. (We don't think it's of much use for studying OA effects at all.) The method of comparing the effect on citations of OA vs. non-OA within the same (economics/business, toll-access) journals can certainly proceed apace in those disciplines; indeed, the studies have been done, and the results are much the same as in other disciplines.

M & S have our latest dataset: Perhaps they would care to test whether the economics/business subset of it is an exception to our finding that (a) there is a significant OA advantage in all disciplines, and (b) it's just as big when the OA is mandated as when it is self-selected.

MCCABE: 6. One alternative, in the case of cross-section type data, is to construct field experiments in which articles are randomly assigned OA status (e.g. Davis (2008) employs this approach and reports no OA benefit).

And another one -- based on an incomparably larger N, across far more fields -- is the Gargouri et al study that M & S fail to mention in their article, in which articles are mandatorily assigned OA status, and for which they have the full dataset in hand, as requested.

MCCABE: 7. Another option is to examine articles before and after they were placed in OA repositories, so that the likely selection bias effects, important secular trends, etc. can be accounted for (or in economics jargon, “differenced out”). Evans and Reimer’s attempt to do this in their 2009 paper but only meet part of the econometric challenge.

M & S are rather too wedded to their before/after method and thinking! The sensible time for authors to self-archive their papers is immediately upon acceptance for publication. That's before the published version has even appeared. Otherwise one is not studying OA but OA embargo effects. (But let me agree on one point: Unlike journal publication dates, OA self-archiving dates are not always known or taken into account; so there may be some drift there, depending on when the author self-archives. The solution is not to study the before/after watershed, but to focus on the articles that are self-archived immediately rather than later.)

Stevan Harnad

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5(10): e13636

Harnad, S. (2010) The Immediate Practical Implication of the Houghton Report: Provide Green Open Access Now. Prometheus 28 (1): 55-59.

Sunday, October 31. 2010

Scholarly/Scientific Impact Metrics in the Open Access Era

Workshop on Open Archives and their Significance in the Communication of Science, Swedish University of Agricultural Sciences, Uppsala, Sweden, 16-17 November 2010


Stevan Harnad

Canada Research Chair in Cognitive Sciences

Université du Québec à Montréal

CANADA

&

School of Electronics and Computer Science

University of Southampton

UNITED KINGDOM

OVERVIEW: The real merit of research is in its specific, substantive content. But if a contribution proves important and useful, it will be taken up, built upon and cited in subsequent research. Scientometrics attempts to estimate and quantify this research uptake and impact. The classical metric of research productivity had been publication counts ("publish or perish") and the prestige of their publication venues (refereed journals or scholarly monographs), based on their prior track records for quality and importance. Publication counts were soon supplemented by "journal impact factors" (average citation counts), and eventually also by individual article and author citation counts. In the online era, the potential metrics have extended further to include download counts, growth and decay rates for metrics, co-citation measures, and more elaborate a-priori formulas such as the "h-index" and its variants. Still prominently missing today, however, are three things: (1) book metrics, (2) a validation of the metrics, discipline by discipline, that tests and confirms their meaning and predictive power, especially in research assessment, and (3) a sufficiently large and open webwide database to allow the global research community to test, validate and monitor its metrics (which are currently collected systematically only by proprietary commercial databases). The Open Access (OA) movement (for providing free online access to all journal articles) is helping to generate the requisite OA database for articles by extending universities' and funders' "publish or perish" mandates to also require their authors to make their publications OA by depositing them in their institution's OA repository. OA not only makes it possible to harvest research impact metrics webwide, but it has also been shown to increase them (the "OA Impact Advantage"). 
I will describe the new OA metrics, the OA Advantage, and how OA metrics can be tested and validated for use in research assessment.

Brody, T., Carr, L., Harnad, S. and Swan, A. (2007) Time to Convert to Metrics. Research Fortnight pp. 17-18.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

Carr, L., Hitchcock, S., Oppenheim, C., McDonald, J. W., Champion, T. and Harnad, S. (2006) Extending journal-based research impact assessment to book-based disciplines.

Dror, I. and Harnad, S. (2009) Offloading Cognition onto Cognitive Technology. In Dror, I. and Harnad, S. (Eds) (2009): Cognition Distributed: How Cognitive Technology Extends Our Minds. Amsterdam: John Benjamins

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5(10): e13636

Harnad, S. (2010) The Immediate Practical Implication of the Houghton Report: Provide Green Open Access Now. Prometheus 28(1): 55-59.

Harnad, S. (2010) Open Access to Research: Changing Researcher Behavior Through University and Funder Mandates. In Parycek, P. & Prosser, A. (Eds.): EDEM2010: Proceedings of the 4th International Conference on E-Democracy. Austrian Computer Society, 13-22

Harnad, S. (2010) Opening Research on the Web: Hastening the Inevitable. Internet Evolution. June 6.

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1)

Sunday, September 26. 2010

Needed: OA -- Not Alternative Impact Metrics for OA Journals

An article by Ulrich Herb (2010) [UH] is predicated on one of the oldest misunderstandings about OA: that OA ≡ OA journals ("Gold OA") and that the obstacle to OA is that OA journals don't have a high enough impact factor:

UH: "This contribution shows that most common methods to assess the impact of scientific publications often discriminate Open Access publications – and by that reduce the attractiveness of Open Access for scientists."

The usual solution that is proposed for this non-problem is that we should therefore give OA journals a higher weight in performance evaluation, despite their lower impact factor, in order to encourage OA.

(This is nonsense, and it is not the "solution" proposed by UH. A journal's weight in performance evaluation needs to be earned -- on the basis of its content's quality and impact -- not accorded by fiat, in order to encourage OA.)

The "solution" proposed by UH is not to give OA journals a higher a-priori weight, but to create new impact measures that will accord them a higher weight.

UH: "Assuming that the motivation to use Open Access publishing services (e.g. a journal or a repository) would increase if these services would convey some sort of reputation or impact to the scientists, alternative models of impact are discussed."

New impact measures are always welcome -- but they too must earn their weights based on their track-records for validity and predictivity.

And what is urgently needed by and for research and researchers is not more new impact measures but more OA.

And the way to provide more OA is to provide OA to more articles -- which can be done in two ways: not just by publishing in OA journals (Gold OA), but also by self-archiving articles published in all journals (whether OA or non-OA) in institutional repositories, to make them OA ("Green OA").

Ulrich Herb seems to have misunderstood this completely (equating OA with Gold OA only). The contradiction is evident in two successive paragraphs:

UH: "Commonly used citation-based indicators provide some arguments pro Open Access: Scientific documents that can be used free of charge are significantly more often downloaded and cited than Toll Access documents are (Harnad & Brody, 2004 ; Lawrence, 2001). Moreover the frequency of downloads seems to correlate with the citation counts of scientific documents (Brody, Harnad & Carr, 2006).

"Nevertheless there is lack of tools and indicators to measure the impact of Open Access publications. Especially documents that are self-archived on Open Access Repositories (and not published in an Open Access Journal) are excluded from the relevant databases (WoS, JCR, Scopus, etc.) that are typically used to calculate JIF-scores or the h-index" [emphasis added].

The emphasized passage in the second paragraph (that self-archived documents "are excluded from the relevant databases") is completely erroneous, and in direct contradiction with what is stated in the immediately preceding paragraph. For the increased citations generated by making articles in any journal (OA or non-OA) OA by making them freely accessible online are included in the relevant databases used to calculate journal impact. Indeed, most of the evidence that OA increases citations comes from comparing the citation counts of articles (in the same journal and issue) that are and are not made OA by their authors. (And these within-journal comparisons are necessarily based on Green OA, not Gold OA.)

Yes, there are journals (OA and non-OA -- mostly non-OA!) that are not (yet) indexed by some of the databases (WoS, JCR, Scopus, etc.); but that is not an OA problem.

Yes, let's keep enhancing the visibility and harvestability of OA content; but that is not the OA problem: the problem is that most content is not yet OA.

And yes, let's keep developing rich, new OA metrics; but you can't develop OA metrics until the content is made OA.

References

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8 (11) doi:10.3354/esep00088 (Special issue: The Use And Misuse Of Bibliometric Indices In Evaluating Scholarly Performance)

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1)

Herb, U. (2010) OpenAccess Statistics: Alternative Impact Measures for Open Access documents? An examination how to generate interoperable usage information from distributed Open Access services. In: L'information scientifique et technique dans l'univers numérique. Mesures et usages. L'association des professionnels de l'information et de la documentation, ADBS, pp. 165-178

Wednesday, July 21. 2010

Why It Is Not Enough Just To Give Green OA Higher Weight Than Gold OA

MELIBEA's validator assesses OA policies using an algorithm that generates for each policy a one-dimensional measure, "OA%val," based on a number of weighted factors.

In assigning weights to these factors, it is not just a matter of whether one puts a greater weight on green than on gold overall. The devil is in the details. Since MELIBEA's "OA%val" is one-dimensional, the exact weights assigned by the algorithm matter very much: in some crucial combinations, assigning any non-zero weight at all to gold in an OA policy evaluation can make the score deleterious to green (and hence to OA itself). I will use the most problematic case to illustrate:

With all the policy components that one can combine in order to give an OA policy a score, consider the relative weighting one is to give to four policy models:

Policy Model 1 neither requires green nor funds gold (gr/go).

Policy Model 2 does not require green, but funds gold (gr/GO).

Policy Model 3 requires green and does not fund gold (GR/go).

Policy Model 4 requires green and funds gold (GR/GO).

One can agree to weight GR/GO > gr/go.

One can also agree (as above) to weight GR/go > gr/GO.

One can even agree to weight GR/GO > GR/go (although I do have reservations about this, because of the potential deterrent effects of over-demanding early policy models on the spread of green OA mandates, but I will not bring these reservations into this discussion).

The problematic case concerns whether to assign a greater weight to gr/GO than to gr/go in the MELIBEA score (i.e., whether gr/GO > gr/go, Policy 2 vs. Policy 1).

I am strongly opposed to weighting gr/GO > gr/go, because I am convinced that when an institution adopts a premature gold payment policy without first adopting a green requirement policy, this diminishes rather than increases the likelihood of an upgrade to a green requirement.

So in that case, despite the fact that a gr/GO policy no doubt generates somewhat more OA than gr/go, this small local increase in OA is not better for the growth of OA overall. Rather, it reinforces the widespread misconception that the way to generate OA is to pay for gold OA (and then wait for others to do the same). Such a policy neglects the much more important need to mandate green OA, cost-free, first. It tries to pay for OA even while subscriptions are still paying the full cost of publication, hence still tying down most of the potential funds to pay for gold OA. Giving a gr/GO policy a higher weight than gr/go obscures the fact that paid gold can only cover a small fraction of an institution's output, and at an extra cost, whereas requiring green covers all of it, and at no extra cost.

There are ways to remedy this, algorithmically (for example, by giving GO a non-zero weight only when GR also has a non-zero weight).

The important point to note, however, is that these algorithmic subtleties are not resolved by simply stating that one assigns a higher weight -- even a much higher weight -- to GR than to GO: Promoting the right priorities in OA policy design requires a much more nuanced approach.
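The gating remedy mentioned above (gold counts only when green is also required) can be sketched with a toy score. The weights `W_GR = 3` and `W_GO = 1` and both function names are hypothetical, chosen only for illustration; this is not MELIBEA's actual OA%val algorithm. The sketch shows that a plain additive score ranks gr/GO above gr/go, whereas the gated version removes that reward while keeping GR/GO > GR/go > gr/GO.

```python
# Hypothetical weights for illustration only; NOT MELIBEA's real values.
W_GR = 3  # credit for requiring green OA deposit
W_GO = 1  # credit for funding gold OA publication


def naive_score(requires_green: bool, funds_gold: bool) -> int:
    """Additive score: gold earns credit even without a green mandate."""
    return W_GR * requires_green + W_GO * funds_gold


def gated_score(requires_green: bool, funds_gold: bool) -> int:
    """Gated score: gold earns credit only if green is also required."""
    gold_credit = W_GO * funds_gold if requires_green else 0
    return W_GR * requires_green + gold_credit


# The four policy models, as (requires_green, funds_gold).
MODELS = {
    "gr/go": (False, False),
    "gr/GO": (False, True),
    "GR/go": (True, False),
    "GR/GO": (True, True),
}

for name, (gr, go) in MODELS.items():
    print(f"{name}: naive={naive_score(gr, go)} gated={gated_score(gr, go)}")
```

Under the gated score, a gr/GO policy gains nothing over gr/go until it also mandates green, which is the incentive the paragraph above argues for.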

Regarding the question of IR (institutional repository) vs CR (central repository) deposit too, the devil is in the details. Just as one more Gold OA article is indeed one more piece of OA, exactly as one more Green OA deposit is, so too one more CR deposit is indeed one more piece of OA, exactly as one more IR deposit is.

But the goal is to weight the algorithm to promote stronger policy models, not just to promote isolated increments in OA. And just as a policy that pays for gold without mandating green generates only a little more OA at the cost of forgoing a lot more OA, so a funder policy that mandates CR deposit instead of IR deposit generates only a little more OA at the cost of forgoing a lot more OA. Mandating IR deposit would instead reinforce (at no cost, and with no loss in OA) the adoption of a cooperative, convergent IR deposit policy for the rest of each institution's output, funded and unfunded, across all its disciplines, rather than gratuitously competing with institutional OA policies by adopting a divergent CR deposit policy.

The problem is not with publishers' green policies but with institutions' (and funders') lack of green policies! Over 60% of journals endorse immediate Green OA deposit for the postprint and over 40% more for the preprint (hence over 90% of all articles, overall), yet only 15% of articles are being deposited annually overall, because less than 1% of institutions have yet mandated deposit.

This is the real gap that needs to be closed -- and can be closed, immediately, by mandating Green OA. And this is what is completely overlooked by institutions and funders hurrying to pay for gold OA instead of first mandating green OA, or funders needlessly mandating CR deposit instead of IR deposit.

The fact is that far fewer than 1% of institutions and funders have adopted mandates (about 160, out of a total of perhaps 18,000 universities plus 8,000 research institutions and at least several hundred major funders, funding across multiple institutions, worldwide). The lesson before us is hence most definitely not that mandates are not enough; it is that there are not enough mandates -- far from it.
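The "fewer than 1%" figure can be checked with simple arithmetic. The funder count below is an assumption (300, standing in for "at least several hundred"); the other numbers are the approximate figures given above. The sketch also shows that leaving funders out entirely does not change the conclusion.

```python
# Approximate counts from the text; FUNDERS is an assumed stand-in
# for "at least several hundred major funders".
MANDATES = 160
UNIVERSITIES = 18_000
RESEARCH_INSTITUTIONS = 8_000
FUNDERS = 300


def mandate_share(mandates: int, *populations: int) -> float:
    """Mandates as a percentage of all potential mandating bodies."""
    return 100 * mandates / sum(populations)


share = mandate_share(MANDATES, UNIVERSITIES, RESEARCH_INSTITUTIONS, FUNDERS)
print(f"{share:.2f}% of potential mandators have adopted a mandate")

# Even ignoring funders altogether, the share stays well under 1%.
print(f"{mandate_share(MANDATES, UNIVERSITIES, RESEARCH_INSTITUTIONS):.2f}%")
```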

Gold OA payment is a minor matter, providing a small amount of OA, whereas green OA mandates are a major priority, able to scale up to providing 100% OA. Gold is nothing but a distraction -- for either an institution or a funder -- until and unless it first mandates green.

Nor is the problem that publishers are only paying lip-service to repository deposit. The problem is that the overwhelming majority of institutions and funders are still only paying lip service to repository deposit -- instead of mandating it.

Nor will funders and institutions pre-emptively paying publishers for gold without first mandating green (while subscriptions are still paying for publishing, tying up the potential funds to pay for gold) solve the problem of getting green mandated by institutions and funders.

For these reasons it is not enough, in evaluating OA Policy factors, just to give Green OA a higher weight than Gold OA.

Monday, July 19. 2010

Ameliorating MELIBEA's Open Access Policy Evaluator

The MELIBEA Open Access policy validator is timely and promising. It has the potential to become very useful and even influential in shaping OA mandates -- but that makes it all the more important to get it right, rather than releasing MELIBEA prematurely, when it still risks increasing confusion rather than providing clarity and direction in OA policy-making.

SUMMARY: The MELIBEA evaluator of Open Access policies could prove useful in shaping OA mandates -- but it still needs a good deal of work. Currently it conflates institutional and funder policies and criteria, mixes green and gold OA criteria, color-codes in an arbitrary and confusing way, and needs to validate its weights (e.g., against policy success criteria such as the percentage and growth rate of annual output deposited since the policy was adopted).

Remedios Melero is right to point out that -- unlike the CSIC Cybermetrics Lab's University Rankings and Repository Rankings -- the MELIBEA policy validator is not really meant to be a ranking. Yet MELIBEA has set up its composite algorithm and its graphics to make it a ranking just the same.

It is further pointed out, correctly, that MELIBEA's policy criteria for institutions and funders are not (and should not be) the same. Yet, with the coding as well as the algorithm, they are treated the same way (and funder policy is taken to be the generic template, institutional policy merely an ill-fitting special case).

It is also pointed out, rightly, that a gold OA publishing policy is not central to institutional OA policy making -- yet there it is, contributing sizeable components to the MELIBEA algorithm.

It is also pointed out that MELIBEA's green color code has nothing to do with the "green OA" coding -- yet there it is -- red, green, yellow -- competing with the widespread use of green to designate OA self-archiving, and thereby inducing confusion, both overt and covert.

MELIBEA could be a useful and natural complement to the ROARMAP registry of OA policies. I (and no doubt other OA advocates) would be more than happy to give MELIBEA feedback on every aspect of its design and rationale.

But as it is designed now, I can only agree with Steve Hitchcock's points and conclude that consulting MELIBEA today would be likely to create and compound confusion rather than helping to bring the all-important focus and direction to OA policy-making that I am sure CSIC, too, seeks, and seeks to help realize.

Here are just a few prima facie points:

(1) Since MELIBEA is not, and should not be construed as a ranking of OA policies -- especially because it includes both institutional and funder policies -- it is important not to plug it into an algorithm until and unless the algorithm has first been carefully tested, with consultation, to make sure it weights policy criteria in a way that optimizes OA progress and guides policy-makers in the right direction.

(2) For this reason, it is more important to allow users to generate separate flat lists of institutions or funders on the various policy criteria, considered and compared independently, rather than on the basis of a prematurely and arbitrarily weighted joint algorithm.

(3) This is all the more important since the data are based on fewer than 200 institutions, whereas the CSIC University Rankings are based on thousands. Since the population is still so small, MELIBEA risks having a disproportionate effect on initial conditions and hence direction-setting; all the more reason not to amplify noise and indirection by assigning untested initial weights without carefully thinking through and weighing the consequences.

(4) A potential internal cross-validator of some of the criteria would be a reliable measure of outcome -- but that requires much more attention to estimating the annual size and growth-rate of each repository (in terms of OA's target contents, which are full-text articles), normalized for institution size, annual total target output (an especially tricky denominator problem in the case of multi-institutional funder repositories) and the age of the policy. Policy criteria (such as request/require or immediate/delayed) should be cross-validated against these outcome measures (such as percentage and growth rate of annual target output) in determining the weights in the algorithm.

(5) The MELIBEA color coding needs to be revised -- and revised quickly, if there is to be an algorithm at all. All those arbitrary colors in the display of single repositories as ranked by the algorithm are both unnecessary and confusing, and the validator is not comprehensibly labelled. The objective should be to order and focus clearly and intuitively. Whatever is correlated with more green OA output (such as a higher level or faster growth rate in OA's target content, normalized) should be coded as darker or bigger shades of green. The same should be true for the policy criteria, separately and jointly: in each case, request/require, delayed/immediate, etc., the greenward polarity is obvious and intuitive. This should be reflected in the graphics as well as in any comparative rankings.

(6) If it includes repositories with no OA policy at all (i.e., just a repository and an open invitation to deposit) then all MELIBEA is doing is duplicating ROAR and ROARMAP, whereas its purpose, presumably, is to highlight, weigh and compare specific policy differences among (the very few) repositories that DO have policies.

(7) The sign-up data are also rather confusing; the criteria are not always consistent, relevant or applicable. The sign-up seems to be designed to make a funder-mandate the generic option, whereas this is quite the opposite of reality. There are far more institutions and institutional repositories and policies than there are funders, many of the funder criteria do not apply to institutions, and many of the institutional criteria make no sense for funders. There should be separate criterial lists for institutional policies and for funder policies; they are not the same sort of thing. There is also far too much focus and weight on gold OA policy and payment. If included at all, gold OA criteria should come only at the end, as an addendum, not as the focus at the beginning, nor on a par with green OA policy.

(8) There is also potential confusion on the matter of "waivers" or "opt-outs": There are two aspects of a mandate. One concerns whether or not deposit is required (and if so, whether that requirement can be waived) and the other concerns whether or not rights-reservation is required (and if so, whether that requirement can be waived). These two distinct and independent requirements/waivers are completely conflated in the current version of MELIBEA.
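The outcome measures proposed in point (4) can be sketched as follows. Everything here is hypothetical: the `RepositoryYear` record, the function names, and the two toy institutions with made-up numbers. The sketch normalizes only by total annual target output (not by policy age or the multi-institution denominator problem), computes each repository's deposit rate and its mean year-on-year growth, and then compares the average growth of policies that require deposit with those that merely request it.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RepositoryYear:
    year: int
    deposited_fulltext: int  # full-text target articles deposited that year
    total_output: int        # institution's total annual target article output


def deposit_rate(r: RepositoryYear) -> float:
    """Percentage of the year's target output deposited (normalizes for size)."""
    return 100 * r.deposited_fulltext / r.total_output


def annual_growth(history: list[RepositoryYear]) -> float:
    """Mean year-on-year change in deposit rate, in percentage points."""
    rates = [deposit_rate(r) for r in sorted(history, key=lambda r: r.year)]
    deltas = [later - earlier for earlier, later in zip(rates, rates[1:])]
    return mean(deltas) if deltas else 0.0


def compare_criterion(groups: dict[str, list[list[RepositoryYear]]]) -> dict[str, float]:
    """Average growth rate for each value of one policy criterion."""
    return {label: mean(annual_growth(h) for h in histories)
            for label, histories in groups.items()}


# Toy, made-up histories for two hypothetical institutions.
requires = [RepositoryYear(2008, 300, 1000), RepositoryYear(2009, 500, 1000),
            RepositoryYear(2010, 700, 1000)]
requests = [RepositoryYear(2008, 100, 2000), RepositoryYear(2009, 140, 2000),
            RepositoryYear(2010, 180, 2000)]

print(compare_criterion({"require": [requires], "request": [requests]}))
```

With real repository data, a consistent gap between groups like this would be one way to justify giving a criterion (such as require vs. request) more weight in the algorithm.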

I hope there will be substantive consultation and conscientious redesign of these and other aspects of MELIBEA before it can be recommended for serious consideration and use.

Stevan Harnad

American Scientist Open Access Forum

Thursday, July 8. 2010

Ranking Institutional Repositories

Isidro Aguillo wrote in the American Scientist Open Access Forum:

IA: "I disagree with [Hélène Bosc's] proposal [to eliminate from the top 800 institutional repository rankings the multi-institution repositories and the repositories that contain the contents of other repositories as subsets]. We are not measuring only [repository] contents but [repository] contents AND visibility [o]n the web."

Yes, you are measuring both contents and visibility, but presumably you want the difference between (1) the ranking of the top 800 repositories and (2) the ranking of the top 800 institutional repositories to be based on the fact that the latter are institutional repositories whereas the former are all repositories (central, i.e., multi-institutional, as well as institutional).

Moreover, if you list redundant repositories (some being the proper subsets of others) in the very same ranking, it seems to me the meaning of the RWWR rankings becomes rather vague.

IA: "Certainly HyperHAL covers the contents of all its participants, but the impact of these contents depends o[n] other factors. Probably researchers prefer to link to the paper in INRIA because of the prestige of this institution, the affiliation of the author or the marketing of their institutional repository."

All true. But perhaps the significance and usefulness of the RWWR rankings would be greater if you either changed the weight of the factors (volume of full-text content, number of links) or, alternatively, you designed the rankings so the user could select and weight the criteria on which the rankings are displayed.

Otherwise your weightings become like the "h-index" -- an a-priori combination of untested, unvalidated weights that many users may not be satisfied with, or fully informed by...
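A user-selectable weighting of the kind suggested above can be sketched in a few lines. The repository names, the two indicators (`fulltext_share` and `inlinks`, both assumed to be pre-normalized to the range 0 to 1), and all numbers are hypothetical; this is not how the RWWR rankings are actually computed. The point is only that the same data yield different orderings depending on whether the user weights content or links.

```python
from typing import Mapping

# Made-up indicators, assumed already normalized to 0..1.
REPOSITORIES = {
    "Repo A": {"fulltext_share": 0.80, "inlinks": 0.30},
    "Repo B": {"fulltext_share": 0.40, "inlinks": 0.90},
    "Repo C": {"fulltext_share": 0.60, "inlinks": 0.50},
}


def rank(repos: Mapping[str, Mapping[str, float]],
         weights: Mapping[str, float]) -> list[str]:
    """Order repositories by a user-chosen weighted sum of their indicators.

    Indicators the user gives no weight to count as zero.
    """
    def score(name: str) -> float:
        return sum(weights.get(k, 0.0) * v for k, v in repos[name].items())

    return sorted(repos, key=score, reverse=True)


print(rank(REPOSITORIES, {"fulltext_share": 1.0}))                 # content only
print(rank(REPOSITORIES, {"inlinks": 1.0}))                        # links only
print(rank(REPOSITORIES, {"fulltext_share": 0.7, "inlinks": 0.3})) # mixed
```

Exposing the weights to the user, rather than fixing them a priori, also makes it easy to show the content-only and links-only rankings side by side.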

IA: "But here is a more important aspect: If I were the president of INRIA I [would] prefer people using my institutional repository instead [of] CCSD. No problem with the [CCSD], they are [doing] a great job and increasing the reach of INRIA, but the papers deposited are a very important (the most important?) asset of INRIA."

But how heavily INRIA papers are linked, downloaded and cited is not necessarily (or even probably) a function of their direct locus!

What is important for INRIA (and all institutions) is that as much as possible of their paper output should be OA, simpliciter, so that it can be linked, downloaded, read, applied, used and cited. It is entirely secondary, for INRIA (and all institutions), where their papers are made OA, compared to the necessary condition that they are made OA (and hence freely accessible, useable, harvestable).

Hence (in my view) by far the most important ranking factor for institutional repositories is how much of their (annual) full-text institutional paper output is indeed deposited and made OA. INRIA would have no reason to be disappointed if the locus from which its content was being searched, retrieved and linked happened to be some other, multi-institutional harvester. INRIA still gets the credit and benefits from all those links, downloads and citations of INRIA content!

(Having said that, locus of deposit does matter, very much, for deposit mandates. Deposit mandates are necessary in order to generate OA content. And -- for strategic reasons that are elaborated in my own reply to Chris Armbruster -- it makes a big practical difference for success in reaching agreement on adopting a mandate in the first place that both institutional and funder mandates should require convergent institutional deposit, rather than divergent and competing institutional vs. institution-external deposit. Here too, your RWWR repository rankings would be much more helpful and informative if they gave a greater weight to the relative size of each institutional repository's content and eliminated multi-institutional repositories from the institutional repository rankings -- or at least allowed institutional repositories to be ranked independently on content vs links.)

I think you are perhaps being misled here by the analogy with your sister rankings of world universities rather than just their repositories. In university rankings, the links to the university site itself matter a lot. But in repository rankings, links matter much less than how much institutional content is freely accessible at all. For the degree of usage and impact of that content, harvester sites may be more relevant measures, and, after all, downloads and citations, unlike links, carry their credits (to the authors and institutions) with them no matter where the transaction happens to occur...

IA: "Regarding the other comments we are going to correct those with mistakes but it is very difficult for us to realize that Virginia Tech University is 'faking' its institutional repository with contents authored by external scholars."

I have called Gail McMillan at Virginia Tech to inquire about this, and she has explained it to me. The question was never whether Virginia Tech was "faking"! They simply host content over and above Virginia Tech content -- for example, OA journals whose content originates from other institutions.

As such, the Virginia Tech repository, besides providing access to Virginia Tech's own content, like other institutional repositories, is also a conduit or portal for accessing the content of other institutions (e.g., those providing the articles in the OA journals Virginia Tech hosts). The "credit" for providing that conduit goes to Virginia Tech, of course. But the credit for the links, usage and citations goes to those other institutions!

When an institutional repository is also used as a portal for other institutions, its function becomes a hybrid one: both an aggregator and a provider. I think it's far more useful and important to try to keep those functions separate, in both the rankings and the weightings of institutional repositories.

Stevan Harnad

American Scientist Open Access Forum

Saturday, July 3. 2010

Google Scholar Boolean Search on Citing Articles

Formerly, with Google Scholar (first launched in November 2004), (1) you could do a Google-like Boolean (and, or, not, etc.) word search, which ranked the retrieved articles by how highly cited they were. Then, for any individual article in that ranked list, (2) you could go on to retrieve all the articles citing it, again ranked by how highly cited they were. But you could not go on to do a Boolean word search within just that set of citing articles; as of July 1, you can. (Thanks to Joseph Esposito for pointing this out on liblicense.)

Of course, Google Scholar is a potential scientometric killer app, just waiting to develop and display powers far, far greater and richer than even these. Only two things are holding it back: (a) the sparse Open Access content of the web to date (only about 20% of the articles published annually) and (b) the sleepiness of Google in not yet realizing what a potentially rich scientometric resource and tool it has in its hands (or, rather, in its harvested full-text archives).

Citebase gives a foretaste of more of the latent power of an Open Access impact and influence engine (so does CiteSeerX), but even that pales in comparison with what is still to come, if only the world's universities, the providers of all the missing content, hurry up and adopt Green OA self-archiving mandates, so that the mandates can be implemented and all the target content for these impending marvels (not just 20% of it) can at long last begin to be reliably provided.

(Elsevier's Scopus and Thomson Reuters' Web of Knowledge are of course likewise standing by, ready to upgrade their services to point also to the OA versions of the content they index, if only we hurry up and make it OA!)

Harnad, S. (2001) Proposed collaboration: google + open citation linking. OAI-General. June 2001.

Harnad, S. (2001) Research access, impact and assessment. Times Higher Education Supplement 1487: p. 16.

Brody, T., Kampa, S., Harnad, S., Carr, L. and Hitchcock, S. (2003) Digitometric Services for Open Archives Environments. In Proceedings of European Conference on Digital Libraries 2003, pp. 207-220, Trondheim, Norway.

Hitchcock, Steve; Woukeu, Arouna; Brody, Tim; Carr, Les; Hall, Wendy & Harnad, Stevan. (2003) Evaluating Citebase, an open access Web-based citation-ranked search and impact discovery service. ECS Technical Report, University of Southampton.

Harnad, Stevan (2003) Maximizing Research Impact by Maximizing Online Access. In: Law, Derek & Judith Andrews, Eds. Digital Libraries: Policy Planning and Practice. Ashgate Publishing 2003.

Harnad, S. (2006) Online, Continuous, Metrics-Based Research Assessment. ECS Technical Report, University of Southampton.

Brody, T., Carr, L., Harnad, S. and Swan, A. (2007) Time to Convert to Metrics. Research Fortnight pp. 17-18.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8 (11) [The Use And Misuse Of Bibliometric Indices In Evaluating Scholarly Performance]

Harnad, S., Carr, L. and Gingras, Y. (2008) Maximizing Research Progress Through Open Access Mandates and Metrics. Liinc em Revista 4(2).

Harnad, S. (2009) The PostGutenberg Open Access Journal. In: Cope, B. & Phillips, A (Eds.) The Future of the Academic Journal. Chandos.

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1)



The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.


The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focused on institutional Open Access policy.)

You can sign on to the Forum here.

