
Wednesday, April 20. 2016

Evolutionarily Stable Strategies

[This comment was written before I read Richard Poynder's Interview of Tim Gowers. In part 2 I comment after having read the posting.]

Part 1

I don't know about Richard, but I have not despaired of green, or green mandates; I've just grown tired of waiting.

I don't see pre-emptive gold (i.e., pre-green "fool's gold") as an alternative but as just another delay factor, the principal delay factor being human sluggishness.

And I think the notion of a "flip" to fool's gold is incoherent -- an "evolutionarily unstable strategy," bound to undo itself: not only because it requires self-sacrificial double-payment locally as well as unrealistic collaboration among nations, institutions, funders, fields and publishers globally, but because the day after it was miraculously (and hypothetically) attained globally it would immediately invite defection (from nations, institutions, funders, and fields) to save money (invasion by the "cheater strategy"). Subscriptions and gold OA "memberships" are simply incommensurable, let alone transformable from one into the other. (Memberships are absurd, and only sell -- a bit, locally -- while subscriptions still prevail, via local Big Deals.)

The only evolutionarily stable strategy is offloading onto green OA repositories all but one of the things that publishers traditionally do, leaving only the service of peer review to be paid for as fair-gold OA.

But that requires universal green OA first, not flipped pre-emptive fool's gold.

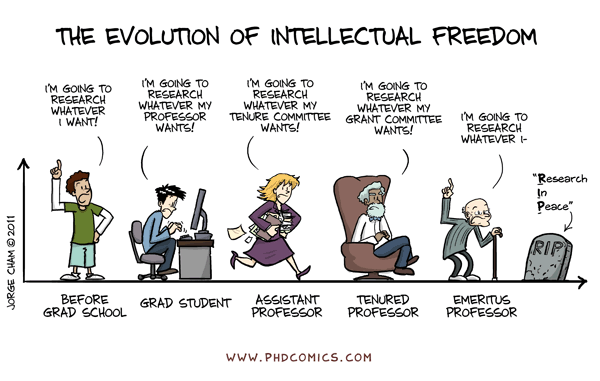

It will all eventually sort itself out that way after a huge series of false-starts. My loss of patience is not just with the needless loss of time but with the boringly repetitious nature of the recurrent false starts. I'd say my last five years, at the very least, have been spent just repeating myself in the face of the very same naive bright-eyed, bushy-tailed and non-viable non-starters. Locally in space and time, some people sometimes listened to my objections and my alternative strategy, but globally the very same non-starters kept popping up, one after the other, independently.

So (with an occasional exception like this) I've stopped preaching. Time will either show that I was wrong or, like evolution, it will undo the maladaptive strategies and stumble blindly, but inevitably, toward the stable strategy (which also happens to be the optimal one): universal green first, then a rapid downsizing and transition to scalable, affordable, sustainable fair-gold. Amen.

Part 2

1. Publisher green OA embargoes are ineffectual against the right green OA mandate: immediate deposit plus the almost-OA Button.

2. That a “self-styled archivangelist” has left the arena is neither news nor an OA development. It is indeed just symbolic.

3. The fool's gold "flip" is an evolutionarily unstable strategy, fated to flop, despite the fond hopes of RCUK, Wellcome, VSNU or MPG.

4. The "impact factor" is, as ever, utterly irrelevant to OA, one way or the other. Metrics will only be diversified and enriched by OA.

5. An immediate-deposit requirement is not an "onerous bureaucratic rule" but a few extra keystrokes per paper published: a no-brainer. Researchers are not "foot-soldiers" but finger-soldiers, and the immediate-deposit mandate is just intended to set those last few digits into motion (the publish-or-perish mandate having already mobilized the legions ahead of it).

6. Leaders are welcome (if not Wellcome), but boycotts are busts (and there have been plenty).

7. Exposés of publisher profiteering are welcome, but not solutions. In any case, the root problem is not affordability but accessibility, and providing access (via green OA) is also the solution, first to accessibility and then, as a natural matter of course, to affordability (post-green fair-gold).

8. Founding a new gold OA journal is hardly new. Offloading everything but peer review onto green repositories is also not new (in fact it will be part of the post-green end-game: fair-gold). But making it scalable and sustainable pre-emptively would be new...

9. Subsidizing fair-gold costs would be fine, if someone had the resources to subsidize at least 30,000 journals across all disciplines. But while journals are being sustained by subscriptions, and there is no alternative way to access the contents, there is unlikely to be enough subsidy money to do the job. (Universally mandated green, in contrast, would allow journal subscriptions to be cancelled, releasing the money to pay for fair-gold out of just a fraction of the windfall savings.)

10. The impact factor, it cannot be repeated often enough, is absolutely irrelevant to (green) OA. The known track-record of journals, in contrast, will always be a factor.

11. Open "peer" review, or crowd-sourced quality control, likewise a notion aired many times, is, IMHO, likewise a non-starter. Suitable for peddling products and blog postings, but not for cancer cures and serious science or scholarship. (That said, anyone and everyone is already free to post their unrefereed work for all comers; that's what blogs and open commentary are for...)

12. Open online collaboration is very welcome (and more and more widespread) but it is a supplement, not a substitute, for publishing peer-reviewed findings.

13. Mathematics and, to a lesser extent, physics, are manifestly atypical fields in that their practitioners are (1) more willing than others to make their own unrefereed findings public and (2) eager to see and use the unrefereed findings of others. If this had been true of other fields, Arxiv would long ago have become the global unrefereed preprint and refereed postprint repository for all fields, universal (central) green OA would already have been reached long ago, and the transition to fair gold would already have taken place. (Arxiv has been held up -- including, for a while, by me -- as the way to go since 1991. But things have not gone that way. That's why I switched to promoting distributed institutional repositories.)

14. What if the "P" in APCs -- for those who are "imPlacably opposed" to article processing charges -- stood instead for Peer-Review, and paid only for the editorial expertise in the refereeing (the peers review for free): selecting referees, selecting which referee recommendations need to be followed, selecting which revisions have done so and are hence accepted? These are the sole costs of fair gold -- but they are predicated on universal green to "overlay" on...

15. The two crucial features of peer review are expertise and answerability. This is what is provided by a qualified editor and established journal and absent in self-selected, crowd-sourced, take-it-or-leave-it vetting (already proposed many times, including by another distinguished mathematician). "Fair OA" is synonymous with fair gold, but universal green is the only viable way to get there.

16. Open peer commentary is a fine idea (if I do say so myself) but it is a supplement to peer review, not a substitute for it.

...And let's get our figures straight

Rick Anderson posted the following comment on Richard Poynder's posting on Google+: “Institutional Green OA mandates (as distinct from non-mandatory OA policies) are effectively nonexistent in the US, and it's difficult to see how they could ever become widespread at the institutional level. That's just the US, of course, but the US produces an awful lot of research publication.”

According to ROARMAP, which was recently upgraded to expand, classify and verify the entries, although it is probably not yet exhaustive (some mandates may not yet be registered), there are 764 OA policies worldwide, at least 629 of them Green (i.e., they either require or request deposit).

The following are the total (subset) figures, broken down by country, for total policies and the subset requiring (not requesting) deposit, for Institutional and Funder policies.

| Country | Institutional: total (requiring deposit) | Funder: total (requiring deposit) |
|---|---|---|
| Worldwide | 632 (390) | 132 (82) |
| US | 96 (69) | 34 (11) |
| UK | 93 (79) | 24 (23) |
| Germany | 26 (2) | 1 (0) |
| Netherlands | 11 (6) | 0 |
| France | 17 (3) | 3 (3) |
| Canada | 15 (7) | 12 (9) |
| Australia | 31 (15) | 2 (2) |

Rick Anderson: Happy to provide examples.

Duke University -- ROARMAP says deposit is required and that the policy does not specify whether or not a waiver is available. According to Duke's policy, "The Provost or Provost's designate will waive application of the license for a particular article... upon written request by a Faculty member."

Harvard (Faculty of Arts & Sciences) -- ROARMAP says that deposit is required and may not be waived. The Harvard policy says that the institution "will waive application of the policy for a particular article upon written request by a Faculty member."

Oberlin College -- ROARMAP says that deposit is required. Oberlin's policy says that while the submission of bibliographic metadata is required, "the Scholarly Communications Officer will automatically waive application of the policy for a particular article upon written request by the author."

Bucknell University -- ROARMAP says that deposit is required and that the possibility of a waiver is "unspecified." The policy says that "the license granted to Bucknell University for an article will be automatically waived for any reason and without sanction at the sole discretion of the faculty member." (Deposit of bibliographic data is requested but not required.)

That's probably enough for now. I can provide more upon request. In fact, if you'd like, I'd be happy to examine all 96 of the US institutional policies identified in ROARMAP, analyze the accuracy of the ROARMAP characterization of each one, and publish what I find (whatever the result might be). Would you like me to do that?

Stevan Harnad: The Harvard FAS OA Policy model (which may or may not have been adopted by the other institutions you cite without their fully understanding its conditions) is that:

(1) Full-text deposit is required

but

(2) Rights-retention (and OA) may be waived on an individual article basis

The deposit requirement (1) cannot be waived, and is not waived if the author elects to waive (2).

This is the policy that Peter calls "dual deposit/release" (and I call immediate-deposit/optional-access, ID/OA):

http://users.ecs.soton.ac.uk/harnad/Hypermail/Amsci/7841.html

(soton site temporarily down today, apologies)

Rick Anderson: Stevan, your characterisation of the Harvard policy seems to me to be simply inaccurate. The full text may be read at https://osc.hul.harvard.edu/policies/fas/. The relevant sentence reads as follows: "The Dean or the Dean's designate will waive application of the policy for a particular article upon written request by a Faculty member explaining the need." This language seems pretty clearly to me to refer to the policy as a whole, not just one component of it -- nor does the policy itself include an OA requirement; instead it provides the possibility that "the Provost's Office may make the article available to the public in an open-access repository" (note the word "may," not usually a prominent feature in mandatory instructions).

For the avoidance of doubt, I am contacting Harvard's Office for Scholarly Communication to either confirm or correct my reading of its policy. I'll let you know what I find out.

Peter Suber: Stevan's restatement of the Harvard policy is correct. Our waiver option only applies to the license, not to the deposit.

Rick Anderson: OK, thanks for clearing that up, Peter. (You guys might want to consider revising the wording of your policy to resolve the ambiguity.)

Stevan, do you disagree that ROARMAP's characterisations of the other policies I cited above are inaccurate?

Stevan Harnad: The other three policies you cited seem to have adopted the Harvard model policy. If they have diverged from it, they need to indicate that explicitly (and unambiguously). ROARMAP incorporates updates or corrections when it receives them. The ones you mention were either registered by the institutions themselves or derived from their documentation and sent to them for vetting.

I cannot vouch for 100% compliance or accuracy. But your assertion was not about that. Your assertion was “Institutional Green OA mandates (as distinct from non-mandatory OA policies) are effectively nonexistent in the US.”

Do you think your four examples show that? One out of the four, Harvard FAS, would already disconfirm "nonexistent" ("effectively" being a weasel-word), even without the added fact that Harvard is not just any university but the one whose model many US universities have adopted, and even if you could show (as you certainly have not done) that not one of the remaining 65 US institutional mandates (out of the total 96 US institutional OA policies in ROARMAP) was a mandate. Do you disagree?

Rick Anderson: All of the examples I provided (including the Harvard example) constitute evidence in support of my statement, since they are instances in which Green OA is not mandatory. They don't constitute the entire evidence base. I made my statement based on the fact that I have read many OA policies from US institutions, and I have not yet encountered (nor heard of) a single one that requires faculty to make their work available on an OA basis. A policy that requires deposit but does not require OA is not a mandatory OA policy.

If my assertion is wrong, it should be easy to refute. Can you cite a significant number of examples of US institutions that do require faculty to make their work available on an OA basis (as distinct from merely requiring deposit)?

Stevan Harnad: I would like to avoid empty semiological quibbling. The US has 96 institutional OA policies. That is uncontested. Of these, 69 are registered as deposit mandates, hence mandates.

There are many other conditions (such as whether and when it is mandatory to make the deposit OA), but it may be helpful to understand that the reason mandatory (full-text) deposit is the crucial requirement is that if (and only if) the full-text is deposited, the repository's automated copy-request Button (if and when implemented) can provide almost-immediate, almost-OA to any user who clicks it (if the author too chooses to comply, with a click).

The hypothesis (and it is indeed a hypothesis, not a certainty) is that this compromise mandate (DD/R, ID/OA), if universally adopted, will not only provide almost 100% Green OA, but will prove sufficient to eventually make subscriptions cancellable, thereby inducing journal publisher downsizing, the phasing out of obsolete products and services, and a transition to affordable, scalable and sustainable Fair-Gold OA, charging for peer-review alone, and paid for out of a fraction of the institutional subscription cancellation savings, instead of the over-priced, double-paid, and unnecessary Fool's Gold that is on offer now, paid for out of already over-stretched subscription as well as research funds.

Tuesday, January 5. 2016

Suspicions

RP: "I have repeatedly said that I harbour no suspicions about EOS. You repeatedly assert that I do."

I do read your posts, Richard, including the snippets you tweet, to garner traffic for your blog.

You likewise keep assigning a variety of different motivations to me that are not true (and how would you know what my motivations are anyway?)

But, by way of proof that I read your posts, here are a few snippets of my own, about suspicions, secretiveness and attributing motivations, to remind you:

RP: "I would like to thank Bernard Rentier for his detailed and frank account of EOS... That said, it does seem odd to me that it took Rick Anderson two attempts to get this response from EOS."

RP: "To reiterate: I did not mention EOS in my post, and I harbour no suspicions about the organisation... That said, the defensive response to Rick’s questions about EOS underlines for me the fact that OA advocates are not by nature inclined to be open in their processes."

RP: "In fact, one might argue that the overly defensive responses to Rick’s questions themselves flirt with paranoia. Certainly they confirm me in my belief that there is a strand within the OA movement that tends towards non-transparency and non-inclusiveness."

RP: "Nor are they instinctively democratic. One need only monitor the Global Open Access List for a few weeks to see the hauteur with which OA “old sweats” pronounce on the topic, and castigate anyone who dares express a contrary view."

You seem to be (1) confusing derisiveness (about the risible) with defensiveness, Richard, and (for some longstanding reason I really cannot fathom) (2) confusing openly, publicly "castigat[ing] anyone who dares express a contrary view" with "non-transparency and non-inclusiveness... instinctively [un]democratic."

I think you are quite mistaken. There is nothing undemocratic or non-transparent or non-inclusive about open, public criticism, quite the opposite (and regardless of the "hauteur" with which it might be expressed).

And I continue to hold and air openly the view -- which you are democratically free to ignore or refute or deride -- that (insofar as EOS or BOAI or your humble servant are concerned) you are sowing suspicions -- about closedness and exclusiveness -- that have no substance whatsoever.

I have two interpretations as to why you are doing this, one more charitable, the other less so:

The charitable interpretation is that you really believe the suspicions, which are fuelled by (or themselves fuel) your longstanding hypothesis that the reason the open access movement is moving so slowly is that it does not have an umbrella organization that includes all interested parties. (I think the hypothesis is mistaken, and that the slow progress is because of conflicts of interest -- as well as apathy -- that would not be resolved even if it were possible to draw everyone into the same tent.)

The uncharitable interpretation -- but even that one, since I know you, and know you have integrity, is only about what may be an unconscious "instinctive" tendency (dare I call it a journalistic one?), rather than a deliberate, calculating strategy -- is that you are airing the suspicions at times when there is no OA news of substance because they draw attention and traffic.

RP: "BOAI was a meeting between a small group of like-minded people, and organised by a philanthropist with a specific political agenda. In the wake of that meeting OSI committed several million dollars to fund a number of OA initiatives (and has continued to play a key role in the OA movement since then). As such, those who attended BOAI took the Soros money but did nothing to make the movement “official” or inclusive, or seek to engage the research community in their plans..."

I have to leave it to others to reply to this, as I do not think it deserves to be dignified by a response. If I made one it would undoubtedly be derisive...

There is a secondary hypothesis I also think you may hold, Richard (though I'm ready to say I'm mistaken, if you deny it), which is that you feel there is something undemocratic or contrary to academic freedom about OA mandates. I think the instincts that may be fuelling this secondary hypothesis in you are (1) the feeling that academics today are already far too put upon, along with (2) scepticism about metrics and perhaps about research evaluation in general, including peer review.

This is scepticism that I may partly share, but that I regard as having nothing to do with OA itself, which is about access to published, peer-reviewed research, such as it is. Reforms would be welcome, but what's needed in the meanwhile is access.

(And of course mandating a few dozen extra keystrokes per year for their own good is hardly a credible academic grievance; the real reasons for the resistance are not ergonomic but symbolic, ideological, psychological and wrong-headed. In a word, risible.)

And last, I think you are (instinctively) conflating OA with FOI.

Stevan Harnad

Tuesday, December 29. 2015

A caricature of its own making

From the thread "A creature of its own making?" on GOAL (Global Open Access List).

Jean-Claude Guédon: "Alicia Wise always speaks with a forked tongue! I wonder how much she is paid to practise this dubious art?"

Richard Poynder: "I am not aware that Alicia Wise has ever been anything other than polite to members of this list. It does not show open access in a good light that every time she posts to the list her comments generate the kind of response we see below."

I wonder what is going on here? Why are we getting lessons in etiquette on GOAL rather than discussing OA matters of substance?

Yes, Alicia is paid to keep on talking Elsevier double-talk. Yes, she does it politely. That's not the point. The point is that it is double-talk:

Alicia Wise: "All our authors... have both gold and green Open Access publishing options."

What that means is:

You may either (1) pay (always over and above what you pay for subscriptions overall, always heavily, and sometimes even doubly) for (gold) OA or else you may (2) wait (for twelve or more months*) for (green) OA.

That is indeed fork-tongued double-talk*: Say what sounds like one thing but mean another, and say it politely. (Why rile the ones you are duping?)

*Actually, it's double-double-talk, and, as pointed out many times before, if Elsevier authors were sensible they would realize that they can provide immediate, unembargoed green OA if they wish, ignoring Elsevier's never-ending attempts at updating their pseudo-legal double-talk to sound both permissive and prohibitive at the same time.

So, yes, Richard is right -- and others (including myself: google “harnad pogo”) have already said it time and time again in this self-same Forum -- that Elsevier is not the only one to blame. There are the dupers (Elsevier) and the duped (universities and their researchers). We all know that.

But it is not a co-conspiracy -- much as conspiratorial thinking comes in handy at lean times when there is nothing new to talk about.

So although the dupees have themselves to blame for allowing themselves to be duped, that does not put them on the same plane of culpability as the dupers. After all, it is the dupers who gain from the duping, and the dupees who lose, whether or not they have themselves to blame for falling for it.

Blaming the victim, as Richard does, below, also has a long pedigree in this Forum, but I will not rebut it again in detail. The short answer is that adopting effective Green OA mandates (rather than vilifying the victims for their foolishness) is the remedy for all the damage the victims have unwittingly allowed to be done them for so long.

And stop fussing about metrics. They too will sort themselves out completely once we have universally mandated (and provided) green OA.

Richard Poynder: "What Jean-Claude’s criticism of large publishers like Elsevier and Wiley omits is the role that the research community has played in their rise to power, a role that it continues to play. In fact, not only has the research community been complicit [emphasis added] in the rise and rise [sic] of the publishing oligarchy that Jean-Claude so deprecates, but one could argue that it created it -- i.e. this oligarchy is a creature of its own making.

"After all, it is the research community that funds these publishers, it is the research community that submits papers to these publishers (and signs over copyright in the process), and it is the research community that continues to venerate the brands (essentially a product of the impact factor) that allow these publishers to earn the high profits that Jean-Claude decries.

"And by now seeking to flip this oligarchy’s journals to OA the research community appears to be intent on perpetuating its power (and doubtless profits).

"One might therefore want to suggest that Jean-Claude’s animus is misdirected. [emphasis added]"

And so are Richard's reproaches...

Your increasingly bored archivangelist,

Stevan Harnad

Monday, December 7. 2015

Why Scholars Scull

Disagreement is always good — creative, even. I am not trying to change Richard Poynder's mind, just openly airing points and counterpoints, in the spirit of open peer commentary...

1. I agree that the home pages of Institutional Repositories that simply tout their generic overall deposit counts are doing numerology.

2. "Dark deposit" is rather ominous-sounding. The reality is that there are:

(i) undeposited articles,

(ii) metadata-only deposits,

(iii) full-text non-OA article immediate-deposits (which are non-OA for varying intervals),

(iv) full-text OA delayed-deposits and

(v) full-text OA immediate-deposits.

And there's the Button to supplement them. One can always describe cups as X% full or as (1-X)% empty.

3. No, to calculate yearly deposit ratios using WoS or SCOPUS in order to estimate total yearly deposit ratios is definitely not "deceptive": it is valid for WoS-indexed or SCOPUS-indexed output (which also happens to be the output that the OA movement is mostly about, and for), but it might be an underestimate or overestimate for non-WoS/SCOPUS output, if for some reason their ratio differs. So what?

4. Numerology is meaningless numbers, counted for their own sake, and interpreted according to taste (which can be occult, ornate or obtuse). Calculating correlations between mandate conditions and deposit ratios and drawing predictive conclusions from correlations whose probability of having occurred by chance is less than 5% is conventional predictive statistics (which only turns into numerology if you do a fishing expedition with a very large number of tests and fail to adjust your significance level for the likelihood that 5% of the significant correlations will have occurred by chance). We did only a small number of tests and had predicted a-priori which ones were likely to be significant, and in what direction.

5. Yes, there are far too few mandates, just as there are far too few deposits. Nevertheless, there were enough to detect the statistically significant trends; and if they are put into practice, there will be more effective mandates and more deposits. (The HEFCE/Liege immediate-deposit condition for eligibility for research evaluation turned out to be one of the statistically significant conditions.)

6. I heartily agree that academics are excessively micromanaged and that evaluative metrics can and do become empty numerology as well. But I completely disagree that requiring scholars and scientists to do a few extra keystrokes per published article (5 articles per year? 5 minutes per article?) counts as excessive micro-management, any more than "publish or perish" itself does. Both are in fact close to the very core of a scholar's mission and mandate (sic) qua scholar: To conduct research and report their findings -- now updated to making it OA in the online era. Justifiable animus against excessive and intrusive micromanagement is no excuse for shooting oneself in the foot by resisting something that is simple, takes no time, and is highly beneficial to the entire scholarly community.

7. Cultures don't change on a wish or a whim (or a "subversive proposal"!); they change when the pay-off contingencies (not necessarily financial!) change. That's how publish-or-perish worked (publication- and citation-bean-counting for employment, promotion, tenure, funding) and the online era now requires a tiny, natural extension of publish-or-perish to publish-and-deposit for eligibility for bean-counting.

8. And if we remind ourselves, just for a moment, as to why it is that scholars scull in the first place (which is not for the sake of publication- and citation-bean-counting for employment, promotion, tenure, funding), is it not so that their findings can be accessed, used and built upon by all their would-be users?
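The multiple-testing caveat in point 4 above can be made concrete with a little arithmetic. The sketch below is an illustration only, not the analysis used in the study under discussion: it assumes independent tests in which no real effect exists, and it uses the Bonferroni correction as one common way of adjusting the significance level (the post does not say which adjustment, if any, was applied). All function names are made up for the example.

```python
def expected_false_positives(num_tests, alpha=0.05):
    """Expected number of tests that reach significance by chance alone."""
    return num_tests * alpha


def familywise_error_rate(num_tests, alpha=0.05):
    """Probability of at least one chance 'finding', assuming independent tests
    and no real effect in any of them."""
    return 1.0 - (1.0 - alpha) ** num_tests


def bonferroni_threshold(num_tests, alpha=0.05):
    """Per-test significance level that keeps the family-wise rate at or below alpha."""
    return alpha / num_tests


if __name__ == "__main__":
    # A handful of pre-planned tests versus a fishing expedition.
    for num_tests in (5, 100):
        print(
            f"{num_tests:3d} tests: "
            f"expected chance hits = {expected_false_positives(num_tests):.2f}, "
            f"P(at least one) = {familywise_error_rate(num_tests):.3f}, "
            f"Bonferroni per-test level = {bonferroni_threshold(num_tests):.4f}"
        )
```

With a small number of tests predicted in advance, the expected number of chance hits stays well below one; with a hundred unplanned tests, several "significant" correlations are to be expected even if nothing is going on.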

Stevan Harnad

Wednesday, December 2. 2015

On Horses, Water, and Life-Span

"I have a feeling that when Posterity looks back at the last decade of the 2nd A.D. millennium of scholarly and scientific research on our planet, it may chuckle at us... I don't think there is any doubt in anyone's mind as to what the optimal and inevitable outcome of all this will be: The [peer-reviewed journal] literature will be free at last online, in one global, interlinked virtual library... and its [peer review] expenses will be paid for up-front, out of the [subscription-cancelation] savings. The only question is: When? This piece is written in the hope of wiping the potential smirk off Posterity's face by persuading the academic cavalry, now that they have been led to the waters of self-archiving, that they should just go ahead and drink!" -- Harnad (1999)

I must admit I've lost interest in following the Open Access Derby. All the evidence, all the means and all the stakes are by now on the table, and have been for some time. Nothing new to be learned there. It's just a matter of time till it gets sorted and acted upon; the only lingering uncertainty is about how long that will take, and that is no longer an interesting enough question to keep chewing on, now that all's been said, if not done.

Comments on: Richard Poynder (2015) Open Access, Almost-OA, OA Policies, and Institutional Repositories. Open And Shut. December 01, 2015

A few little corrections and suggestions on Richard's paper:

(1) The right measure of repository and policy success is the percentage of an institution's total yearly peer-reviewed research article output that is deposited as full text immediately upon acceptance for publication. (Whether the deposit is immediately made OA is much less important, as long as the copy-request Button is (properly!) implemented. Much less important too are late deposits, author Button-request compliance rates, or other kinds of deposited content. Once all refereed articles are being deposited immediately, all the rest will take care of itself, sooner or later.)

(2) CRIS/Cerif research-asset-management tools are complements to Institutional Repositories, not competitors.

(3) The Australian ERA policy was a (needless) flop for OA. The UK's HEFCE/REF2020 policy, in contrast, looks like it can become a success. (None of this has anything to do with the pros or cons of research evaluation, citations, or metrics in general.)

(4) No, "IDOA/PEM" (Deposit mandates requiring immediate deposits for research evaluation or funding, with the Button) will not increase "dark deposit," they will increase deposit -- and mandate adoption, mandate compliance, OA, Button-Use, Almost-OA, access and citations. They will also hasten the day when universal IDOA/PEM will make subscriptions cancellable and unsustainable, inducing conversion to fair-Gold OA (instead of today's over-priced, double-paid and unnecessary Fool's-Gold OA. But don't ask me "how long?" I don't know, and I no longer care!)

(5) The few anecdotes about unrefereed working papers are completely irrelevant. OA is about peer-reviewed journal articles. Unrefereed papers come and go. And EPrints and DSpace repositories clearly tag papers as refereed/unrefereed and published/unpublished. (The rest is just about scholarly practice and sloppiness, both from authors and from users.)

(6) At some point in the discussion, Richard, you too fall into the usual canard about impact-factor and brand, which concerns only Gold OA, not OA.

RP: "Is the sleight of hand involved in using the Button to promote the IDOA/PEM mandate justified by the end goal -- which is to see a proliferation of such mandates? Or to put it another way, how successful are IDOA/PEM mandates likely to prove?"

No sleight of hand -- just sluggishness of hand, on the part of (some) authors (both for Button compliance and mandate compliance) and on the part of (most) institutions and funders (for the design and adoption of successful IDOA/PEM mandates (with Button)). And the evidence is all extremely thin, one way or the other. Of course successful IDOA/PEM mandates (with Button) are (by definition!) better than relying on email links at publisher sites. "Successful" means near 100% compliance rate for immediate full-text deposit. And universal adoption of successful IDOA/PEM mandates (with Button) means universal adoption of successful IDOA/PEM mandates (with Button). (Give me that and worries about author Button-compliance will become a joke.)

The rest just depends on the speed of the horses -- and I am not a betting man (when it comes to predicting how long it will take to reach the optimal and inevitable). (Not to mention that I am profoundly against horse-racing and the like -- for humanitarian reasons that are infinitely more important than OA ever was or will be.)

Wednesday, December 17. 2014

Open Access Metrics: Use REF2014 to Validate Metrics for REF2020

Steven Hill of HEFCE has posted “an overview of the work HEFCE are currently commissioning which they are hoping will build a robust evidence base for research assessment” in LSE Impact Blog 12(17) 2014 entitled Time for REFlection: HEFCE look ahead to provide rounded evaluation of the REF

Let me add a suggestion, updated for REF2014, that I have made before (unheeded):

Scientometric predictors of research performance need to be validated by showing that they have a high correlation with the external criterion they are trying to predict. The UK Research Excellence Framework (REF) -- together with the growing movement toward making the full-texts of research articles freely available on the web -- offers a unique opportunity to test and validate a wealth of old and new scientometric predictors, through multiple regression analysis: publications, journal impact factors, citations, co-citations, citation chronometrics (age, growth, latency to peak, decay rate), hub/authority scores, h-index, prior funding, student counts, co-authorship scores, endogamy/exogamy, textual proximity, download/co-downloads and their chronometrics, tweets, tags, etc. can all be tested and validated jointly, discipline by discipline, against their REF panel rankings in REF2014. The weights of each predictor can be calibrated to maximize the joint correlation with the rankings. Open Access Scientometrics will provide powerful new means of navigating, evaluating, predicting and analyzing the growing Open Access database, as well as powerful incentives for making it grow faster.

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1)

(Also in Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1), pp. 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds. 2007)

The Only Substitute for Metrics is Better Metrics (2014)

and

On Metrics and Metaphysics (2008)

REF2014 gives the 2014 institutional and departmental rankings based on the 4 outputs submitted.

That is then the criterion against which the many other metrics I list below can be jointly validated, through multiple regression, to initialize their weights for REF2020, as well as for other assessments. In fact, open access metrics can be -- and will be -- continuously assessed, as open access grows. And as the initialized weights of the metric equation (per discipline) are optimized for predictive power, the metric equation can replace the peer rankings (except for periodic cross-checks and updates) -- or at least supplement them.

Single metrics can be abused, but not only can abuses be named and shamed when detected, but it becomes harder to abuse metrics when they are part of a multiple, inter-correlated vector, with disciplinary profiles of their normal interactions: someone dispatching a robot to download his papers would quickly be caught out when the usual correlation between downloads and later citations fails to appear. Add more variables and it gets even harder.
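The download-robot check described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the baseline correlation of 0.7 and the margin of 0.3 are all hypothetical, chosen for the example rather than taken from any real discipline profile.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length (at least 2 points)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


def downloads_look_inflated(
    downloads: Sequence[float],
    later_cites: Sequence[float],
    baseline_r: float = 0.7,  # hypothetical "usual" correlation for the discipline
    margin: float = 0.3,      # hypothetical tolerance before raising a flag
) -> bool:
    """Flag a set of papers whose download/citation correlation falls
    well below the discipline's usual value."""
    return pearson(downloads, later_cites) < baseline_r - margin
```

A flag from a check like this would only be a prompt for a closer look, not proof of abuse; adding further metrics to the comparison makes the expected pattern harder to fake.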

In a weighted vector of multiple metrics like the sample I had listed, it is of no use to a researcher to be told in advance that for REF2020 the metric equation will be the following, with the following weights for their particular discipline:

REF2020Rank =

w1(pubcount) + w2(JIF) + w3(cites) + w4(art-age) + w5(art-growth) + w6(hits) + w7(cite-peak-latency) + w8(hit-peak-latency) + w9(citedecay) + w10(hitdecay) + w11(hub-score) + w12(authority-score) + w13(h-index) + w14(prior-funding) + w15(bookcites) + w16(student-counts) + w17(co-cites) + w18(co-hits) + w19(co-authors) + w20(endogamy) + w21(exogamy) + w22(co-text) + w23(tweets) + w24(tags) + w25(comments) + w26(acad-likes) etc. etc.

The potential list could be much longer, and the weights can be positive or negative, and varying by discipline.
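To make the calibration step concrete, here is a minimal sketch of how the weights of such an equation could be initialized by ordinary least squares, one common form of multiple regression. Everything named here is an assumption for illustration: the function names are hypothetical, the three metrics stand in for the full list, and the numbers are invented standardized scores, not REF data.

```python
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, so row operations apply to both sides at once.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("metrics are collinear; drop or merge a predictor")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_metric_weights(
    metrics: Sequence[Sequence[float]], panel_scores: Sequence[float]
) -> List[float]:
    """Least-squares fit; returns [intercept, w1, w2, ...]."""
    rows = [[1.0] + list(r) for r in metrics]  # leading 1.0 carries the intercept
    k = len(rows[0])
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, panel_scores)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict(weights: Sequence[float], metric_row: Sequence[float]) -> float:
    """Apply the fitted metric equation to one department's metrics."""
    return weights[0] + sum(w * v for w, v in zip(weights[1:], metric_row))


# Invented standardized metrics per department: (pubcount, cites, hits)
example_metrics = [
    (0.2, 1.1, -0.3), (1.5, 0.4, 0.8), (-0.7, -1.2, 0.1),
    (0.9, 1.8, -1.0), (-1.1, 0.3, 1.4), (0.0, -0.9, -0.6),
]
# Invented panel scores for the same six departments
example_panel_scores = [2.5, 2.7, 1.3, 3.2, 1.2, 1.8]
example_weights = fit_metric_weights(example_metrics, example_panel_scores)
```

The fit would be run separately for each discipline, and the fitted weights may come out positive or negative. With 26 or more metrics, many more departments than predictors would be needed for the weights to be stable.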

"The man who is ready to prove that metric knowledge is wholly impossible… is a brother metrician with rival metrics…”

The Only Substitute for Metrics is Better Metrics

Comment on: Mryglod, Olesya, Ralph Kenna, Yurij Holovatch and Bertrand Berche (2014) Predicting the results of the REF using departmental h-index: A look at biology, chemistry, physics, and sociology. LSE Impact Blog 12(6)

"The man who is ready to prove that metaphysical knowledge is wholly impossible… is a brother metaphysician with a rival theory” Bradley, F. H. (1893) Appearance and Reality

The topic of using metrics for research performance assessment in the UK has a rather long history, beginning with the work of Charles Oppenheim. The solution is neither to abjure metrics nor to pick and stick to one unvalidated metric, whether it’s the journal impact factor or the h-index.

The solution is to jointly test and validate, field by field, a battery of multiple, diverse metrics (citations, downloads, links, tweets, tags, endogamy/exogamy, hubs/authorities, latency/longevity, co-citations, co-authorships, etc.) against a face-valid criterion (such as peer rankings).

See also: "On Metrics and Metaphysics" (2008)

Oppenheim, C. (1996). Do citations count? Citation indexing and the Research Assessment Exercise (RAE). Serials: The Journal for the Serials Community, 9(2), 155-161.

Oppenheim, C. (1997). The correlation between citation counts and the 1992 research assessment exercise ratings for British research in genetics, anatomy and archaeology. Journal of documentation, 53(5), 477-487.

Oppenheim, C. (1995). The correlation between citation counts and the 1992 Research Assessment Exercise Ratings for British library and information science university departments. Journal of Documentation, 51(1), 18-27.

Oppenheim, C. (2007). Using the h-index to rank influential British researchers in information science and librarianship. Journal of the American Society for Information Science and Technology, 58(2), 297-301.

Harnad, S. (2001) Research access, impact and assessment. Times Higher Education Supplement 1487: p. 16.

Harnad, S. (2003) Measuring and Maximising UK Research Impact. Times Higher Education Supplement. Friday, June 6 2003

Harnad, S., Carr, L., Brody, T. & Oppenheim, C. (2003) Mandated online RAE CVs Linked to University Eprint Archives: Improving the UK Research Assessment Exercise whilst making it cheaper and easier. Ariadne 35.

Hitchcock, Steve; Woukeu, Arouna; Brody, Tim; Carr, Les; Hall, Wendy and Harnad, Stevan. (2003) Evaluating Citebase, an open access Web-based citation-ranked search and impact discovery service Technical Report, ECS, University of Southampton.

Harnad, S. (2004) Enrich Impact Measures Through Open Access Analysis. British Medical Journal BMJ 2004; 329:

Harnad, S. (2006) Online, Continuous, Metrics-Based Research Assessment. Technical Report, ECS, University of Southampton.

Brody, T., Harnad, S. and Carr, L. (2006) Earlier Web Usage Statistics as Predictors of Later Citation Impact. Journal of the American Society for Information Science and Technology (JASIST) 57(8) pp. 1060-1072.

Brody, T., Carr, L., Harnad, S. and Swan, A. (2007) Time to Convert to Metrics. Research Fortnight 17-18.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8 (11) doi:10.3354/esep00088 The Use And Misuse Of Bibliometric Indices In Evaluating Scholarly Performance

Harnad, S. (2008) Self-Archiving, Metrics and Mandates. Science Editor 31(2) 57-59

Harnad, S., Carr, L. and Gingras, Y. (2008) Maximizing Research Progress Through Open Access Mandates and Metrics. Liinc em Revista 4(2).

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1) Also in Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1), pp. 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds. (2007)

Harnad, S. (2009) Multiple metrics required to measure research performance. Nature (Correspondence) 457 (785) (12 February 2009)

Harnad, S; Carr, L; Swan, A; Sale, A & Bosc H. (2009) Maximizing and Measuring Research Impact Through University and Research-Funder Open-Access Self-Archiving Mandates. Wissenschaftsmanagement 15(4) 36-41

Wednesday, May 21. 2014

Web Science and the Mind: Montreal July 7-18

Sponsored by Microsoft Research Connections

UQÀM Cognitive Sciences Summer School 2014

WEB SCIENCE AND THE MIND

JULY 7 - 18 2014 Université du Québec à Montréal, Montréal, Canada

Registration: http://www.summer14.isc.uqam.ca/page/inscription.php?lang_id=2

Full Programme: http://users.ecs.soton.ac.uk/harnad/Temp/AbsPrelimProg3.htm

Cognitive Science and Web Science have been converging in the study of cognition:

(i) distributed within the brain

(ii) distributed between multiple minds

(iii) distributed between minds and media

SPEAKERS AND TOPICS

Katy BORNER Indiana U Humanexus: Envisioning Communication and Collaboration

Les CARR U Southampton Web Impact on Society

Simon DeDEO Indiana U Collective Memory in Wikipedia

Sergey DOROGOVTSEV U Aveiro Explosive Percolation

Alan EVANS Montreal Neurological Institute Mapping the Brain Connectome

Jean-Daniel FEKETE INRIA Visualizing Dynamic Interactions

Benjamin FUNG McGill U Applying Data Mining to Real-Life Crime Investigation

Fabien GANDON INRIA Social and Semantic Web: Adding the Missing Links

Lee GILES Pennsylvania State U Scholarly Big Data: Information Extraction and Data Mining

Peter GLOOR MIT Center for Collective Intelligence Collaborative Innovation Networks

Jennifer GOLBECK U Maryland You Can't Hide: Predicting Personal Traits in Social Media

Robert GOLDSTONE Indiana U Learning Along with Others

Stephen GRIFFIN U Pittsburgh New Models of Scholarly Communication for Digital Scholarship

Wendy HALL U Southampton It's All In the Mind

Harry HALPIN U Edinburgh Does the Web Extend the Mind - and Semantics?

Jiawei HAN U Illinois/Urbana Knowledge Mining in Heterogeneous Information Networks

Stevan HARNAD UQAM Memetrics: Monitoring Measuring and Mapping Memes

Jim HENDLER Rensselaer Polytechnic Institute The Data Web

Tony HEY Microsoft Research Connections Open Science and the Web

Francis HEYLIGHEN Vrije U Brussel Global Brain: Web as Self-organizing Distributed Intelligence

Bryce HUEBNER Georgetown U Macrocognition: Situated versus Distributed

Charles-Antoine JULIEN McGill U Visual Tools for Interacting with Large Networks

Kayvan KOUSHA U Wolverhampton Web Impact Metrics for Research Assessment

Guy LAPALME U Montreal Natural Language Processing on the Web

Vincent LARIVIERE U Montreal Scientific Interaction Before and Since the Web

Yang-Yu LIU Northeastern U Controllability and Observability of Complex Systems

Richard MENARY U Macquarie Enculturated Cognition

Thomas MALONE MIT Collective Intelligence: What is it? How to measure it? Increase it?

Adilson MOTTER Northwestern U Bursts, Cascades and Time Allocation

Cameron NEYLON PLOS Network Ready Research: The Role of Open Source and Open Thinking

Takashi NISHIKAWA Northwestern U Visual Analytics: Network Structure Beyond Communities

Filippo RADICCHI Indiana U Analogies between Interconnected and Clustered Networks

Mark ROWLANDS Miami U Extended Mentality: What It Is and Why It Matters

Robert RUPERT U Colorado What is Cognition and How Could it be Extended?

Derek RUTHS McGill U Social Informatics

Judith SIMON ITAS Socio-Technical Epistemology

John SUTTON Macquarie U Transactive Memory and Distributed Cognitive Ecologies

Georg THEINER Villanova U Domains and Dimensions of Group Cognition

Peter TODD Indiana U Foraging in the World Mind and Online

Friday, October 11. 2013

Spurning the Better to Keep Burning for the Best

Björn Brembs (as interviewed by Richard Poynder) is not satisfied with "read access" (free online access: Gratis OA): he wants "read/write access" (free online access plus re-use rights: Libre OA).

The problem is that we are nowhere near having even the read-access that Björn is not satisfied with.

So his dissatisfaction is not only with something we do not yet have, but with something that is also an essential component and prerequisite for read/write access. Björn wants more, now, when we don't even have less.

And alas Björn does not give even a hint of a hint of a practical plan for getting read/write access instead of "just" the read access we don't yet have.

All he proposes is that a consortium of rich universities should cancel journals and take over.

Before even asking what on earth those universities would/should/could do, there is the question of how their users would get access to all those cancelled journals (otherwise this "access" would be even less than less!). Björn's reply -- doubly alas -- uses the name of my eprint-request Button in vain:

The eprint-request Button is only legal, and only works, because authors are providing access to individual eprint requestors for their own articles. If the less-rich universities who were not part of this brave take-over consortium of journal-cancellers were to begin to provide automatic Button-access to all those extra-institutional users, their institutional license costs (subscriptions) would sky-rocket, because their Big-Deal license fees are determined by publishers on the basis of the size of each institution's total usership, which would now include all the users of all the cancelling institutions, on Björn's scheme.

So back to the work-bench on that one.

Björn seems to think that OA is just a technical matter, since all the technical wherewithal is already in place, or nearly so. But in fact, the technology for Green Gratis ("read-only") OA has been in place for over 20 years, and we are still nowhere near having it. (We may, optimistically, be somewhere between 20% and 30%, though certainly not even the 50% that Science-Metrix has optimistically touted recently as the "tipping point" for OA -- because much of that is post-embargo, hence Delayed Access (DA), not OA.)

Björn also seems to have proud plans for post-publication "peer review" (which is rather like finding out whether the water you just drank was drinkable on the basis of some crowd-sourcing after you drank it).

Post-publication crowd-sourcing is a useful supplement to peer review, but certainly not a substitute for it.

All I can do is repeat what I've had to say so many times across the past 20 years, as each new generation first comes in contact with the access problem, and proposes its prima facie solutions (none of which are new: they have all been proposed so many times that they -- and their fatal flaws -- have each already had their own FAQs for over a decade). The watchword here, again, is that the primary purpose of the Open Access movement is to free the peer-reviewed literature from access-tolls -- not to free it from peer-review. And before you throw out the peer review system, make sure you have a tried, tested, scalable and sustainable system with which to replace it, one that demonstrably yields at least the same quality (and hence usability) as the existing system does.

Till then, focus on freeing access to the peer-reviewed literature such as it is.

And that's read-access, which is much easier to provide than read-write access. None of the Green (no-embargo) publishers are read-write Green: just read-Green. Insisting on read-write would be an excellent way to get them to adopt and extend embargoes, just as the foolish Finch preference for Gold did (and just as Rick Anderson's absurd proposal to cancel Green (no-embargo) journals would do).

And, to repeat: after 20 years, we are still nowhere near 100% read-Green, largely because of phobias about publisher embargoes on read-Green. Björn is urging us to insist on even more than read-Green. Another instance of letting the (out-of-reach) Best get in the way of the (within-reach) Better. And that, despite the fact that it is virtually certain that once we have 100% read-Green, the other things we seek -- read-write, Fair-Gold, copyright reform, publishing reform, perhaps even peer review reform -- will all follow, as surely as day follows night.

But not if we contribute to slowing our passage to the Better (which there is already a tried and tested means of reaching, via institutional and funder mandates) by rejecting or delaying the Better in the name of holding out for a direct sprint to the Best (which no one has a tried and tested means of reaching, other than to throw even more money at publishers for Fool's Gold). Björn's speculation that universities should cancel journals, rely on interlibrary loan, and scrap peer-review for post-hoc crowd-sourcing is certainly not a tried and tested means!

As to journal ranking and citation impact factors: They are not the problem. No one is preventing the use of article- and author-based citation counts in evaluating articles and authors. And although the correlation between journal impact factors and journal quality and importance is not that big, it's nevertheless positive and significant. So there's nothing wrong with libraries using journal impact factors as one of a battery of many factors (including user surveys, usage metrics, institutional fields of interest, budget constraints, etc.) in deciding which journals to keep or cancel. Nor is there anything wrong with research performance evaluation committees using journal impact factors as one of a battery of many factors (alongside article metrics, author metrics, download counts, publication counts, funding, doctoral students, prizes, honours, and peer evaluations) in assessing and rewarding research progress.

The problem is neither journal impact factors nor peer review: The only thing standing between the global research community and 100% OA (read-Green) is keystrokes. Effective institutional and funder mandates can and will ensure that those keystrokes are done. Publisher embargoes cannot stop them: With immediate-deposit mandates, 100% of articles (final, refereed drafts) are deposited in the author's institutional repository immediately upon acceptance for publication. At least 60% of them can be made immediately OA, because at least 60% of journals don't embargo (read-Green) OA; access to the other 40% of deposits can be made Restricted Access, and it is there that the eprint-request Button can provide Almost-OA with one extra keystroke from the would-be user to request it and one extra keystroke from the author to fulfill the request.

That done, globally, and we can leave it to nature (and human nature) to ensure that the "Best" (100% immediate OA, subscription collapse, conversion to Fair Gold, all the re-use rights users need, and even peer-review reform) will soon follow.

But not as long as we continue spurning the Better and just burning for the Best.

Stevan Harnad

Sunday, September 29. 2013

Branding vs. Freeing

C P Chandrasekhar (2013) Only the Open Access Movement can address the adverse impact of Western domination of the world of knowledge. Frontline (Oct 4 2013)

Interesting article, but I am afraid it misses the most important points:

1. As so often happens, the article takes "OA" to mean Gold OA journals, completely missing Green OA self-archiving and the importance and urgency of mandating it.

2. The article greatly underestimates the role of quality levels and quality control (peer review standards) in the perceived and actual importance and value of research findings, journal articles and journals -- focusing instead on over-reliance on and abuse of citation metrics (which does indeed occur, but is not the central problem behind disparities in (a) user access to journals to read, (b) author access to journals to publish in, or (c) researcher access to funding to do research with).

Providing OA can completely remedy (a), which will in turn help mitigate (b) and that in turn may improve (c). (OA will also greatly enrich and strengthen the variety and validity of metrics.)

But not if we instead just tilt against impact factors and press for new forms of "branding." Branding is simply the earned reputation of a journal based on its track-record for quality, and that means its peer review standards, as certified by the journal's name ("brand").

What is needed is neither new Gold OA journals, nor new forms of "branding." What is needed is Open Access to the peer-reviewed journal literature, such as it is, free for all online: peer-reviewed research needs to be freed from access-denial, not from peer review.

And the way for India and China (and the rest of the world too) to reach that is for their research institutions and funders to mandate Green OA self-archiving of all their peer-reviewed research output.

That's all there is to it. The rest is just ideological speculation, which can no more provide Open Access than it can feed the hungry, cure the sick, or protect from injustice. It simply distracts from the tried and tested practical path that needs to be taken to get the job done.

Stevan Harnad

The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.

The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access Provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)
