ASDEL: Assessment Delivery Engine for QTIv2 questions

1 INTRODUCTION

At last year's JISC/CETIS conference it was recommended that the community should 'kick start' the use of the IMS Question and Test Interoperability version 2 (QTIv2) specifications [1]. The meeting felt that, to achieve this, there needed to be a robust set of tools and services that conformed to the QTIv2 specification. R2Q2 is a recently funded JISC project that successfully implemented a rendering and response engine for a single question (also termed an item); the specification describes sixteen item types, all of which are implemented in R2Q2. While this is useful, it does not implement the part of the specification that covers the test process. The specification details how a test is to be presented to candidates, the order of the questions, the time allowed, etc.

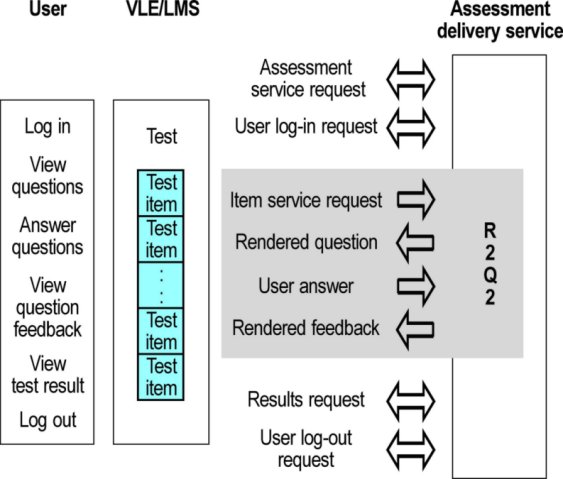

Figure 1. Overview of the test process.

Formative assessment aims to provide appropriate feedback to learners, helping them gauge more accurately their understanding of the material set. It is also used as a learning activity in its own right to form understanding and knowledge. It is something lecturers and teachers would love to do more of, but they do not have the time to develop, set, and then mark it as often as they would like. A formative e-assessment system allows lecturers and teachers to develop and set the work once. It allows learners to take the formative test at a time and place of their convenience, possibly as often as they like, to obtain meaningful feedback, and to see how well they are progressing in their understanding of the material. McAlpine (2002, p. 6) [2] also suggests that formative assessment can be used by learners to 'highlight areas of further study and hence improve future performance'. Steve Draper [3] distinguishes different types of feedback, highlighting the issue that although a system may provide feedback, its level and quality are still down to the author.

The JISC-funded reference model for assessment, FREMA, has developed a number of Service Usage Models (SUMs) on assessment, one of which is for summative assessment. This identifies the services required for a complete summative process, including many of the administrative functions. Any implementation of e-assessment, if it is to support flexible and tailored assessment for non-traditional and workplace learners as well as those in higher education, needs to provide for both summative and formative assessment. The overall process of taking a test is shown in Figure 1. While the process focuses on formative assessment, it still meets the core requirements of the summative SUM. In this project we aim to build a test engine to the IMS Question and Test Interoperability version 2.1 specifications that can be deployed as a stand-alone web application or as part of an SOA-enabled VLE.

The project will be developed in two phases. The first is the technical development of the engine in accordance with the IMS specification and based on the IMS schema. It will provide for: delivery of an assessment consisting of an assembly of QTI items; scheduling of assessments against users and groups; delivery of items using a web interface, including marking and feedback; and a Web service API for retrieving assessment results. The second phase will integrate with the other projects in this call on item banking and item authoring. If you are interested in tools for QTI item banking and item authoring, please see our sister projects, MiniBix and AQuRate.

2 PROJECT DESCRIPTION

The aim of this project is to provide a test engine that complies fully with the IMS QTIv2.1 specification, to integrate with the other JISC-funded projects from this call on item banking and item authoring, and to support projects that may be funded under the April 2007 circular. While not required for this implementation, the software will be developed to be extensible, with a view to supporting sequencing and adaptive logic in assessments and other future enhancements. The project will be developed in two phases.

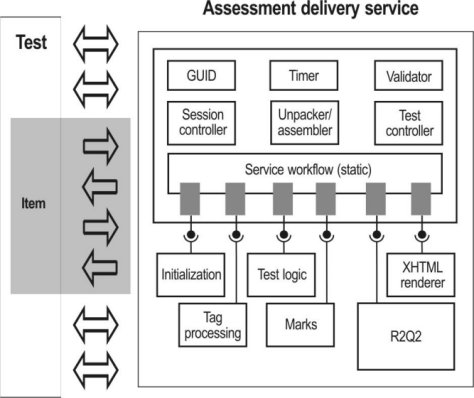

Figure 2. Original Architecture for the Assessment Delivery system.

We will liaise with the two other projects involved in the Assessment call II of the e-Learning Programme to agree on collaboration and to form a Projects Steering Group. These projects are AQuRate: Item Authoring (Kingston) and MiniBix: Item Banking (Cambridge).

Phase 1 is the technical development of the engine in accordance with the IMS QTIv2.1 specification and with the JISC e-framework approach of using web services in a Service Oriented Architecture (see Figure 2). The engine will take in a test as an IMS Content Package. It will unpack the content package and assemble the items into an assessment. It will import any additional material (images, videos, etc.) required by the test, and it will then process the XML and deliver the test as scheduled to the candidate via a Web interface. Feedback will be given to the candidate and the marks processed in accordance with the schema sent to the engine. The results can be retrieved through the engine API. Throughout Phase 1 we will ensure liaison and coordination with the Item Authoring and Item Banking projects. At the end of Phase 1 we will have a 'Show and Tell' event, possibly at a CETIS Assessment SIG.
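The unpacking step can be illustrated with a minimal sketch. It is not the ASDEL implementation: it only assumes that a content package is a zip archive with an `imsmanifest.xml` at its root, and the function names, file names and demo package are hypothetical. The resource type strings in the demo manifest are assumed to follow the QTI 2.1 packaging conventions.

```python
import io
import zipfile
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip any '{namespace}' prefix from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def list_package_resources(package_bytes):
    """Return (href, type) pairs for each <resource> in imsmanifest.xml."""
    with zipfile.ZipFile(io.BytesIO(package_bytes)) as package:
        manifest = ET.fromstring(package.read("imsmanifest.xml"))
    return [
        (element.get("href"), element.get("type") or "")
        for element in manifest.iter()
        if local_name(element.tag) == "resource"
    ]


def build_demo_package():
    """Build a tiny in-memory package so the sketch runs without external files."""
    manifest = (
        '<manifest xmlns="http://www.imsglobal.org/xsd/imscp_v1p1" identifier="demo">'
        "<resources>"
        '<resource identifier="test1" type="imsqti_test_xmlv2p1" href="test.xml"/>'
        '<resource identifier="item1" type="imsqti_item_xmlv2p1" href="items/q1.xml"/>'
        "</resources></manifest>"
    )
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as package:
        package.writestr("imsmanifest.xml", manifest)
        package.writestr("test.xml", "<assessmentTest/>")
        package.writestr("items/q1.xml", "<assessmentItem/>")
    return buffer.getvalue()


# Separate the test document from the items it will be assembled from.
resources = list_package_resources(build_demo_package())
tests = [href for href, kind in resources if "test" in kind]
items = [href for href, kind in resources if "item" in kind]
```

A real engine would go on to extract the referenced files and any media, and to parse the test document itself; the sketch stops at identifying which resources are tests and which are items.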

Phase 2 will integrate the deliverables from the AQuRate: Item Authoring (Kingston) and MiniBix: Item Banking (Cambridge) projects and produce an integrated demonstrator. AQuRate will allow people to author items, which are stored in MiniBix. A test will incorporate these items and will be played through ASDEL.

Feedback from JISC and the advisory group suggested that integration into a VLE may not necessarily be an advantage at this stage, in part because these three projects are not building the complete suite of tools needed to provide an alternative to the tools that currently exist for most VLEs. However, building a lightweight suite of tools that early adopters can use to construct tests and deliver them to students in a formative setting may be more effective (see Figure 3).

Figure 3. Integration with other projects.

The first phase was the technical development of the engine in accordance with the IMS specification and based on the IMS schema. Rather than just build a tool, we first developed a library, JQTI, incorporating all of the test functions required in the IMS QTIv2 specification. While this meant that it took the full six months to build the first tools, the library will help us build the other tools quickly, and it also allows people in the future to build a different tool that suits some new requirement.

The delivery engine was built using the JQTI library; we have called this engine 'QTI Playr'. We have built a number of tests to show off the power of the Playr. To ensure that we have not just built tests to fit our player, we have also arranged for Graham Smith to develop some more tests.

We also built a QTI Validatr, which is essential to ensure that a test is constructed correctly. Not only will it validate the test, it also gives indications of the error(s). In the same way as an Integrated Development Environment for writing program code, the Validatr will also allow experienced users to correct the XML of the test. The Validatr also has a visual front end that allows users to see the structure of the test and the different paths students can take through the test (see Figure 4).

Figure 4. The Validatr screenshot.
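To give a flavour of the kind of check a validator performs, the sketch below reports problems in a test document as readable messages. It is only an illustration under stated assumptions: the function `check_test` and the particular checks (well-formed XML, an `assessmentTest` root, unique `assessmentItemRef` identifiers, and an `href` on every item reference) are hypothetical and cover far less than the Validatr or the QTI schema.

```python
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip any '{namespace}' prefix from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def check_test(xml_text):
    """Return a list of readable problems; an empty list means none were found."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as error:
        line, column = error.position
        return [f"not well-formed XML at line {line}, column {column}"]

    problems = []
    if local_name(root.tag) != "assessmentTest":
        problems.append(
            f"root element is <{local_name(root.tag)}>, expected <assessmentTest>"
        )

    seen = set()
    for element in root.iter():
        if local_name(element.tag) != "assessmentItemRef":
            continue
        identifier = element.get("identifier")
        if not identifier:
            problems.append("assessmentItemRef without an identifier")
        elif identifier in seen:
            problems.append(f"duplicate item identifier '{identifier}'")
        else:
            seen.add(identifier)
        if not element.get("href"):
            problems.append(f"item '{identifier}' has no href")
    return problems


# A deliberately faulty test: the second item reuses an identifier and has no href.
faulty = (
    "<assessmentTest>"
    '<assessmentItemRef identifier="q1" href="a.xml"/>'
    '<assessmentItemRef identifier="q1"/>'
    "</assessmentTest>"
)
for problem in check_test(faulty):
    print(problem)
```

Returning every problem found, rather than stopping at the first, matches the idea of giving the author indications of the error(s) to correct.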

A lightweight manager of the tests, called Assessr, will handle the upload of class lists and the return of the results. The test delivery engine only delivers the test, so the aim of the Assessr is to manage the test for the academic. We currently have it uploading a class list from a spreadsheet produced by our Banner system for each course. Banner is the student record system used here at Southampton and at many other universities in the UK. We felt that using a spreadsheet would give the academic some flexibility to form different groups, etc. The academic can then schedule the test and put embargoes on the release of the test information, etc. The Assessr will then send each student a unique test number (a token) and a URL for the test. The student then logs into the Playr using the token and takes the test. The Assessr allows the academic to see which tests they have set, who has taken them, and which tests are shared with someone else. We often teach in teams here, so other academics may need to see the results of a test (see Figure 5).

Figure 5. The Assessr tool.
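The step of turning a class list into unique tokens and test URLs can be sketched as follows. This is an illustration only, not the Assessr code: the column names `student_id` and `email`, the function `issue_tokens` and the example URL are all assumptions, and the class list is assumed to have been exported from the spreadsheet as CSV.

```python
import csv
import io
import secrets


def issue_tokens(class_list_csv, test_url):
    """Map each student in the class list to a unique token and a personal link."""
    invitations = {}
    used = set()
    for row in csv.DictReader(io.StringIO(class_list_csv)):
        token = secrets.token_urlsafe(16)
        while token in used:  # collisions are extremely unlikely, but cheap to rule out
            token = secrets.token_urlsafe(16)
        used.add(token)
        invitations[row["student_id"]] = {
            "email": row["email"],
            "token": token,
            "link": f"{test_url}?token={token}",
        }
    return invitations


# Hypothetical class list and delivery URL, for illustration only.
class_list = "student_id,email\n1001,ab1@example.org\n1002,cd2@example.org\n"
invitations = issue_tokens(class_list, "https://playr.example.org/test")
```

Using randomly generated tokens rather than student identifiers means a link cannot be guessed from the class list, which matters if results are to be attributed to the right student.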

We also built a lightweight test construction tool, called Constructr. The tool is called a constructor to distinguish it from item authoring. It is an extremely lightweight tool that simply allows you to select a pool of questions from an item bank and put them into a basic test. The main aim of this tool is to integrate with MiniBix and to help demonstrate an end-to-end story.
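As an illustration of what putting selected items into a basic test involves, the sketch below wraps a list of item references in the QTI 2.1 `assessmentTest`, `testPart` and `assessmentSection` elements. It is not the Constructr implementation: the function `build_basic_test`, the fixed identifiers `part1` and `section1`, and the item paths are hypothetical, and the output has not been checked against the QTI schema.

```python
import xml.etree.ElementTree as ET

QTI_NS = "http://www.imsglobal.org/xsd/imsqti_v2p1"


def build_basic_test(test_id, title, item_hrefs):
    """Return the XML text of a one-part, one-section test referencing the items."""
    ET.register_namespace("", QTI_NS)  # serialise QTI as the default namespace
    test = ET.Element(f"{{{QTI_NS}}}assessmentTest", identifier=test_id, title=title)
    part = ET.SubElement(
        test,
        f"{{{QTI_NS}}}testPart",
        identifier="part1",
        navigationMode="linear",
        submissionMode="individual",
    )
    section = ET.SubElement(
        part,
        f"{{{QTI_NS}}}assessmentSection",
        identifier="section1",
        title=title,
        visible="true",
    )
    for number, href in enumerate(item_hrefs, start=1):
        ET.SubElement(
            section,
            f"{{{QTI_NS}}}assessmentItemRef",
            identifier=f"item{number}",
            href=href,
        )
    return ET.tostring(test, encoding="unicode")


# Hypothetical items, as if they had been selected from an item bank.
xml_text = build_basic_test("demo", "Demo test", ["items/q1.xml", "items/q2.xml"])
print(xml_text)
```

The items themselves are left untouched; the test only refers to them by `href`, which is what lets the same pool of banked questions be reused across tests.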

All ASDEL software is available at www.qtitools.org

[1] http://www.imsglobal.org/question/

[2] Mhairi McAlpine (2002) Principles of Assessment, Bluepaper Number 1, CAA Centre, University of Luton, February.

[3] Stephen W. Draper (2005) Feedback, A Technical Memo, Department of Psychology, University of Glasgow, 10 April 2005: http://www.psy.gla.ac.uk/~steve/feedback.html