Thursday, July 30. 2009

Conflating Open Access With Copyright Reform: Not Helpful to Open Access

Critique of: Shavell, Steven (2009) Should Copyright Of Academic Works Be Abolished?

SUMMARY: Professor Shavell's paper on copyright abolition conflates (i) books with journal articles, (ii) Gold OA with Green OA, and (iii) the problem of Open Access with the problem of copyright reform. Although copyright reservation by authors and copyright reform are always welcome, they are unnecessary for universal Green OA; and needlessly suggesting that copyright reservation/reform is or ought to be made a prerequisite for OA simply slows down progress toward reaching the universal Green OA that is already fully within the global research community's grasp.

Professor Shavell's paper contains useful analysis and advice about scholarly/scientific book publication, economics and copyright in the digital era, but on the subject of refereed journal articles and open access it contains too many profound misunderstandings to be useful.

[S. Shavell's Summary] "The conventional rationale for copyright of written works, that copyright is needed to foster their creation, is seemingly of limited applicability to the academic domain. For in a world without copyright of academic writing, academics would still benefit from publishing in the major way that they do now, namely, from gaining scholarly esteem. Yet publishers would presumably have to impose fees on authors, because publishers would not be able to profit from reader charges. If these publication fees would be borne by academics, their incentives to publish would be reduced. But if the publication fees would usually be paid by universities or grantors, the motive of academics to publish would be unlikely to decrease (and could actually increase) -- suggesting that ending academic copyright would be socially desirable in view of the broad benefits of a copyright-free world. If so, the demise of academic copyright should be achieved by a change in law, for the "open access" movement that effectively seeks this objective without modification of the law faces fundamental difficulties."

[Note: Professor Shavell's posting as well as the critique below are unrefereed, unpublished drafts ("preprints"). They have both been posted publicly, but they do not constitute formal "publications" in the academic sense, let alone peer-reviewed publications. In particular, Professor Shavell's working draft was posted in order to solicit comments, on the basis of which it is likely to be revised before being submitted for publication. These unpublished postings can, however, be referred to and cited, as long as the user is careful to make it clear that they are prepublication working drafts rather than lapidary refereed postprints that have been accepted for publication by a journal. This is all part of evolving scholarly practice in the online era.]

(1) What are "academic works"? Shavell largely conflates the problem of book access/economics/copyright and journal-article access/economics/copyright, as well as their respective solutions.

The book and article problems are far from the same, and hence neither are their solutions. (And even among books, the boundary between trade books and "academic" books is fuzzy; nor is an esoteric scholarly monograph the same sort of thing as a textbook, a handbook, or a popularization for the general public by a scholar, although they are all "academic.")

Books are single items, bought one-time by individuals and institutions -- journal articles are parts of serials, bought as annual subscriptions, mostly by institutions.

Books are still largely preferred by users in analog form, not digital-only -- journal articles are increasingly sought and used in digital form, for onscreen use or local storage and print-off. (OA only concerns online access.)

Print-on-paper books still cost a lot of money to produce -- digital journal article-texts are generated by their authors. In the online age, journals need only provide peer review and certification (by the journal's title and track-record): no print edition, production or distribution is necessary.

It is not clear that for most or even many authors of "academic works" (whatever that means) the sole "benefit" sought is scholarly uptake and impact ("scholarly esteem"), rather than also the hope of some royalty revenue -- whereas it is certain that all journal article authors, without a single exception, do indeed seek solely scholarly uptake and impact and nothing else.

(2) What is Open Access? Shavell largely conflates fee-based Gold OA (journal publishing) and Green OA (journal-article self-archiving), focusing only on the former, and stressing the deterrent effect of having to pay publishing fees.

(3) Why Pay Pre-Emptive Gold OA Fees? Gold OA publishing fees are certainly a deterrent today. But no publishing fees need be paid for Green OA while institutional subscriptions are still paying the costs of journal publishing.

If and when universal Green OA -- generated by universal Green OA self-archiving mandates from institutions (and funders) worldwide -- should eventually cause institutions to cancel their journal subscriptions, rendering subscriptions no longer a sustainable way of recovering the costs of journal publishing, journals will cut costs, phase out inessential products and services that are currently co-bundled into subscriptions, and downsize to just providing and certifying peer review, its much lower costs paid for on the fee-based Gold OA cost-recovery model out of the institutional windfall subscription cancellation savings.

Shavell instead seems to think that OA would somehow need to be paid for right now, by institutions and funders, out of (unspecified) Gold OA funds, even though subscriptions are still paying for publication today, and even though the pressing need is for OA itself, not for the money to pay for fee-based Gold OA publishing.

Universal OA can be provided by mandating Green OA today. There is no need whatsoever for any extra funds to pay for Gold OA.

(4) Why/How is OA a Copyright Issue at all? Shavell largely conflates the issue of copyright reform with the issue of Open Access, suggesting that the way to provide OA is to abolish copyright.

This is not only incorrect and unnecessary, but redirecting the concerted global efforts that are needed to universalize Green OA Mandates toward copyright reform or abolition will again just delay and deter progress towards universal Green OA.

Green OA can be (and is being) mandated without any need to abolish copyright (nor to find extra money to pay Gold OA fees).

Shavell seems to be unaware that over 90% of journals already endorse Green OA self-archiving in some form, 63% endorsing Green OA self-archiving of the refereed final draft immediately upon acceptance for publication. That means at least 63% Immediate Green OA is already potentially available, if mandated (in contrast to the 15% [not 5%] actual Green OA that is being provided spontaneously, i.e., unmandated, today).

And for the remaining 37% of journal articles, the Green OA mandates can require them to be likewise deposited immediately, as "Closed Access" instead of Open Access during any publisher access embargo, with the Institutional Repository's "email eprint request" Button tiding over research usage needs by providing "Almost OA" during any embargo.

This universally mandated 63% OA + 37% Almost-OA will not only provide almost all the research usage and impact that 100% OA will, but it will also hasten the well-deserved death of publisher access embargoes, under the mounting pressure for 100% OA, once the worldwide research community has at last had a taste of 63% OA + 37% Almost-OA (compared to the unmandated c. 15% OA -- not 4.6% as in Shavell's citation -- that we all have now).

In conclusion: Professor Shavell's paper on copyright abolition conflates (i) books with journal articles, (ii) Gold OA with Green OA, and (iii) the problem of Open Access with the problem of copyright reform. Although copyright reservation by authors and copyright reform are always welcome, they are unnecessary for universal Green OA; and needlessly suggesting that copyright reservation/reform is or ought to be made a prerequisite for OA simply slows down progress toward reaching the universal Green OA that is already fully within the global research community's grasp.

Stevan Harnad

American Scientist Open Access Forum

Wednesday, July 29. 2009

The Power and Purpose of the Email Eprint Request Button

KG: "I do not think that using the request button is a valid OA strategy. My own experience was that I received few response when requesting an article. The St. Gallen IR manager said that requesters can obtain much more positive results when mailing to the scholar directly."

(1) Michael White reported that the response rates for the email eprint request button at U. Stirling are about 50% fulfillment, 5% refusal and 45% no response.

(2) He also said that some of the no-responses may have been (2a) elapsed email addresses, (2b) temporary absence, (2c) embargoed theses, and (2d) author unfamiliarity with the purpose and use of the email eprint request Button.

(3) He also noted that the response rates may well improve with time. (I would add that that's virtually certain: It is still exceedingly early days for the Button. And time -- as well as the growing clamor for access [and impact] -- is on the Button's side.)

(4) It is harder to imagine why and how the long and complicated (and obsolescent) alternative procedure -- of a user discovering an article that has not been deposited by the author, finding the author's email address, and sending him an email eprint request, to which the author must respond by sending an email and attaching the eprint -- would "obtain much more positive results" than the author depositing the article in his IR, once, and letting the IR's Button send the email requests for the requesters to the author with no need for look-up, and only one click needed from the author to fulfill the request.
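The division of labor described in (4) can be sketched as a toy model. This is a minimal illustration, not the code of any actual repository software: the `Deposit` class and the `request_eprint` and `fulfill` functions are hypothetical names invented here, and real email sending is replaced by returned strings.

```python
from dataclasses import dataclass, field


# Hypothetical model of a repository deposit; field names are illustrative,
# not the schema of any real repository package.
@dataclass
class Deposit:
    title: str
    author_email: str
    open_access: bool  # False = Closed Access: metadata visible, full text not
    pending_requests: list = field(default_factory=list)


def request_eprint(deposit: Deposit, requester_email: str) -> str:
    """The requester clicks the Button on the deposit's public metadata page."""
    if deposit.open_access:
        return "full text already open; no request needed"
    # The repository, not the requester, forwards the request to the author,
    # so the requester never has to look up the author's email address.
    deposit.pending_requests.append(requester_email)
    return f"request forwarded to {deposit.author_email}"


def fulfill(deposit: Deposit, requester_email: str) -> str:
    """The author's single click: the repository emails the eprint."""
    deposit.pending_requests.remove(requester_email)
    return f"eprint of '{deposit.title}' emailed to {requester_email}"
```

The point of the sketch is where the work sits: the author deposits once, and every subsequent request costs the requester one click and the author one click, with no address look-up or manual attachment on either side.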


(5) The email eprint request Button does not provide OA; it only provides "Almost OA." But that's infinitely better than no OA. And the Button (and the Immediate-Deposit/Optional-Access -- ID/OA -- Mandate, for which the Button was designed) make it possible for institutions and funders to adopt Green OA mandates that neither need to allow exemptions from immediate deposit nor need to allow publishers to dictate whether or when the deposit is made. (If publishers have a say, it is only about whether and when the deposit is made OA, not about whether or when the deposit is made at all. Since 63% of journals are already Green on immediate OA, the ID/OA Button means that an institution or funder can reach uncontroversial consensus on requiring 100% deposit, which then yields at least 63% immediate OA and 37% Almost-OA, whereas the alternative is not arriving at a consensus on mandating OA at all, or adopting a weaker mandate that only provides OA after an embargo period, or only at the publisher's behest, or allows author opt-out. And the most important thing is not only that the ID/OA mandate provides more access and is easier to reach agreement on adopting, but that it will also quite naturally drive embargoes into their well-deserved graves, as the mandates and their resulting OA -- and the demand for it -- grow and grow.)

KG: "The Oppenheim/Harnad 'preprint & corrigenda' strategy 'of tiding over a publisher's OA embargo: Make the unrefereed preprint OA before submitting to the journal, and if upon acceptance the journal seeks to embargo OA to the refereed postprint, instead update the OA preprint with a corrigenda file' is a valid OA strategy because the eprint is PUBLIC."

What makes a strategy "valid" is that it works: increases access, Open Access, and Open Access mandates.

Both the "preprint&corrigenda" strategy and the "ID/OA-mandate&Button" strategy can increase access, OA, and OA mandates, but the ID/OA-mandate&Button strategy is universal: it scales up to cover all of OA's target content, whereas the preprint&corrigenda strategy is not universal, for it does not and cannot cover those disciplines (and individual authors) that have good (and bad) reasons not to want to make their unrefereed preprints public.

KG: "If an article is published then the author hasn't any right under OA aspects to choose which requester has enough 'dignity' to receive an eprint. I cannot accept the arbitrariness of such a decision under OA circumstances."

Relax. The reason neophyte self-archiving authors are not fulfilling Button requests is that they are either not receiving them or don't yet understand what to do with them, not that they are making value judgments about who does and does not merit the privilege of accessing their work!

They'll learn: If necessary, they'll learn under the pressure of the impact-weighting of publications in performance evaluation. But my hunch is that they already know they want the user-access and user-impact (from the eager way they do vanity-searches in the bibliography of every work they pick up in their research field, to check whether their own work has been cited). So all they really need to learn now is how the Button works, and why.

Stevan Harnad

American Scientist Open Access Forum

Fifty Years of Author Fulfillment of Reprint/Eprint Requests

SH: "[T]here is nothing either defensible or enforceable that a publisher can do or say to prevent a researcher from personally distributing individual copies of his own (published) research findings to individual researchers, for research purposes, in any form he wishes, analog or digital, at any time. That is what researchers have been doing for many decades, whether or not their right to do so was formally enshrined in a publisher's 'author-re-use' document."

RQJ: "This discussion strikes at the heart of green OA implementation. Among other things, it's why we have mandates."

Actually that's not correct. What I was referring to above -- authors mailing an individual analog reprint or emailing an individual digital eprint to an individual requester for research purposes -- predates both OA (Green and Gold) and (Green) OA mandates.

The only connection with Green OA mandates is that email eprint requests for Closed Access deposits whose metadata are openly accessible allow users to request -- and authors to provide -- individual one-on-one "Almost OA" during any OA embargo period: That way Green OA mandates can require deposit of the final refereed draft immediately upon acceptance, with no exceptions or opt-outs, no matter how foolish a copyright transfer agreement the author may have signed.

If a Green OA mandate does not require immediate deposit, then it is completely at the mercy of publisher OA embargoes: The author deposits only if and when the publisher stipulates that he may deposit, because all deposits are OA. If, instead, immediate deposits are required in every case, without exception, but where OA is publisher-embargoed the deposit may instead be made Closed Access during the embargo, rather than OA, then the email eprint request button allows the author to provide "Almost OA" on an individual, case-by-case basis for the Closed Access articles during the embargo.

But if the mandate instead requires deposit only after the publisher embargo has elapsed, that means the only access during the embargo period is subscriber-access. That means a great loss of potential research usage and impact.
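The contrast between the two kinds of mandate can be made concrete with a small decision function. It is a sketch under simplifying assumptions, not any institution's actual policy logic: the function name `deposit_and_access` is hypothetical, and the embargo is expressed in days rather than months to keep the date arithmetic exact.

```python
from datetime import date, timedelta


def deposit_and_access(accepted: date, embargo_days: int, today: date) -> tuple[str, str]:
    """Hypothetical ID/OA rule: deposit is always immediate; only access varies."""
    if today < accepted:
        raise ValueError("the paper has not yet been accepted")
    # Immediate Deposit: required for every paper on acceptance, with no
    # exemptions and no dependence on the publisher's policy.
    deposit = f"deposited {accepted.isoformat()}"
    # Optional Access: Open Access unless a publisher embargo is still running.
    embargo_over = today >= accepted + timedelta(days=embargo_days)
    if embargo_days <= 0 or embargo_over:
        return deposit, "Open Access"
    # During the embargo the full text is Closed Access, but the metadata are
    # visible and the email eprint request Button provides "Almost OA".
    return deposit, "Closed Access + email eprint request Button"
```

Under a deposit-after-embargo mandate, by contrast, the embargoed case would yield no deposit at all until the embargo had elapsed, so there would be nothing for the Button to act on and only subscribers would have access in the meantime.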

RQJ: "I believe Harnad is likely incorrect as a matter of law (at least in the US), but ultimately this may end up as a court case that gives us more explicit guidance."

If researchers sending individual reprints and eprints to individual requesters for research purposes has not gone to court for over half a century, it is difficult to imagine why anyone would think it will go to court now: Publishers suddenly begin suing their authors for fulfilling reprint requests?

RQJ: "Note that 'research findings' (which are the stuff of patent or academic integrity if protected at all) are very different from their expression in text, which is what is transferred through the copyright agreement."

We are not talking about research findings, we are talking about copies of verbatim (published) reports of research findings: sending them to individual requesters, as scholars and scientists have been doing for over half a century (since at least the launch of Eugene Garfield's "Current Contents" and "Request-a-print" cards):

Swales, J. (1988), Language and scientific communication. The case of the reprint request. Scientometrics 13: 93–101. "This paper reports on a study of Reprint Requests (RRs). It is estimated that tens of millions of RRs are mailed each year, most being triggered by Current Contents..."

RQJ: "Note also that 'what researchers have been doing for many decades' is disputable -- arguably what researchers did ante-Xerox was distribute the 100 or so offprints of their article that they got as part of their Faustian bargains."

They could also mail out copies of their revised, accepted final drafts.

And whether or not any of that was "disputable" before xerox, it certainly wasn't ever contested -- neither with the onset of the xerox era, nor with the onset of the email era.

RQJ: "Note also that courts would be under strong conflicting pressures if a case like this ever actually got heard. On the one hand, Harnad's point is good that courts would want to identify ways to find for those sympathetic scholarly authors. On another, anyone who has been following the RIAA (or remembers Eldred) knows that some of the courts also have tried to find in favor of the owners of the copyrighted works and in favor of sanctity of contract."

Notice that in all other cases but this very special one (refereed research journal articles) both author and publisher were allied on the same side of the copyright/access divide: both wanted to protect access to their (joint) product (and revenues) from piracy by third parties.

In stark contrast, in this one anomalous case -- author give-away research, written purely for maximal uptake, usage and impact, not at all for royalty revenue -- the publisher and the author are on opposite sides of the copyright/access divide, and publishers would not be suing pirates, but the authors of their own works (and not "works for hire!").

I would say that the differences from all prior cases are radical enough here to safely conclude that all prior bets are off, insofar as citing precedents and analogies are concerned.

And I would say that the de facto uncontested practices of millions of scholars and scientists annually, for decades and well into both the photocopy and the email eras, bear this out.

And although individual reprint/eprint request-fulfillment by authors is definitely not OA (though it is a harbinger of it), the growing clamor for OA today is surely making it all the harder for publishers now suddenly to do an abrupt about-face, endeavoring to contest individual reprint/eprint request-fulfillment by authors after all this time -- and now, of all times!

RQJ: "On a third hand, the institutional employers of the researchers might well try to assert WmfH or other compulsory license theories that trumped the publisher's copyright."

You are thinking here about what institutions (and funders) could do to force the issue insofar as OA is concerned (and I agree, they do have an exceedingly strong hand, and could and should use it if it proves necessary).

But that is not even what we are talking about here: We are just talking about the longstanding pre-OA practice of individual reprint/eprint request-fulfillment by authors, for research purposes...

RQJ: "On a fourth, there's the public interest in 'the Progress of Science' and a dearth of good empirical data as to which copyright regimes actually do promote that progress."

All worthy and worthwhile, but probably not necessary, as neither individual reprint/eprint request-fulfillment by authors nor Immediate-Deposit/Optional-Access (ID/OA) mandates are copyright matters.

RQJ: "...Will it ever go to court? Maybe not. The publishers might win their particular case but lose the war by triggering a revolution."

What is the "it" that you are wondering about? Over 90% of journals are already Green on immediate, unembargoed OA self-archiving in some form (63% for the refereed postprint, a further 32% for the unrefereed preprint).

So are you wondering whether the non-Green journals will try to sue their authors? No, they won't. At most, some may try to send them take-down notices, which their authors will either choose to honor or ignore.

But that isn't even what we are talking about here: We are talking about individual reprint/eprint request-fulfillment by authors, for research purposes: Wouldn't the time for authors to worry about that have been 50 years ago, before they began doing it, rather than now, when they and their children and grand-children have already been doing it with impunity for generations?

Stevan Harnad

American Scientist Open Access Forum

Monday, July 27. 2009

UK's 26th Green Open Access Mandate, Planet's 95th: Leicester

University of Leicester

(UK institutional-mandate)

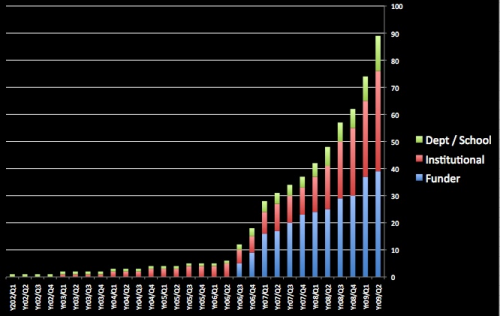

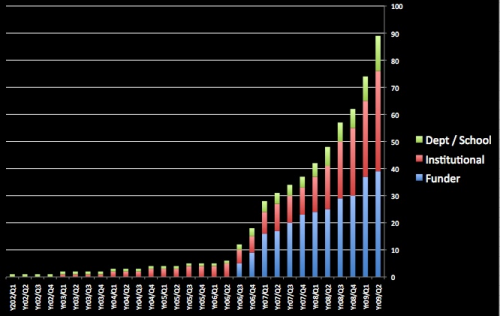

Institution's OA Repository: [growth data]

Institution's/Department's OA Self-Archiving Policy

The University, following a decision ratified by Senate on 27 May 2009, has joined a growing number of UK institutions, including UCL, Edinburgh, Glasgow and Southampton, in adopting an open access mandate for research publications. Open access means that a research publication can be freely accessed by anyone using an internet connection.

Academics are now required to submit their research publications to both the open access web-based Leicester Research Archive (LRA) and the internal central research publications database (RED). The LRA includes full text versions of publications where publishers' terms allow it (or the bibliographic reference otherwise); RED includes only bibliographic references.

This policy will:

-- enable analysis of Leicester’s outputs in the run up to REF and inform the selection of submissions;

-- enhance the visibility of Leicester’s research, increasing usage;

-- assist with compliance with research funders’ open access policies and their open access audits. The policies of the Research Councils and other funding bodies can be found in this document;

-- showcase Leicester’s research to potential collaborators and prospective postgraduate students;

-- ensure a publication’s long term preservation.

Friday, July 24. 2009

UK's 25th Green Open Access Mandate, Planet's 94th: Westminster

University of Westminster

(UK institutional-mandate)

Institution's OA Repository: [growth data]

Institution's/Department's OA Self-Archiving Policy

University of Westminster Policy on Dissemination of Research and Scholarly Output:

In line with current changes in research funders’ conditions, the Research Committee has approved a policy regarding the dissemination through open access of journal articles and conference papers. From 2007, academic members of staff are now required to deposit the final author-formatted version of all articles and conference papers they produce, subject to publishers’ policies, in WestminsterResearch.

Registered by: Nina Watts (Metadata Librarian) wattsn AT westminster.ac.uk on 24 Jul 2009

WestminsterResearch is the University of Westminster's online repository, containing the research output of the University's academic community. This archive aims to capture the intellectual output of the University and be a resource containing details of all research and scholarly output.

Thursday, July 23. 2009

Post-Publication Metrics Versus Pre-Publication Peer Review

Patterson, Mark (2009) PLoS Journals – measuring impact where it matters writes:

And yes, multiple post-publication metrics will be a great help in navigating, evaluating and analyzing research influence, importance and impact.

But it is a great mistake to imagine that this implies that peer review can now be done on just a generic "pass/fail" basis.

Purpose of Peer Review. Not only is peer review dynamic and interactive -- improving papers before approving them for publication -- but the planet's 25,000 peer-reviewed journals differ not only in the subject matter they cover but also, within a given subject matter, (often quite substantially) in their respective quality standards and criteria.

It is extremely unrealistic (and would be highly dysfunctional, if it were ever made to come true) to suppose that these 25,000 journals are (or ought to be) flattened to provide a 0/1 pass/fail decision on publishability at some generic adequacy level, common to all refereed research.

Pass/Fail Versus Letter-Grades. Nor is it just a matter of switching all journals from assigning a generic pass/fail grade to each assigning its own letter grade (A-, B+, etc.), even though that is effectively what the current system of multiple, independent peer-reviewed journals provides. For not only do journal peer-review standards and criteria differ, but the expertise of their respective "peers" differs too. Better journals have better and more exacting referees, exercising more rigorous peer review. (So the 25,000 peer-reviewed journals today cannot be thought of as one generic peer-review filter that accepts papers for publication in each field with grades between A+ and E; rather there are A+ journals, B- journals, etc.: each established journal has its own independent standards, to which its submissions are answerable.)

Track Records and Quality Standards. And users know all this, from the established track records of the journals they consult as readers and publish in as authors. Whether or not we like to put it that way, this all boils down to selectivity across a Gaussian distribution of research quality in each field. There are highly selective journals, that accept only the very best papers -- and even those often only after several rounds of rigorous refereeing, revision and re-refereeing. And there are less selective journals, that impose less exacting standards -- all the way down to the fuzzy pass/fail threshold that distinguishes "refereed" journals from journals whose standards are so low that they are virtually vanity-press journals.

Supplement Versus Substitute. This difference (and independence) among journals in terms of their quality standards is essential if peer review is to serve as the quality enhancer and filter that it is intended to be. Of course the system is imperfect, and, for that reason alone (among many others), a rich diversity of post-publication metrics is an invaluable supplement to peer review. But they are certainly no substitute for pre-publication peer review or, most importantly, its quality triage.

Quality Distribution. So much research is published daily in most fields that on the basis of a generic 0/1 quality threshold, researchers simply cannot decide rationally or reliably what new research is worth the time and investment to read, use and try to build upon. Researchers and their work differ in quality too, and they are entitled to know a priori, as they do now, whether or not a newly published work has made the highest quality cut, rather than merely that it has met some default standards, after which users must wait for the multiple post-publication metrics to accumulate across time in order to be able to have a more nuanced quality assessment.

Rejection Rates. More nuanced sorting of new research is precisely what peer review is about, and for, especially at the highest quality levels. Although authors (knowing the quality track-records of their journals) mostly self-select, submitting their papers to journals whose standards are roughly commensurate with their quality, the underlying correlate of a journal's refereeing quality standards is basically its relative rejection rate: What percentage of each year's papers in its designated subject matter would meet its standards (if all were submitted to that journal, and the only constraint on acceptance were the quality level of the article, not how many articles the journal could manage to referee and publish per year)?
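The hypothetical rejection rate in that question can be illustrated under one explicit assumption: that the quality of a field's papers is normally distributed (the Gaussian distribution of research quality mentioned above), with each journal accepting only papers above its own fixed threshold and, as stipulated, no limit on how many it can publish. The journal labels and thresholds below are invented for illustration; no journal publishes such a number.

```python
import math


def acceptance_rate(threshold_sd: float) -> float:
    """Share of a field's papers at or above a journal's quality threshold,
    assuming paper quality is standard-normal (threshold in standard deviations).
    P(Z >= t) = 1 - Phi(t) = 0.5 * erfc(t / sqrt(2))."""
    return 0.5 * math.erfc(threshold_sd / math.sqrt(2))


def rejection_rate(threshold_sd: float) -> float:
    """Share of the field's papers that would fail the journal's standards."""
    return 1.0 - acceptance_rate(threshold_sd)


# Hypothetical journals, from most to least exacting.
thresholds = {"A+ journal": 2.0, "B journal": 1.0, "C journal": 0.0, "pass/fail floor": -1.0}
for name, t in thresholds.items():
    print(f"{name}: would reject {rejection_rate(t):.0%} of the field's papers")
```

Under these assumptions the four hypothetical journals would reject roughly 98%, 84%, 50% and 16% of the field's papers. Collapsing them all to the single lowest threshold is what a generic pass/fail standard would amount to.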

Quality Ranges. This independent standard-setting by journals effectively ranges the 25,000 titles along a rough letter-grade continuum within each field, and their "grades" are roughly known by authors and users, from the journals' track-records for quality.

Quality Differential. Making peer review generic and entrusting the rest to post-publication metrics would wipe out that differential quality information for new research, and force researchers at all levels to risk pot-luck with newly published research (until and unless enough time has elapsed to sort out the rest of the quality variance with post-publication metrics). Among other things, this would effectively slow down instead of speeding up research progress.

Turn-Around Time. Of course pre-publication peer review takes time too; but if its result is that it pre-sorts the quality of new publications in terms of known, reliable letter-grade standards (the journals' names and track-records), then it's time well spent. Offloading that dynamic pre-filtering function onto post-publication metrics, no matter how rich and plural, would greatly handicap research usability and progress, and especially at its all-important highest quality levels.

More Value From Post-Publication Metrics Does Not Entail Less Value From Pre-Publication Peer Review. It would be ironic if today's eminently valid and timely call for a wide and rich variety of post-publication metrics -- in place of just the unitary journal average (the "journal impact factor") -- were coupled with an ill-considered call for collapsing the planet's wide and rich variety of peer-reviewed journals and their respective independent, established quality levels onto some sort of global, generic pass/fail system.

Differential Quality Tags. There is an idea afoot that peer review is just some sort of generic pass/fail grade for "publishability," and that the rest is a matter of post-publication evaluation. I think this is incorrect, and represents a misunderstanding of the actual function that peer review is currently performing. It is not a 0/1, publishable/unpublishable threshold. There are many different quality levels, and they get more exacting and selective in the higher quality journals (which also have higher-quality and more exacting referees and refereeing). Users need these differential quality tags when they are trying to decide whether newly published work is worth taking the time to read and making the effort and risk to try to build upon (at the quality level of their own work).

User/Author/Referee Experience. I think both authors and users have a good idea of the quality levels of the journals in their fields -- not from the journals' impact factors, but from their content, and their track-records for content. As users, researchers read articles in their journals; as authors they write for those journals, and revise for their referees; and as referees they referee for them. They know that all journals are not equal, and that peer review can be done at a whole range of quality levels.

Metrics As Substitutes for User/Author/Referee Experience? Is there any substitute for this direct experience with journals (as users, authors and referees) in order to know what their peer-reviewing standards and quality level are? There is nothing yet, and no one can say yet whether there will ever be metrics as accurate as having read, written and refereed for the journals in question. Metrics might eventually provide an approximation, though we don't yet know how close, and of course they only come after publication (well after).

Quality Lapses? Journal track records, user experiences, and peer review itself are certainly not infallible either, however; the usually-higher-quality journals may occasionally publish a lower-quality article, and vice versa. But on average, the quality of the current articles should correlate well with the quality of past articles. Whether judgements of quality from direct experience (as user/author/referee) will ever be matched or beaten by multiple metrics, I cannot say, but I am pretty sure they are not matched or beaten by the journal impact factor.

Regression on the Generic Mean? And even if multiple metrics do become as good a joint predictor of journal article quality as user experience, it does not follow that peer-review can then be reduced to generic pass/fail, with the rest sorted by metrics, because (1) metrics are journal-level, not article-level (though they can also be author-level) and, more important still, (2) if journal-differences are flattened to generic peer review, entrusting the rest to metrics, then the quality of articles themselves will fall, as rigorous peer review does not just assign articles a differential grade (via the journal's name and track-record), but it improves them, through revision and re-refereeing. More generic 0/1 peer review, with less individual quality variation among journals, would just generate quality regression on the mean.

REFERENCES

Bollen J, Van de Sompel H, Hagberg A, Chute R (2009) A Principal Component Analysis of 39 Scientific Impact Measures. PLoS ONE 4(6): e6022. doi:10.1371/journal.pone.0006022

Brody, T., Harnad, S. and Carr, L. (2006) . Journal of the American Association for Information Science and Technology (JASIST) 57(8) pp. 1060-1072.

Garfield, E., (1955) Citation Indexes for Science: A New Dimension in Documentation through Association of Ideas. Science 122: 108-111

Harnad, S. (1979) Creative disagreement. The Sciences 19: 18 - 20.

Harnad, S. (ed.) (1982) Peer commentary on peer review: A case study in scientific quality control, New York: Cambridge University Press.

Harnad, S. (1984) Commentaries, opinions and the growth of scientific knowledge. American Psychologist 39: 1497 - 1498.

Harnad, Stevan (1985) Rational disagreement in peer review. Science, Technology and Human Values, 10 p.55-62.

Harnad, S. (1990) Scholarly Skywriting and the Prepublication Continuum of Scientific Inquiry Psychological Science 1: 342 - 343 (reprinted in Current Contents 45: 9-13, November 11 1991).

Harnad, S. (1986) Policing the Paper Chase. (Review of S. Lock, A difficult balance: Peer review in biomedical publication.) Nature 322: 24 - 5.

Harnad, S. (1996) Implementing Peer Review on the Net: Scientific Quality Control in Scholarly Electronic Journals. In: Peek, R. & Newby, G. (Eds.) Scholarly Publishing: The Electronic Frontier. Cambridge MA: MIT Press. Pp 103-118.

Harnad, S. (1997) Learned Inquiry and the Net: The Role of Peer Review, Peer Commentary and Copyright. Learned Publishing 11(4) 283-292.

Harnad, S. (1998/2000/2004) The invisible hand of peer review. Nature [online] (5 Nov. 1998), Exploit Interactive 5 (2000): and in Shatz, B. (2004) (ed.) Peer Review: A Critical Inquiry. Rowland & Littlefield. Pp. 235-242.

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8 (11) Special Issue: The Use And Misuse Of Bibliometric Indices In Evaluating Scholarly Performance

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1)

Shadbolt, N., Brody, T., Carr, L. and Harnad, S. (2006) The Open Research Web: A Preview of the Optimal and the Inevitable, in Jacobs, N., Eds. Open Access: Key Strategic, Technical and Economic Aspects. Chandos.

Merits of Metrics. Of course direct article and author citation counts are infinitely preferable to -- and more informative than -- just a journal average (the journal "impact factor").

"[R]eaders tend to navigate directly to the articles that are relevant to them, regardless of the journal they were published in... [T]here is a strong skew in the distribution of citations within a journal – typically, around 80% of the citations accrue to 20% of the articles... [W]hy then do researchers and their paymasters remain wedded to assessing individual articles by using a metric (the impact factor) that attempts to measure the average citations to a whole journal?

"We’d argue that it’s primarily because there has been no strong alternative. But now alternatives are beginning to emerge... focusing on articles rather than journals... [and] not confining article-level metrics to a single indicator... Citations can be counted more broadly, along with web usage, blog and media coverage, social bookmarks, expert/community comments and ratings, and so on...

"[J]udgements about impact and relevance can be left almost entirely to the period after publication. By peer-reviewing submissions purely for scientific rigour, ethical conduct and proper reporting before publication, articles can be assessed and published rapidly. Once articles have joined the published literature, the impact and relevance of the article can then be determined on the basis of the activity of the research community as a whole... [through] [a]rticle-level metrics and indicators..."

And yes, multiple post-publication metrics will be a great help in navigating, evaluating and analyzing research influence, importance and impact.
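The skew described in the quoted passage can be made concrete with a small sketch. The ten citation counts below are invented for illustration (chosen so that the top 20% of articles receive exactly 80% of the citations); the point is only how far a journal-level average can sit from the typical article it is used to assess.

```python
from statistics import mean, median


def top_share(citations, top_fraction=0.2):
    """Return the fraction of all citations received by the most-cited
    `top_fraction` of articles (at least one article is always counted)."""
    ranked = sorted(citations, reverse=True)
    k = max(1, round(len(ranked) * top_fraction))
    return sum(ranked[:k]) / sum(ranked)


# Hypothetical citation counts for the ten articles in one journal volume.
citations = [120, 40, 10, 8, 6, 5, 4, 3, 2, 2]

journal_average = mean(citations)    # what a journal-level average reports
typical_article = median(citations)  # what the middle article actually receives
below_average = sum(1 for c in citations if c < journal_average)

print(f"journal average:        {journal_average:.1f}")
print(f"median article:         {typical_article}")
print(f"articles below average: {below_average} of {len(citations)}")
print(f"share to top 20%:       {top_share(citations):.0%}")
```

With these made-up numbers the journal average is 20.0 citations while the median article has 5.5, and 8 of the 10 articles fall below the average: the gap that article-level metrics are meant to close.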

But it is a great mistake to imagine that this implies that peer review can now be done on just a generic "pass/fail" basis.

Purpose of Peer Review. Not only is peer review dynamic and interactive -- improving papers before approving them for publication -- but the planet's 25,000 peer-reviewed journals also differ, not just in the subject matter they cover but also, within a given subject matter, (often quite substantially) in their respective quality standards and criteria.

It is extremely unrealistic (and would be highly dysfunctional, if it were ever made to come true) to suppose that these 25,000 journals are (or ought to be) flattened to provide a 0/1 pass/fail decision on publishability at some generic adequacy level, common to all refereed research.

Pass/Fail Versus Letter-Grades. Nor is it just a matter of switching all journals from assigning a generic pass/fail grade to each assigning its own letter grade (A-, B+, etc.), even though that is effectively what the current system of multiple, independent peer-reviewed journals provides. For not only do journals' peer-review standards and criteria differ, but so does the expertise of their respective "peers." Better journals have better and more exacting referees, exercising more rigorous peer review. (So the 25,000 peer-reviewed journals today cannot be thought of as one generic peer-review filter that accepts papers for publication in each field with grades between A+ and E; rather, there are A+ journals, B- journals, etc.: each established journal has its own independent standards, to which its submissions are answerable.)

Track Records and Quality Standards. And users know all this, from the established track records of the journals they consult as readers and publish in as authors. Whether or not we like to put it that way, this all boils down to selectivity across a Gaussian distribution of research quality in each field. There are highly selective journals that accept only the very best papers -- and even those often only after several rounds of rigorous refereeing, revision and re-refereeing. And there are less selective journals that impose less exacting standards -- all the way down to the fuzzy pass/fail threshold that distinguishes "refereed" journals from journals whose standards are so low that they are virtually vanity-press journals.
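Under the simplifying assumption that research quality in a field is normally distributed (standardized to mean 0, SD 1), a journal's selectivity translates directly into a quality cut. The sketch below uses Python's `statistics.NormalDist`; the acceptance fractions are hypothetical, chosen only to contrast a highly selective journal with progressively less selective ones.

```python
from statistics import NormalDist

quality = NormalDist(mu=0.0, sigma=1.0)  # assumed standardized quality distribution


def quality_cut(acceptance_fraction):
    """Quality threshold (in SD units) above which only `acceptance_fraction`
    of the field's papers lie."""
    if not 0.0 < acceptance_fraction < 1.0:
        raise ValueError("acceptance_fraction must be strictly between 0 and 1")
    return quality.inv_cdf(1.0 - acceptance_fraction)


# Hypothetical journals, from highly selective to barely above the refereed threshold.
for accepted in (0.05, 0.25, 0.50, 0.90):
    print(f"accepts top {accepted:.0%} -> quality cut at {quality_cut(accepted):+.2f} SD")
```

Under these assumptions a journal that accepts only the top 5% sets its cut roughly 1.64 SD above the field mean, while one accepting 90% sets it about 1.28 SD below. Real research quality is neither one-dimensional nor exactly normal, so this only illustrates the ordering the paragraph describes.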

Supplement Versus Substitute. This difference (and independence) among journals in terms of their quality standards is essential if peer review is to serve as the quality enhancer and filter that it is intended to be. Of course the system is imperfect, and for that reason alone (among many others) a rich diversity of post-publication metrics is an invaluable supplement to peer review. But such metrics are certainly no substitute for pre-publication peer review or, most importantly, for its quality triage.

Quality Distribution. So much research is published daily in most fields that on the basis of a generic 0/1 quality threshold, researchers simply cannot decide rationally or reliably what new research is worth the time and investment to read, use and try to build upon. Researchers and their work differ in quality too, and they are entitled to know a priori, as they do now, whether or not a newly published work has made the highest quality cut, rather than merely that it has met some default standards, after which users must wait for the multiple post-publication metrics to accumulate across time in order to be able to have a more nuanced quality assessment.

Rejection Rates. More nuanced sorting of new research is precisely what peer review is about, and for, especially at the highest quality levels. Although authors (knowing the quality track-records of their journals) mostly self-select, submitting their papers to journals whose standards are roughly commensurate with the papers' quality, the underlying correlate of a journal's refereeing standards is basically its relative rejection rate: What percentage of a year's papers in its designated subject matter would meet its standards (if all were submitted to that journal, and the only constraint on acceptance were the quality level of the article, not how many articles the journal could manage to referee and publish per year)?
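The thought experiment in that question can be written down directly: pool every paper a field produces in a year, apply one journal's standard to all of them, and ignore capacity limits. The quality scores and the two standards below are hypothetical, and scoring a paper with a single number is itself a simplifying assumption.

```python
def notional_rejection_rate(field_papers, meets_standard):
    """Fraction of all of a field's papers for the year that would fail a
    journal's standard if every paper were submitted to it and quality,
    not refereeing or page capacity, were the only constraint."""
    if not field_papers:
        raise ValueError("need at least one paper")
    rejected = sum(1 for q in field_papers if not meets_standard(q))
    return rejected / len(field_papers)


# Hypothetical quality scores (0-100) for one year's papers in a small field.
year_papers = [91, 84, 77, 72, 68, 63, 58, 55, 49, 41, 37, 30]

# Two hypothetical journals with different standards.
selective = notional_rejection_rate(year_papers, lambda q: q >= 80)
lenient = notional_rejection_rate(year_papers, lambda q: q >= 40)

print(f"selective journal would reject {selective:.0%}")
print(f"lenient journal would reject {lenient:.0%}")
```

Here the selective journal would reject 10 of the 12 papers and the lenient one 2 of 12; the notional rate depends only on the standard, not on how many papers were actually submitted.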

Quality Ranges. This independent standard-setting by journals effectively ranges the 25,000 titles along a rough letter-grade continuum within each field, and their "grades" are roughly known by authors and users, from the journals' track-records for quality.

Quality Differential. Making peer review generic and entrusting the rest to post-publication metrics would wipe out that differential quality information for new research, and force researchers at all levels to risk pot-luck with newly published research (until and unless enough time has elapsed to sort out the rest of the quality variance with post-publication metrics). Among other things, this would effectively slow down instead of speeding up research progress.
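A sketch of what the differential tags carry, again assuming (hypothetically) standard-normal quality and made-up tier boundaries: with only a generic pass/fail threshold, every accepted paper carries the same expected quality, whereas tiered journals tag papers with different expected qualities. The mean of a standard-normal variable restricted to an interval (a, b) is (phi(a) - phi(b)) / (Phi(b) - Phi(a)), computed here with `statistics.NormalDist`.

```python
from math import inf
from statistics import NormalDist

std = NormalDist()  # assumed: quality ~ N(0, 1) across a field's papers


def expected_quality(lo, hi=inf):
    """Mean of a standard-normal quality score restricted to lo < q < hi."""
    pdf_lo = std.pdf(lo)
    pdf_hi = 0.0 if hi == inf else std.pdf(hi)
    cdf_hi = 1.0 if hi == inf else std.cdf(hi)
    return (pdf_lo - pdf_hi) / (cdf_hi - std.cdf(lo))


generic_cut = -1.0  # hypothetical single pass/fail threshold for "refereed"

# Hypothetical tier boundaries (SD units) for journals of increasing selectivity.
tiers = {"C": (-1.0, 0.0), "B": (0.0, 1.0), "A": (1.0, inf)}

print(f"generic pass: expected quality {expected_quality(generic_cut):+.2f}")
for grade, (lo, hi) in tiers.items():
    print(f"tier {grade}:       expected quality {expected_quality(lo, hi):+.2f}")
```

Under these assumptions a generic pass tells a reader only that an accepted paper's expected quality is about +0.29 SD, whereas the tier tags separate roughly -0.46, +0.46 and +1.53 SD: the differential information that would otherwise have to wait for post-publication metrics.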

Turn-Around Time. Of course pre-publication peer review takes time too; but if its result is that it pre-sorts the quality of new publications in terms of known, reliable letter-grade standards (the journals' names and track-records), then it's time well spent. Offloading that dynamic pre-filtering function onto post-publication metrics, no matter how rich and plural, would greatly handicap research usability and progress, and especially at its all-important highest quality levels.

More Value From Post-Publication Metrics Does Not Entail Less Value From Pre-Publication Peer Review. It would be ironic if today's eminently valid and timely call for a wide and rich variety of post-publication metrics -- in place of just the unitary journal average (the "journal impact factor") -- were coupled with an ill-considered call for collapsing the planet's wide and rich variety of peer-reviewed journals and their respective independent, established quality levels onto some sort of global, generic pass/fail system.

Differential Quality Tags. There is an idea afoot that peer review is just some sort of generic pass/fail grade for "publishability," and that the rest is a matter of post-publication evaluation. I think this is incorrect, and represents a misunderstanding of the actual function that peer review is currently performing. It is not a 0/1, publishable/unpublishable threshold. There are many different quality levels, and they get more exacting and selective in the higher quality journals (which also have higher-quality and more exacting referees and refereeing). Users need these differential quality tags when they are trying to decide whether newly published work is worth the time to read, and the effort and risk of trying to build upon (at the quality level of their own work).

User/Author/Referee Experience. I think both authors and users have a good idea of the quality levels of the journals in their fields -- not from the journals' impact factors, but from their content, and their track-records for content. As users, researchers read articles in their journals; as authors they write for those journals, and revise for their referees; and as referees they referee for them. They know that not all journals are equal, and that peer review can be done at a whole range of quality levels.

Metrics As Substitutes for User/Author/Referee Experience? Is there any substitute for this direct experience with journals (as users, authors and referees) in order to know what their peer-reviewing standards and quality level are? There is nothing yet, and no one can say yet whether there will ever be metrics as accurate as having read, written and refereed for the journals in question. Metrics might eventually provide an approximation, though we don't yet know how close, and of course they only come after publication (well after).

Quality Lapses? Journal track records, user experience, and peer review itself are of course not infallible: usually-higher-quality journals may occasionally publish a lower-quality article, and vice versa. But on average, the quality of current articles should correlate well with the quality of past articles. Whether judgements of quality from direct experience (as user/author/referee) will ever be matched or beaten by multiple metrics, I cannot say, but I am pretty sure they are not matched or beaten by the journal impact factor.

Regression to the Generic Mean? And even if multiple metrics do become as good a joint predictor of journal article quality as user experience, it does not follow that peer review can then be reduced to generic pass/fail, with the rest sorted by metrics, because (1) metrics are journal-level, not article-level (though they can also be author-level) and, more important still, (2) if journal differences are flattened to generic peer review, entrusting the rest to metrics, then the quality of the articles themselves will fall, because rigorous peer review does not just assign articles a differential grade (via the journal's name and track-record) but improves them, through revision and re-refereeing. More generic 0/1 peer review, with less individual quality variation among journals, would just generate quality regression toward the mean.

REFERENCES

Bollen, J., Van de Sompel, H., Hagberg, A. and Chute, R. (2009) A Principal Component Analysis of 39 Scientific Impact Measures. PLoS ONE 4(6): e6022. doi:10.1371/journal.pone.0006022

Brody, T., Harnad, S. and Carr, L. (2006) . Journal of the American Society for Information Science and Technology (JASIST) 57(8): 1060-1072.

Garfield, E. (1955) Citation Indexes for Science: A New Dimension in Documentation through Association of Ideas. Science 122: 108-111.

Harnad, S. (1979) Creative disagreement. The Sciences 19: 18-20.

Harnad, S. (ed.) (1982) Peer commentary on peer review: A case study in scientific quality control. New York: Cambridge University Press.

Harnad, S. (1984) Commentaries, opinions and the growth of scientific knowledge. American Psychologist 39: 1497-1498.

Harnad, S. (1985) Rational disagreement in peer review. Science, Technology and Human Values 10: 55-62.

Harnad, S. (1986) Policing the Paper Chase. (Review of S. Lock, A difficult balance: Peer review in biomedical publication.) Nature 322: 24-25.

Harnad, S. (1990) Scholarly Skywriting and the Prepublication Continuum of Scientific Inquiry. Psychological Science 1: 342-343 (reprinted in Current Contents 45: 9-13, November 11, 1991).

Harnad, S. (1996) Implementing Peer Review on the Net: Scientific Quality Control in Scholarly Electronic Journals. In: Peek, R. & Newby, G. (Eds.) Scholarly Publishing: The Electronic Frontier. Cambridge MA: MIT Press. Pp. 103-118.

Harnad, S. (1997) Learned Inquiry and the Net: The Role of Peer Review, Peer Commentary and Copyright. Learned Publishing 11(4): 283-292.

Harnad, S. (1998/2000/2004) The invisible hand of peer review. Nature [online] (5 Nov. 1998); Exploit Interactive 5 (2000); and in Shatz, D. (ed.) (2004) Peer Review: A Critical Inquiry. Rowman & Littlefield. Pp. 235-242.

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8(11). Special Issue: The Use And Misuse Of Bibliometric Indices In Evaluating Scholarly Performance.

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79(1).

Shadbolt, N., Brody, T., Carr, L. and Harnad, S. (2006) The Open Research Web: A Preview of the Optimal and the Inevitable. In: Jacobs, N. (Ed.) Open Access: Key Strategic, Technical and Economic Aspects. Chandos.

Call to Register Universities' Open Access Mandates in ROARMAP

ROAR is the Registry of Open Access Repositories

ROARMAP is the Registry of Open Access Repository Material Archiving Policies

The purpose of ROARMAP is to register and record the open-access policies of those institutions and funders who are putting the principle of Open Access (as expressed by the Budapest Open Access Initiative and the Berlin Declaration) into practice as recommended by Berlin 3 (as well as the UK Government Science and Technology Committee).

Universities, research institutions and research funders:

If you have adopted a mandate to provide open access to your own peer-reviewed research output, you are invited to click here to register and describe your mandate in ROARMAP. (For suggestions about the form of policy to adopt, see here.)

Registering your OA mandate in ROARMAP will:

(1) record your own institution's commitment to providing open access to its own research output,

(2) help the research community measure its progress (see Figure below, courtesy of Alma Swan and Oasis) in providing open access worldwide, and

(3) encourage further institutions to adopt open-access mandates (so that your own institution's users can have access to the research output of other institutions as well).

Sample Institutional Self-Archiving Mandate

"For the purposes of institutional record-keeping, research asset management, and performance-evaluation, and in order to maximize the visibility, accessibility, usage and impact of our institution's research output, our institution's researchers are henceforth to deposit the final, peer-reviewed, accepted drafts of all their journal articles (and accepted theses) into our institution's institutional repository immediately upon acceptance for publication."

Arabic (please return to English version to sign) [Many thanks to Chawki Hajjem for the translation]

Chinese (please return to English version to sign) [Many thanks to Chu Jingli for the translation]

French (please return to English version to sign) [Many thanks to H. Bosc.]

German (please sign in the English version) [Many thanks to K. Mruck.]

Hebrew (please return to English version to sign) [Many thanks to Miriam Faber and Malka Cymblista for the translation]

Italian (please return to English version to sign) [Many thanks to Susanna Mornati for the translation]

Japanese (please return to English version to sign) [Many thanks to Koichi Ojiro for the translation]

Russian (please return to English version to sign) [Many thanks to Elena Kulagina for the translation]

Spanish (see also) (please return to English version to sign) [Many thanks to Hector F. Rucinque for the Spanish translation]

Wednesday, July 22. 2009

Hungary's First Green OA Mandate; Planet's 93rd: OTKA

HUNGARY funder-mandate

Hungarian Scientific Research Fund (OTKA)

Institution's/Department's OA Eprint Archives: [growth data]

Institution's/Department's OA Self-Archiving Policy:

Added by: Dr. Elod Nemerkenyi (Assistant for International Affairs) nemerkenyi.elod AT otka.hu on 22 Jul 2009

All scientific publications resulting from support by an OTKA grant are required to be made available for free according to the standards of Open Access, either through providing the right of free access during publication or through depositing the publication in an open access repository. The deposit can be in any institutional or disciplinary repository, as well as in the Repository of the Library of the Hungarian Academy of Sciences – REAL: http://real.mtak.hu/

Tuesday, July 21. 2009

UK's 24th Green Open Access Mandate, Planet's 92nd: Coventry University

OA Self-Archiving Policy: Coventry University: Department of Media and Communication

Coventry University: Department of Media and Communication

(UK departmental-mandate)

http://www.coventry.ac.uk/cu/schoolofartanddesign/mediaandcommunication

Institution's/Department's OA Eprint Archives

[growth data] https://curve.coventry.ac.uk/cu/logon.do

CURVE, which stands for Coventry University Repository Virtual Environment, is Coventry University's existing institutional repository.

Institution's/Department's OA Self-Archiving Policy

Open Access Self-Archiving Policy: Coventry University, Department of Media and Communication

This Open Access Self-Archiving Policy requires all researchers in the Department of Media and Communication at Coventry University to deposit copies of their research outputs in CURVE (which stands for Coventry University Repository Virtual Environment), in order to make these outputs freely accessible and easily discoverable online, and so increase the visibility, dissemination, usage and impact of the Department’s research. This Open Access Self-Archiving Policy will be mandatory from 1st September 2009 onwards.

The Department’s Open Access Self-Archiving Policy makes it obligatory for each researcher in the Department to supply an electronic copy of the author’s final version of all peer-reviewed research outputs for deposit in CURVE immediately upon their acceptance for publication.

The policy also endorses the depositing in CURVE of an electronic copy of the author’s final accepted version of all non-peer reviewed research outputs, especially those that are likely to contribute to any future REF, as well as of research outputs published before the introduction of this policy.

Researchers will make these research outputs available for deposit in CURVE together with the relevant bibliographic metadata (name of author, title of publication, date and place of publication and so on). When doing so they will indicate whether a particular research output can be made publicly visible.

Where it is possible to do so all researchers in the Department of Media and Communication at Coventry University are required to designate outputs deposited in CURVE as being publicly available Open Access. This will enable the full text of the output and the associated metadata to be easily found, accessed, indexed and searched across a range of global search engines, archives and databases.

In those instances where it is not possible to do so – because it is necessary to comply with the legal requirements of a publisher’s or funder’s copyright policy or licensing agreement, for example - researchers can define outputs deposited in CURVE as being ‘closed access’ and for use only within Coventry University as an aid to the administration, management and reporting of research activity. In such cases only the metadata of the research output will be visible publicly, with Open Access to the full text being delayed for that period specified by the publisher or funder, often in the form of an eighteen, twelve or (preferably, at most) six month embargo. The full text of the output can then be made publicly available under Open Access conditions at a later date, immediately the period of the embargo has come to an end or permission to do so has otherwise been granted.
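The closed-then-open deposit described in the paragraph above can be sketched as a small date calculation. Everything here is an assumption for illustration: the helper names are hypothetical (they are not part of CURVE or any repository software), and the embargo is assumed to run from the publication date.

```python
import calendar
from datetime import date


def add_months(start, months):
    """Return the same day `months` later, clamped to the end of shorter months."""
    month_index = start.month - 1 + months
    year, month = start.year + month_index // 12, month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def full_text_visibility(published, embargo_months, today):
    """Hypothetical visibility rule: metadata is always public; the full text is
    closed (internal use only) until the embargo ends, then Open Access."""
    if embargo_months <= 0:
        return "open"
    release = add_months(published, embargo_months)
    return "open" if today >= release else f"metadata only until {release.isoformat()}"


published = date(2009, 9, 15)  # hypothetical publication date
for months in (18, 12, 6, 0):
    print(months, full_text_visibility(published, months, today=date(2010, 6, 1)))
```

Deposit still happens immediately on acceptance in this sketch; only the public visibility of the full text waits on the embargo, which is why shorter embargoes (six months at most, as the policy prefers) open the text sooner.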

To keep such cases to an absolute minimum, from 1st September 2009 researchers in the Department of Media and Communication at Coventry University are expected, as much as is possible and appropriate, to avoid signing copyright or licensing agreements that do not allow electronic copies of the author’s final, peer reviewed and accepted version of their research outputs to be deposited in an institutional Open Access repository such as CURVE.

17 July 2009

Sunday, July 19. 2009

Science Magazine: Letters About the Evans & Reimer Open Access Study

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

Five months after the fact, this week's Science Magazine has just published four letters and a response about Evans & Reimer's Open Access and Global Participation in Science, Science 20 February 2009: 1025. You might also want to take a peek at three more detailed critiques that Science did not publish:

"Open Access Benefits for the Developed and Developing World: The Harvards and the Have-Nots" (Stevan Harnad)

"The Evans & Reimer OA Impact Study: A Welter of Misunderstandings"

"Perils of Press-Release Journalism: NSF, U. Chicago, and Chronicle of Higher Education"

American Scientist Open Access Forum

The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.

The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)

You can sign on to the Forum here.
