
Wednesday, June 18. 2008

OA in Canada June 2008: ELPUB (Toronto) & AAUP (Montreal)

ELPUB 2008, with the theme of Open Scholarship, meets in Toronto, Canada, June 25-27 2008.

Leslie Chan (U. Toronto) is Conference Chair.

Les Carr of U. Southampton/EPrints will conduct a workshop on

Repositories that Support Research Management

John Willinsky (UBC & Stanford) will deliver the opening keynote:

The Quality of Open Scholarship: What Follows from Open?

Stevan Harnad (UQAM & Southampton) will deliver the closing keynote:

Filling OA Space At Long Last: Integrating University and Funder Mandates and Metrics.

The Association of American University Presses (AAUP) meets in Montreal 26-28 June.

Plenary Session:

Open Access: From the Budapest Open to Harvard's Addendum

Moderated by Sandy Thatcher (Director, Penn State University Press) with presentations by Stevan Harnad (UQAM & Southampton) and Stanley Katz (Princeton).

Sunday, June 15. 2008

Citation Statistics: International Mathematical Union Report

SUMMARY: The IMU's cautions are welcome: Research metrics do need to be validated; they need to be multiple, rather than a single, unidimensional index; they need to be separately validated for each discipline, and the weights on the multiple metrics need to be calibrated and adjusted both for the discipline being assessed and for the properties on which it is being ranked. The RAE 2008 database provides the ideal opportunity to do all this discipline-specific validation and calibration, because it is providing parallel data from both peer panel rankings and metrics. The metrics, however, should be as rich and diverse as possible, to capitalize on this unique opportunity for joint validation. Open Access not only provides more metrics; it also augments them, as well as providing open safeguards against manipulation and misuse.

Charles Oppenheim wrote (in the American Scientist Open Access Forum):

Numbers with a number of problems

International Mathematical Union Citation Statistics Report. Robert Adler, John Ewing (Chair), Peter Taylor

CHARLES OPPENHEIM: "I've now read the whole report. Yes, it tilts at the usual windmills, and rightly dismisses the use of impact factors for anything but crude comparisons, but it fails to address the fundamental issue, which is: citation and other metrics correlate superbly with subjective peer review. Both methods have their faults, but they are clearly measuring the same (or closely related) things. Ergo, if you have to evaluate research in some way, there is no reason NOT to use them! It also keeps referring to examples from the field of maths, which is a very strange subject citation-wise."

I have now read the IMU report too, and agree with Charles that it makes many valid points, but it misunderstands the one fundamental point concerning the question at hand: Can and should metrics be used in place of peer-panel based rankings in the UK Research Assessment Exercise (RAE) and its successors and homologues elsewhere? And there the answer is a definite Yes.

The IMU critique points out that research metrics in particular and statistics in general are often misused, and this is certainly true. It also points out that metrics are often used without validation. This too is correct. There is also a simplistic tendency to try to use one single metric, rather than multiple metrics that can complement and correct one another. There too, a practical and methodological error is correctly pointed out. It is also true that the "journal impact factor" has many flaws, and should on no account be used to rank individual papers or researchers, and especially not alone, as a single metric.

But what all this valuable, valid cautionary discussion overlooks is not only the possibility but the empirically demonstrated fact that there exist metrics that are highly correlated with human expert rankings. It follows that to the degree that such metrics account for the same variance, they can substitute for the human rankings. The substitution is desirable, because expert rankings are extremely costly in terms of expert time and resources. Moreover, a metric that can be shown to be highly correlated with an already validated predictor variable (such as expert rankings) thereby itself becomes a validated predictor variable. And this is why the answer to the basic question of whether the RAE's decision to convert to metrics was a sound one is: Yes.

Nevertheless, the IMU's cautions are welcome: Metrics do need to be validated; they do need to be multiple, rather than a single, unidimensional index; they do have to be separately validated for each discipline, and the weights on the multiple metrics need to be calibrated and adjusted both for the discipline being assessed and for the properties on which it is being ranked. The RAE 2008 database provides the ideal opportunity to do all this discipline-specific validation and calibration, because it is providing parallel data from both peer panel rankings and metrics. The metrics, however, should be as rich and diverse as possible, to capitalize on this unique opportunity for joint validation.

Here are some comments on particular points in the IMU report. (All quotes are from the report):

IMU: "The meaning of a citation can be even more subjective than peer review."

True. But if there is a non-metric criterion measure -- such as peer review -- on which we already rely, then metrics can be cross-validated against that criterion measure, and this is exactly what the RAE 2008 database makes it possible to do, for all disciplines, at the level of an entire sizeable nation's total research output...

IMU: "The sole reliance on citation data provides at best an incomplete and often shallow understanding of research -- an understanding that is valid only when reinforced by other judgments."

This is correct. But the empirical fact has turned out to be that a department's total article/author citation counts are highly correlated with its peer rankings in the RAE in every discipline tested. This does not mean that citation counts are the only metric that should be used, or that they account for 100% of the variance in peer rankings. But it is strong evidence that citation counts should be among the metrics used, and it constitutes a (pairwise) validation.
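A minimal sketch of such a pairwise check, assuming the criterion is a numeric peer-panel score per department and the predictor is each department's total citation count. It uses a Spearman rank correlation (the Pearson correlation of average ranks), a common choice for rank data; the function names and the five-department figures are hypothetical illustrations, not RAE data.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical figures for five departments in one discipline.
citations = [120, 340, 95, 410, 210]
peer_scores = [2, 4, 1, 5, 3]
print(round(spearman(citations, peer_scores), 3))  # 1.0
```

Here the two orderings agree exactly, so the coefficient is 1; real data will be noisier, and testing the coefficient for statistical significance is a separate step not shown.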

IMU: "Using the impact factor alone to judge a journal is like using weight alone to judge a person's health." ... "For papers, instead of relying on the actual count of citations to compare individual papers, people frequently substitute the impact factor of the journals in which the papers appear."

As noted, this is a foolish error if the journal impact factor is used alone, but it may enhance predictivity and hence validity if added to a battery of jointly validated metrics.

IMU: "The validity of statistics such as the impact factor and h-index is neither well understood nor well studied."

The h-index and its variants were created ad hoc, without validation. They turn out to be highly correlated with citation counts (for obvious reasons, since they are in part based on them). Again, they are all welcome in a battery of metrics to be jointly cross-validated against peer rankings or other already-validated or face-valid metrics.
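For readers unfamiliar with it, the h-index is the largest number h such that h of an author's papers have each been cited at least h times. The Python sketch below implements that definition (the function name and sample counts are illustrative); it is computed entirely from citation counts, which is why its high correlation with them is unsurprising.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    h = 0
    # Walk papers from most to least cited; position is the candidate h.
    for position, count in enumerate(sorted(citations, reverse=True), start=1):
        if count >= position:
            h = position
        else:
            break
    return h


# Hypothetical citation counts for one author's five papers.
print(h_index([10, 8, 5, 4, 3]))  # 4
```

Very different records can share the same h: [4, 4, 4, 4] and [400, 50, 9, 4] both give 4, one reason a single number is a poor summary on its own.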

IMU: "...citation data provide only a limited and incomplete view of research quality, and the statistics derived from citation data are sometimes poorly understood and misused."

It is certainly true that there are many more potential metrics of research performance (productivity, impact and quality) than just citation metrics (e.g., download counts, student counts, research funding). They should all be jointly validated, discipline by discipline, and each metric should be weighted according to what percentage of the criterion variance (e.g., RAE 2008 peer rankings) it predicts.

IMU: "...relying primarily on metrics (statistics) derived from citation data rather than a variety of methods, including judgments by scientists themselves..."

The whole point is to cross-validate the metrics against the peer judgments, and then use the weighted metrics in place of the peer judgments, in accordance with their validated predictive power.

IMU: "...bibliometrics (using counts of journal articles and their citations) will be a central quality index in this system [RAE]"

Yes, but it is not yet clear which metrics the RAE's successor will use, or whether and how it will validate them. There is still some risk that a small number of metrics will simply be picked a priori, without systematic validation. It is to be hoped that the IMU critique, along with other critiques and recommendations, will result in the use of the 2008 parallel metric/peer data for a systematic and exhaustive cross-validation exercise, separately for each discipline. Future assessments can then use the metric battery, with initialized weights (specific to each discipline), and can calibrate and optimize them across the years, as more data accumulates -- including spot-checks cross-validating periodically against "light-touch" peer rankings and other validated or face-valid measures.

IMU: "...sole reliance on citation-based metrics replaces one kind of judgment with another. Instead of subjective peer review one has the subjective interpretation of a citation's meaning."

Correct. This is why multiple metrics are needed, and why they need to be systematically cross-validated against already-validated or face-valid criteria (such as peer judgment).

IMU: "Research usually has multiple goals, both short-term and long, and it is therefore reasonable that its value must be judged by multiple criteria."

Yes, and this means multiple, validated metrics. (Time-course parameters, such as growth and decay rates of download, citation and other metrics, are themselves metrics.)

IMU: "...many things, both real and abstract, that cannot be simply ordered, in the sense that each two can be compared"

Yes, we should not compare the incomparable and incommensurable. But whatever we are already comparing, by other means, can be used to cross-validate metrics. (And of course it should be done discipline by discipline, and sometimes even by sub-discipline, rather than by treating all research as if it were of the same kind, with the same metrics and weights.)

IMU: "...plea to use multiple methods to assess the quality of research"

Valid plea, but the multiple "methods" means multiple metrics, to be tested for reliability and validity against already validated methods.

IMU: "Measures of esteem such as invitations, membership on editorial boards, and awards often measure quality. In some disciplines and in some countries, grant funding can play a role. And peer review -- the judgment of fellow scientists -- is an important component of assessment."

These are all sensible candidate metrics to be included, alongside citation and other candidate metrics, in the multiple regression equation to be cross-validated jointly against already validated criteria, such as peer rankings (especially in RAE 2008).

IMU: "...lure of a simple process and simple numbers (preferably a single number) seems to overcome common sense and good judgment."

Validation should definitely be done with multiple metrics, jointly, using multiple regression analysis, not with a single metric, and not one at a time.
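The joint validation proposed here can be sketched as an ordinary least-squares multiple regression of the criterion (peer-panel scores for one discipline) on a battery of candidate metrics. The sketch assumes NumPy is available; the function names, the three metrics and the synthetic data are hypothetical. R² reports the share of criterion variance the battery accounts for, and the fitted weights are what a later assessment could reuse as initialized weights.

```python
import numpy as np


def fit_metric_weights(metrics, peer_scores):
    """Least-squares fit of peer scores on a battery of candidate metrics.

    metrics: array of shape (n_departments, n_metrics).
    peer_scores: array of shape (n_departments,), the criterion.
    Returns (intercept, weights, r_squared).
    """
    metrics = np.asarray(metrics, dtype=float)
    peer_scores = np.asarray(peer_scores, dtype=float)
    # Prepend a column of ones so the model has an intercept term.
    design = np.column_stack([np.ones(len(peer_scores)), metrics])
    coef, *_ = np.linalg.lstsq(design, peer_scores, rcond=None)
    residuals = peer_scores - design @ coef
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((peer_scores - peer_scores.mean()) ** 2)
    return coef[0], coef[1:], 1.0 - ss_res / ss_tot


def predict_scores(intercept, weights, metrics):
    """Apply previously fitted (initialized) weights to a later year's metrics."""
    return intercept + np.asarray(metrics, dtype=float) @ weights


# Synthetic example: 200 departments, three standardized metrics
# (say citations, downloads, funding), with made-up "true" weights.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 3))
y = X @ np.array([0.6, 0.3, 0.1]) + 0.1 * rng.standard_normal(200)
intercept, weights, r2 = fit_metric_weights(X, y)
print(np.round(weights, 2), round(r2, 2))
```

Standardizing each metric first (as the synthetic metrics already are) puts the weights on a comparable scale. The fit would be run separately for each discipline; when metrics are strongly inter-correlated, individual weights become unstable even though the battery's overall R² stays well defined.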

IMU: "...special citation culture of mathematics, with low citation counts for journals, papers, and authors, makes it especially vulnerable to the abuse of citation statistics."

Metric validation and weighting should be done separately, field by field.

IMU: "For some fields, such as bio-medical sciences, this is appropriate because most published articles receive most of their citations soon after publication. In other fields, such as mathematics, most citations occur beyond the two-year period."

Chronometrics -- growth and decay rates and other time-based parameters for downloads, citations and other time-based, cumulative measures -- should be among the battery of candidate metrics for validation.
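As one hedged illustration of a chronometric, the sketch below fits an exponential growth or decay rate to yearly citation (or download) counts by least squares on their logarithms, and converts a decay rate into a half-life. The exponential model and the function names are assumptions for illustration; real citation curves usually rise before they decay, so one would fit only the relevant segment.

```python
import math


def exponential_rate(counts):
    """Fit counts[t] ~ A * exp(rate * t), t = 0, 1, 2, ..., by least squares on log(counts).

    A positive rate means growth; a negative rate means decay.
    """
    if len(counts) < 2 or any(c <= 0 for c in counts):
        raise ValueError("need at least two strictly positive counts")
    logs = [math.log(c) for c in counts]
    n = len(counts)
    mean_t = (n - 1) / 2  # mean of 0, 1, ..., n - 1
    mean_log = sum(logs) / n
    num = sum((t - mean_t) * (y - mean_log) for t, y in enumerate(logs))
    den = sum((t - mean_t) ** 2 for t in range(n))
    return num / den


def half_life(rate):
    """Years for a decaying count to halve; rate must be negative."""
    return math.log(2) / -rate


# Hypothetical yearly citations after a paper's peak year.
rate = exponential_rate([80, 40, 20, 10])
print(round(half_life(rate), 2))  # 1.0
```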

IMU: "The impact factor varies considerably among disciplines... The impact factor can vary considerably from year to year, and the variation tends to be larger for smaller journals."

All true. Hence the journal impact factor -- perhaps with various time constants -- should be part of the battery of candidate metrics, not simply used a priori.
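The conventional journal impact factor for a census year divides the citations received that year by items the journal published in the two preceding years by the number of citable items it published in those years. A minimal sketch with the window as a parameter, so that other time constants can be compared, follows; the data layout, function name and figures are hypothetical.

```python
def impact_factor(census_year, items_by_year, cites_in_census_year, window=2):
    """Window-year impact factor for one journal.

    items_by_year: {publication_year: number of citable items published}
    cites_in_census_year: {publication_year: citations received during
        census_year by items published in publication_year}
    window=2 gives the conventional two-year impact factor.
    """
    years = range(census_year - window, census_year)
    items = sum(items_by_year.get(y, 0) for y in years)
    cites = sum(cites_in_census_year.get(y, 0) for y in years)
    if items == 0:
        raise ValueError("no citable items in the window")
    return cites / items


# Hypothetical journal: items published per year, and citations received in 2008.
items = {2005: 50, 2006: 40, 2007: 60}
cites_2008 = {2005: 30, 2006: 120, 2007: 60}
print(impact_factor(2008, items, cites_2008))            # 1.8
print(impact_factor(2008, items, cites_2008, window=3))  # 1.4
```

The same journal scores differently depending on the window chosen, which is one reason the window itself is better treated as a parameter to be validated than fixed a priori.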

IMU: "The most important criticism of the impact factor is that its meaning is not well understood. When using the impact factor to compare two journals, there is no a priori model that defines what it means to be 'better'. The only model derives from the impact factor itself -- a larger impact factor means a better journal... How does the impact factor measure quality? Is it the best statistic to measure quality? What precisely does it measure? Remarkably little is known..."

And this is because the journal impact factor (like most other metrics) has not been cross-validated against face-valid criteria, such as peer rankings.

IMU: "...employing other criteria to refine the ranking and verify that the groups make sense"

In other words, systematic cross-validation is needed.

IMU: "...impact factor cannot be used to compare journals across disciplines"

All metrics should be independently validated for each discipline.

IMU: "...impact factor may not accurately reflect the full range of citation activity in some disciplines, both because not all journals are indexed and because the time period is too short. Other statistics based on longer periods of time and more journals may be better indicators of quality. Finally, citations are only one way to judge journals, and should be supplemented with other information"

Chronometrics. And multiple metrics.

IMU: "The impact factor and similar citation-based statistics can be misused when ranking journals, but there is a more fundamental and more insidious misuse: Using the impact factor to compare individual papers, people, programs, or even disciplines"

Individual citation counts and other metrics: Multiple metrics, jointly validated.

IMU: "...the distribution of citation counts for individual papers in a journal is highly skewed, approximating a so-called power law... highly skewed distribution and the narrow window of time used to compute the impact factor"

To the extent that distributions are pertinent, they too can be parametrized and taken into account in validating metrics. Comparing like with like (e.g., discipline by discipline) should also help maximize comparability.
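To see why a journal-level average says little about an individual paper, the sketch below compares the mean (the kind of average an impact factor reports) with the median and with the top paper's share of all citations, for a small, hypothetical and deliberately skewed set of counts. The function name is illustrative.

```python
from statistics import mean, median


def skew_summary(citations):
    """Contrast the mean with the median and the top paper's share of citations."""
    total = sum(citations)
    return {
        "mean": mean(citations),
        "median": median(citations),
        "top_paper_share": max(citations) / total if total else 0.0,
    }


# Hypothetical citation counts for ten papers in one journal.
print(skew_summary([0, 0, 1, 1, 2, 2, 3, 4, 6, 81]))
```

Here the mean is 10 while the median is 2, and a single paper holds 81% of the citations, so most papers in this journal are cited far less than its average suggests.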

IMU: "...using the impact factor as a proxy for actual citation counts for individual papers"

No need to use one metric as a proxy for another. Jointly validate them all.

IMU: "...if you want to rank a person's papers using only citations to measure the quality of a particular paper, you must begin by counting that paper's citations. The impact factor of the journal in which the paper appears is not a reliable substitute."

Correct, but this obvious truth does not need to be repeated so many times, and it is an argument against single metrics in general, and against the journal impact factor as a single metric in particular. But there's nothing wrong with using it in a battery of metrics for validation.

IMU, on the h-index: "Hirsch extols the virtues of the h-index by claiming that 'h is preferable to other single-number criteria commonly used to evaluate scientific output of a researcher...' [Hirsch 2005, p. 1], but he neither defines 'preferable' nor explains why one wants to find 'single-number criteria.'... Much of the analysis consists of showing 'convergent validity,' that is, the h-index correlates well with other publication/citation metrics, such as the number of published papers or the total number of citations. This correlation is unremarkable, since all these variables are functions of the same basic phenomenon..."

The h-index is again a single metric. And cross-validation only works against either an already validated or a face-valid criterion, not just another unvalidated metric. And the only way multiple metrics, all inter-correlated, can be partitioned and weighted is with multiple regression analysis -- and once again against a criterion, such as peer rankings.

IMU: "Some might argue that the meaning of citations is immaterial because citation-based statistics are highly correlated with some other measure of research quality (such as peer review)."

Not only might some say it: Many have said it, and they are quite right. That means citation counts have been validated against peer review, pairwise. Now it is time to cross-validate an entire spectrum of candidate metrics, so each can be weighted for its predictive contribution.

IMU: "The conclusion seems to be that citation-based statistics, regardless of their precise meaning, should replace other methods of assessment, because they often agree with them. Aside from the circularity of this argument, the fallacy of such reasoning is easy to see."

The argument is circular only if unvalidated metrics are being cross-correlated with other unvalidated metrics. Then it's a skyhook. But when they are cross-validated against a criterion like peer rankings, which have been the predominant basis for the RAE for 20 years, they are being cross-validated against a face-valid criterion -- for which they can indeed be subsequently substituted, if the correlation turns out to be high enough.

IMU: "Damned lies and statistics"

Yes, one can lie with unvalidated metrics and statistics. But we are talking here about validating metrics against validated or face-valid criteria. In that case, the metrics lie no more (or less) than the criteria did, before the substitution.

IMU: "Several groups have pushed the idea of using Google Scholar to implement citation-based statistics, such as the h-index, but the data contained in Google Scholar is often inaccurate (since things like author names are automatically extracted from web postings)..."

This is correct. But Google Scholar's accuracy is growing daily, with growing content, and there are ways to triangulate author identity from such data even before the (inevitable) unique author identifier is adopted.

IMU: "Citation statistics for individual scientists are sometimes difficult to obtain because authors are not uniquely identified..."

True, but a good approximation is -- or will soon be -- possible (not for arbitrary search on the works of "Lee," but, for example, for all the works of all the authors in the UK university LDAPs).

IMU: "Citation counts seem to be correlated with quality, and there is an intuitive understanding that high-quality articles are highly-cited."

The intuition is replaced by objective data once the predictor metric's correlation with peer rankings of quality is demonstrated (and replaced in proportion to the share of the criterion variance that the metric accounts for).

IMU: "But as explained above, some articles, especially in some disciplines, are highly-cited for reasons other than high quality, and it does not follow that highly-cited articles are necessarily high quality."

This is why validation/weighting of metrics must be done separately, discipline by discipline, and why citation metrics alone are not enough: multiple metrics are needed to take into account multiple influences on quality and impact, and to weight them accordingly.

IMU: "The precise interpretation of rankings based on citation statistics needs to be better understood."

Once a sufficiently broad and predictive battery of metrics is validated and its weights initialized (e.g., in RAE 2008), further interpretation and fine-tuning can follow.

IMU: "In addition, if citation statistics play a central role in research assessment, it is clear that authors, editors, and even publishers will find ways to manipulate the system to their advantage."

True, but inasmuch as the new metric batteries will be Open Access, there will also be multiple metrics for detecting metric anomalies, inconsistency and manipulation, and for naming and shaming the manipulators, which will serve to control the temptation.
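One simple way open metrics could be screened for anomalies, sketched under stated assumptions: compare each department's value on some metric (the example uses a hypothetical self-citation share) with its discipline cohort using the modified z-score of Iglewicz and Hoaglin, which is based on the median and the median absolute deviation (MAD) and so is not itself dragged along by the outlier. The 3.5 cut-off is a conventional rule of thumb rather than a validated threshold, the function name is hypothetical, and a flag marks a case for human scrutiny, not proof of manipulation.

```python
from statistics import median


def flag_anomalies(values, threshold=3.5):
    """Indices whose modified z-score, 0.6745 * (x - median) / MAD, exceeds threshold."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # MAD is zero when most values coincide with the median;
        # fall back to flagging anything that differs from it.
        return [i for i, v in enumerate(values) if v != med]
    return [i for i, v in enumerate(values) if abs(0.6745 * (v - med) / mad) > threshold]


# Hypothetical self-citation shares for six departments in one discipline.
print(flag_anomalies([0.10, 0.12, 0.11, 0.09, 0.13, 0.60]))  # [5]
```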

Harnad, S. (2001) Research access, impact and assessment. Times Higher Education Supplement 1487: p. 16.

Harnad, S., Carr, L., Brody, T. & Oppenheim, C. (2003) Mandated online RAE CVs Linked to University Eprint Archives: Improving the UK Research Assessment Exercise whilst making it cheaper and easier. Ariadne 35.

Brody, T., Kampa, S., Harnad, S., Carr, L. and Hitchcock, S. (2003) Digitometric Services for Open Archives Environments. In: Proceedings of European Conference on Digital Libraries 2003, pp. 207-220, Trondheim, Norway.

Harnad, S. (2007) Open Access Scientometrics and the UK Research Assessment Exercise. In Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1), pp. 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds.

Brody, T., Carr, L., Harnad, S. and Swan, A. (2007) Time to Convert to Metrics. Research Fortnight pp. 17-18.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

Harnad, S. (2008) Self-Archiving, Metrics and Mandates. Science Editor 31(2) 57-59.

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8 (11) doi:10.3354/esep00088

Loet Leydesdorff wrote in the ASIS&T Special Interest Group on Metrics:

LL: "It seems to me that it is difficult to generalize from one setting in which human experts and certain ranks coincided to the existence of such correlations across the board. Much may depend on how the experts are selected. I did some research in which referee reports did not correlate with citation and publication measures."

Much may depend on how the experts are selected, but that was just as true during the 20 years in which rankings by experts were the sole criterion for the rankings in the UK Research Assessment Exercise (RAE). (In validating predictive metrics one must not endeavor to be Holier than the Pope: Your predictor can at best hope to be as good as, but not better than, your criterion.)

That said: All correlations to date between total departmental author citation counts (not journal impact factors!) and RAE peer rankings have been positive, sizable, and statistically significant for the RAE, in all disciplines and all years tested. Variance there will be, always, but a good-sized component from citations alone seems to be well-established. Please see the studies of Professor Oppenheim and others, for example as cited in:

Harnad, S., Carr, L., Brody, T. & Oppenheim, C. (2003) Mandated online RAE CVs Linked to University Eprint Archives: Improving the UK Research Assessment Exercise whilst making it cheaper and easier. Ariadne 35.

LL: "Human experts are necessarily selected from a population of experts, and it is often difficult to delineate between fields of expertise."

Correct. And the RAE rankings are done separately, discipline by discipline; the validation of the metrics should be done that way too.

Perhaps there is sometimes a case for separate rankings even at sub-disciplinary level. I expect the departments will be able to sort that out. (And note that the RAE correlations do not constitute a validation of metrics for evaluating individuals: I am confident that that too will be possible, but it will require many more metrics and much more validation.)

LL: "Similarly, we know from quite some research that citation and publication practices are field-specific and that fields are not so easy to delineate. Results may be very sensitive to choices made, for example, in terms of citation windows."

As noted, some of the variance in peer judgments will depend on the sample of peers chosen; that is unavoidable. That is also why "light touch" peer re-validation, spot-checks, updates and optimizations on the initialized metric weights are also a good idea, across the years.

As to the need to evaluate sub-disciplines independently: that question exceeds the scope of metrics and metric validation.

LL: "Thus, I am a bit doubtful about your claims of an 'empirically demonstrated fact'."

Within the scope mentioned -- the RAE peer rankings, for disciplines such as they have been partitioned for the past two decades -- there are ample grounds for confidence in the empirical results to date.

(And please note that this has nothing to do with journal impact factors, journal field classification, or journal rankings. It is about the RAE and the ranking of university departments by peer panels, as correlated with citation counts.)

Stevan Harnad

American Scientist Open Access Forum

Sunday, June 8. 2008

Would Gold OA Publishing Fees Compromise Peer-Reviewed Journal Quality?

SUMMARY: Some authors today no doubt try to buy their way into fee-based Gold OA journals, and some Gold OA journals that are short on authors no doubt lower their quality standards to win authors. But something very similar is already true of the lower-end subscription-based journals that prevail today, and this will continue to be true of lower-end journals if and when Gold OA becomes universal. The demand for quality, however, (by [some] authors, referees and users) will ensure that the existing journal quality hierarchy continues to exist, regardless of the cost-recovery model (whether user-institution subscription fees or author-institution peer-review fees). The high-quality authors will still want to publish in high-quality rather than low-quality journals, and journals will still need to strive to generate track-records as high-quality journals -- not just (1) to attract the high-quality authors and work, but (2) to retain the high-quality peer-reviewers and (3) to retain users. Usage will in turn be (4) openly tracked by rich OA impact metrics, which will complement peer perceptions of the journal's (and author's) track record for quality.

It is likely that some fee-based Gold OA journals today (while Gold OA publishing is the minority route, in competition with conventional subscription-based publishing, rather than universal) are in some cases compromising the rigor of peer review and hence the quality of the article and journal. However, journals have always differed in quality and rigor of peer review, and researchers have always known which were the high and low quality journals, based on the journals' (open!) track-records for quality.

If and when Gold OA publishing should ever become universal (for example, if and when universal Green OA self-archiving should ever put an end to the demand for the subscription version, thereby making subscriptions unsustainable and forcing a conversion to fee-based Gold OA publishing), then the very same research community standards and practices that today favor those subscription journals that have the track records for the highest quality standards will continue to ensure the same standards for the highest quality journals: The high-quality authors will still want to publish in high-quality rather than low-quality journals, and journals will still need to strive to generate track-records as high-quality journals -- not just (1) in order to attract the high-quality authors and work, but (2) in order to retain the high-quality peer-reviewers (who, after all, do their work voluntarily, not for a fee, and not in order to generate journal revenue, and who will not referee if their advice is ignored for the sake of generating more journal revenue, making journal quality low) as well as (3) in order to retain users (who, although they no longer need to subscribe in order to access the journal, will still be influenced by the quality of the journal in what they choose to access and use). Usage will in turn be (4) openly tracked by rich OA impact metrics, which will complement peer perceptions of the journal's (and author's) track record for quality. This will again influence author choice of journals.

So, in sum: Some authors today no doubt try to buy their way into fee-based Gold OA journals, and some Gold OA journals short on authors no doubt lower their quality standards to win authors. But something very similar is already true of the lower-end subscription-based journals that prevail today, and this will continue to be true of lower-end journals if and when Gold OA becomes universal. The demand for quality, however, (by [some] authors, referees and users) will ensure that the existing journal quality hierarchy continues to exist, regardless of the cost-recovery model (whether user-institution subscription fees or author-institution peer-review fees).

Moreover, the author, user and institutional demand for the canonical print edition is still strong today, and unlikely to be made unsustainable by authors' free final, refereed drafts any time soon, even as Green OA mandates gradually make them universal. And there are already, among Gold OA journals, high-end journals like the PLoS journals, that are maintaining the highest peer-review standards despite the fact that they need paying authors. So quality still trumps price, for authors as well as publishers, on the high-quality end. Not to mention that as more and more of traditional publishing functions (access-provision, archiving) are offloaded onto the growing worldwide network of Green OA Institutional Repositories, the price of publishing will shrink. (I think the cost of peer review alone will be about $200, especially if a submission fee of, say, $50, creditable toward the publishing fee if the paper is accepted, is levied on all submissions, to discourage low-probability nuisance submissions and to distribute the costs of peer review across all submissions, rejected as well as accepted.)
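The effect of a creditable submission fee can be checked with a little arithmetic. The sketch assumes, for illustration only, that the roughly $200 figure is the total peer-review cost to be recovered per accepted paper (including the cost of reviewing rejected submissions), that every submission pays the $50 fee, and that an accepted author's total payment includes the credited $50; the function name and acceptance rates are hypothetical.

```python
def accepted_author_fee(cost_per_accepted, submission_fee, acceptance_rate):
    """Total an accepted author pays (submission fee included) so that fees cover costs.

    Each accepted paper must recover cost_per_accepted; the non-refundable
    submission fees of the (1 - a) / a rejected submissions per accepted paper
    cover part of it. The result never falls below the submission fee,
    since accepted authors have already paid that.
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    rejected_per_accepted = (1 - acceptance_rate) / acceptance_rate
    needed = cost_per_accepted - submission_fee * rejected_per_accepted
    return max(needed, submission_fee)


for rate in (0.5, 0.25):
    print(rate, accepted_author_fee(200, 50, rate))
```

Under these assumptions, with half of all submissions accepted an accepted author pays $150 in all; with a quarter accepted, the three rejected submissions' fees cover $150 of each accepted paper's cost, leaving the accepted author with only the $50 already paid.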

(I also think that the idea of paying referees for their services (though it may have a few things to recommend it) is a nonstarter, especially at this historic point. It would (i) raise rather than lower publication costs; the payment (ii) could never be made high enough to really compensate referees for their time and efforts; and referee payment too is (iii) open to abuse: If authors will pay to publish lower quality work, and journals will lower standards to get those author payments, then referees can certainly lower their standards to get those referee payments too! And that's true even if referee names are made publicly known, just as author-names and journal-names are publicly known. In short, universal OA, and the negligibly low costs of implementing classical peer review, would moot all that.)

Thursday, June 5. 2008

Hidden Cost of Failing to Access Information

"Disseminating research via the web is appealing, but it lacks journals' peer-review quality filter," says Philip Altbach in: Hidden cost of open access, Times Higher Education Supplement, 5 June 2008.

Professor Altbach's essay in the Times Higher Education Supplement is based on a breath-takingly fundamental misunderstanding of both Open Access (OA) and OA mandates like Harvard's: The content that is the target of the OA movement is peer-reviewed journal articles, not unrefereed manuscripts. Professor Altbach seems somehow to have confused OA with Wikipedia.

It is authors' peer-reviewed final drafts of their just-published journal articles that Harvard and 43 other institutions and research funders worldwide have required to be deposited in their institutional repositories. This is a natural online-era extension of institutions' "publish or perish" policy, adopted in order to maximise the usage and impact of their peer-reviewed research output.

The journal's (and author's) name and track record continue to be the indicators of quality, as they always were. The peers (researchers themselves) continue to review journal submissions (for free) as they always did.

The only thing that changes with OA is that all would-be users webwide -- rather than only those whose institutions can afford to subscribe to the journal in which it was published -- can access, use, apply, build upon and cite each published, peer-reviewed research finding, thereby maximising its "impact factor." (This also makes usage and citation metrics Open Access, putting impact analysis into the hands of the research community itself rather than just for-profit companies.)

And if and when mandated OA should ever make subscriptions unsustainable as the means of covering the costs of peer review, journals will simply charge institutions directly for the peer-reviewing of their research output, by the article, instead of charging them indirectly for access to the research output of other institutions, by the journal, as most do now. The institutional windfall subscription savings will be more than enough to pay the peer review costs several times over.

What is needed is more careful thought and understanding of what OA actually is, what it is for, and how it works, rather than uninformed non sequiturs such as those in the essay in question.

Stevan Harnad

American Scientist Open Access Forum

Wednesday, April 9. 2008

Harold Varmus on the NIH Green Open Access Self-Archiving Mandate

SUMMARY: Harold Varmus thinks the NIH Green OA self-archiving mandate isn't enough because (1) it doesn't provide enough usage rights, (2) it is subject to embargoes, (3) it only covers research from mandating funders, and (4) it doesn't reform copyright transfer. This is a miscalculation of practical priorities and an underestimation of the technical power of Green OA, which resides in self-mandates by institutions (such as Harvard's), rather than just funder mandates like NIH's. Institutions are the producers of all research output, and their Green OA self-mandates ensure the self-archiving of all their own published article output, in all disciplines, funded or unfunded, in their own Institutional Repositories (IRs). Self-archiving provides for all the immediate access and usage needs of all individual researchers, webwide. Access to most deposited articles can already be set as Open Access immediately. For the rest, IRs' semi-automatic "email eprint request" Button provides almost-immediate access. Access embargoes will die under the growing pressure of universal Green OA's power and benefits. Institutions' own IRs are also the natural locus for mandating direct deposit by both institutional and funder mandates. Copyright retention is not necessary as a precondition for mandating Green OA and puts the adoption of Green OA mandates at risk by demanding too much. Once Green OA mandates generate universal Green OA, copyright retention will follow naturally of its own accord.

Varmus, Harold (2008) Progress toward Public Access to Science. PLoS Biol 6(4): e101 doi:10.1371/journal.pbio.0060101

Harold Varmus welcomes the NIH Green OA self-archiving mandate and the increased access it brings, but says it isn't enough because (1) it doesn't provide enough usage rights, (2) it is subject to embargoes, (3) it only covers research from mandating funders, and (4) it doesn't reform copyright transfer. He instead stresses his preference for publishing in Gold OA journals, which provide (1) - (4). (He has said elsewhere that he does not consider Green OA to be OA.)

I believe Professor Varmus is mistaken on all four counts because of a miscalculation of practical priorities and an underestimation of the technical power of Green OA. Green OA can be mandated, whereas Gold OA cannot (and need not be). What is urgently needed by research and researchers today is OA, and that is provided immediately by Green OA. (Green OA is also likely to lead eventually to a transition to Gold OA.)

Professor Varmus also speaks of Green OA self-archiving as if it were a matter of central "public libraries" (like PubMed Central, which he co-founded, along with co-founding PLoS) that are "inherently archival" and provide only embargoed access rather than immediate access.

In reality the greatest power of Green OA self-archiving mandates resides mostly in self-mandates by institutions (such as Harvard's), rather than just funder mandates like NIH's. Institutions are the producers of all research output, and their Green OA self-mandates ensure the self-archiving of all their own published article output, in all disciplines, funded or unfunded, in their own Institutional Repositories (IRs). Those IRs are neither libraries nor archives. They are providers of immediate research access for would-be users worldwide, and they also provide an interim solution for usage needs during embargoes:

(1) USAGE RIGHTS. Self-archiving the author's final refereed draft (the "postprint") makes it possible for any user, webwide, to access, link, read, download, store, print off, and data-mine the full text, as well as for search engines like Google to harvest and invert it, for Google Scholar and OAIster to make it jointly searchable, for Citebase and Citeseer to provide download, citation and other ranking metrics, and for "public libraries" like PubMed Central to harvest them into archival central collections.

This provides for all the immediate access and usage needs of all individual researchers. Certain 3rd-party database, data-mining, and republication rights are still uncertain, but once Green OA mandates generate universal Green OA, these enhanced uses will follow naturally under the growing pressure generated by OA's demonstrated power and benefits to the worldwide research community. Over-reaching for Gold now risks losing the Green that is within our immediate grasp.

(2) EMBARGOES. Access to most deposited articles can already be set as Open Access immediately. For the rest, the IRs' semi-automatic "email eprint request" Button provides almost-immediate access.

This provides for all the immediate access and usage needs of all individual researchers during any access embargo. Once Green OA mandates generate universal Green OA, access embargoes will die their well-deserved natural deaths of their own accord under the growing pressure generated by OA's demonstrated power and benefits to the worldwide research community. Again, grasp what is within reach first.

(3) UNFUNDED RESEARCH. Funder mandates only cover funded research, but they also encourage, complement and reinforce institutional mandates, which cover all research output, in all disciplines. Institutions' own IRs are also the natural, convergent locus for mandating direct deposit by both institutional and funder mandates. All IRs are OAI-compliant and interoperable, so their contents can be exported to funder repositories such as PubMed Central, and institutions can help monitor and ensure compliance with funder mandates as well as with their own institutional mandates -- but only if direct deposit itself is systematically convergent rather than diverging to multiple, arbitrary, institution-external deposit sites.

(4) COPYRIGHT RETENTION. Copyright retention is always welcome, but it is not necessary for providing Green OA; and, in asking for more than is necessary, it risks making authors fear that it may put acceptance by their journal of choice at risk. Consequently Harvard, for example, has found it necessary to add an opt-out clause to its copyright-retention mandate, which means not only that it is not really a mandate, but also that it cannot ensure OA for all of its research output.

An immediate deposit IR mandate without opt-out (and with the Button) provides for all the immediate access and usage needs of all individual researchers, and once Green OA mandates generate universal Green OA, copyright retention will follow naturally of its own accord. First things first.

Stevan Harnad

American Scientist Open Access Forum

Sunday, March 9. 2008

Open Access Koans, Mantras and Mandates

On Sun, 9 Mar 2008, Andy Powell [AP] wrote in JISC-REPOSITORIES:

AP: You can repeat the IR mantra as many times as you like... it doesn't make it true. Despite who-knows-how-much-funding being pumped into IRs globally (can anyone begin to put a figure on this, even in the UK?)...

Plenty of figures have been posted on how much money institutions have wasted on their (empty) IRs in the eight years since IRs began. People needlessly waste a lot of money on lots of needless things. The amount wasted is of no intrinsic interest in and of itself.

The relevant figure is this: How much does it actually cost just to set up an OA IR and to implement a self-archiving mandate to fill it?

For the answer, you do not have to go far: Just ask the dozen universities that have so far done both. The very first IR-plus-mandate was a departmental one (at Southampton ECS), but the most relevant figures will come from university-wide mandated IRs, and for those figures you should ask Tom Cochrane at QUT and Eloy Rodrigues at Minho (distinguishing the one-time start-up cost from the annual maintenance cost).

And then compare the cost of that (relative to each university's annual research output) with what it would have cost (someone: whom?) to set up subject-based CRs (which? where? how many?) for all of that same university annual research output, in every subject, willy-nilly worldwide, and to ensure (how?) that it was all deposited in its respective CR.

(Please do not reply with social-theoretic mantras but with precisely what data you propose to base your comparative estimate upon!)

AP: most [IRs] remain largely unfilled and our only response is to say that funding bodies and institutions need to force researchers to deposit when they clearly don't want to of their own free will. We haven't (yet) succeeded in building services that researchers find compelling to use.

We haven't (yet) succeeded in persuading researchers to publish of their own free will: So instead of waiting for researchers to find compelling reasons to publish of their own free will, we audit and reward their research performance according to whether and what they publish ("publish or perish").

We also haven't (yet) succeeded in persuading researchers to publish research that is important and useful to research progress: So instead of waiting for researchers to find compelling reasons to maximise their research impact, we review and reward research performance on the basis not just of how much research they publish, but also of its research impact metrics.

Mandating that researchers maximise the potential usage and impact of their research by self-archiving it in their own IR, and auditing and rewarding that they do so, seems a quite natural (though long overdue) extension of what universities are all doing already.

AP: If we want to build compelling scholarly social networks (which is essentially what any 'repository' system should be) then we might be better to start by thinking in terms of the social networks that currently exist in the research community - social networks that are largely independent of the institution.

Some of us have been thinking about building on these "social networks" since the early 1990s and we have noted that -- apart from the very few communities where these self-archiver networks formed spontaneously early on -- most disciplines have not followed the examples of those few communities in the ensuing decade and a half, even after repeatedly hearing the mantra (Mantra 1) urging them to do so, along with the growing empirical evidence of self-archiving's beneficial effects on research usage and impact (Mantra 2).

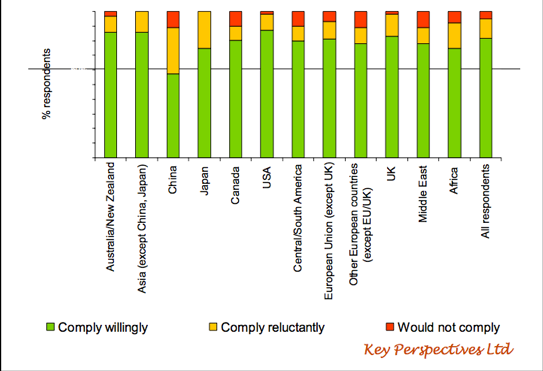

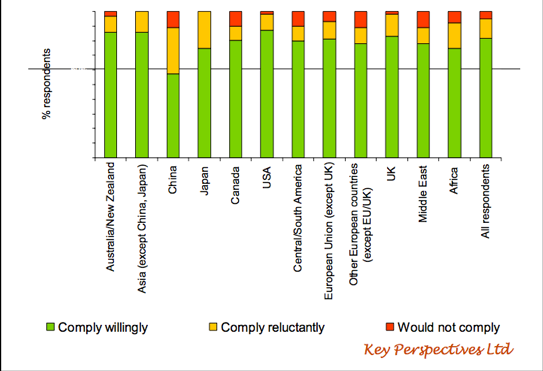

Then the evidence from the homologous precedent and example of (a) the institutional incentive system underlying publish-or-perish as well as (b) research metric assessment was reinforced by Alma Swan's JISC surveys: These found that (c) the vast majority of researchers report that they would not self-archive spontaneously of their own accord if their institutions and/or funders did not require it (mainly because they were busy with their institutions' and funders' other priorities), but 95% of them would self-archive their research if their institutions and/or funders were to require it -- and over 80% of them would do so willingly (Mantra 3).


And then Arthur Sale's empirical comparisons of what researchers actually do when such requirements are and are not implemented fully confirmed what researchers (across all disciplines and "social networks" worldwide) had said in the surveys that they would and would not do, and under what conditions (Mantra 4).

So I'd say we should not waste another decade and a half waiting for those fabled "social networks" to form spontaneously so the research community can at last have the OA that has not only already been demonstrated to be feasible and beneficial to them, but that they themselves have signed countless petitions to demand.

Indeed it is more a koan than a mantra that the only thing the researchers are not doing for the OA they overwhelmingly purport to desire is the few keystrokes per paper that it would take to do a deposit rather than just sign a petition! (And it is in order to generate those keystrokes that the mandates are needed.)

AP: Oddly, to do that we might do well to change our thinking about how best to surface scholarly content on the Web to be both 1) user-centric (acknowledging that individual researchers want to take responsibility for how they surface their content, as happens, say, in the blogsphere) and 2) globally-centric (acknowledging that the infrastructure is now available that allows us to realise the efficiency savings and social network effects of large-scale globally concentrated services, as happens in, say, Slideshare, Flickr and so on).

It is odd indeed that all these wonders of technology, so readily taken up spontaneously when people are playing computer games or blabbing in the blogosphere, have not yet been systematically applied to their ergonomic practices, but the fact is that (aside from a few longstanding social networks) they have not been, and we have waited more than long enough. That systematic application is precisely what the now-growing wave of OA self-archiving mandates by funders (such as RCUK and NIH) and universities (such as Southampton ECS and Harvard) is meant to accelerate and ensure.

AP: Such a change in thinking does not rule the institution out of the picture, since the institution remains a significant stakeholder with significant interests... but it certainly does change the emphasis and direction and it hopefully stops us putting institutional needs higher up the agenda than the needs of the individual researcher.

Individual researchers do not work in a vacuum. That is why we have institutions and funders. Those "research networks" already exist. As much as we may all admire the spontaneous, anonymous way in which (for example) Wikipedia is growing, we also have to note the repeatedly voiced laments of those academics who devote large portions of their time to such web-based activities without being rewarded for it by their institutions and funders (Mantra 5).

OA self-archiving mandates are precisely the bridge between (i) the existing canonical "social networks" and reward systems of the scholarly and scientific community -- their universities and research funders -- and (ii) the new world that is open before them.

It is time we crossed that bridge, at long last (Mantra 6).

Stevan Harnad

American Scientist Open Access Forum

Friday, February 8. 2008

New Ranking of Central and Institutional Repositories

The Webometrics Ranking of World Universities has created a new Ranking of Repositories, but in the announcement, a few salient points are overlooked:

Yes, as noted, the first three ranks go to "thematic" (i.e., discipline- or subject-based) Central Repositories (CRs): (1) Arxiv (Physics), (2) Repec (Economics) and (3) E-Lis (Library Science). That is to be expected, because such CRs are fed from institutions all over the world.

But the fourth-ranked repository -- and the first of the university-based Institutional Repositories (IRs), displaying only its own institutional output -- is (4) U Southampton EPrints (even though Southampton's University rank is 77th).

Moreover, the fifteenth place repository -- and the first of the department-based IRs -- is (15) U Southampton ECS EPrints (making it 10th ranked even relative to university-wide IRs!).

None of this is surprising: In 2000 Southampton created the world's first free, OAI-compliant IR-creating software -- EPrints -- now used (and imitated) worldwide.

But Southampton's ECS also adopted the world's first Green OA self-archiving mandate, now also being emulated worldwide. And that first mandate was a departmental mandate, which partly explains the remarkably high rank of Southampton's ECS departmental IR.

But these repository rankings (by Webometrics as well as by ROAR) should be interpreted with caution, because not all the CRs and IRs contain full texts; some contain only metadata. Southampton's university-wide IR, although 4th among repositories and 1st among IRs, is still mostly just metadata: the university-wide mandate that U. Southampton has since adopted has not yet been officially announced or implemented, because the university has been preparing for the 2008 Research Assessment Exercise returns. As soon as the mandate is implemented, that will change. (Southampton's ECS departmental IR, in contrast, mandated since 2002, is already virtually 100% full-text.)

But the moral of the story is that what Southampton is right now enjoying is not just the well-earned visibility of its research output, but also a competitive advantage over other institutions, because of its head-start, both in creating IRs and in adopting a mandate to fill them. (This head-start is also reflected in Southampton's unusually high University Metrics "G Factor," and probably in its University Webometric rank too. Citebase is likewise constrained by who has and has not begun to systematically self-archive. And Citeseer has alas stopped updating as of about 2003.)

I am not saying all this by way of bragging! I am begging other institutions to take advantage of the fact that it's still early days: Get a competitive head start too -- by creating an IR, and, most important of all, by adopting a Green OA self-archiving mandate!

Stevan Harnad

American Scientist Open Access Forum

Wednesday, January 30. 2008

The University's Mandate To Mandate Open Access


This is a guest posting (written at the invitation of Gavin Baker) for the new blog "Open Students: Students for Open Access to Research."

Other OA activists are also encouraged to contribute to the Open Students blog.

My guess is that Open Access (OA) already sounds old hat to the current generation of students, and that you are puzzled more about why we are still talking about OA happening in the future, rather than in the distant past (as the 80's and 90's must appear to you!).

SUMMARY: Open Access (OA) will not come until universities, the research-providers, make it part of their mandate not only to publish their research findings, as now, but also to see to it that the few extra keystrokes it takes to make those published findings OA -- by self-archiving them in their institutional repositories, free for all online -- are done too. Students are in a position to help convince their universities to go ahead and mandate OA self-archiving, at long last.

Well, you're right to be both puzzled and impatient, but let me try to explain why it's been taking so long. (I say "try" because I have to admit that I too am still somewhat perplexed by the slowness of OA growth, even after sampling the sluggishness of its pace for nearly 2 decades now!) And then I'll try to suggest what you students can do to help speed OA on its way to its obvious, optimal, and long overdue destination.

What OA Is Not

First, what is Open Access (OA)? It's not about Open Source (OS) software -- i.e., it's not about making computer programs either open or free (though of course OA is in favor of and compatible with OS).

It's not about Creative Commons (CC) Licensing either -- i.e., it is not about making all digital creations re-usable and re-publishable (though again OA is in favor of and compatible with CC licensing).

Nor is it about "freedom of information" or "freedom of digital information" in general. (That's much too broad and vague: OA has a very specific kind of information as its target.)

And, I regret to say, OA is not about helping you get or share free access to commercial audio or video products, whether analog or digital: OA is completely neutral about that. OA's target is only author give-aways, not "consumer sharing" (though of course free user access webwide will be the outcome, for OA's special target content).

What Is OA's Target Content?

So let's start by being very explicit about OA's target content: It is the 2.5 million articles a year that are published in the planet's 25,000 peer-reviewed scholarly and scientific journals.

Eventually OA might also extend to some scientific and scholarly books (the ones that authors want to give away) and also to scholarly and scientific data (if and when the researchers that collected them are ready to give them away); it may also extend to some software, some audio and some video.

But the only content to which OA applies without a single exception today is peer-reviewed journal articles. They are the works that their authors always wrote just so they should be read, used, applied, cited and built upon (mostly by their fellow researchers, worldwide). This is called "research impact". They were never written in order to earn their authors income from their sale.

These special authors -- researchers -- never sought or received any revenue from the sale of their journal articles. Indeed, the fact that there was a price-tag attached to accessing their articles (a price-tag usually paid through institutional library subscriptions) meant that these researcher-authors, and research itself, were losing research impact, because subscriptions to these journals were expensive, and most institutions could only afford access to a small fraction of them.

What Is OA?

To try to compensate for these access barriers in the old days of paper, these special give-away authors would provide supplementary access, by mailing free individual reprints of their articles, at their own expense, to any would-be user who had written to request a reprint. You can imagine, though, how slow, expensive and inefficient it must have been to have to supplement access in this way, in light of what the web has since made possible: First, the possibility of emailing eprints was an improvement, but the obvious and optimal solution was to put the eprint on the web directly, so any would-be user, webwide, could instantly access it directly, at any time.

And that, dear students, is the essence of OA: free, immediate, permanent, webwide access to peer-reviewed research journal articles: give-away content -- written purely for usage and impact, not for sales revenue -- finding, at last, the medium in which it can be given away, free for all, globally, big-time.

OA = Gold OA or Green OA

You thought OA was something else? Another form of publishing, maybe, with the author's institution paying to publish the article rather than the user's institution paying to access it? That is OA journal publishing. But OA itself just means: free online access to the article itself.

There are clearly two ways an author can provide free online access to an article: One is by publishing it in an OA journal (this is called the "golden road" to OA, or simply "Gold OA") and the other is by publishing it in a conventional subscription journal, but also self-archiving a supplementary version, free for all, on the web (this is called the "green road" to OA, or simply "Green OA").

OA ≠ Gold OA Only (or even Primarily)

Gold OA publishing is probably what peer-reviewed journal publishing will eventually settle on. But for now, only about 3000 of the 25,000 journals are Gold OA, and the majority of the most important journals are not among that 3000.

Moreover, most of the potential institutional money for paying for Gold OA is currently still tied up in each university's ongoing subscriptions to non-OA journals. So if Gold OA is to be paid for today, extra money needs to be found to pay for it (most likely out of already insufficient research funds).

Yet what is urgently needed by research and researchers today is not more money to pay for Gold OA, nor a conversion to Gold OA publishing, but OA itself.

And 100% OA can already be provided by authors -- through Green OA self-archiving -- virtually overnight.

It has therefore been a big mistake -- and is still one of the big obstacles slowing progress toward OA -- to imagine that OA means only, or primarily, Gold OA publishing. (This mistake will keep getting made, repeatedly, in the Open Students blog too -- mark my words!)

The "Subversive Proposal"

It was in 1994 that the explicit "subversive proposal" was first made that if a supplementary copy of each peer-reviewed journal article were self-archived online by its author, free for all, as soon as it was published (as some authors in computer science and physics had already been doing for years), then we could have (what we would now call) 100% (Green) OA virtually overnight.

But that magic night hasn't arrived -- not then, in 1994, not in the ensuing decade and a half, and not yet today. Why not?

"Zeno's Paralysis"

There are at least 34 reasons why it has not yet happened, all of them psychological, and all of them groundless. The syndrome even has a name: "Zeno's Paralysis":

"I worry about self-archiving my article because it would violate copyright... or because it would bypass peer review... or because it would destroy journals... or because the online medium is not reliable... or because I have no time to self-archive..."

Meanwhile, evidence (demonstrating the obvious) was growing that making your article OA greatly increases its usage and impact:

Yet still most authors' fingers (85%) remained paralyzed. The solution again seemed obvious: The cure for Zeno's Paralysis was a mandate from authors' institutions and funders, officially stating that it is not only OK for their employees and fundees to self-archive, but that it is expected of them, as a crucial new part of the process of doing research and publishing their findings in the online era.

"Publish or Perish: Self-Archive to Flourish!"

The first explicit proposals to mandate self-archiving began appearing at least as early as 2000; and recommendations for institutional and funder Green OA self-archiving mandates were already in the Self-Archiving FAQ even before it became the BOAI Self-Archiving FAQ in 2002, as well as in the OSI EPrints Handbook. But as far as I know the first officially adopted self-archiving mandate was that of the School of Electronics and Computer Science at the University of Southampton in 2002-2003.

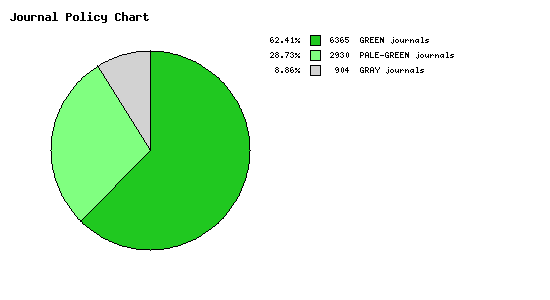

Since then, 91% of journals have already given self-archiving their official blessing:

Yet Alma Swan's surveys of authors' attitudes toward OA and OA self-archiving mandates -- across disciplines and around the world -- have found that although authors are in favor of OA, most will not self-archive until and unless it is mandated by their universities and/or their funders. If self-archiving were mandated, however, 95% of authors state that they would comply, over 80% of them stating they would comply willingly.

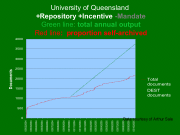

Arthur Sale's analyses of what authors actually do with and without a mandate have since confirmed that if unmandated, self-archiving in institutional repositories hovers at around 15% or lower, but with a mandate it approaches 100% within about 2 years:

What Students Can Do to Hasten the Optimal and Inevitable but Long Overdue Outcome:

To date, 37 Green OA self-archiving mandates have been adopted worldwide, and 9 more have been proposed. Some of those mandates (such as that of NIH in the US, RCUK in the UK and ERC in Europe) have been very big ones, but most have been research funder mandates (22) rather than university mandates (12), even though virtually all research originates from universities, not all of it is funded, and universities share with their own researchers and students the benefits of showcasing and maximizing the uptake of their joint research output. Among the proposed mandates, two are very big multi-university proposals (one for all 791 universities in the 46 countries of the European University Association and one for all the universities and research institutions of Brazil), but those mandate proposals have yet to be adopted.

The world's universities are OA's sleeping giant. They have everything to gain from mandating OA, but they are being extremely slow to realize it and to do something about it. Unlike you students, they have not grown up in the online age, and to them the online medium's potential is not yet as transparent and natural as it is to you. You can help awaken your university's sense of its own need for OA, as well as its awareness of the benefits of OA, and the means of attaining them, by making yourselves heard:

1. OA Self-Archiving Begins At Home: First, let the professors and administration of your university know that you need and want (and expect!) research articles to be freely accessible to you on the web -- the entire research output of your own university to begin with (and not just the fraction of its total research output that your university can afford to buy back through journal subscriptions!) -- so that you know what research is being done at your own university, whom to study with, whom to do research projects with (and even to help you select a university for undergraduate or graduate study in the first place).

2. Self-Archive Unto Others As You Would Have Them Self-Archive Unto You: Second, point out the "Golden [or rather Green!] Rule" to the professors and administration of your university: If each university self-archives its own research output, this will make it possible for you, as students, to access the research output of all other universities (and not just the fraction of the total research output of other universities that your own university can afford to buy in through journal subscriptions!), so that you can use any of it in your own studies and research projects. Far more important, it will also make it possible for all researchers, at all universities (including your own), to access all research findings, and use and apply them in their own research and teaching, thereby maximizing research productivity and progress for the entire university community worldwide -- as well as for the tax-paying public that funds it all, the ones for whose benefit the research is being conducted.

But please make sure you get the rationale and priorities straight! The (successful) lobbying for the NIH self-archiving mandate was based in part on a premise that may have gone over well with politicians, and perhaps even with voters, but that does not stand up to close scrutiny once it is thought through. The slogan had been: We need to have OA so that taxpayers can have access to health research findings that they themselves paid for. True. And it sounds good. But how many of the 2.5 million peer-reviewed research journal articles published every year in the 25,000 journals across all disciplines does that really apply to? How many of those specialized articles are taxpaying citizens ever likely to want (or even be able) to read? Most of them are not even relevant or comprehensible to undergraduate students.

Peer-To-Peer Access

So the overarching rationale for OA cannot be public access (though of course public access comes with the territory). It has to be peer-to-peer access. The peers are the research specialists worldwide for whom most of the peer-reviewed literature is written. Graduate students are entering this peer community; undergraduates are on the boundaries of it. But the general tax-paying public has next to no interest in it at all.

By the very same token, you will not be able to persuade your professors and administration that OA to the peer-reviewed research literature needs to be mandated because students have a burning need and desire to read it all! Students benefit from OA, but that cannot be the primary rationale for OA. Peer-to-peer (i.e., researcher to researcher) access is what has to be stressed. It is researchers worldwide who are today being denied access to the research findings they need in order to advance their research for the benefit of us all -- for the benefit of present and future students for whom the findings will be digested and integrated in textbooks, and for the benefit of the general public for whom the findings will be applied in the form of technological advances and medicines for illnesses.

So it is daily, weekly, monthly research impact that is needlessly being lost, cumulatively, while we keep dragging our feet about providing OA. That's what you need to stress to your professors and administration: all those findings that could not be used and applied and built upon because they could not be accessed by all or even most of their potential users, because it simply costs too much to subscribe to all or most of the journals in which they were published.

Updating the Academic Mandate for the Online Era

Point out also that OA policies always fail if they are merely recommendations or requests. The only thing that will embolden and motivate all researchers to self-archive is self-archiving mandates. "Mandate" is not a bad word. It doesn't mean "coercion" or punishment. It's all carrots, not sticks. Professors have a mandate to teach, and test, and give marks. They also have a mandate to do research, and publish (or perish!). If they teach well and do good research, they earn promotions, salary increases, tenure, research funding, prizes. If they don't fulfill their mandate, they don't. It's the same with students: You have a mandate to study and acquire knowledge and skills. If you don't fulfill your mandate, you don't earn good marks.

Mandates and Metrics

So it is not so much a matter of adopting a new "Green OA self-archiving mandate" for faculty, but of adapting the existing mandate. OA self-archiving is a natural adaptation to the PostGutenberg Galaxy and its technical potential (just as we adapted to reading and writing, printing, libraries and photocopying). It is no longer enough to just do, write up, and publish research: It has to be self-archived too. And the carrots are already there to reward doing it: Faculty are already evaluated on how well they fulfill their research performance mandate, not only by counting their publications, but by assessing their impact -- for which one of the most important metrics is how much their research is taken up, used and cited by further research. And citation counts are among the things that OA has been shown to increase.

It's rather as if -- as a part of your mandate as students -- you now had to submit your work online (as most of you already do!) instead of on paper in order to be evaluated and graded. Except it's even better than that for faculty, because self-archiving their work will actually increase their "grades."

In short, it's a win/win/win situation for your university, for your professors and for you, the students -- if only your university gets round, at long last, to fast-forwarding us to the optimal and inevitable: by mandating Green OA self-archiving. Rather than be puzzled and impatient that they have not done it already, you should provide a strong show of support for their doing it now. Be ready with the answers to the inevitable questions about how and why (and when and where). And beware, the 34-headed monster of "Zeno's Paralysis" is still at large, and growing back each head the minute you lop one off...

Stevan Harnad

American Scientist Open Access Forum

Tuesday, January 1. 2008

Universities UK: Open Access Mandates, Metrics and Management -- PPTs now online

Universities UK Research Information and Management Workshop London 6 December 2008

EurOpenScholar

Professor Bernard Rentier, Rector, University of Liege

The whole picture: the overall scholarly information landscape

Dr Alma Swan, Director, Key Perspectives Ltd

Mandates and Metrics: How Open Repositories Enable Universities to Manage, Measure and Maximise their Research Assets

Professor Stevan Harnad, Université du Québec à Montréal & University of Southampton

Optimising research management and assessment processes; the role of funders

Professor David Eastwood, Chief Executive, HEFCE

Overview: outline of the evolution of scholarly information, what advantages new changes will bring and economic impact for the UK

Dr. Michael Jubb, Director, Research Information Network

[addendum: US NIH Mandate]

Tuesday, December 18. 2007

Open Access Metrics: An Autocatalytic Circle of Benefits

"Just as scientists would not accept the findings in a scientific paper without seeing the primary data, so should they not rely on Thomson Scientific's impact factor, which is based on hidden data. As more publication and citation data become available to the public through services like PubMed, PubMed Central, and Google Scholar®, we hope that people will begin to develop their own metrics for assessing scientific quality rather than rely on an ill-defined and manifestly unscientific number."

Rossner et al. are quite right, and the optimal, inevitable solution is at hand:

(1) All research institutions and research funders will mandate that all research journal articles published by their staff be self-archived in their Open Access (OA) Institutional Repository.

(2) This will allow scientometric search engines such as Citebase (and others) to harvest their metadata, including their reference lists, and to calculate open, transparent research impact metrics.

The prospect of having Open Research Metrics for analysis and research assessment -- along with the prospect of maximizing research usage and impact through OA -- will motivate adopting the mandates, closing the autocatalytic circle of benefits from OA.
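Step (2) is where openness pays off for metrics: once reference lists are openly harvestable, anyone can recompute citation counts instead of relying on a number derived from hidden data. The following is a minimal Python sketch under stated assumptions, not Citebase's actual implementation. The `HarvestedRecord` type and its fields are hypothetical, and the sketch assumes the hard part (parsing free-text references and linking each one to a unique article identifier) has already been done.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class HarvestedRecord:
    """Hypothetical metadata record harvested from an OA repository."""
    article_id: str
    # Identifiers of the works this article cites (assumed already linked).
    references: list[str] = field(default_factory=list)


def citation_counts(records: list[HarvestedRecord]) -> Counter[str]:
    """Count, for each cited article id, how many harvested articles cite it."""
    counts: Counter[str] = Counter()
    for rec in records:
        # A set ensures one article citing the same work twice counts once.
        counts.update(set(rec.references))
    return counts


# Toy data, invented purely for illustration.
records = [
    HarvestedRecord("A", ["B", "C"]),
    HarvestedRecord("B", ["C"]),
    HarvestedRecord("C", []),
    HarvestedRecord("D", ["C", "C", "B"]),
]

print(citation_counts(records).most_common())  # [('C', 3), ('B', 2)]
```

Because both the input data and the counting rule are open, anyone who disagrees with a design choice (here, counting repeated citations from one article only once) can change it and recompute, which is exactly what a metric based on hidden data does not allow.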

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

Harnad, S. (2007) Open Access Scientometrics and the UK Research Assessment Exercise. Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1) : 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds.

Shadbolt, N., Brody, T., Carr, L. and Harnad, S. (2006) The Open Research Web: A Preview of the Optimal and the Inevitable, in Jacobs, N., Eds. Open Access: Key Strategic, Technical and Economic Aspects. Chandos.

Stevan Harnad

American Scientist Open Access Forum



The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.


The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access Provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)

You can sign on to the Forum here.

