
Saturday, December 15. 2007

Putting Science Publishing Into Perspective

Commentary on: "Putting Science into Science Publishing" by Joseph Esposito, Publishing Frontier (blog), December 11, 2007.

The posting contains the by-now-familiar litany of lapses:

(1) Open Access is not only -- nor even primarily -- about Open Access Publishing (Gold OA): It is about OA itself, which includes Green OA, the far bigger and faster-growing form of OA: Authors making their own published, peer-reviewed non-OA journal articles (not only or primarily their unpublished preprints) OA by self-archiving them in their own OA Institutional Repositories. Only 10% of journals are Gold OA, but over 90% of journals endorse immediate Green OA self-archiving by their authors -- with over 60% endorsing the immediate self-archiving of the author's final peer-reviewed draft.

(2) The question of whether librarians will cancel journals is not about Gold OA: It is about Green OA. Joseph Esposito contemplates whole-journal cancellations of subscriptions to Gold OA journals, whereas the speculations have been about whether and when librarians would cancel non-OA journals as Green OA self-archiving grows. Green OA self-archiving grows anarchically, not journal by journal. So not only is it hard for a librarian to determine whether and when all the articles in a given journal have become OA, but all the evidence (from the publishers) to date in the few areas (of physics) where Green OA self-archiving is already at or near 100% is that there are as yet no detectable cancellations as a result of 100% Green OA. (Rather, the publishers themselves seem to be adopting Gold OA in these areas: SCOAP3.)

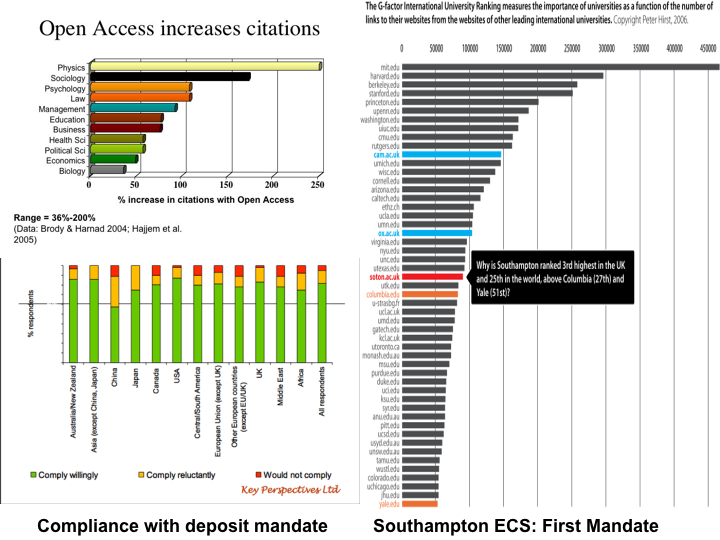

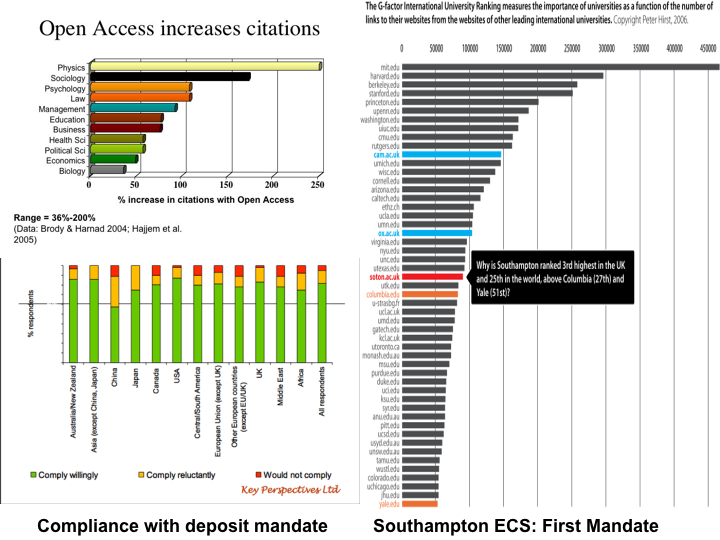

(3) The OA citation impact advantage is not about unpublished or low-impact Gold OA journal articles versus high-impact non-OA journal articles: It is about the additional citation impact provided by OA, for any non-OA article, including those articles published in high impact journals! They don't lose their non-OA citations: they just gain further OA citations.

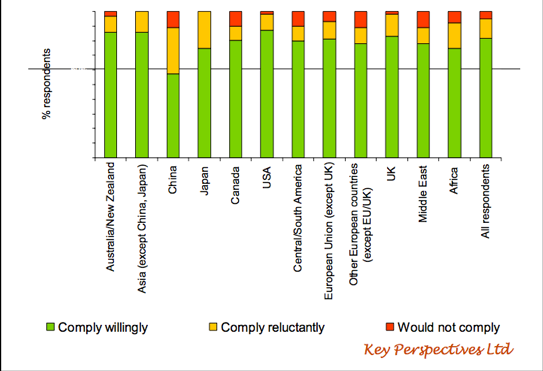

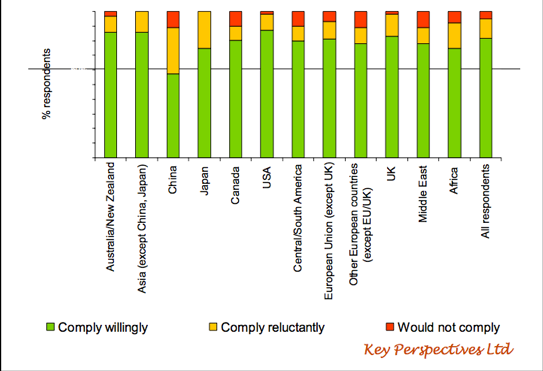

(4) The international, interdisciplinary survey evidence of Swan and Associates did not just tautologically confirm that people comply with requirements if required: The point was that over 95% of researchers report that they would comply with a Green OA self-archiving mandate from their employers or funders, and 81% report they would do so willingly. (Only 14% said they would comply unwillingly, and 5% said they would not comply.) Arthur Sale's comparisons of actual mandates and compliance rates confirmed these findings, with spontaneous (unmandated) self-archiving rates hovering around 15%, encouraged self-archiving rates rising to about 30%, and mandated, incentivized self-archiving rates approaching 100% within two years. (This is not surprising, since academics are busy, and would be publishing much less too, if it were not for the existing universal publish-or-perish mandate.) Researchers who self-archive are also rewarded by the resulting enhancement of their research impact metrics, which their institutions collect and credit. [Added: see also Swan's rebuttal.]

In sum, OA is not about publishing, it is about maximizing research progress and impact. The outcome -- 100% OA -- is optimal and inevitable for research, researchers, their institutions, their funders, the vast R&D industry, and the tax-paying public.

Publishers need to adapt to the optimal and inevitable for research. Research is not conducted and reported in order to provide revenues to the publishing industry. The publishing industry is providing a value-added service -- which, in the online era, is rapidly scaling down to just the management of peer review and the certification of its outcome: The peers review for free, the authors can generate and revise their electronic texts themselves, and their institutions can archive and provide access to the final, peer-reviewed drafts in their OA Institutional Repositories.

What is left of peer-reviewed journal publishing, then, is to implement the peer review itself, and to certify the outcome with the journal's name and track-record. For now, journals are still providing much more than that (paper edition, mark-up, PDF, distribution), in exchange for journal subscriptions, and as long as there is still a market for all that, the publishing status quo remains.

If and when subscriptions should ever become unsustainable because of universal Green OA, journals can downsize and convert to Gold OA as SCOAP3 is already doing. But for now, it is up to the research community -- and the research community alone -- to hasten the transition to universal Green OA.

Stevan Harnad

American Scientist Open Access Forum

Saturday, November 24. 2007

Victory for Labour, Research Metrics and Open Access Repositories in Australia

Posted by Arthur Sale in the American Scientist Open Access Forum:

Yesterday, Australia held a Federal Election. The Australian Labor Party (the previous opposition) have clearly won, with Kevin Rudd becoming the Prime-Minister-elect.

What has this to do with the American Scientist Open Access Forum? Well the policy of the ALP is that the plans for the Research Quality Framework (the RQF -- our research assessment exercise) will be immediately scrapped, and it will be replaced by a cheaper and metrics-based assessment, presumably a year or two later.

At first sight this is a setback for open access in Australia, because institutional repositories are not essential for a metrics-based research assessment. They just help improve the metrics. However, the situation may be turned to advantage, and there are several major pluses.

(1) Previous RQF grants should have ensured that every university in Australia now has a repository. They are just mostly empty, or mostly dark, or both. It should now be crystal clear to every university in Australia that citations and other measures will be key in the future. It should be equally clear that they should do everything possible to increase their performance on these measures. Any university that fails to immediately implement an ID/OA mandate (Immediate Deposit, Open Access when possible) in its institutional repository is simply deciding to opt out of research competition, or mistakenly thinks that it knows better. Although I suppose there is still the weak excuse that it is all too hard to understand or think about.

(2) The advisers in the Department of Education, Science & Technology (DEST) haven’t changed. The Accessibility Framework (ie open access) is still in place as a goal.

(3) A new metric-based evaluation could and should be steered to be a multi-metric based one. The ALP has already stated that it will be discipline-dependent.

(4) If the Rudd government is serious about efficiency in higher education, they could simply instruct DEST to require universities to put all their currently reported publications in a repository (ID/OA policy), from which the annual reports would be automatically derived. In addition all the desired publication metrics would also be derived, at any time. The Accessibility Framework would be achieved.
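To make point (4) concrete, here is a minimal Python sketch of deriving both the annual publication report and simple metrics from one set of repository deposits, so nothing has to be collected twice. The `Deposit` record and its fields are hypothetical, invented for illustration; they are not DEST's reporting schema or any repository platform's actual data model.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Deposit:
    # Hypothetical repository record, invented for illustration only.
    department: str
    year: int
    title: str
    citations: int
    downloads: int


def annual_report(deposits, year):
    """Publications per department for one reporting year."""
    return dict(Counter(d.department for d in deposits if d.year == year))


def department_metrics(deposits):
    """Cumulative per-department metrics, recomputable at any time."""
    totals = defaultdict(lambda: {"papers": 0, "citations": 0, "downloads": 0})
    for d in deposits:
        t = totals[d.department]
        t["papers"] += 1
        t["citations"] += d.citations
        t["downloads"] += d.downloads
    return dict(totals)


# Invented sample deposits.
deposits = [
    Deposit("Computing", 2007, "Paper A", 12, 340),
    Deposit("Computing", 2006, "Paper B", 30, 910),
    Deposit("Physics", 2007, "Paper C", 5, 120),
]
print(annual_report(deposits, 2007))
print(department_metrics(deposits))
```

The design point is that the report and the metrics are both views over the same deposited records, which is what makes the repository the single source of truth.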

Here is the edited text of a press release by the shadow minister before the election. The boldface over some paragraphs is mine.

Arthur Sale

Professor of Computer Science

University of Tasmania

[BEGINS]

Senator Kim Carr

Labor Senator for Victoria

Shadow Minister for Industry, Innovation, Science and Research

Thursday, 15 November 2007 (58/07)

Building a strong future for Australian research

Federal Labor’s key research initiatives, announced during yesterday’s Campaign Launch, highlight our commitment to a research revolution.

[snip]

A Rudd Labor Government will be committed to rebuilding the national innovation system and, over time, doubling the amount invested in R&D in Australia.

· Labor will bring responsibility for innovation, industry, science and research into a single Commonwealth Department.

· Labor will develop a set of national innovation priorities to sit over the national research priorities. Together, these will provide a framework for a national innovation system, ensuring that the objectives of research programs and other innovation initiatives are complementary.

· Labor will abolish the Howard Government’s flawed Research Quality Framework, and replace it with a new, streamlined, transparent, internationally verifiable system of research quality assessment, based on quality measures appropriate to each discipline. These measures will be developed in close consultation with the research community. Labor will also address the inadequacies in current and proposed models of research citation. Labor’s model will recognise the contribution of Australian researchers to Australia and the world.

[snip]

· Labor recognises the importance of basic research in the creation of new knowledge, and also the value and breadth of Australian research effort across the humanities, creative arts and social sciences as well as scientific and technological disciplines.

The Howard Government has allocated $87 million for the implementation of the RQF. Labor will seek to redirect the residual funds to encourage genuine industry collaboration in research.

[snip]

Thursday, November 22. 2007

UK Research Evaluation Framework: Validate Metrics Against Panel Rankings

Once one sees the whole report, it turns out that the HEFCE/RAE Research Evaluation Framework is far better, far more flexible, and far more comprehensive than is reflected in either the press release or the Executive Summary.

SUMMARY: Three things need to be remedied in the UK's proposed HEFCE/RAE Research Evaluation Framework:

(1) Ensure as broad, rich, diverse and forward-looking a battery of candidate metrics as possible -- especially online metrics -- in all disciplines.

(2) Make sure to cross-validate them against the panel rankings in the last parallel panel/metric RAE in 2008, discipline by discipline. The initialized weights can then be fine-tuned and optimized by peer panels in ensuing years.

(3) Stress that it is important -- indeed imperative -- that all University Institutional Repositories (IRs) now get serious about systematically archiving all their research output assets (especially publications) so they can be counted and assessed (as well as accessed!), along with their IR metrics (downloads, links, growth/decay rates, harvested citation counts, etc.).

If these three things are systematically done -- (1) comprehensive metrics, (2) cross-validation and calibration of weightings, and (3) a systematic distributed IR database from which to harvest them -- continuous scientometric assessment of research will be well on its way worldwide, making research progress and impact more measurable and creditable, while at the same time accelerating and enhancing it.

It appears that there is indeed the intention to use many more metrics than the three named in the executive summary (citations, funding, students), that the metrics will be weighted field by field, and that there is considerable open-mindedness about further metrics and about corrections and fine-tuning with time. Even for the humanities and social sciences, where "light touch" panel review will be retained for the time being, metrics too will be tried and tested.

This is all very good, and an excellent example for other nations, such as Australia (also considering national research assessment with its Research Quality Framework), the US (not very advanced yet, but no doubt listening) and the rest of Europe (also listening, and planning measures of its own, such as EurOpenScholar).

There is still one prominent omission, however, and it is a crucial one:

The UK is conducting one last parallel metrics/panel RAE in 2008. That is the last and best chance to test and validate the candidate metrics -- as rich and diverse a battery of them as possible -- against the panel rankings. In all other fields of metrics -- biometrics, psychometrics, even weather forecasting metrics -- the metric predictors first need to be tested and shown to be valid before deployment, which means showing that they do indeed predict what they were intended to predict. That means they must correlate with a "criterion" metric that has already been validated, or that has "face-validity" of some kind.

The RAE has been using the panel rankings for two decades now (at a great cost in wasted time and effort to the entire UK research community -- time and effort that could instead have been used to conduct the research that the RAE was evaluating: this is what the metric RAE is primarily intended to remedy).

But if the panel rankings have been unquestioningly relied upon for 2 decades already, then they are a natural criterion against which the new battery of metrics can be validated, initializing the weights of each metric within a joint battery, as a function of what percentage of the variation in the panel rankings each metric can predict.

This is called "multiple regression" analysis: N "predictors" are jointly correlated with a "criterion" (or more than one): in this case the panel rankings, but other validated or face-valid criteria could also be added, if there were any. The result is a set of "beta" weights on each of the metrics, reflecting each metric's independent power to predict the criterion (the panel rankings). The weights will of course differ from discipline to discipline.
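A minimal Python sketch of this initialization step, under stated assumptions: one row per unit of assessment (e.g. department) within a single discipline, the panel outcome treated as a numeric score, and invented sample data. It computes standardized ("beta") weights by solving the normal equations on the predictors' correlation matrix. A real validation exercise would use a statistics package and check collinearity and significance, which this sketch does not.

```python
from statistics import mean, pstdev


def zscores(xs):
    """Standardize values to mean 0 and (population) standard deviation 1."""
    m, s = mean(xs), pstdev(xs)
    return [(x - m) / s for x in xs]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def beta_weights(predictors, criterion):
    """Standardized regression weights of each predictor metric on the criterion.

    predictors: dict metric name -> list of values, one per unit
    criterion:  list of criterion values (e.g. panel scores) for the same units
    """
    names = list(predictors)
    z = [zscores(predictors[name]) for name in names]
    y = zscores(criterion)
    k = len(y)

    def corr(u, v):
        # The mean product of z-scores equals the Pearson correlation.
        return sum(p * q for p, q in zip(u, v)) / k

    r_xx = [[corr(zi, zj) for zj in z] for zi in z]  # predictor intercorrelations
    r_xy = [corr(zi, y) for zi in z]                 # predictor-criterion correlations
    return dict(zip(names, solve(r_xx, r_xy)))


# Invented data for six hypothetical departments in one discipline.
metrics = {
    "citations": [10, 25, 40, 8, 30, 55],
    "downloads": [200, 340, 500, 150, 410, 620],
}
panel_scores = [2.1, 3.0, 3.9, 1.8, 3.2, 4.6]
print(beta_weights(metrics, panel_scores))
```

The returned weights are only a starting point; the post's proposal is that peer panels then fine-tune them over time.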

Now these beta weights can be taken as an initialization of the metric battery. With time, "super-light" panel oversight can be used to fine-tune and optimize those weightings (and new metrics can always be added too), to correct errors and anomalies and make them reflect the values of each discipline.

(The weights can also be systematically varied to use the metrics to re-rank in terms of different blends of criteria that might be relevant for different decisions: RAE top-sliced funding is one sort of decision, but one might sometimes want to rank in terms of contributions to education, to industry, to internationality, to interdisciplinarity. Metrics can be calibrated continuously and can generate different "views" depending on what is being evaluated. But, unlike the much-abused "university league table," which ranks on one metric at a time (and often a subjective, opinion-based one rather than an objective one), the RAE metrics could generate different views simply by changing the weights on some selected metrics, while retaining the other metrics as the baseline context and frame of reference.)
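The "views" idea can be sketched as a weighted sum of standardized metrics, where one blend of weights serves as the baseline and a view overrides only the weights of selected metrics. This is a minimal Python illustration; the metric names, weights and department data are hypothetical.

```python
from statistics import mean, pstdev


def rank_units(metrics, weights):
    """Rank units (e.g. departments) by a weighted sum of standardized metrics.

    metrics: dict unit -> dict metric name -> raw value (same names for every unit)
    weights: dict metric name -> weight; metrics missing from `weights` get weight 0
    """
    units = list(metrics)
    names = list(next(iter(metrics.values())))
    z = {}
    for name in names:
        col = [metrics[u][name] for u in units]
        mu, sd = mean(col), pstdev(col)
        # A metric with no spread cannot discriminate between units.
        z[name] = {u: (metrics[u][name] - mu) / sd if sd else 0.0 for u in units}
    score = {u: sum(weights.get(n, 0.0) * z[n][u] for n in names) for u in units}
    return sorted(units, key=score.get, reverse=True)


# Hypothetical baseline (e.g. regression-initialized) weights.
baseline = {"citations": 1.0, "industry": 0.1}
# A view re-weights one metric and keeps the others as the frame of reference.
industry_view = {**baseline, "industry": 2.0}

departments = {
    "A": {"citations": 50, "industry": 1},
    "B": {"citations": 30, "industry": 5},
    "C": {"citations": 10, "industry": 9},
}
print(rank_units(departments, baseline))
print(rank_units(departments, industry_view))
```

Standardizing first keeps metrics with large raw scales (such as downloads) from dominating the blend simply because of their units.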

To accomplish all that, however, the metric battery needs to be rich and diverse, and the weight of each metric in the battery has to be initialized in a joint multiple regression on the panel rankings. It is very much to be hoped that HEFCE will commission this all-important validation exercise on the invaluable and unprecedented database they will have with the unique, one-time parallel panel/metric RAE in 2008.

That is the main point. There are also some less central points:

The report says -- a priori -- that REF will not consider journal impact factors (average citations per journal), nor author impact (average citations per author): only average citations per paper, per department. This is a mistake. In a metric battery, these other metrics can be included, to test whether they make any independent contribution to the predictivity of the battery. The same applies to author publication counts, number of publishing years, number of co-authors -- even to impact before the evaluation period. (Possibly included vs. non-included staff research output could be treated in a similar way, with number and proportion of staff included also being metrics.)
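One way to include these metrics in the battery, rather than excluding them a priori, is to compute them alongside citations per paper and let the joint regression decide whether they add anything. In this hypothetical Python sketch, the "journal average" and "author average" are simply mean citations per paper for each journal and each author over the same paper set. That is a simplification: the actual journal impact factor uses a two-year citation window. The paper records are invented.

```python
from collections import defaultdict
from statistics import mean


def grouped_mean_citations(papers, keys_of):
    """Mean citations per paper for each group; keys_of(paper) lists the paper's groups."""
    groups = defaultdict(list)
    for p in papers:
        for k in keys_of(p):
            groups[k].append(p["citations"])
    return {k: mean(v) for k, v in groups.items()}


def department_battery(papers):
    """Per-department candidate metrics, to be validated jointly rather than pre-selected."""
    per_journal = grouped_mean_citations(papers, lambda p: [p["journal"]])
    per_author = grouped_mean_citations(papers, lambda p: p["authors"])
    by_dept = defaultdict(list)
    for p in papers:
        by_dept[p["department"]].append(p)
    battery = {}
    for dept, ps in by_dept.items():
        authors = {a for p in ps for a in p["authors"]}
        battery[dept] = {
            "citations_per_paper": mean(p["citations"] for p in ps),
            "mean_journal_average": mean(per_journal[p["journal"]] for p in ps),
            "mean_author_average": mean(per_author[a] for a in authors),
            "paper_count": len(ps),
        }
    return battery


papers = [
    {"department": "Physics", "journal": "J1", "authors": ["ann"], "citations": 10},
    {"department": "Physics", "journal": "J2", "authors": ["ann", "bob"], "citations": 4},
    {"department": "Chemistry", "journal": "J1", "authors": ["cy"], "citations": 2},
]
print(department_battery(papers))
```

Each column of this battery would then enter the regression as a separate predictor, so its independent contribution (possibly zero) is measured rather than assumed.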

The large battery of jointly validated and weighted metrics will make it possible to correct the potential bias from relying too heavily on prior funding, even if it is highly correlated with the panel rankings, in order to avoid a self-fulfilling prophecy which would simply collapse the Dual RAE/RCUK funding system into just a multiplier on prior RCUK funding.

Self-citations should not be simply excluded: they should be included independently in the metric battery, for validation. So should measures of the size of the citation circle (endogamy) and degree of interdisciplinarity.
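Keeping self-citations as their own column, instead of deleting them, only requires splitting each paper's citation count in two. This Python sketch assumes a citation counts as a self-citation when the citing and cited papers share at least one author, which is one common convention among several; the papers and author identifiers are invented.

```python
from collections import defaultdict


def split_citations(citations, authors_of):
    """Split each cited paper's citations into self- and non-self-citations.

    citations:  list of (citing_paper, cited_paper) pairs
    authors_of: dict paper -> set of author identifiers
    Assumption: a citation is a self-citation if the two papers share any author.
    """
    counts = defaultdict(lambda: {"self": 0, "other": 0})
    for citing, cited in citations:
        shared = authors_of[citing] & authors_of[cited]
        counts[cited]["self" if shared else "other"] += 1
    return dict(counts)


authors_of = {
    "p1": {"a1", "a2"},
    "p2": {"a1"},
    "p3": {"a3"},
}
citations = [("p2", "p1"), ("p3", "p1"), ("p3", "p2")]
print(split_citations(citations, authors_of))
```

Both counts then go into the battery, so validation can show how much, if anything, self-citations add to predicting the criterion.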

Nor should the metric battery omit the newest and some of the most important metrics of all, the online, web-based ones: downloads of papers, links, growth rates, decay rates, hub/authority scores. All of these will be provided by the UK's growing network of Institutional Repositories. These will be the record-keepers -- for both the papers and their usage metrics -- and the access-providers, thereby maximizing their usage metrics.
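The post does not say how hub/authority scores would be computed. One standard choice is Kleinberg's HITS algorithm, sketched here in Python under that assumption on a small invented citation graph. A node's authority score grows with the hub scores of the nodes linking to it, and its hub score grows with the authority scores of the nodes it links to.

```python
from math import sqrt


def hits(links, iterations=50):
    """Hub and authority scores (HITS) on a directed link or citation graph.

    links: dict node -> list of nodes it links to (or cites)
    """
    nodes = set(links) | {t for targets in links.values() for t in targets}
    hub = {n: 1.0 for n in nodes}
    auth = {n: 1.0 for n in nodes}
    for _ in range(iterations):
        # Authority: sum of the hub scores of nodes pointing to this node.
        auth = {n: 0.0 for n in nodes}
        for src, targets in links.items():
            for t in targets:
                auth[t] += hub[src]
        norm = sqrt(sum(v * v for v in auth.values())) or 1.0
        auth = {n: v / norm for n, v in auth.items()}
        # Hub: sum of the authority scores of the nodes this node points to.
        hub = {n: sum(auth[t] for t in links.get(n, [])) for n in nodes}
        norm = sqrt(sum(v * v for v in hub.values())) or 1.0
        hub = {n: v / norm for n, v in hub.items()}
    return hub, auth


citation_links = {
    "paper1": ["paper3"],
    "paper2": ["paper3", "paper4"],
    "paper3": ["paper4"],
    "paper4": [],
}
hubs, authorities = hits(citation_links)
print(sorted(authorities, key=authorities.get, reverse=True))
```

In a repository setting the same function could run over web links between deposited papers as well as over citations; growth and decay rates of downloads would be separate columns in the battery.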

REF should put much, much more emphasis on ensuring that the UK network of Institutional Repositories systematically and comprehensively records its research output and its metric performance indicators.

But overall, thumbs up for a promising initiative that is likely to serve as a useful model for the rest of the research world in the online era.

References

Harnad, S., Carr, L., Brody, T. and Oppenheim, C. (2003) Mandated online RAE CVs Linked to University Eprint Archives: Improving the UK Research Assessment Exercise whilst making it cheaper and easier. Ariadne 35.

Brody, T., Kampa, S., Harnad, S., Carr, L. and Hitchcock, S. (2003) Digitometric Services for Open Archives Environments. Proceedings of European Conference on Digital Libraries 2003, pp. 207-220, Trondheim, Norway.

Harnad, S. (2006) Online, Continuous, Metrics-Based Research Assessment. Technical Report, ECS, University of Southampton.

Harnad, S. (2007) Open Access Scientometrics and the UK Research Assessment Exercise. Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1), pp. 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds.

Brody, T., Carr, L., Harnad, S. and Swan, A. (2007) Time to Convert to Metrics. Research Fortnight pp. 17-18.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

See also: Prior Open Access Archivangelism postings on RAE and metrics

Stevan Harnad

The following had been based on the earlier press release alone, before seeing the full report. The press release had said:

"For the science-based disciplines, a new bibliometric indicator of research quality is proposed, based on the extent to which research papers are cited by other publications. This new indicator will be combined with research income and research student data, to drive the allocation of HEFCE research funding in these disciplines."

"For the arts, humanities and social sciences (where quantitative approaches are less developed) we will develop a light touch form of peer review, though we are not consulting on this aspect at this stage."

Comments:

(1) Citations, prior funding and student counts are fine, as candidate metrics. (Citations, suitably processed and contextually weighted, are a strong candidate, but it is important not to give prior funding too high a weight, or else it collapses the UK's Dual RAE/RCUK funding system into just a multiplier effect on prior RCUK funding.)

(2) But where are the rest of the candidate metrics: article counts, book counts, years publishing, co-author counts, co-citations, download counts, link counts, download/citation growth rates, decay rates, hub/authority index, endogamy index...? There is such a rich and diverse set of candidate metrics. It is completely arbitrary, and unnecessary, to restrict consideration to just an a priori three.

(3) And how and against what are the metrics to be validated? The obvious first choice is the RAE panel rankings themselves: RAE has been relying on them unquestioningly for two decades now, and the 2008 RAE will be a (last) parallel panel/metric exercise.

(4) The weights on a rich, diverse battery of metrics should be jointly validated (using multiple regression analysis) against the panel rankings to initialize the weight of each metric, and then the weights should be calibrated and adjusted field by field, with the help of peer panels.

(5) It is not at all obvious that the humanities and social sciences do not have valid, predictive metrics too: They too write and read and use and cite articles and books, receive research funding, train postgraduate students, etc.

The metric RAE should be open-minded, open-ended and forward-looking in the digital online era, putting as many candidates as possible into the metric equation, testing the outcome against panel rankings, and then calibrating them, continuously, field by field, so as to optimise them. This is not the time to commit, a priori, to just 3 metrics, not yet validated and weighted, in science fields, nor to stick to panel review in other fields, again because of a priori assumptions about their metrics.

Let 1000 metric flowers bloom, initialize their weights against the parallel panel rankings (and any other validated or face-valid criteria) and then set to work optimising those initial weights, assessing continuously, field by field, across time. The metrics should be stored and harvestable from each institution's Open Access Institutional Repository, along with the research publications and data on which they are based.

American Scientist Open Access Forum

Sunday, November 18. 2007

Open Access in the Last Millennium

I thought that as the American Scientist Open Access Forum approaches its 10th year, readers might find it amusing (and perhaps enlightening) to see where the discourse stood 20 years ago. That was before the Web, before online journals, and before Open Access -- yet many of the same issues were already being debated.

Although I might have traced it back still further, to BBS Open Peer Commentary, 30 years ago, and although my first substantive posting was September 27 1986, for me it feels as if it all began with a jolt on November 19 1986, on sci.lang, with "Saumya,...you have shit-for-brains" -- which led to "Skywriting" (c. 1987, unpublished, unposted), which turned into "Scholarly Skywriting" (1990), Psycoloquy (1991), "PostGutenberg Galaxy" (1991), CogPrints (1997), the Self-Archiving FAQ (as of 1997), the AmSci Forum (1998), the critique of the e-biomed proposal (1999), EPrints (2000), mandates and metrics (2001), and then the BOAI (2002).

See how much of it is already lurking in this 1990 posting on COMMED:

Date: Tues, Mar 13 1990 4:12 am

From: har...@Princeton.EDU (Stevan Harnad)

To: loeb@geocub

Subject: Re: Journals

Cc: PA...@phoenix.cambridge.ac.uk, jour_...@nyuacf.BITNET

ON THE SCHOLARLY AND EDUCATIONAL POTENTIAL OF MULTIPLE EMAIL NETWORKS

[From: COMMED]

Stevan Harnad

Princeton University

Gerald M. Phillips, Professor, Speech Communication, Pennsylvania State University (G...@PSUVM.BITNET) wrote on Commed against the idea of "on-line journals." His critique contains enough of the oft-repeated (and I think erroneous) criticisms of the new medium that I think it's worth a point by point rebuttal. I write as the editor, for over a decade now, of a refereed international journal published by Cambridge University Press (in the conventional paper/print medium), but also as an impassioned advocate of multiple-email networks and their (I think) revolutionary potential. I am also the new moderator of PSYCOLOQUY, an email list devoted to scholarly electronic discussion in psychology and related disciplines. Professor Phillips wrote:

> There is [1] an explicit hostility to print media on computer networks. There is a crisis in the publishing industry because of [2] technological innovations, [3] TV, and second hand booksellers, and among book reviewers there is consternation because [4] the number of journals proliferates and the quality of the texts declines. I am responding to the proposal to establish an electronic journal, and I am responding negatively.

Not one of these points speaks against electronic journals; rather, they are points in their favor: (1) The hostility to print is justified, inasmuch as it wastes time and resources and confers no advantage (which cannot be duplicated by resorting to hard copy when needed anyway). (2) Technological innovations such as photo-copying are problems for the print media -- unsolved and probably unsolvable -- but not for the virtual media, whose economics will be established pre-emptively along more realistic lines, given the new technology. The passive CRTs in (3) TV may be competing with the written word, but the interactive CRTs in the electronic media are in a position to fight back. (4) Word glut and quality decline are problems with the message (and how we control its quality -- a real problem, in which I am very interested), not with the medium. This leaves nothing of this first list of objections. Let's go on:

> The book, magazine, or journal is still the most convenient learning center known to civilization. It is portable, requires no power supply, is easily stored, and one can write comments on the pages without resorting to hypertext.

These arguments would have been just as apt if applied to Gutenberg on behalf of the illuminated manuscript, or against writing itself, in favor of the oral tradition. Other than habit, they have no logical or practical support at all. And the clincher is that the situation is not "either/or." To the extent that people are addicted to their marginal doodling (or to electricity-free yurts), hard copy will always be available as a supplement.

> Furthermore, the contemplation that enters composition of the typical article is important. Hasty publication results in error and sometimes danger. I urge examination of the editorial policies of NEW ENGLAND JOURNAL OF MEDICINE or DAEDALUS as examples of the best editorial policies. It is crucial in publishing to have careful editing and responsible writing.

There is one logical error and one non sequitur here: (i) Making it POSSIBLE for people to communicate faster and on a more global scale does not imply that they are no longer allowed to wait and reflect as long as they wish! (ii) Ceterum censeo: Quality control is a medium-independent problem; I have plenty of ideas about how to implement peer review in this medium even more effectively than in the print media.

> I do not wish to indict users of electronic media, but I have encountered a fair share of irresponsible people who write out of passion or worse -- cuteness.

The problem here is a demographic one, having to do with the anarchic initial conditions in which the new medium was developed. "Flaming" was what the first electronic discussion was called, and it began as spontaneous combustion among the creators of the medium (computer hackers, for the most part) and students (who have a lot of idle time on their hands). The form of trivial pursuit that ensued is no more representative of the intrinsic possibilities of this medium than it would have been if we had left it up to Gutenberg and a legion of linotype operators to decide for us all what should appear on the printed page. Again, the problem is with implementation and quality control, not the medium itself.

> I realize how important some exchanges are and I will argue with data and without passion for the efficacy of applying CMC to some aspects of classroom operation. Computerized cardfiles and other databases are essential to good scholarship. Networks like AMANET and similar medical operations provide important information conveniently. What characterizes a totally responsible network, however, is the willingness to spend money to make it work. Accumulating a database and monitoring its contents is crucial, for users of a network must have confidence in what they read.

These applications are all commendable, but supremely unimaginative. The real revolutionary potential of electronic network communication is in scholarship rather than education. I am convinced that the medium is better matched to the pace and scope and interactiveness of human mentation than any of its predecessors. In fact, it is as much of a milestone as the advent of writing, and finally returns the potential pace of the interaction -- which writing and print slowed down radically -- to the tempo of the natural speech from which so much of our cognitive capacity arose.

> A great many scholars (mostly untenured) rail at the policies of contemporary scholarly journals, and often they are "on target." Journals sometimes use an "old boy" network to exclude new and vital ideas. Journals are often ponderously slow and it is difficult for many people to take editorial criticism. On the other hand, journals protect us from egregious error and libel, and the copyright laws protect us from plagiarism.

But the egregious error here is to fail to realize that electronic networks can exercise peer review just as rigorously (or unrigorously) as any other medium. And just as there are hierarchies of print journals (ranked with respect to how rigorously they are refereed), this can be done here too, including levels at which manuscripts or ideas are circulated to one's peers for pre-referee scrutiny, as in symposia and conferences, or even informal discussion. The possibilities are enormous; objections like the above ones (and they are not unique to Professor Phillips) serve only to demonstrate how the entrenched old medium and its habits can blind us to promising alternatives.

> Plagiarism is a major concern in using an electronic network. I am hesitant to share material that might be useful because my copyrights are not protected on this network. I enjoy the chitchat effect, but I have told several people who have contacted me about my "on-line" course, that I would be happy to share articles or have them come out and observe. I would not attempt to offer advice using this medium. It would be guaranteed to be half-baked and inapposite.

I have two replies here; one objective and quite decisive, the other a somewhat subjective observation: There are ways to implement peer discussion that will preserve priority as safely as the ordinary mail, telephone and word-processor media (none completely immune to techno-vandalism these days, by the way) to which we already entrust our prepublication ideas and findings. I'll discuss these in the future. As food for thought, consider that it would be simple to implement a network with read/write access only for a group of peers in a given specialty, where every posting is seen by everyone who matters in the specialty (and is archived for the record, to boot). These are the people who ASSIGN the priorities. A wider circle might have read-only access, and perhaps one of them might try (and even succeed) to purloin an idea and publish it as his own -- either in a low-level print journal or a low-level electronic group. So what? The peers saw it first, and know whence it came, and where and when, with the archive to confirm it (printed out in hard copy, if you insist!). That's the INTRINSIC purpose of scholarly priority. If some enterprising vita-stuffer up for promotion at New Age College pries the covers off my book and substitutes his own, that's not a strike against the printed medium, is it?

Now the subjective point: It seems paradoxical, to say the least, to be worried about word glut and quality decline at the same time as being preoccupied with priority and plagiarism. Here is some more food for thought: The few big ideas that there are will not fail to be attributed to their true source as a result of the net. As to the many little ones (the "minimal publishable units," or what have you), well, I suppose that a scholar can spend his time trying to protect those too -- or he can be less niggardly with them in the hope that something bigger might be spawned by the interaction.

It's all a matter of scale. I'm inclined to think that for the really creative thinker, ideas are not in short supply. It's the tree that bears the fruit that matters: "He who steals my apples, steals trash," or something like that. The rival anecdote is that Einstein was asked in the fifties by some tiresome journalist -- a harbinger of our self-help/new-age era -- what activity he was usually engaged in when he got his creative ideas (shaving? showering? walking? sleeping?), and he replied that he really couldn't say, because he had only had one or two creative ideas in his entire lifetime... (Nor was he particularly secretive about them, I might add, engaging in intense scholarly correspondence about them with his peers, most of whom could not even grasp them, much less pass them off as their own.)

> While serving on a promotion and tenure committee, I opposed consideration of materials "published" on-line in examining the credentials of candidates. That is an antediluvian view, I know, but in the sciences especially, accuracy and responsibility are critical and to date, only the referee process gives us any assurance at all.

Too bad. Promotion/tenure review is a form of peer review too, and it is not so oracular a machine that it can afford to ignore potentially informative data. I, for one, might even consider looking at unpublished (hence, a fortiori, unrefereed) manuscripts if there appeared to be grounds for doing so, in order to make a more informed decision. But never mind; if the direction I am advocating prevails, peer review, such as it is, will soon be alive and well on the electronic networks, and contributions will be certifiably CV-worthy.

> Interchanges like this are useful. We get a chance to exchange views with people we do not know and often we find some intriguing possibilities in these notes and messages. But I still do not know who I am communicating with and I have no confirmation of their data. I can use caveat emptor on their ideas, but I cannot give them professional credit for them, nor can I claim any for my own. In short, here is your extreme argument AGAINST electronic journals.

There is, I am told, a complexity-theoretic bottom line in networking called the "authentication problem." I can in principle post a libelous, plagiaristic message in your name without being detected; hence it will be difficult to formulate enforceable laws to regulate the net. In practice, however, this need not be a problem, so look on the bright side. I really am the one indicated on my login. And even if I weren't, it hardly matters for THIS discussion (as opposed to the future peer-reviewed ones mentioned earlier). All that matters is my message, which can stand on its own merits as a counterargument FOR electronic journals.

Stevan Harnad

Gerald M. Phillips

> Two points you did not attack were (1) the problem of protection of copyrights and (2) the convenience of books. Note, please, that read-only does not protect anyone so long as personal computers have print screen keys.

Currently, copyright is protected if you copyright a hard copy of what you have written. Anyone is free to do this prior to every screenful, but it sure would slow "skywriting" down to the old terrestrial pace. In practice, however, we don't bother to copyright until we're much further downstream: Our scholarly correspondence, our conference papers and our preliminary drafts circulated for "comment without quotation" do not enjoy copyright, so why be more protective of electronic drafts? Because they're easier to abscond with? But, as I wrote earlier, if the primary read/write network to which a draft is posted consists of all the peers of the realm, and they see it first, and it's archived when they see it, what is there to fear? What better way to establish priority? Isn't it their eyes that matter?

Books are much more a habit than a convenience. I'm sure that if you gave me an itemized list of their virtues I could match them (and then some) with the merits of electronic text. (E.g., books are portable, but they have to be physically duplicated and lugged; in principle, everything written could be available everywhere there's a plug or antenna, to anyone, anytime... etc.)

> Furthermore, the overwhelming majority of faculty do not participate in networks. It is somewhat like the problem people are having with VCRs. Most people can learn how to play movies. Few bother to learn how to record from broadcasts. Most PC users really have expensive typewriters. I know -- it is their own fault. And it is probably different in the sciences, but it seems to me that designing access to knowledge for a minority will only widen the ignorance gap.

Computers and networks have become so friendly that everyone is just a 2-minute demo away from sufficient facility for full access. The barrier is so tiny that it's absurd to think that it can hold people back, particularly once the revolutionary potential of scholarly skywriting is demonstrated and a quorum of the peers of each realm become addicted. The "virtual" environment can mimic what we're used to as closely as necessary to mediate a total transition. In fact, nothing has a better chance to NARROW the ignorance gap than the global, interactive and virtually instantaneous airwaves of the friendly skies.

> I'd be interested in your proposals about ensuring quality. I am not so sure of your proposals re: read only, but I'd be happy to look at them. I am not a Luddite. I believe I have the largest enrollment class learning entirely via computer-mediated communication. And it is a performance class (group problem solving). It is both popular and effective, but the computer has been adapted to the needs of the class, not the reverse. I think that putting journals on-line (at least at the moment) is a case of "we have the machinery, why not use it?"

The idea is to have a vertical (peer expertise) and a horizontal (temporal-archival) dimension of quality control. The vertical dimension would be a hierarchy of expertise, with read/write access for an accredited group of peers at a given level and read-only access at the level immediately below it, but with the right to post to a peer at the next higher level, who can in turn post your contribution for you, if he judges that it is good enough. (A record of valuable mediated postings could result in being voted up a level.) A single editor, or an editorial board, is simply a special case of this very same mechanism, where one person or only a few mediate all writing privileges.
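The vertical access rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a specification of any real system: the `Member` class, the numeric levels (higher means more expert), the functions `can_read`, `can_write`, `submit` and `promote_if_earned`, and the promotion threshold are all hypothetical, and it is an assumption that members above a given level may also read and post at that level.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Member:
    """A participant in the hypothetical expertise hierarchy; higher level = more expert."""
    name: str
    level: int


def can_write(member: Member, group_level: int) -> bool:
    # Accredited peers at this level have read/write access
    # (assumption: members of higher levels may post here too).
    return member.level >= group_level


def can_read(member: Member, group_level: int) -> bool:
    # Read-only access extends to the level immediately below.
    return member.level >= group_level - 1


def submit(author: Member, group_level: int,
           sponsor: Optional[Member] = None, sponsor_approves: bool = False) -> bool:
    """Return True if the author's contribution gets posted to the group at group_level."""
    if can_write(author, group_level):
        return True  # posted directly
    # A read-only member may route the contribution through a peer who can write here;
    # it is posted only if that peer judges it good enough.
    return (can_read(author, group_level)
            and sponsor is not None
            and can_write(sponsor, group_level)
            and sponsor_approves)


def promote_if_earned(member: Member, accepted_mediated_posts: int, threshold: int = 5) -> Member:
    # Hypothetical stand-in for "being voted up a level": a fixed count of
    # accepted mediated postings replaces an actual vote by the peers.
    if accepted_mediated_posts >= threshold:
        return Member(member.name, member.level + 1)
    return member
```

In this model a single editor is simply the case in which only one member can write at the group's level, so every contribution from below must pass through that one sponsor.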

That's the vertical hierarchy, based on degrees of expertise, specialization, and record of contributions in a given field. In principle, this hierarchy can trickle down all the way to general access for nonspecialists and students at the lowest read/write level (the equivalent of "flaming," and, unfortunately, the only level that exists among the "unmoderated" groups on the net currently, while in today's so-called "moderated" groups all contributions are filtered through one person, usually one with no special qualifications or answerability).

So far, even among the elite, this would still be just brainstorming, at the pilot stage of inquiry. The horizontal dimension would then take the surviving products of all this skywriting, referee them the usual way (by having them read, criticized and revised under peer scrutiny) and then archive them (electronically) according to the level of rigor of the refereeing system they have gone through (corresponding, more or less, to the current "prestige hierarchy" and level of specialization among print journals). Again, an unrefereed "vanity press" could be the bottom of the horizontal hierarchy.

> And please address the issue of those of us who make our living out of the printed word and fear plagiarism above earthquakes and forest fires.

> Gerald M. Phillips, Pennsylvania State University

I imagine that a different system of values and expectations will be engendered by the net. One may have to make one's reputation increasingly by being a fertile collaborator rather than a prolific monad. I think interactive productivity ("interproductivity") will turn out to be just as viable, answerable and rewardable a way of establishing one's intellectual territory as the old way; it's just that the territory will be much less exclusive, more overlapping and interdependent. That's the cumulative direction in which inquiry has been heading all along anyway.

As to words themselves: I think it will be possible to protect them just as well as in the old media. The ones who are really able to use the language (like the ones who have really new ideas or findings) will still be a tiny minority, as they are now and always will be, and we'll know even better who they are and what they have written. It'll be easier to steal a few of their screenfuls for lowly use, but, as always, it will be impossible to steal their source. As to the rest -- marginal ideas and marginal prose -- I can't really work up a sense of urgency about them; it seems to me, however, that it will be just as easy as before to make sure they get their dubious due, in terms of their official standing in the two-dimensional hierarchy.

Stevan Harnad

Harnad, S. (1990) Scholarly Skywriting and the Prepublication Continuum of Scientific Inquiry. Psychological Science 1: 342 - 343 (reprinted in Current Contents 45: 9-13, November 11 1991).

Stevan Harnad

Harnad, S. (1991) Post-Gutenberg Galaxy: The Fourth Revolution in the Means of Production of Knowledge. Public-Access Computer Systems Review 2 (1): 39 - 53 (also reprinted in PACS Annual Review Volume 2 1992; and in R. D. Mason (ed.) Computer Conferencing: The Last Word. Beach Holme Publishers, 1992; and in: M. Strangelove & D. Kovacs: Directory of Electronic Journals, Newsletters, and Academic Discussion Lists (A. Okerson, ed), 2nd edition. Washington, DC, Association of Research Libraries, Office of Scientific & Academic Publishing, 1992); and in Hungarian translation in REPLIKA 1994; and in Japanese in Research and Development of Scholarly Information Dissemination Systems 1994-1995.

Harnad, S. (1995) Universal FTP Archives for Esoteric Science and Scholarship: A Subversive Proposal. In: Ann Okerson & James O'Donnell (Eds.) Scholarly Journals at the Crossroads; A Subversive Proposal for Electronic Publishing. Washington, DC., Association of Research Libraries, June 1995.

American Scientist Open Access Forum

Thursday, November 15. 2007

Publishing Management Consultant: "Open Access Is Research Spam"

Joseph Esposito is an independent management consultant (the "portable CEO") with a long history in publishing, specializing in "interim management and strategy work at the intersection of content and digital technology."

SUMMARY: Joseph Esposito, a management consultant, says Open Access (OA) is "research spam." But OA's explicit target content is all 2.5 million peer-reviewed articles published annually in the world's 25,000 peer-reviewed research journals. (So either all research is spam or OA is not spam after all!).

Esposito says researchers' problem isn't access to journal articles (they already have that): rather, it's not having the time to read them. This will come as news to the countless researchers worldwide who are denied access daily to the articles in the journals their institution cannot afford, and to the authors of those articles, who are losing all that potential research impact.

Search engines find it all, tantalizingly, but access depends on being able to afford the subscription tolls. Esposito also says OA is just for a small circle of peers: How big does he imagine the actual usership of most journal articles is?

Esposito applauds the American Chemical Society (ACS) executives' bonuses for publishing profit, even though ACS is supposed to be a Learned Society devoted to maximizing research access, usage and progress, not a commercial company devoted to deriving profit from restricting research access only to those who can afford to pay them for it (and for their bonuses).

Esposito describes the efforts of researchers to inform their institutions and funders of the benefits of mandating OA as lobbying, but he does not attach a name to what anti-OA publishers are doing when they hire expensive pit-bull consultants to spread disinformation about OA in an effort to prevent OA self-archiving from being mandated. (Another surcharge for researchers, in addition to paying for their bonuses?)

Esposito finds it tautological that surveys report that authors would comply with OA mandates, but he omits to mention that over 80% of those researchers report that they would self-archive willingly if mandated. (And where does Esposito think publishers would be without existing publish-or-perish mandates?)

Esposito is right, though, that OA is a matter of time -- but not reading time, as he suggests. The only thing standing between the research community and 100% OA to all of its peer-reviewed research article output is the time it takes to do the few keystrokes per article it takes to provide OA. That is what the mandates (and the metrics that reward them) are meant to accomplish at long last.

In an interview by The Scientist (a follow-up to his article, "The nautilus: where - and how - OA will actually work"), Esposito says Open Access (OA) is "research spam" -- making unrefereed or low quality research available to researchers whose real problem is not insufficient access but insufficient time.

In arguing for his "model," which he calls the "nautilus model," Esposito manages to fall (not for the first time) into many of the longstanding fallacies that have been painstakingly exposed and corrected for years in the self-archiving FAQ. (See especially Peer Review, Sitting Pretty, and Info-Glut.)

Like so many others, with and without conflicting interests, Esposito does the double conflation (1) of OA publishing (Gold OA) with OA self-archiving (of non-OA journal articles) (Green OA), and (2) of peer-reviewed postprints of published articles with unpublished preprints. It would be very difficult to call OA research "spam" if Esposito were to state, veridically, that Green OA self-archiving means making all articles published in all peer-reviewed journals (whether Gold or not) OA. (Hence either all research is spam or OA is not spam after all!).

Instead, Esposito implies that OA is only or mainly for unrefereed or low quality research, which is simply false: OA's explicit target is the peer-reviewed, published postprints of all the 2.5 million articles published annually in all the planet's 25,000 peer-reviewed journals, from the very best to the very worst, without exception. (The self-archiving of pre-refereeing preprints is merely an optional supplement, a bonus; it is not what OA is about, or for.)

Esposito says researchers' problem is not access to journal articles: They already have that via their institution's journal subscriptions; their real problem is not having the time to read those articles, and not having the search engines that pick out the best ones.

Tell that to the countless researchers worldwide who are denied access daily to the specific articles they need in the journals to which their institution cannot afford to subscribe. (No institution comes anywhere near being able to subscribe to all 25,000, and many are closer to 250.)

And tell it also to the authors of all those articles to which all those would-be users are being denied access; their articles are being denied all that research impact. Ask users and authors alike whether they are happy with affordability being the "filter" determining what can and cannot be accessed. Search engines find it all for them, tantalizingly, but whether they can access it depends on whether their institutions can afford a subscription.

Esposito says OA is just for a small circle of peers ("6? 60? 600? but not 6000"): How big does he imagine the actual usership of most of the individual 2.5 million annual journal articles to be? Peer-reviewed research is an esoteric, peer-to-peer process, for the contents of all 25,000 journals: research is conducted and published, not for royalty income, but so that it can be used, applied and built upon by all interested peer specialists and practitioners, to the benefit of the tax-payers who fund their research; the size of the specialties varies, but none are big, because research itself is not big (compared to trade, and trade publication).

Esposito applauds the American Chemical Society (ACS) executives' bonuses for publishing profit, oblivious to the fact that the ACS is supposed to be a Learned Society devoted to maximizing research access, usage and progress, not a commercial company devoted to deriving profit from restricting research access only to those who can afford to pay them for it.

Esposito also refers (perhaps correctly) to researchers' amateurish efforts to inform their institutions and funders of the benefits of mandating OA as lobbying -- passing in silence over the fact that the real lobbying pro's are the wealthy anti-OA publishers who hire expensive pit-bull consultants to spread disinformation about OA in an effort to prevent Green OA from being mandated.

Esposito finds it tautological that surveys report that authors would comply with OA mandates ("it's not news that people would comply with a requirement"), but he omits to mention that most researchers surveyed recognised the benefits of OA, and over 80% reported they would self-archive willingly if it was mandated, only 15% stating they would do so unwillingly. (One wonders whether Esposito also finds the existing and virtually universal publish-or-perish mandates of research institutions and funders tautological -- and where he thinks the publishers for whom he consults would be without those mandates.)

Esposito is right, though, that OA is a matter of time -- but not reading time, as he suggests. The only thing standing between the research community and 100% OA to all of its peer-reviewed research output is the time it takes to do a few keystrokes per article. That, and only that, is what the mandates are all about, for busy, overloaded researchers: Giving those few keystrokes the priority they deserve, so they can at last start reaping the benefits -- in terms of research access and impact -- that they desire. The outcome is optimal and inevitable for the research community; it is only because this was not immediately obvious that the outcome has been so long overdue.

But the delay has been in no small part also because of the conflicting interests of the journal publishing industry for which Esposito consults. So it is perhaps not surprising that he should perceive it otherwise, unperturbed if things continue at a (nautilus) snail's pace for as long as possible...

Stevan Harnad

American Scientist Open Access Forum

Friday, November 9. 2007

"Bibliometric Distortion?" OA is the Best Safeguard


Comment on: "Bibliometrics could distort research assessment" Guardian Education, Friday 9 November 2007 [but see follow-up]

Yes, any system (including democracy, health care, welfare, taxation, market economics, justice, education and the Internet) can be abused. But abuses can be detected, exposed and punished, and this is especially true in the case of scholarly/scientific research, where "peer review" does not stop with publication, but continues for as long as research findings are read and used. And it's truer still if it is all online and openly accessible.

The researcher who thinks his research impact can be spuriously enhanced by producing many small, "salami-sliced" publications instead of fewer substantial ones will stand out against peers who publish fewer, more substantial papers. Paper lengths and numbers are metrics too, hence they too can be part of the metric equation. And if most or all peers do salami-slicing, then it becomes a scale factor that can be factored out (and the metric equation and its payoffs can be adjusted to discourage it).

Citations inflated by self-citations or co-author group citations can also be detected and weighted accordingly. Robotically inflated download metrics are also detectable, nameable and shameable. Plagiarism is detectable too, when all full-text content is accessible online.

The important thing is to get all these publications as well as their metrics out in the open for scrutiny by making them Open Access. Then peer and public scrutiny -- plus the analytic power of the algorithms and the Internet -- can collaborate to keep them honest.

Harnad, S. (2007) Open Access Scientometrics and the UK Research Assessment Exercise. In Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1), pp. 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds.

Stevan Harnad

American Scientist Open Access Forum

Thursday, November 8. 2007

UUK report looks at the use of bibliometrics

Comments on UUK Press Release 8 November 2007:

What metrics count as "bibliometrics"? Do downloads? Hubs/authorities? Interdisciplinarity metrics? Endogamy/exogamy metrics? Chronometrics, semiometrics?

> UUK report looks at the use of bibliometrics

> "This report will help Universities UK to formulate its position on the development of the new framework for replacing the RAE after 2008."

> Some of the points for consideration in the report include:

> Bibliometrics are probably the most useful of a number of variables that could feasibly be used to measure research performance.

> There is evidence that bibliometric indices do correlate with other, quasi-independent measures of research quality - such as RAE grades - across a range of fields in science and engineering.

Meaning that citation counts correlate with panel rankings in all disciplines tested so far. Correct.

> There is a range of bibliometric variables as possible quality indicators. There are strong arguments against the use of (i) output volume (ii) citation volume (iii) journal impact and (iv) frequency of uncited papers.

The "strong" arguments are against using any of these variables alone, or without testing and validation. They are not arguments against including them in the battery of candidate metrics to be tested, validated and weighted against the panel rankings, discipline by discipline, in a multiple regression equation.

> 'Citations per paper' is a widely accepted index in international evaluation. Highly-cited papers are recognised as identifying exceptional research activity.

Citations per paper is one (strong) candidate metric among many, all of which should be co-tested, via multiple regression analysis, against the parallel RAE panel rankings (and other validated or face-valid performance measures).

> Accuracy and appropriateness of citation counts are a critical factor.

Not clear what this means. ISI citation counts should be supplemented by other citation counts, such as Scopus, Google Scholar, Citeseer and Citebase: each can be a separate metric in the metric equation. Citations from and to books are especially important in some disciplines.

> There are differences in citation behaviour among STEM and non-STEM as well as different subject disciplines.

And probably among many other disciplines too. That is why each discipline's regression equation needs to be validated separately. This will yield a different constellation of metrics as well as of beta weights on the metrics, for different disciplines.
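To make the "multiple regression equation" mentioned above more concrete, the sketch below fits ordinary least squares weights, separately for each discipline, predicting a panel ranking from a vector of candidate metrics. It is a minimal sketch in plain Python (normal equations solved by Gaussian elimination), assuming panel rankings can be treated as numeric scores; the function names and the metric columns are hypothetical, and a real validation exercise would also need significance testing, checks for collinearity among the metrics, and out-of-sample validation.

```python
from typing import Dict, List, Sequence, Tuple


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: some metrics are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_weights(metrics: Sequence[Sequence[float]], ranking: Sequence[float]) -> List[float]:
    """OLS fit of ranking ~ w0 + w1*metric1 + w2*metric2 + ...; returns [w0, w1, ...]."""
    X = [[1.0] + list(row) for row in metrics]  # prepend an intercept column
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(X, ranking)) for i in range(k)]
    return solve(xtx, xty)


def fit_by_discipline(
    data: Dict[str, Tuple[Sequence[Sequence[float]], Sequence[float]]]
) -> Dict[str, List[float]]:
    """Fit a separate equation, with its own beta weights, for each discipline."""
    return {name: fit_weights(m, r) for name, (m, r) in data.items()}
```

Each discipline gets its own intercept and weights, which is the point made above: the same metric may carry a large weight in one field and a negligible one in another.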

> Metrics do not take into account contextual information about individuals, which may be relevant.

What does this mean? Age, years since degree, discipline, etc. are all themselves metrics, and can be added to the metric equation.

> They also do not always take into account research from across a number of disciplines.

Interdisciplinarity is a measurable metric. There are self-citations, co-author citations, small citation circles, specialty-wide citations, discipline-wide citations, and cross-disciplinary citations. These are all endogamy/exogamy metrics. They can be given different weights in fields where, say, interdisciplinarity is highly valued.
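The endogamy/exogamy distinctions above can be illustrated with a simple classifier. This is a hypothetical sketch: it assumes author names are already disambiguated and that a co-authorship history and a discipline label are available for each paper, and it collapses the list above into four categories (self, co-author, within-discipline, cross-discipline). Detecting citation circles or specialty boundaries would need more data than this. The `Citation` type, the function names and the weights in any usage are illustrative placeholders, not recommended values.

```python
from collections import Counter
from typing import Dict, FrozenSet, Iterable, NamedTuple


class Citation(NamedTuple):
    citing_authors: FrozenSet[str]
    citing_discipline: str
    cited_authors: FrozenSet[str]
    cited_discipline: str


def classify(c: Citation, past_coauthors: Dict[str, FrozenSet[str]]) -> str:
    """Label one citation, checking from most endogamous to most exogamous."""
    if c.citing_authors & c.cited_authors:
        return "self"  # an author cites their own work
    if any(past_coauthors.get(a, frozenset()) & c.cited_authors for a in c.citing_authors):
        return "coauthor"  # a citing author has previously co-authored with a cited author
    if c.citing_discipline == c.cited_discipline:
        return "within_discipline"
    return "cross_discipline"


def profile(cites: Iterable[Citation], past_coauthors: Dict[str, FrozenSet[str]]) -> Counter:
    """Count citations per category (an endogamy/exogamy profile)."""
    return Counter(classify(c, past_coauthors) for c in cites)


def weighted_citations(cites: Iterable[Citation],
                       past_coauthors: Dict[str, FrozenSet[str]],
                       weights: Dict[str, float]) -> float:
    """Sum of per-category weights, e.g. discounting self-citations in some fields."""
    return sum(weights[classify(c, past_coauthors)] for c in cites)
```

A field that values interdisciplinarity might, for instance, give `cross_discipline` a higher weight than `within_discipline`; the weights themselves would have to be validated like any other metric.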

> The definition of the broad subject groups and the assignment of staff and activity to them will need careful consideration.

Is this about RAE panels? Or about how to distribute researchers by discipline or other grouping?

> Bibliometric indicators will need to be linked to other metrics on research funding and on research postgraduate training.

"Linked"? All metrics need to be considered jointly in a multiple regression equation with the panel rankings (and other validated or face-valid criterion metrics).

> There are potential behavioural effects of using bibliometrics which may not be picked up for some years.

Yes, metrics will shape behaviour (just as panel ranking shaped behaviour), sometimes for the better, sometimes for the worse. Metrics can be abused -- but abuses can also be detected and named and shamed, so there are deterrents and correctives.

> There are data limitations where researchers' outputs are not comprehensively catalogued in bibliometrics databases.

The obvious solution for this is Open Access: All UK researchers should deposit all their research output in their Institutional Repositories (IRs). Where it is not possible to set access to a deposit as OA, access can be set as Closed Access, but the bibliographic metadata will be there. (The IRs will not only provide access to the texts and the metadata, but they will generate further metrics, such as download counts, chronometrics, etc.)

> The report comes ahead of the HEFCE consultation on the future of research assessment expected to be announced later this month. Universities UK will consult members once this is published.

Let's hope both UUK and HEFCE are still open-minded about ways to optimise the transition to metrics!

References

Harnad, S., Carr, L., Brody, T. & Oppenheim, C. (2003) Mandated online RAE CVs Linked to University Eprint Archives: Improving the UK Research Assessment Exercise whilst making it cheaper and easier. Ariadne 35.

Brody, T., Kampa, S., Harnad, S., Carr, L. and Hitchcock, S. (2003) Digitometric Services for Open Archives Environments. In Proceedings of European Conference on Digital Libraries 2003, pp. 207-220, Trondheim, Norway.

Harnad, S. (2006) Online, Continuous, Metrics-Based Research Assessment. Technical Report, ECS, University of Southampton.

Harnad, S. (2007) Open Access Scientometrics and the UK Research Assessment Exercise. In Proceedings of 11th Annual Meeting of the International Society for Scientometrics and Informetrics 11(1), pp. 27-33, Madrid, Spain. Torres-Salinas, D. and Moed, H. F., Eds.

Brody, T., Carr, L., Harnad, S. and Swan, A. (2007) Time to Convert to Metrics. Research Fortnight pp. 17-18.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. and Swan, A. (2007) Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3).

See also: Prior Open Access Archivangelism Postings on RAE and metrics

Stevan Harnad

American Scientist Open Access Forum

Tuesday, November 6. 2007

Should Institutional Repositories Allow Opt-Out From (1) Mandates? (2) Metrics?

This is a response to a query from a Southampton colleague who received an unsolicited invitation from an unknown individual to contribute a chapter to an "Open Access" book (author pays) on the basis of a paper he had deposited in the ECS Southampton Institutional Repository (IR) -- and possibly on the basis of its download statistics:

The colleague asked:

(1) Is the book chapter that [identity deleted] is soliciting an example of Open Access?

(2) Are download counts legitimate metrics for (2a) CVs, (2b) website statistics, (2c) departmental/institutional repository (IR) statistics?

(3) Can download statistics be abused?

(4) Should institutional authors be able to "opt out" of (4a) depositing their paper in their IR and/or (4b) having their download statistics displayed?

(1) Yes, Open Access (OA) books are instances of OA just as OA articles are. The big difference is that all peer-reviewed journal/conference articles, without exception, are written exclusively for research usage and impact, not for royalty income, whereas this is not true of all or even most books. Articles are all author give-aways, but most books are not. So articles are OA's primary target; books are optional and many will no doubt follow suit after systematic OA-provision for research articles has taken firm root globally. (Also important: article deposit in the IR can be mandated by researchers' employers and funders, as Southampton ECS and RCUK have done, but book deposit certainly cannot -- and should not -- be mandated.)

(2) Yes, download metrics, alongside citation metrics and other new metric performance indicators, can and should be listed in CVs, website stats and IR stats. In and of themselves they do not mean much, as absolute numbers, but in an increasingly OA world, where they can be ranked and compared in a global context, they are potentially useful aids to navigation, evaluation, prediction and other forms of assessment and analysis. (We have published a study showing a good-sized positive correlation between earlier download counts and later citation counts: Brody, T., Harnad, S. and Carr, L. (2006) Earlier Web Usage Statistics as Predictors of Later Citation Impact. Journal of the American Society for Information Science and Technology (JASIST) 57(8) pp. 1060-1072.)

(3) Yes, download statistics can be -- and will be -- abused, as many other online innovations (like email, discussion lists, blogs, search engines, etc.) can be abused by spammers and other motivated mischief-makers or self-promoters. But it is also true that those abuses can and will breed counter-abuse mechanisms. And in the case of academic download metrics inflation, there will be obvious, powerful ways to counteract and deter it if/when it begins to emerge: Anomalous download patterns (e.g., self-hits, co-author IP hits, robotic hits, lack of correlation with citations, etc.) can be detected, named and shamed. (It is easier for a commercial spammer to abuse metrics and get away with it than for an academic with a career that stands at risk once discovered!)
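The kind of screening mentioned above can be sketched as follows. This is only an illustration, assuming each download is logged with an IP address and a user-agent string, and that the IP addresses used by the author and co-authors are known; the `Hit` type, the marker strings and the function names are hypothetical, and real repositories rely on maintained robot lists and more sophisticated heuristics.

```python
from typing import Iterable, List, NamedTuple, Set


class Hit(NamedTuple):
    ip: str
    user_agent: str


# Hypothetical, deliberately crude markers; a real repository would use a maintained robot list.
ROBOT_MARKERS = ("bot", "crawler", "spider")


def is_robot(hit: Hit) -> bool:
    ua = hit.user_agent.lower()
    return any(marker in ua for marker in ROBOT_MARKERS)


def clean_downloads(hits: Iterable[Hit], author_ips: Set[str]) -> List[Hit]:
    """Drop self/co-author hits (matched by IP) and obvious robotic hits."""
    return [h for h in hits if h.ip not in author_ips and not is_robot(h)]


def suspicious_share(hits: List[Hit], author_ips: Set[str]) -> float:
    """Fraction of raw hits that were discarded by clean_downloads."""
    if not hits:
        return 0.0
    return 1 - len(clean_downloads(hits, author_ips)) / len(hits)
```

A high discarded share does not by itself prove anything; on this approach it simply flags a record for a closer human look, alongside other signals such as a lack of correlation with citations.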

(4) No, researchers should definitely not be able to "opt out" of a deposit mandate: That would go against both the letter and spirit of a growing worldwide movement among researchers, their institutions and their funders to mandate OA self-archiving for the sake of its substantial benefits to research usage and impact. There is always the option of depositing a paper as Closed Access rather than Open Access, but I think a researcher would be shooting himself in the foot if he chose to do that on account of worries about the abuse of download statistics: It would indeed reduce the download counts, usage and citations of that researcher's work, but it would not accomplish much else. (On the question of opting out of the display of download (and other) metrics, I have nothing objective to add: It is technically possible to opt out of displaying metrics, and if there is enough of a demand for it, it should be made a feature of IRs; but it seems to me that it will only bring disadvantages and handicaps to those who choose to opt out of displaying their metrics, not only depriving them of data to guide potential users and evaluators of their work, but giving the impression that they have something to hide.)

I would also add that the invitation to contribute a book chapter by [identity deleted] might possibly be a scam along the lines of the bogus conference scams we have heard much about. The public availability of metadata, papers and metrics will of course breed such "invitations" too, but one must use one's judgment about which of them are eminently worth ignoring.

Stevan Harnad

American Scientist Open Access Forum

Wednesday, October 31. 2007

RePEc, Peer Review, and Harvesting/Exporting from IRs to CRs

The new RePEc blog is a welcome addition to the blogosphere. The economics community is to be congratulated for its longstanding practice of self-archiving its pre-refereeing preprints and exporting them to RePEc.

Re: the current RePEc blog posting, "New Peer Review Systems":

Experiments on improving peer review are always welcome, but what the worldwide research community (in all disciplines, economics included) needs most urgently today is not peer review reform, but Open Access (OA) to its existing peer-reviewed journal literature. It's far easier to reform access than to reform the peer-review system. It is also already obvious exactly what needs to be done for OA, and how: mandate RePEc-style self-archiving, but for the refereed postprints, not just the unrefereed preprints. Peer-review reforms, by contrast, are still in the testing stage. It's not even clear whether, once most unrefereed preprints and all refereed postprints are OA, anyone will still feel any need for radical peer review reform at all; it may simply be a matter of more efficient online implementation.

So if I were part of the RePEc community, I would be trying to persuade economists (who, happily, already have the preprint self-archiving habit) to extend their practice to postprints -- and to persuade their institutions and funders to mandate postprint self-archiving in each author's own OAI-compliant Institutional Repository (IR). From there, if and when desired, the postprints' metadata can also be harvested by, or exported to, CRs (Central Repositories) like RePEc or PubMed Central. (One of the rationales for OAI interoperability is harvestability.)
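The IR-to-CR harvesting mentioned above runs over OAI-PMH. The following minimal Python sketch uses only the standard library. It shows how a central repository might page through an IR's `ListRecords` responses in Dublin Core (`oai_dc`) format, following `resumptionToken`s until the IR returns an empty token. The base URL, the injected `fetch` callable and the fields extracted are illustrative assumptions. A production harvester would also handle OAI-PMH error responses, deleted records and incremental (`from`/`until`) harvests.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# Namespaces defined by the OAI-PMH 2.0 and Dublin Core specifications.
NS = {
    "oai": "http://www.openarchives.org/OAI/2.0/",
    "oai_dc": "http://www.openarchives.org/OAI/2.0/oai_dc/",
    "dc": "http://purl.org/dc/elements/1.1/",
}

def list_records_url(base_url, resumption_token=None):
    """Build a ListRecords request; a resumptionToken replaces metadataPrefix."""
    if resumption_token:
        params = {"verb": "ListRecords", "resumptionToken": resumption_token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": "oai_dc"}
    return base_url + "?" + urlencode(params)

def parse_list_records(xml_text):
    """Return (records, resumption_token) from one ListRecords response page."""
    root = ET.fromstring(xml_text)
    records = []
    for rec in root.iterfind(".//oai:record", NS):
        identifier = rec.findtext("oai:header/oai:identifier", default="",
                                  namespaces=NS)
        dc = rec.find("oai:metadata/oai_dc:dc", NS)  # absent for deleted records
        titles = [t.text for t in dc.findall("dc:title", NS)] if dc is not None else []
        records.append({"identifier": identifier, "titles": titles})
    token_el = root.find(".//oai:resumptionToken", NS)
    # An empty resumptionToken element signals the last page.
    token = token_el.text if token_el is not None and token_el.text else None
    return records, token

def harvest(base_url, fetch):
    """Yield every record, following resumption tokens page by page.

    `fetch` is a hypothetical callable mapping a URL to response text,
    e.g. a thin wrapper around urllib.request.urlopen.
    """
    token = None
    while True:
        records, token = parse_list_records(fetch(list_records_url(base_url, token)))
        yield from records
        if not token:
            break
```

Injecting `fetch` keeps the protocol logic separate from network access. The same harvester can therefore be pointed at any OAI-compliant IR, or at an interim repository from which an institution later harvests its deposits back.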

But the primary place to deposit one's own preprints and postprints, in all disciplines, is "at home," i.e., in one's own institutional archive, for visibility, usage, impact, record-keeping, monitoring, metrics, and assessment -- and in order to ensure a scalable universal practice that systematically covers all research space, whether funded or unfunded, in all disciplines, single or multi, and at all institutions -- universities and research institutes. (For institutions that have not yet created an IR of their own -- even though the software is free and the installation is quick, easy, and cheap -- there are reliable interim CRs such as Depot to deposit in, and harvest back from, once your institution has its own IR.)

Stevan Harnad

American Scientist Open Access Forum

Saturday, October 20. 2007

Video to Promote Open Access Mandates and Metrics

I unfortunately could not attend the European University Rectors' Conference on Open Access, convened by Bernard Rentier, Rector of the University of Liège, on 18 October 2007. I did, however, send this 23-minute video on OA Mandates and Metrics, part of which, as I understand from Alma Swan, was shown at the Conference:

Please feel free to use the video to promote OA mandates and metrics at your institution: download video

Here are the video's three accompanying figures, as PPT or as PNG.

And here is an older, bigger PPT, with many more figures that you can use to explain and promote OA at your institution.




The American Scientist Open Access Forum has been chronicling and often directing the course of progress in providing Open Access to Universities' Peer-Reviewed Research Articles since its inception in the US in 1998 by the American Scientist, published by the Sigma Xi Society.

The Forum is largely for policy-makers at universities, research institutions and research funding agencies worldwide who are interested in institutional Open Access Provision policy. (It is not a general discussion group for serials, pricing or publishing issues: it is specifically focussed on institutional Open Access policy.)

You can sign on to the Forum here.
